Despite advances in tools and technologies, there are still few applications designed to assist people with visual, auditory, or speech impairments. With the rise of data-modelling techniques that can infuse “intelligence” even into ordinary computers, and with smartphones now widely accessible, this “intelligence” can be extended to our smartphones to help visually, auditory, or speech-impaired people cope with their surroundings and get assistance in their daily activities. Our application aims to bridge the gap between them and the physical world by leveraging Deep Learning, which can run even on low-end devices, behind a clear user interface that helps them better understand the world around them.

- Clone the repository:

  `git clone https://github.com/SilentCruzer/Lumos.git`

- Open your favourite code editor (VS Code, Android Studio, etc.).

- Click 'Open an Existing Project'.

- Browse to the directory where you cloned the repository and click OK.

- Let your code editor import the project.

- To install the dependencies, run `flutter pub get` in the terminal.

- Build and run the application on your device by clicking the 'Run' button, or use an emulator.


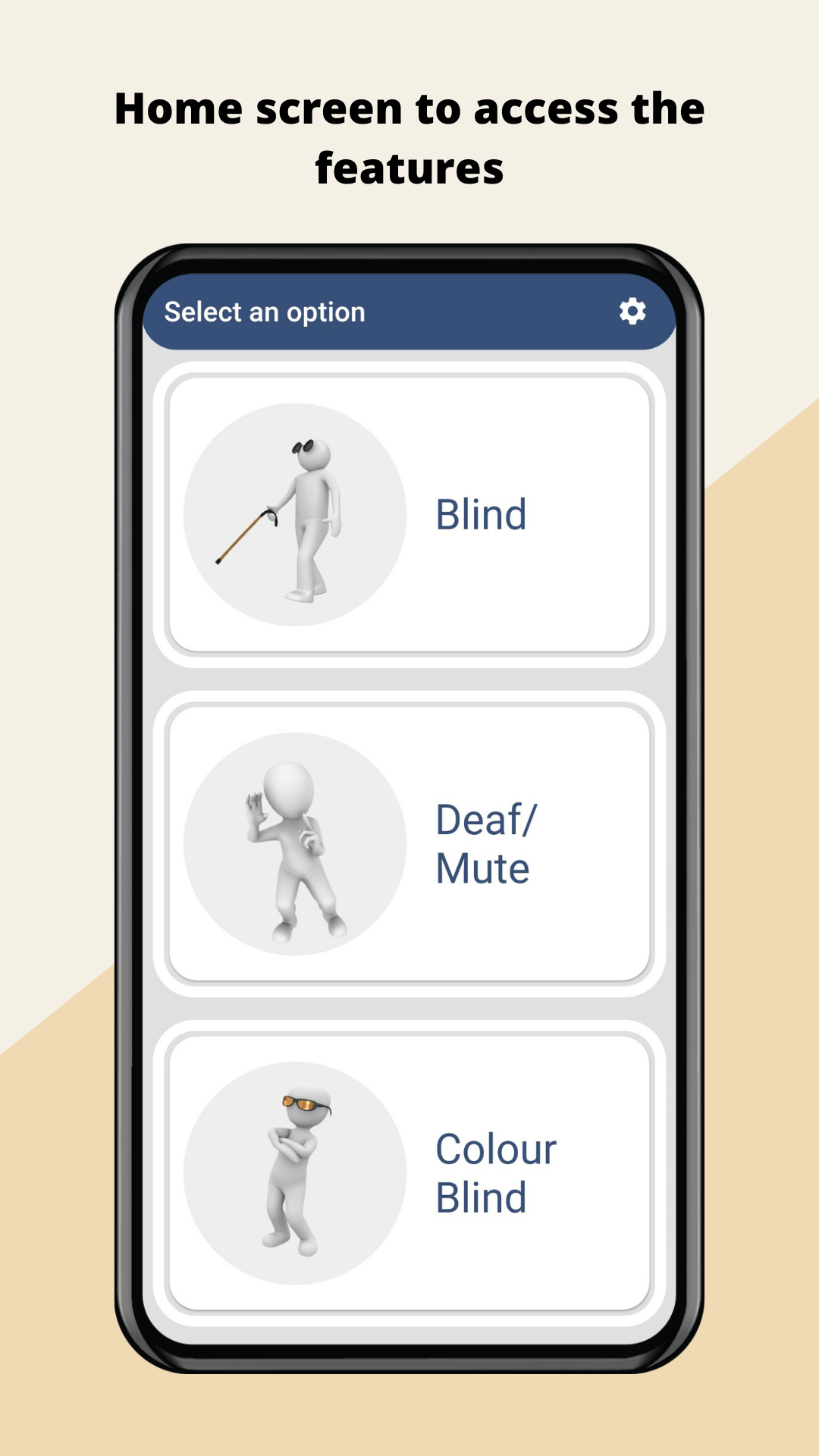

Our primary purpose behind this project is to study how Deep Learning architectures, combined with rapid prototyping tools, can help us build applications that run smoothly even on low-end devices. With this application, we aim to build a one-stop solution that helps blind and partially blind people better understand their surroundings and cope with the dynamic world around them, helps deaf and mute people communicate with others who do not know American Sign Language (ASL), and helps colour-blind people identify the exact colour of any object.

The Minimum Viable Product (MVP) would let users leverage an Image Captioning architecture to gain real-time insight into their surroundings, with Natural Language Processing used to describe those surroundings aloud clearly. The cornerstone of the application would be its user interface, designed to give the user a clear experience that is easy to handle and use.

For this project, we collaborated across several domains:

- Collecting data and training models with TensorFlow Lite (TFLite)

- Prototyping the mobile application with Flutter

- UI/UX design

This was an enriching experience for everyone on the team.

- Every feature, from image labelling to currency detection, uses text-to-speech to tell the user what has been detected 🗣️

- Each screen vibrates with a different intensity when it opens, helping the user navigate seamlessly. The buttons also have distinct vibrations for better accessibility 📳

- We have kept the number of buttons to a minimum, and every button is large. For instance, the top half of the screen may be one button and the bottom half another, so blind users do not need to tap a precise position to navigate the application 🔘

- All features work completely offline and do not require any internet connection 📶

- All features work in real time and need no preprocessing time before the models make predictions, so the user gets instant updates 🏎️

- The ASL feature uses a simple user interface that gives quick access to all its functions and enables convenient communication between a speech- or hearing-impaired person and someone who does not know ASL. Text-to-speech is also included so that the feature remains usable for people who have a hearing impairment in addition to a speech impairment 👂

- The Colour Blind feature follows the same simple, accessible UI, so users can easily find out the exact colour of an object they cannot distinguish by sight 👓
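This README does not describe how the Colour Blind feature decides which colour name to report. One common approach, sketched here in Python purely for illustration (the app itself is built with Flutter), is to average the pixels sampled around the centre of the camera frame and pick the closest entry in a table of named colours. The palette, the function names, and the use of plain RGB Euclidean distance are all assumptions, not the app's actual implementation.

```python
import math

# Hypothetical palette of named sRGB colours (assumption for illustration).
# A real app would likely use a much larger list, e.g. the CSS colour names.
PALETTE = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "red": (255, 0, 0),
    "green": (0, 128, 0),
    "blue": (0, 0, 255),
    "yellow": (255, 255, 0),
    "orange": (255, 165, 0),
    "purple": (128, 0, 128),
    "pink": (255, 192, 203),
    "brown": (165, 42, 42),
    "grey": (128, 128, 128),
}


def average_colour(pixels):
    """Average a list of (r, g, b) tuples, e.g. pixels sampled near the frame centre."""
    if not pixels:
        raise ValueError("need at least one pixel")
    n = len(pixels)
    # Average each channel separately and round back to an integer.
    return tuple(round(sum(p[i] for p in pixels) / n) for i in range(3))


def nearest_colour_name(rgb, palette=PALETTE):
    """Return the palette name whose RGB value is closest (Euclidean) to rgb."""
    return min(palette, key=lambda name: math.dist(rgb, palette[name]))


if __name__ == "__main__":
    sample = [(250, 10, 5), (240, 20, 15)]
    print(nearest_colour_name(average_colour(sample)))
```

Averaging a small patch rather than reading a single pixel makes the answer less sensitive to camera noise. Distance in plain RGB is not perceptually uniform, so a converted space such as CIELAB usually matches human judgement of "closest colour" better; RGB is used here only to keep the sketch short.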

You can test the application by installing it on your own device. The APK is available here. You can also try it out live on Appetize.io. We recommend using a real device, because the application relies heavily on the device camera.

A few resources to get you started if this is your first Flutter project:

For help getting started with Flutter, view the online documentation, which offers tutorials, samples, guidance on mobile development, and a full API reference.

Thanks go to these wonderful people (emoji key):

| Contributor | Contributions |
|---|---|
| Adithya Kumar | 💻 📖 🤔 |
| Yash Khare | 📖 🧑‍🏫 🤔 |
| Akshat Tripathi | 📖 💻 🤔 |
| Aman Kumar Singh | 💻 🤔 📖 |