Benchopt is a benchmarking suite for optimization algorithms, built for simplicity, transparency, and reproducibility.

Benchopt is implemented in Python and can run algorithms written in many programming languages. So far, it has been tested with Python, R, Julia and C/C++ (compiled binaries with a command line interface). Programs that can be installed via conda should also be compatible.

Benchopt is run through a command line interface, as described in the API Documentation. Replicating an optimization benchmark should be as simple as running:

pip install benchopt
git clone https://github.com/benchopt/benchmark_logreg_l2
benchopt install -e benchmark_logreg_l2
benchopt run --env ./benchmark_logreg_l2

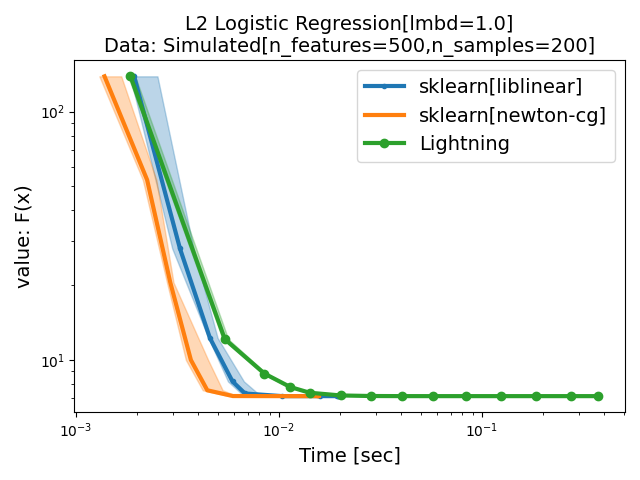

Running this command will give you a benchmark plot on l2-regularized logistic regression:
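A plot like this typically compares how quickly each solver decreases the objective. As an illustration only, here is a minimal pure-Python sketch of one such solver, assuming the standard formulation f(w) = Σᵢ log(1 + exp(−yᵢ⟨xᵢ, w⟩)) + (λ/2)‖w‖² with labels yᵢ ∈ {−1, +1}. The exact scaling used in `benchmark_logreg_l2` may differ, and plain gradient descent is a textbook choice, not necessarily one of the benchmark's solvers. The sketch records the objective after every iteration, which is the kind of convergence curve such a plot summarizes.

```python
import math
import random


def softplus(t):
    """Numerically stable log(1 + exp(t))."""
    if t > 0:
        return t + math.log1p(math.exp(-t))
    return math.log1p(math.exp(t))


def sigmoid(t):
    """Numerically stable 1 / (1 + exp(-t))."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def objective(w, X, y, lmbd):
    """Assumed objective: sum_i log(1 + exp(-y_i <x_i, w>)) + (lmbd / 2) * ||w||^2."""
    data_fit = sum(softplus(-yi * dot(xi, w)) for xi, yi in zip(X, y))
    return data_fit + 0.5 * lmbd * dot(w, w)


def gradient(w, X, y, lmbd):
    grad = [lmbd * wj for wj in w]
    for xi, yi in zip(X, y):
        # d/dw softplus(-y <x, w>) = -y * sigmoid(-y <x, w>) * x
        coef = -yi * sigmoid(-yi * dot(xi, w))
        for j, xij in enumerate(xi):
            grad[j] += coef * xij
    return grad


def gradient_descent(X, y, lmbd, n_iter):
    """Run n_iter steps of GD with step 1/L; return w and the objective after each step."""
    # Upper bound on the gradient's Lipschitz constant: 0.25 * ||X||_F^2 + lmbd
    lipschitz = 0.25 * sum(dot(xi, xi) for xi in X) + lmbd
    w = [0.0] * len(X[0])
    curve = [objective(w, X, y, lmbd)]
    for _ in range(n_iter):
        g = gradient(w, X, y, lmbd)
        w = [wj - gj / lipschitz for wj, gj in zip(w, g)]
        curve.append(objective(w, X, y, lmbd))
    return w, curve


if __name__ == "__main__":
    # Synthetic, hypothetical data: 50 samples, 3 features, noisy linear labels
    rng = random.Random(0)
    w_true = [1.0, -2.0, 0.5]
    X = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(50)]
    y = [1 if dot(xi, w_true) + rng.gauss(0, 0.5) > 0 else -1 for xi in X]
    w, curve = gradient_descent(X, y, lmbd=1.0, n_iter=100)
    print(f"objective: {curve[0]:.4f} -> {curve[-1]:.4f}")
```

With a step of 1/L, gradient descent is guaranteed to decrease the objective monotonically, so the recorded curve never goes up.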

See the list of available optimization problems below.

Learn how to create a new benchmark using the benchmark template.
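A benchmark is organized around an objective, a set of datasets and a set of solvers, and by default each solver is evaluated at increasing iteration budgets. The self-contained mock below only illustrates that idea. The classes `ToyDataset`, `ToyObjective` and `GDSolver` and the function `run_benchmark` are hypothetical names for this sketch; they do not reproduce the actual Benchopt API, for which the benchmark template is the reference.

```python
from dataclasses import dataclass


@dataclass
class ToyDataset:
    """Hypothetical dataset: provides the data the objective needs."""
    name: str
    X: list
    y: list

    def get_data(self):
        return {"X": self.X, "y": self.y}


class ToyObjective:
    """Hypothetical objective: 0.5 * ||X w - y||^2 (least squares)."""

    def set_data(self, X, y):
        self.X, self.y = X, y

    def compute(self, w):
        residuals = [sum(a * b for a, b in zip(xi, w)) - yi for xi, yi in zip(self.X, self.y)]
        return 0.5 * sum(r * r for r in residuals)


class GDSolver:
    """Hypothetical solver: n_iter steps of gradient descent starting from w = 0."""
    name = "GD"

    def set_objective(self, X, y):
        self.X, self.y = X, y

    def run(self, n_iter):
        n_features = len(self.X[0])
        # Step 1 / ||X||_F^2 is safe because ||X||_2^2 <= ||X||_F^2
        step = 1.0 / sum(v * v for xi in self.X for v in xi)
        w = [0.0] * n_features
        for _ in range(n_iter):
            residuals = [sum(a * b for a, b in zip(xi, w)) - yi for xi, yi in zip(self.X, self.y)]
            grad = [sum(r * xi[j] for r, xi in zip(residuals, self.X)) for j in range(n_features)]
            w = [wj - step * gj for wj, gj in zip(w, grad)]
        self.w = w

    def get_result(self):
        return self.w


def run_benchmark(objective, datasets, solvers, budgets):
    """For each (dataset, solver) pair, record the objective at each iteration budget."""
    results = {}
    for dataset in datasets:
        data = dataset.get_data()
        objective.set_data(**data)
        for solver in solvers:
            solver.set_objective(**data)
            curve = []
            for n_iter in budgets:
                solver.run(n_iter)  # rerun from scratch with a larger budget
                curve.append(objective.compute(solver.get_result()))
            results[(dataset.name, solver.name)] = curve
    return results


if __name__ == "__main__":
    toy = ToyDataset("toy", X=[[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]], y=[1.0, 2.0, 2.0])
    print(run_benchmark(ToyObjective(), [toy], [GDSolver()], budgets=[0, 1, 5, 20, 100]))
```

Keeping datasets, objective and solvers as separate pieces is what lets every solver be run on every dataset without changing either side.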

The command line tool to run the benchmarks can be installed through pip. To get the latest release, use:

pip install benchopt

To get the latest development version, use:

pip install -U -i https://test.pypi.org/simple/ benchopt

Then, existing benchmarks can be retrieved from git or created locally. For instance, the benchmark for Lasso can be retrieved with:

git clone https://github.com/benchopt/benchmark_lasso

To run the Lasso benchmark on all datasets and with all solvers, run:

benchopt run --env ./benchmark_lasso
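For intuition about what the Lasso solvers are compared on, here is a minimal sketch assuming the usual formulation 0.5‖y − Xw‖² + λ‖w‖₁ (the benchmark's exact scaling may differ). It implements ISTA (proximal gradient descent), shown as a textbook example rather than a description of any particular solver in the benchmark; the function names are hypothetical.

```python
def soft_threshold(v, tau):
    """Proximal operator of tau * |.|: shrink v toward zero by tau."""
    if v > tau:
        return v - tau
    if v < -tau:
        return v + tau
    return 0.0


def lasso_objective(w, X, y, lmbd):
    """Assumed objective: 0.5 * ||y - X w||^2 + lmbd * ||w||_1."""
    residuals = [yi - sum(a * b for a, b in zip(xi, w)) for xi, yi in zip(X, y)]
    return 0.5 * sum(r * r for r in residuals) + lmbd * sum(abs(wj) for wj in w)


def ista(X, y, lmbd, n_iter):
    """Proximal gradient descent with step 1/L, using L = ||X||_F^2 >= ||X||_2^2."""
    n_features = len(X[0])
    step = 1.0 / sum(v * v for xi in X for v in xi)
    w = [0.0] * n_features
    for _ in range(n_iter):
        # Gradient of the smooth part: X^T (X w - y)
        residuals = [sum(a * b for a, b in zip(xi, w)) - yi for xi, yi in zip(X, y)]
        grad = [sum(r * xi[j] for r, xi in zip(residuals, X)) for j in range(n_features)]
        # Gradient step on the smooth part, then prox of the l1 penalty
        w = [soft_threshold(wj - step * gj, step * lmbd) for wj, gj in zip(w, grad)]
    return w


if __name__ == "__main__":
    X = [[1.0, 0.0], [0.0, 1.0]]
    y = [3.0, 0.5]
    w = ista(X, y, lmbd=1.0, n_iter=60)
    print(w, lasso_objective(w, X, y, 1.0))  # w is close to [2.0, 0.0]
```

With X equal to the identity, the Lasso solution is simply soft-thresholding of y by λ, which makes this toy case an easy sanity check.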

To get more details about the different options, run:

benchopt run -h

or read the API Documentation.