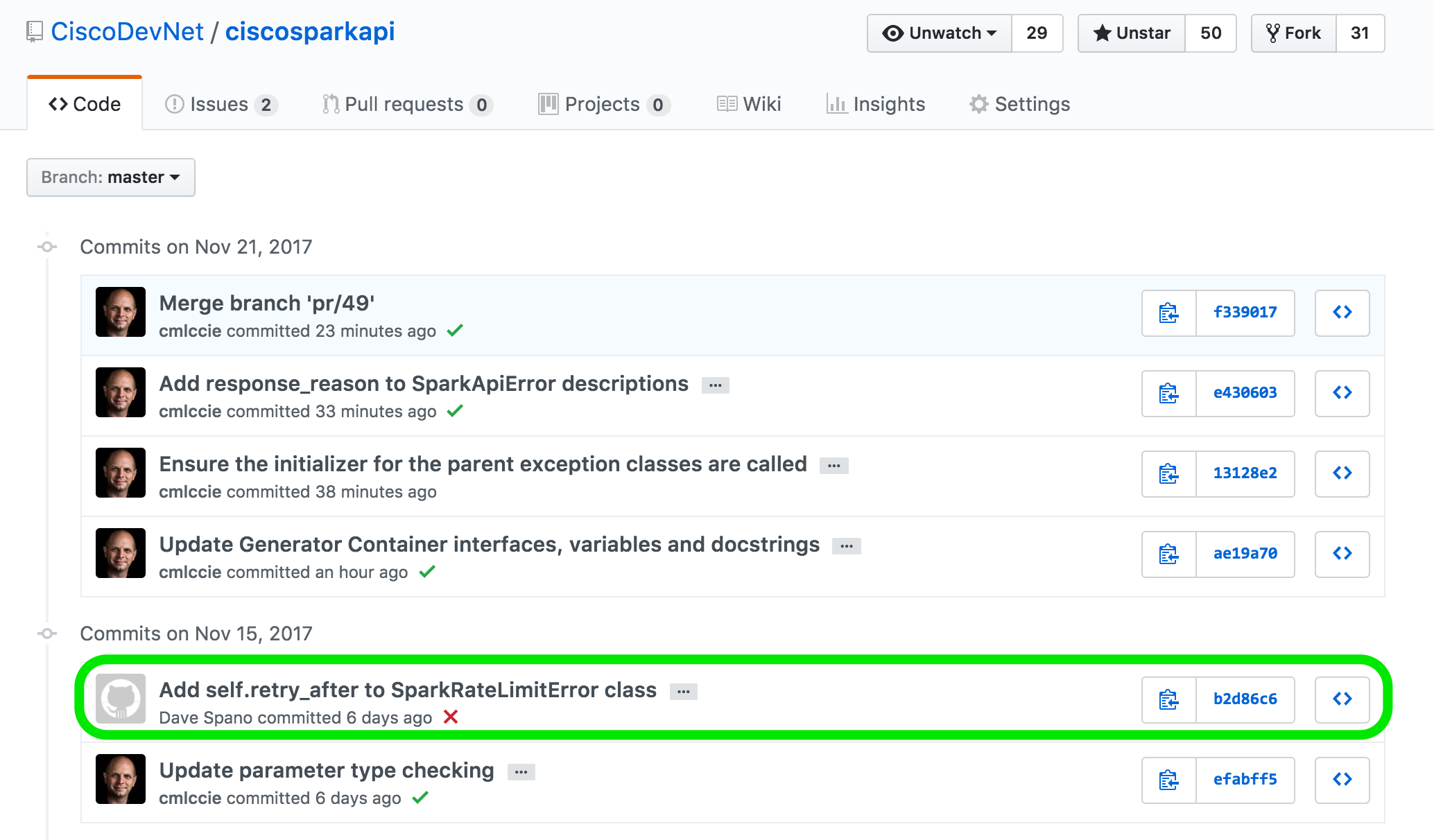

Add self.retry_after to SparkRateLimitError class #49

If you set wait_on_rate_limit to True when instantiating CiscoSparkAPI, you will hit an error telling you that retry_after is not an attribute of the SparkRateLimitError class. This is because the RestSession class checks for that attribute when it catches the error at line 282 in restsession.py.

Error:

      File "/home/daspano/../ciscosparkapi/restsession.py", line 282, in request
        if self.wait_on_rate_limit and e.retry_after:
    AttributeError: 'SparkRateLimitError' object has no attribute 'retry_after'
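The failure mode can be reproduced in isolation. The sketch below is not the library's code: `RateLimitErrorWithoutAttr` and `should_wait` are hypothetical stand-ins that only mirror the condition quoted in the traceback, assuming the exception object never had `retry_after` set.

```python
class RateLimitErrorWithoutAttr(Exception):
    """Hypothetical stand-in for an exception that never sets retry_after."""


def should_wait(wait_on_rate_limit, error):
    # Same shape as the check in the traceback: the attribute lookup only
    # happens when wait_on_rate_limit is truthy, because `and` short-circuits.
    return bool(wait_on_rate_limit and error.retry_after)


error = RateLimitErrorWithoutAttr("429 Too Many Requests")

# With waiting disabled, the missing attribute is never touched.
no_wait_result = should_wait(False, error)

# With waiting enabled, the lookup runs and raises AttributeError.
try:
    should_wait(True, error)
    message = None
except AttributeError as exc:
    message = str(exc)
```

This would explain why the problem only shows up once wait_on_rate_limit is set to True: with the default of not waiting, the `and` expression never evaluates `e.retry_after`.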

This commit adds the retry_after attribute as an int, since my testing shows the Retry-After value is always an integer number of seconds.

Ex:

    -----------------------------------Response------------------------------------
    429 Too Many Requests
    Date: Wed, 15 Nov 2017 21:47:00 GMT
    Content-Type: application/json
    Content-Length: 182
    Connection: keep-alive
    TrackingID: ROUTER_5A0CB5D2-B92F-01BB-7C3F-AC12D9227C3F
    Cache-Control: no-cache
    Retry-After: 194
    Server: Redacted
    l5d-success-class: 1.0
    Via: 1.1 linkerd
    content-encoding: gzip
    Strict-Transport-Security: max-age=63072000; includeSubDomains; preload
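
For illustration, here is a minimal sketch of how an exception could carry the Retry-After value as an int. It is not the actual diff in this PR: `RateLimitError` is a hypothetical stand-in for SparkRateLimitError, it takes a plain headers mapping rather than a response object, and `DEFAULT_RETRY_AFTER` is an assumed fallback for a missing or non-integer header (HTTP also allows Retry-After to be a date).

```python
# Assumed fallback in seconds; not a value taken from the library.
DEFAULT_RETRY_AFTER = 15


class RateLimitError(Exception):
    """Hypothetical stand-in for SparkRateLimitError with retry_after set."""

    def __init__(self, status_code, headers):
        super().__init__("Rate limited: HTTP {}".format(status_code))
        self.status_code = status_code
        # The responses observed above carry integer seconds, e.g. "194".
        # Fall back to the assumed default if the header is absent or is
        # not an integer (for example, an HTTP-date).
        try:
            self.retry_after = int(headers.get("Retry-After", DEFAULT_RETRY_AFTER))
        except (TypeError, ValueError):
            self.retry_after = DEFAULT_RETRY_AFTER


# Headers taken from the example response above.
observed = RateLimitError(429, {"Retry-After": "194"})
missing = RateLimitError(429, {})
as_date = RateLimitError(429, {"Retry-After": "Wed, 15 Nov 2017 21:50:14 GMT"})
```

With the attribute always present as an int, a check like `self.wait_on_rate_limit and e.retry_after` no longer raises, and the value can be passed straight to a sleep call.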