
An Overview of the Razor Microkernel

The Razor Microkernel itself is a small, in-memory Linux environment that is used to boot 'nodes' (in this case, servers) that are discovered by the Razor Server. It gives the Razor Server the essential point of control it needs in order to discover and manage these nodes in the network. The Razor Microkernel also performs an essential task for the Razor Server: discovering the capabilities of these nodes and reporting those capabilities back to the Razor Server (so that the Razor Server can determine what it should do with them). Without the Razor Microkernel (or something similar to it), the Razor Server cannot do its job (classifying nodes and, based on their capabilities, applying models to them).

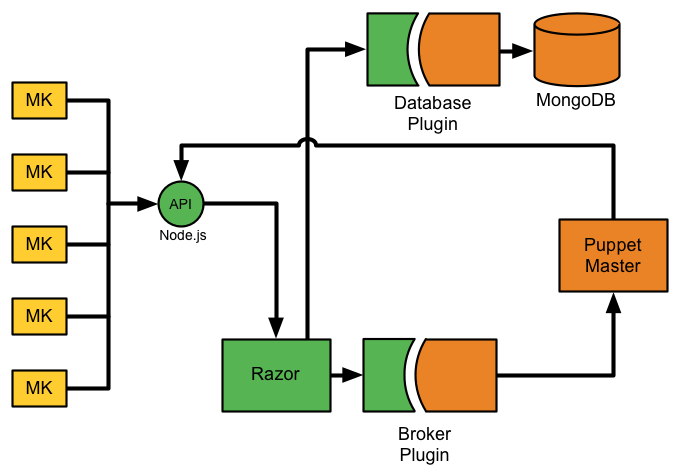

So how exactly does the Razor Microkernel work? What does it do and how does it interact with the Razor Server in order to accomplish those tasks? The following diagram shows how the Razor Microkernel and Razor Server relate to each other (and the interactions between them):

As you can see in this diagram, the Razor Server (represented by the green components in the center of the diagram) interacts with the Razor Microkernel instances through a request-based channel. Those interactions are driven by the HTTP 'checkin' and 'register' requests that the individual Razor Microkernel Controller instances send to the Razor Server (using an API defined by the Razor Server's Node.js API instance). The "checkin response" sent back to these Microkernel instances can include meta-data and commands that the Razor Server would like to pass back to them (more on this below), giving the Microkernel instances and the Razor Server that manages them an event-driven, two-way communications channel.
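To make the request/response exchange concrete, here is a minimal Python sketch of how a Microkernel-style client might build a checkin request and split the server's answer into its command and configuration parts. The endpoint path, the HTTP method, the query parameter names other than `first_checkin`, and the response field names (`command`, `config`) are all hypothetical placeholders, not the Razor Server's documented API.

```python
from __future__ import annotations

import json
import urllib.parse
import urllib.request

# Hypothetical route; the real Razor Server API path may differ.
CHECKIN_PATH = "/razor/api/node/checkin"


def build_checkin_request(server_url: str, node_uuid: str, last_state: str,
                          first_checkin: bool) -> urllib.request.Request:
    """Build (but do not send) an HTTP checkin request for one node."""
    params = {"uuid": node_uuid, "last_state": last_state}
    if first_checkin:
        # Only the first checkin after the Microkernel boots carries this flag.
        params["first_checkin"] = "true"
    url = f"{server_url}{CHECKIN_PATH}?{urllib.parse.urlencode(params)}"
    return urllib.request.Request(url, method="GET")


def parse_checkin_response(body: str) -> tuple:
    """Split a JSON checkin response into its command and configuration parts."""
    payload = json.loads(body)
    # Hypothetical field names for the command and the Microkernel configuration.
    return payload["command"], payload.get("config", {})
```

A client would send the request with `urllib.request.urlopen(req)` and pass the decoded body to `parse_checkin_response`; keeping construction and parsing separate makes both easy to test without a live server.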

The other two components shown in this diagram (the MongoDB instance and the Puppet Master) are key components that the Razor Server interacts with over time. We won’t go into the specifics of those interactions here, other than to point out that the Razor Server uses a plugin model for both of them (allowing either component to be replaced wholesale by any component that implements the other side of the plugin interface). For example, the current Razor implementation supports both Puppet and Chef as DevOps systems (via separate broker plugins), and database plugin implementations for both an in-memory datastore (for use with unit tests and continuous integration systems) and a PostgreSQL datastore have already been added to Razor. We have no doubt that more plugins for both of these components of the Razor ecosystem will be developed over time.
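Razor's actual plugins are Ruby classes, and their names and methods aren't described here. The following Python sketch therefore only illustrates the general shape of the plugin pattern. Server code depends on an abstract interface, and an in-memory backend (the kind used for unit tests and CI) is one interchangeable implementation. All class and method names are hypothetical.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from typing import Optional


class PersistencePlugin(ABC):
    """Hypothetical contract that every datastore plugin implements."""

    @abstractmethod
    def save(self, collection: str, key: str, document: dict) -> None:
        """Store a document under (collection, key), replacing any old copy."""

    @abstractmethod
    def load(self, collection: str, key: str) -> Optional[dict]:
        """Return the stored document, or None if it does not exist."""


class InMemoryPlugin(PersistencePlugin):
    """Dictionary-backed datastore, the kind of backend used for unit tests and CI."""

    def __init__(self) -> None:
        self._collections: dict[str, dict[str, dict]] = {}

    def save(self, collection: str, key: str, document: dict) -> None:
        self._collections.setdefault(collection, {})[key] = dict(document)

    def load(self, collection: str, key: str) -> Optional[dict]:
        document = self._collections.get(collection, {}).get(key)
        return None if document is None else dict(document)


class NodeStore:
    """Server-side code that sees only the plugin interface, so backends can be swapped."""

    def __init__(self, plugin: PersistencePlugin) -> None:
        self.plugin = plugin

    def record_facts(self, node_uuid: str, facts: dict) -> None:
        self.plugin.save("nodes", node_uuid, {"uuid": node_uuid, "facts": facts})
```

Swapping in a PostgreSQL-backed class that implements the same two methods would require no change to `NodeStore`, which is the point of the plugin model.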

So, now that we’ve shown how the Razor Microkernel and Razor Server relate to each other, what exactly is the role that the Razor Microkernel plays in this process? As some of you may already know, the primary responsibility of the Razor Server is to use the properties (or “facts”) of the hardware (or nodes) that it “discovers” to determine what should be done with those nodes. The properties reported to Razor during the node registration process (more on this below) are used to “tag” the nodes being managed by Razor, and those tags can then be used to map a policy to each node (which can, for example, trigger the provisioning of an OS onto one or more nodes). In this picture, the primary responsibility of the Razor Microkernel is to provide the Razor Server with the facts for the nodes onto which the Microkernel is “deployed”. The Razor Microkernel gathers these facts using a combination of tools (primarily the Facter tool from Puppet Labs, along with the lshw, dmidecode, and lscpu commands) and reports them back to the Razor Server as part of the node registration process. Without the Microkernel (or something like it) running on these nodes, the Razor Server has no way to determine the capabilities of the nodes and, from those capabilities, what sort of policy it should apply to any given node.
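The tagging step can be sketched as a set of rules, each pairing a tag name with a predicate over the reported facts. This is a minimal Python illustration, not Razor's tag-matcher syntax. The rules, thresholds, and tag names are hypothetical. The fact names follow Facter 1.x conventions (`is_virtual`, `memorysize_mb`, `processorcount`), and their values are assumed to arrive as strings.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical tag rules: each tag name maps to a predicate over the reported facts.
TAG_RULES: dict[str, Callable[[dict], bool]] = {
    "virtual": lambda facts: facts.get("is_virtual") == "true",
    "big_memory": lambda facts: float(facts.get("memorysize_mb", 0)) >= 65536,
    "many_cpus": lambda facts: int(facts.get("processorcount", 0)) >= 16,
}


def tag_node(facts: dict, rules: dict[str, Callable[[dict], bool]] = TAG_RULES) -> set[str]:
    """Return the set of tags whose rules match this node's facts."""
    return {tag for tag, rule in rules.items() if rule(facts)}
```

For example, `tag_node({"is_virtual": "true", "memorysize_mb": "131072.0", "processorcount": "8"})` yields the tags `virtual` and `big_memory`. Those tags, rather than the raw facts, are then what a policy is matched against.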

The Razor Microkernel also has a secondary responsibility in this picture: providing a default boot state for any node that the Razor Server discovers. When a new node is discovered (any node for which the Razor Server cannot find an applicable policy), the Razor Server applies a default policy to that node, which results in the newly discovered node being booted using the Razor Microkernel. This will typically trigger the node checkin and registration process, but in the future we might use the same pattern to trigger additional actions using customized Microkernel instances (a Microkernel that performs a system audit or a “boot-nuke”, for example). Because the Microkernel instance exists (and because the Razor Server selects it based on policies defined within the Razor Server), the possibilities here are almost endless.
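The "default boot state" behaves like a fallback in policy selection. The Python sketch below assumes that a policy applies when a node carries all of the policy's required tags, and that the first applicable policy wins. Both rules are simplifications chosen for illustration. The policy names, including `boot_microkernel`, are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical name for the default policy that boots the Microkernel.
MICROKERNEL_POLICY = "boot_microkernel"


@dataclass(frozen=True)
class Policy:
    name: str
    required_tags: frozenset  # every tag here must be present on the node


def select_policy(node_tags: set[str], policies: list[Policy]) -> str:
    """Return the first policy whose required tags the node carries, else the default."""
    for policy in policies:
        if policy.required_tags <= node_tags:
            return policy.name
    # No applicable policy was found, so the node is treated as newly discovered
    # and booted into the Microkernel for checkin and registration.
    return MICROKERNEL_POLICY
```

Note that, under this subset rule, a policy with an empty tag set would match every node. A real policy engine would need to guard against that, or order such catch-all policies last.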

Given that the Razor Microkernel is the default boot state for any new node encountered by the Razor Server, it is worth describing the Microkernel boot process itself. This process begins with the delivery of the Razor Microkernel (as a compressed kernel image and a ram-disk image) by the Razor Server’s “Image Service”. As part of the Microkernel boot process, a number of “built-in” extensions to our baseline Tiny Core Linux OS are installed. In the current implementation, the built-in extensions (and their dependencies) are the extensions for Ruby (v1.8.7), bash, lshw, and dmidecode, along with the drivers and/or firmware needed to access SCSI disks and to support the Broadcom NetXtreme II network card. In addition to the extensions installed at boot, some extensions are installed during the post-boot process. Currently the only extension installed during the post-boot phase is an Open VM Tools extension that we have built, and that extension (and its dependencies) is only installed when our Microkernel is booting a VM in a VMware environment. In that case, not only is the extension installed, but the kernel modules it provides are also loaded dynamically, giving us complete access to information about the underlying VM (information we would not be able to see without these kernel modules).
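The post-boot decision can be sketched as a small function. The text above does not say how the Microkernel detects VMware, so this Python sketch assumes the check is keyed off Facter's `virtual` fact, which reports `vmware` inside a VMware guest. The extension file name is a hypothetical placeholder.

```python
# Hypothetical extension file name; the package actually built for the Microkernel may differ.
OPEN_VM_TOOLS_EXTENSION = "open-vm-tools.tcz"


def post_boot_extensions(facts: dict) -> list:
    """Return the extensions to fetch from the TCE mirror after boot.

    Assumes Facter's 'virtual' fact is 'vmware' inside a VMware VM, so Open VM
    Tools is only requested in that case; bare-metal nodes get nothing extra.
    """
    if facts.get("virtual") == "vmware":
        return [OPEN_VM_TOOLS_EXTENSION]
    return []
```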

Once the Microkernel has been booted and these extensions have been installed, the next step in the boot process is to finish initializing the OS. This includes finalizing the initial configuration that will be used by our Microkernel Controller (for example, using information gathered from the DHCP server to point that configuration at the correct Razor Server instance for its initial checkin) and setting the hostname for the Microkernel instance (so that hostnames are unique, based on the underlying hardware). Once the configuration is finalized, a few key services are started, along with the Microkernel Controller itself. When this process is complete, the following processes will be running in our Microkernel instance:

- The Microkernel Controller – a Ruby-based daemon process that interacts with the Razor Server via HTTP

- The Microkernel TCE Mirror – a WEBrick instance that acts as a completely internal web server, from which the Microkernel can obtain the Tiny Core extensions (TCEs) that should be installed once the boot process has completed. As was mentioned previously, the only extension currently provided by this mirror is the Open VM Tools extension (and its dependencies).

- The Microkernel Web Server – a WEBrick instance that can be used to interact with the Microkernel Controller via HTTP. Currently, this server is only used by the Microkernel Controller itself to save any configuration changes it receives from the Razor Server (saving a new configuration actually causes this web server instance to restart the Microkernel Controller), but it is the most likely future point of interaction between MCollective and the Microkernel Controller.

- The Local Gem Mirror – this gem mirror is used during the Microkernel initialization process to provide access to the gems that are needed by the Microkernel Controller and the services that it depends on; since these gems must be installed before the network is available, a local gem mirror (or something like it) is needed.

- The OpenSSH server daemon – only installed and running if we are in a “development” Microkernel; in a “production” Microkernel this daemon process is not started (in fact, the package containing this daemon process isn’t even installed).
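The hostname step mentioned earlier only states that names are unique and based on the underlying hardware. One simple way to get that property is to derive the name from a NIC's MAC address. The following Python sketch assumes exactly that. Both the choice of the MAC address and the `mk` prefix are hypothetical.

```python
import re


def microkernel_hostname(mac_address: str, prefix: str = "mk") -> str:
    """Derive a unique, DNS-safe hostname from a network interface's MAC address."""
    # Keep only the hex digits, so both "aa:bb:..." and "aa-bb-..." forms work.
    hex_digits = re.sub(r"[^0-9a-fA-F]", "", mac_address).lower()
    if len(hex_digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac_address!r}")
    return prefix + hex_digits
```

For example, `microkernel_hostname("00:0C:29:3E:5B:7A")` returns `mk000c293e5b7a`. Because MAC addresses are (in practice) unique per NIC, two Microkernel instances on different hardware end up with different hostnames.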

When the system is fully initialized, the components that are running (and the connections between them) look something like this:

The Razor Microkernel itself includes the four components on the left-hand side of this diagram (as was mentioned previously, the OpenSSH server daemon may also be running but, since it is optional, it is not shown here).

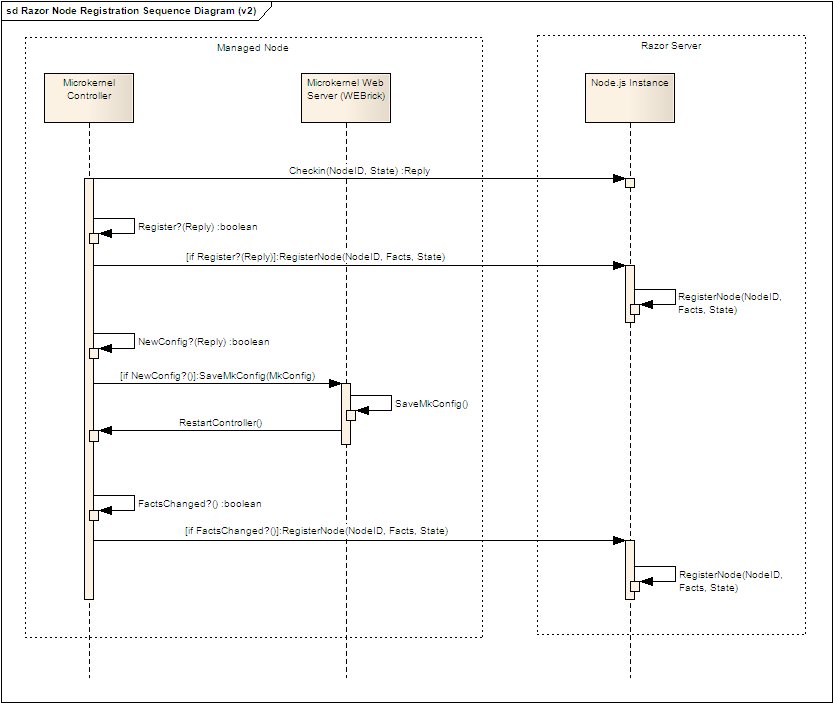

Once the node has been successfully booted using the Microkernel, the Microkernel Controller’s first action is to check in with the Razor Server. This “checkin action” is repeated periodically, and the timing of these checkins is set in the configuration that the Razor Server passes back to the Microkernel in the checkin response (more on this below). The Razor Server’s response to each checkin request includes two components. The first is a command that tells the Microkernel what it should do next. Currently, this command is limited to one of the following:

- acknowledge – A command from the Razor Server indicating that the checkin request has been received and that there is no action necessary on the part of the Microkernel at this time

- register – A command from the Razor server asking the Microkernel to report back the “facts” that it can discover about the underlying hardware that it has been deployed onto

- reboot – A command from the Razor Server asking the Microkernel instance to reboot itself. This is typically the result of the Razor Server finding an applicable policy for that node after the Microkernel has registered the node with the Razor Server (or after a new policy has been defined), but this command might be sent back under other circumstances in the future.
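Handling these three commands amounts to a small dispatch table. In the following Python sketch, the controller class is hypothetical and the register/reboot actions are stubs that only record what they would do. The text above doesn't say how an unrecognized command is handled, so this sketch simply rejects it.

```python
from __future__ import annotations

from typing import Callable


class MicrokernelController:
    """Hypothetical controller that reacts to the command in a checkin response."""

    def __init__(self) -> None:
        self.actions_taken: list[str] = []

    def register(self) -> None:
        # A real controller would gather facts and send a registration request here.
        self.actions_taken.append("register")

    def reboot(self) -> None:
        # A real controller would invoke the system's reboot command here.
        self.actions_taken.append("reboot")

    def handle_command(self, command: str) -> None:
        handlers: dict[str, Callable[[], None]] = {
            "acknowledge": lambda: None,  # nothing to do until the next checkin
            "register": self.register,
            "reboot": self.reboot,
        }
        handler = handlers.get(command)
        if handler is None:
            raise ValueError(f"unknown checkin command: {command!r}")
        handler()
```

A table like this keeps adding a future command (an audit, say) to a one-line change.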

The second component of the server's checkin response is the configuration that the Razor Server would like that Microkernel instance to use. This includes parameters like the interval at which the Microkernel should send its checkin requests, a pattern indicating which “facts” should NOT be reported, the TCE mirror location that the Microkernel should use to download any extensions, and even the URL of the Razor Server itself. If this configuration has changed in any way since the Microkernel's last checkin, the Microkernel Controller saves the new configuration and is then restarted (forcing it to pick up the new configuration). This ability to set the Microkernel Controller’s configuration through the checkin response gives the Razor Server complete control over the behavior of the Microkernel instances it interacts with.
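The "save and restart only if changed" rule can be sketched in a few lines of Python. In the Microkernel described here, the save goes through the Microkernel Web Server, which then restarts the Controller. In this sketch, a `restart` callback stands in for that step, and the JSON file format and path are hypothetical.

```python
import json
from pathlib import Path
from typing import Callable


def apply_checkin_config(new_config: dict, config_path: Path,
                         restart: Callable[[], None]) -> bool:
    """Save the server-supplied configuration and restart if it changed.

    Returns True when a restart was triggered, False when the config was unchanged.
    """
    current = json.loads(config_path.read_text()) if config_path.exists() else None
    if current == new_config:
        return False  # unchanged: keep running with the current configuration
    config_path.write_text(json.dumps(new_config, sort_keys=True))
    restart()  # stands in for the web server restarting the controller
    return True
```

Comparing against the saved copy, rather than an in-memory one, means a restarted controller still recognizes an unchanged configuration and avoids a restart loop.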

As was mentioned previously, once the node has checked in, the Razor Server may send back a command triggering the Node Registration process. Registration can be triggered in any of the following situations:

- If the Razor Server has never seen that node before, or has not seen it in a while (this timing is configurable), it will send back a register command in its checkin response.

- When the Microkernel Controller first starts after the Microkernel finishes booting, it includes a special first_checkin flag in its checkin request. If the Razor Server receives a checkin request with that flag set, it sends back a “register” command in the checkin response (forcing the Microkernel Controller to register with the Razor Server, as described above). This is an important variant of the first case, so it is listed separately here.

- Whenever the Microkernel Controller detects that the facts that it gathers about the underlying node that it has been deployed to are different than they were during its last successful checkin with the Razor server, the Microkernel Controller will register with the Razor Server (without being prompted to do so).
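The three triggers split between the two sides. The server decides whether to answer with "register" (unknown node, stale node, or first checkin). The Microkernel decides on its own to register when its facts have changed. The following Python sketch expresses both checks. Timestamps are assumed to be in seconds, and the parameter names (including `stale_after`, standing in for the configurable timing) are hypothetical.

```python
from __future__ import annotations

from typing import Optional


def server_wants_registration(last_seen: Optional[float], now: float,
                              first_checkin: bool, stale_after: float) -> bool:
    """Server side: answer 'register' for fresh boots, unknown nodes, or stale nodes."""
    if first_checkin:
        return True
    if last_seen is None:  # the server has never seen this node
        return True
    return now - last_seen > stale_after


def client_should_register(current_facts: dict,
                           facts_at_last_checkin: Optional[dict]) -> bool:
    """Microkernel side: register unprompted when the gathered facts have changed."""
    return current_facts != facts_at_last_checkin
```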

The following sequence diagram can be used to visualize the sequence of actions outlined above (showing both the node checkin and node registration events):

So, at a high level, we have described how the Razor Microkernel interacts with the Razor Server to check in and register nodes with the server. How exactly is the data reported in the registration process gathered (and how is that data reported)?

The meta-data that is reported by the Razor Microkernel to the Razor Server during the node registration process is gathered using several tools that are built into the Razor Microkernel:

- Facter (a cross-platform library available from Puppet Labs that they designed to gather information about nodes being managed by their DevOps framework, Puppet) is used to gather most of the facts that are included in the node registration request. This has the advantage of gathering a lot of data about the nodes without much effort on our part.

- The lshw command is used to gather additional information about the underlying hardware (processor, bus, firmware, memory, disks, and network). This additional information is used to supplement the information that we can gather using Facter, and provides us with a lot of detail that is not available in the Facter view of the nodes.

- The lscpu command is used to gather additional information about the CPU itself that is not available from either Facter or the lshw command (virtualization support, cache sizes, byte order, etc.). As with the lshw output, this additional information is used to supplement the facts gathered from other sources.
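lscpu prints one `Key: value` pair per line (for example `Byte Order:            Little Endian`). This makes its output easy to turn into facts. The following Python sketch is a parser for that format. It keeps lscpu's own key names, since the fact names the Microkernel actually reports aren't given here. Running it for real requires a Linux host with `lscpu` installed.

```python
import subprocess


def parse_lscpu(output: str) -> dict:
    """Turn lscpu's 'Key: value' lines into a dictionary of CPU facts."""
    facts = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")  # split on the first colon only
        if not sep or not value.strip():
            continue  # skip lines that aren't 'Key: value' pairs, and section headers
        facts[key.strip()] = value.strip()
    return facts


def gather_lscpu_facts() -> dict:
    """Run lscpu (Linux only) and parse its output."""
    result = subprocess.run(["lscpu"], capture_output=True, text=True, check=True)
    return parse_lscpu(result.stdout)
```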

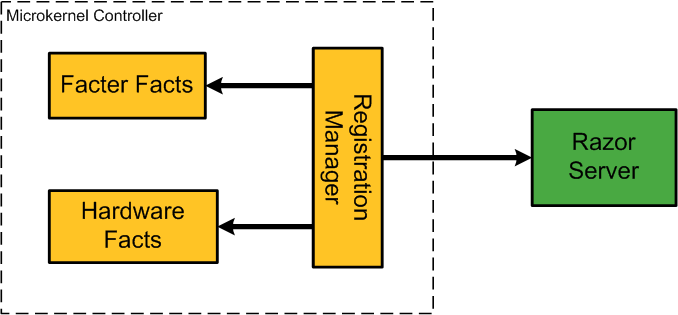

The following diagram shows the Razor Microkernel components that are involved in gathering these facts and reporting them to the Razor Server:

As this diagram shows, the Microkernel's Registration Manager first uses a 'Fact Manager' class to gather Facter facts. Those Facter facts are then supplemented with a set of Hardware Facts, gathered by a 'Hardware Facter' class that manages the process of collecting detailed, 'hardware-specific' facts about the underlying platform using the lshw and lscpu commands (as outlined above).

The combined set of meta-data gathered from these sources is then passed through a filter, which uses the 'mk_fact_excl_pattern' property from the Microkernel configuration to remove any facts whose names match the regular expression contained in that property. This lets us restrict the facts that the Microkernel reports to the Razor Server to only those the server is interested in (in the current default configuration provided by the Razor Server, for example, all of the 'array-style' facts are suppressed). It also lets us minimize the number of registration requests the Razor Server receives by eliminating fields that we aren't interested in but that change constantly in the Microkernel instances (the free memory in the Microkernel, for example). Since the Microkernel registers whenever its facts change, such a field would otherwise trigger a new registration at nearly every checkin. Once the 'facts map' has been winnowed down to just the facts that are 'of interest', it is converted to a JSON string, and that string is sent to the Razor Server as part of the Node Registration request.
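The merge, filter, and serialize pipeline just described fits in a single function. In this Python sketch, `mk_fact_excl_pattern` is the configuration property named above. The sketch assumes the pattern is applied as a regex search anywhere in the fact name, and that hardware facts win if a name collides with a Facter fact. Both are assumptions, as are the example fact names in the usage note.

```python
import json
import re


def build_registration_payload(facter_facts: dict, hardware_facts: dict,
                               mk_fact_excl_pattern: str) -> str:
    """Merge fact sources, drop excluded fact names, and serialize to JSON."""
    # Hardware facts supplement the Facter facts (and, in this sketch, win on collisions).
    merged = {**facter_facts, **hardware_facts}
    exclude = re.compile(mk_fact_excl_pattern)
    kept = {name: value for name, value in merged.items() if not exclude.search(name)}
    # sort_keys makes the payload deterministic, which simplifies change detection.
    return json.dumps(kept, sort_keys=True)
```

For example, with the pattern `^(memoryfree|uptime)`, the constantly changing `memoryfree` and `uptime` facts are dropped before the payload is built, so they can never cause a spurious re-registration.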