A Python package focussing on causal inference in quasi-experimental settings. The package allows for sophisticated Bayesian model fitting methods to be used in addition to traditional OLS.

To get the latest release:

```bash
pip install CausalPy
```

Alternatively, if you want the very latest version of the package you can install from GitHub:

```bash
pip install git+https://github.com/pymc-labs/CausalPy.git
```

```python
import causalpy as cp

# Import and process data
df = (
    cp.load_data("drinking")
    .rename(columns={"agecell": "age"})
    .assign(treated=lambda df_: df_.age > 21)
)

# Run the analysis
result = cp.pymc_experiments.RegressionDiscontinuity(
    df,
    formula="all ~ 1 + age + treated",
    running_variable_name="age",
    model=cp.pymc_models.LinearRegression(),
    treatment_threshold=21,
)

# Visualize outputs
fig, ax = result.plot();

# Get a results summary
result.summary()
```

Plans for the repository can be seen in the Issues.

A video about CausalPy is available on YouTube.

Synthetic control is appropriate when you have multiple units, one of which is treated. You build a synthetic control as a weighted combination of the untreated units.

| Time | Outcome | Control 1 | Control 2 | Control 3 |
|---|---|---|---|---|
| 0 | | | | |
| 1 | | | | |
| T | | | | |

| Frequentist | Bayesian |
|---|---|
| [figure] | [figure] |

The data (treated and untreated units), pre-treatment model fit, and counterfactual (i.e. the synthetic control) are plotted (top). The causal impact is shown as a blue shaded region. The Bayesian analysis shows shaded Bayesian credible regions of the model fit and counterfactual. Also shown are the causal impact (middle) and cumulative causal impact (bottom).
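To make the idea of a weighted combination concrete, here is a minimal standard-library sketch. It is not CausalPy's API or its fitting method: it assumes the weights are non-negative and sum to 1, finds them by brute-force grid search on the pre-treatment period, and uses made-up data. The function name `fit_simplex_weights` and the `resolution` parameter are hypothetical.

```python
from itertools import accumulate, product


def fit_simplex_weights(treated_pre, controls_pre, resolution=20):
    """Grid-search weights (non-negative, summing to 1) that minimise the
    pre-treatment squared error between the treated unit and the weighted
    combination of control units."""
    best_weights, best_error = None, float("inf")
    n_controls = len(controls_pre)
    for combo in product(range(resolution + 1), repeat=n_controls - 1):
        if sum(combo) > resolution:
            continue  # these weights would sum to more than 1
        counts = [*combo, resolution - sum(combo)]
        weights = [c / resolution for c in counts]
        error = sum(
            (y - sum(w * series[t] for w, series in zip(weights, controls_pre))) ** 2
            for t, y in enumerate(treated_pre)
        )
        if error < best_error:
            best_weights, best_error = weights, error
    return best_weights


# Hypothetical data: 4 pre-treatment and 2 post-treatment time points.
controls = [
    [1.0, 2.0, 3.0, 5.0, 6.0, 7.0],
    [2.0, 1.0, 4.0, 3.0, 5.0, 4.0],
    [4.0, 4.0, 2.0, 2.0, 1.0, 3.0],
]
treated = [2.0, 2.25, 3.0, 3.75, 6.5, 7.25]
n_pre = 4

# Fit on the pre-treatment period only.
weights = fit_simplex_weights(treated[:n_pre], [c[:n_pre] for c in controls])

# The synthetic control is the counterfactual for the post-treatment period.
counterfactual = [
    sum(w * series[t] for w, series in zip(weights, controls))
    for t in range(n_pre, len(treated))
]
impact = [y - cf for y, cf in zip(treated[n_pre:], counterfactual)]
cumulative_impact = list(accumulate(impact))
print(weights, impact, cumulative_impact)
```

In this toy data the treated unit was constructed as 0.5, 0.25 and 0.25 times the three controls plus an uplift of 2 after treatment, so the recovered impact is 2 per period. A grid search only scales to a handful of control units; it is used here purely to keep the sketch dependency-free.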

We can also use synthetic control methods to analyse data from geographical lift studies. For example, we can try to evaluate the causal impact of an intervention (e.g. a marketing campaign) run in one geographical area by using control geographical areas which are similar to the intervention area but which did not receive the specific marketing intervention.

ANCOVA is appropriate for non-equivalent group designs when you have a single pre- and post-intervention measurement and have a treatment and a control group.

| Group | pre | post |
|---|---|---|
| 0 | | |
| 0 | | |
| 1 | | |
| 1 | | |

| Frequentist | Bayesian |
|---|---|
| coming soon | [figure] |

The data from the control and treatment group are plotted, along with posterior predictive 94% credible intervals. The lower panel shows the estimated treatment effect.
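As a rough illustration of what the treatment effect means in a pre/post design with a control group, the sketch below computes the ANCOVA point estimate by hand. It is not CausalPy's implementation: it assumes a model of the form `post ~ 1 + group + pre` with a common slope in both groups, and the function name `ancova_effect` and the data are made up.

```python
from statistics import mean


def ancova_effect(pre, post, group):
    """Return the group coefficient of `post ~ 1 + group + pre`,
    assuming a common slope on `pre` in both groups."""
    rows = {
        g: [(x, y) for x, y, gi in zip(pre, post, group) if gi == g]
        for g in (0, 1)
    }
    pre_mean = {g: mean(x for x, _ in r) for g, r in rows.items()}
    post_mean = {g: mean(y for _, y in r) for g, r in rows.items()}
    # Pooled within-group slope of post on pre.
    sxy = sum(
        (x - pre_mean[g]) * (y - post_mean[g])
        for g, r in rows.items()
        for x, y in r
    )
    sxx = sum((x - pre_mean[g]) ** 2 for g, r in rows.items() for x, _ in r)
    slope = sxy / sxx
    # Difference in post means, adjusted for the difference in pre means.
    return (post_mean[1] - post_mean[0]) - slope * (pre_mean[1] - pre_mean[0])


# Hypothetical data: the treated group (1) starts higher on the pre measure.
group = [0, 0, 0, 0, 1, 1, 1, 1]
pre = [1.0, 2.0, 3.0, 4.0, 3.0, 4.0, 5.0, 6.0]
post = [1.5, 2.0, 2.5, 3.0, 4.5, 5.0, 5.5, 6.0]

effect = ancova_effect(pre, post, group)
print(effect)  # approximately 2.0; the raw difference in post means is 3.0
```

The adjustment matters because the groups are non-equivalent: part of the raw difference in post scores is explained by the groups already differing before the intervention.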

Difference in differences is appropriate for non-equivalent group designs when you have pre- and post-intervention measurements and have a treatment and a control group. Unlike the ANCOVA approach, difference in differences is appropriate when there are multiple pre- and/or post-treatment measurements.

Data is expected to be in the following form. Shown are just two units - one in the treated group (group=1) and one in the untreated group (group=0), but there can of course be multiple units per group. This is panel data (also known as repeated measures) where each unit is measured at 2 time points.

| Unit | Time | Group | Outcome |
|---|---|---|---|
| 0 | 0 | 0 | |
| 0 | 1 | 0 | |
| 1 | 0 | 1 | |
| 1 | 1 | 1 | |

| Frequentist | Bayesian |
|---|---|
| [figure] | [figure] |

The data, model fit, and counterfactual are plotted. Frequentist model fits result in point estimates, but the Bayesian analysis results in posterior distributions, represented by the violin plots. The causal impact is the difference between the counterfactual prediction (treated group, post treatment) and the observed values for the treated group, post treatment.
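The counterfactual logic described above can be written out for the simplest two-period case. This is a plain-Python sketch of the classic 2x2 difference-in-differences point estimate, not CausalPy's model: it assumes parallel trends, uses rows shaped like the table above, and the function name `did_estimate` and the data are hypothetical.

```python
from statistics import mean


def did_estimate(rows):
    """rows: list of (unit, time, group, outcome), with time and group in {0, 1}."""

    def cell_mean(group, time):
        return mean(y for _, t, g, y in rows if g == group and t == time)

    # Change over time in the untreated group.
    control_change = cell_mean(0, 1) - cell_mean(0, 0)
    # Counterfactual: where the treated group would be without treatment,
    # assuming it would have followed the same trend as the control group.
    counterfactual = cell_mean(1, 0) + control_change
    return cell_mean(1, 1) - counterfactual


# Hypothetical panel data: two untreated units and two treated units.
rows = [
    (0, 0, 0, 1.0), (0, 1, 0, 2.0),
    (1, 0, 0, 1.5), (1, 1, 0, 2.5),
    (2, 0, 1, 2.0), (2, 1, 1, 4.5),
    (3, 0, 1, 3.0), (3, 1, 1, 5.5),
]

effect = did_estimate(rows)
print(effect)  # approximately 1.5
```

Here the control group rises by 1.0 between the two time points, so the treated group's counterfactual post-treatment mean is 3.5 and the observed mean of 5.0 implies a causal impact of 1.5.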

Regression discontinuity designs are used when treatment is applied to units according to a cutoff on the running variable.

| Running variable | Outcome | Treated |
|---|---|---|
| | | False |
| | | False |
| | | True |
| | | True |

| Frequentist | Bayesian |
|---|---|
| [figure] | [figure] |

The data, model fit, and counterfactual are plotted (top). Frequentist analysis shows the causal impact with the blue shaded region, but this is not shown in the Bayesian analysis to avoid a cluttered chart. Instead, the Bayesian analysis shows shaded Bayesian credible regions of the model fits. The Frequentist analysis visualises the point estimate of the causal impact, but the Bayesian analysis also plots the posterior distribution of the regression discontinuity effect (bottom).

Regression kink designs are used when treatment is applied to units according to a cutoff on a running variable, which is typically not time. By looking for a change in gradient (a kink) at the precise point of the treatment cutoff, we can make causal claims about the potential impact of the treatment.

| Running variable | Outcome |
|---|---|

| Frequentist | Bayesian |
|---|---|
| coming soon | [figure] |

The data and model fit are plotted. The Bayesian analysis shows the posterior mean with credible intervals (shaded regions). We also report the Bayesian $R^2$ on the data along with the posterior mean and credible intervals of the change in gradient at the kink point.
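The change in gradient at the kink point can be illustrated with an ordinary least-squares fit of a continuous piecewise-linear model. The sketch below is only that: it assumes the model `y = b0 + b1*(x - kink) + b2*max(x - kink, 0)`, where `b2` is the change in gradient, solves the normal equations with a small hand-written solver, and uses made-up data. The function names are hypothetical and this is not how CausalPy fits the model.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def kink_gradient_change(running, outcome, kink):
    """Least-squares estimate of b2 in
    y = b0 + b1 * (x - kink) + b2 * max(x - kink, 0)."""
    design = [[1.0, x - kink, max(x - kink, 0.0)] for x in running]
    k = 3
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(design, outcome)) for i in range(k)]
    return solve_linear_system(xtx, xty)[2]


# Hypothetical data: gradient 0.5 below the kink at x = 4 and 2.0 above it.
running = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
outcome = [-1.0, -0.5, 0.0, 0.5, 1.0, 3.0, 5.0, 7.0, 9.0]

gradient_change = kink_gradient_change(running, outcome, kink=4.0)
print(gradient_change)  # approximately 1.5
```

Unlike a regression discontinuity model, this model has no jump term: the fitted function is continuous at the kink and only its slope changes.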

Interrupted time series analysis is appropriate when you have a time series of observations which undergo treatment at a particular point in time. This kind of analysis has no control group and looks for the presence of a change in the outcome measure at or soon after the treatment time. Multiple predictors can be included.

| Time | Outcome | Treated | Predictor |
|---|---|---|---|
| | | False | |
| | | False | |
| | | True | |
| | | True | |

| Frequentist | Bayesian |
|---|---|
| [figure] | [figure] |

The data, pre-treatment model fit, and counterfactual are plotted (top). The causal impact is shown as a blue shaded region. The Bayesian analysis shows shaded Bayesian credible regions of the model fit and counterfactual. Also shown are the causal impact (middle) and cumulative causal impact (bottom).

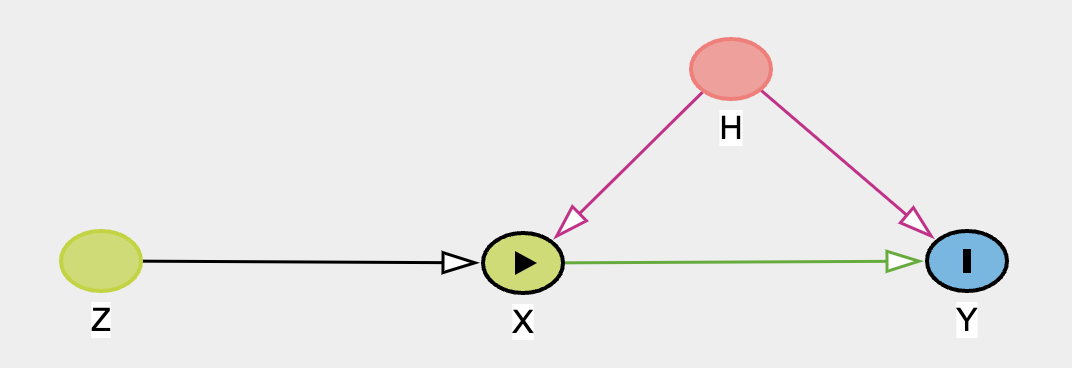

Instrumental variable regression is an appropriate technique when you wish to estimate the treatment effect of some variable on another, but are concerned that the treatment variable is endogenous in the system of interest, i.e. correlated with the errors. In this case an “instrument” variable can be used in a regression context to disentangle the treatment effect from the confounding introduced by endogeneity.

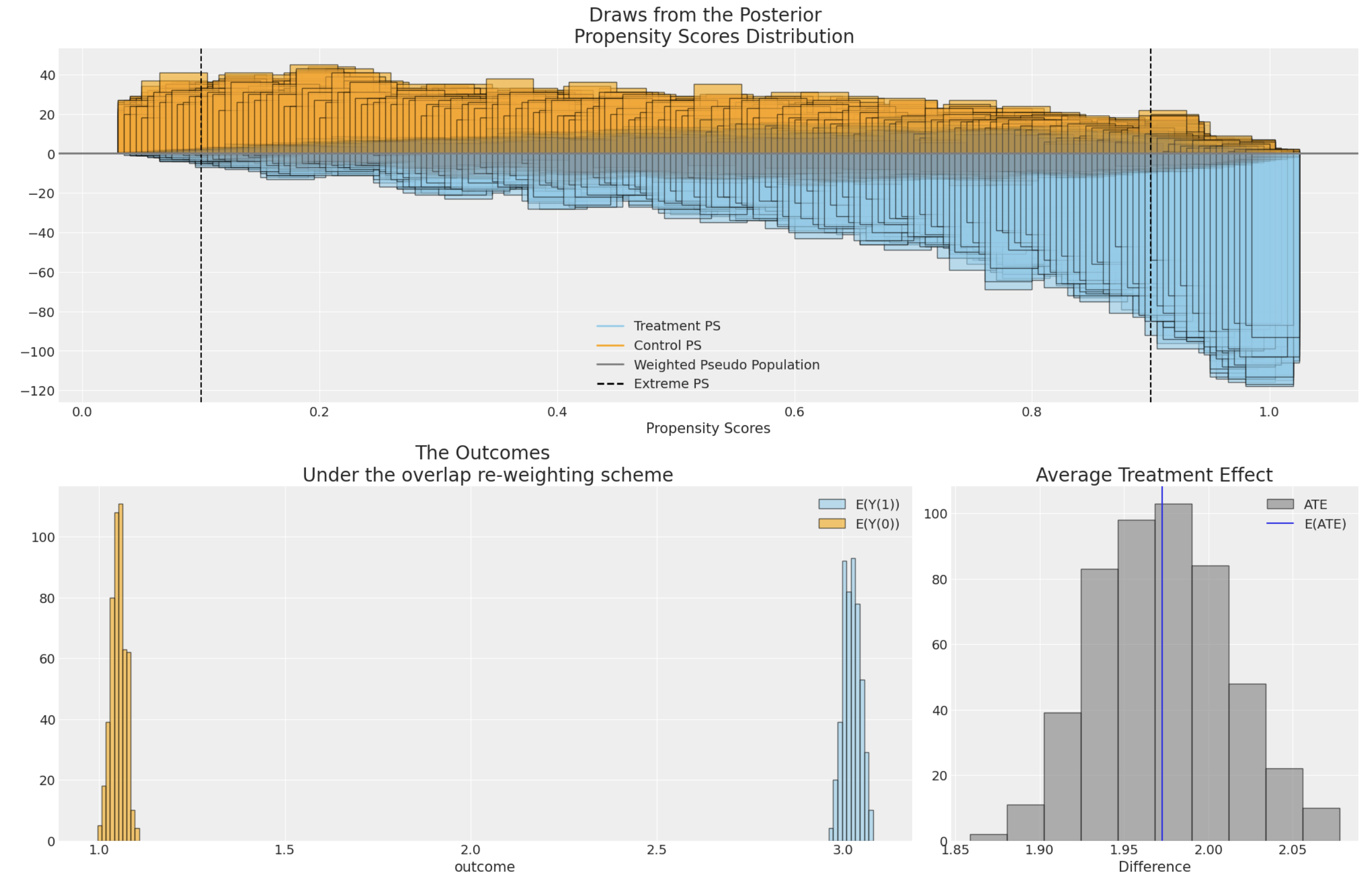

Propensity scores are often used to address the risks of bias or confounding introduced in an observational study by selection effects into the treatment condition. Propensity scores can be used in a number of ways, but here we demonstrate their usage within corrective weighting schemes aimed to recover as-if random allocation of subjects to the treatment condition. The technique "up-weights" or "down-weights" individual observations to better estimate a causal estimand such as the average treatment effect.
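The up-weighting and down-weighting can be sketched in a few lines. The example below is an illustration under simplifying assumptions, not CausalPy's implementation: the propensity score is estimated as the share of treated units within each level of a single discrete covariate (rather than with a fitted model), every stratum is assumed to contain both treated and untreated units, and the average treatment effect is computed with normalised inverse propensity weights. The function name `ipw_ate` and the data are made up.

```python
from collections import defaultdict


def ipw_ate(rows):
    """rows: list of (stratum, treated, outcome), with treated in {0, 1}.
    Returns the normalised inverse-propensity-weighted ATE estimate."""
    counts = defaultdict(lambda: [0, 0])  # stratum -> [n_treated, n_total]
    for stratum, treated, _ in rows:
        counts[stratum][0] += treated
        counts[stratum][1] += 1
    propensity = {s: n_t / n for s, (n_t, n) in counts.items()}

    sums = {True: [0.0, 0.0], False: [0.0, 0.0]}  # [weighted outcome, weight]
    for stratum, treated, y in rows:
        p = propensity[stratum]
        # Rarely observed treatment statuses get larger weights.
        weight = 1 / p if treated else 1 / (1 - p)
        sums[bool(treated)][0] += weight * y
        sums[bool(treated)][1] += weight
    return sums[True][0] / sums[True][1] - sums[False][0] / sums[False][1]


# Hypothetical data: stratum "B" has a higher baseline outcome and is more
# likely to be treated; the true treatment effect is 2 in both strata.
rows = [
    ("A", 1, 3.0), ("A", 0, 1.0), ("A", 0, 1.0), ("A", 0, 1.0),
    ("B", 1, 7.0), ("B", 1, 7.0), ("B", 1, 7.0), ("B", 0, 5.0),
]

treated_mean = sum(y for _, t, y in rows if t) / sum(1 for _, t, _ in rows if t)
control_mean = sum(y for _, t, y in rows if not t) / sum(1 for _, t, _ in rows if not t)
naive_difference = treated_mean - control_mean
ate = ipw_ate(rows)
print(naive_difference)  # approximately 4.0, inflated by selection into treatment
print(ate)               # approximately 2.0
```

The naive comparison overstates the effect because treated units come disproportionately from the high-baseline stratum; re-weighting makes both groups resemble the full sample.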

Here are some general resources about causal inference:

- The official PyMC examples gallery has a set of examples specifically relating to causal inference.

- Angrist, J. D., & Pischke, J. S. (2009). Mostly harmless econometrics: An empiricist's companion. Princeton University Press.

- Angrist, J. D., & Pischke, J. S. (2014). Mastering 'metrics: The path from cause to effect. Princeton University Press.

- Cunningham, S. (2021). Causal inference: The Mixtape. Yale University Press.

- Huntington-Klein, N. (2021). The effect: An introduction to research design and causality. Chapman and Hall/CRC.

- Reichardt, C. S. (2019). Quasi-experimentation: A guide to design and analysis. Guilford Publications.

This repository is supported by PyMC Labs.

If you are interested in seeing what PyMC Labs can do for you, then please email ben.vincent@pymc-labs.com. We work with companies at a variety of scales and with varying levels of existing modeling capacity. We also run corporate workshop training events and can provide sessions ranging from introduction to Bayes to more advanced topics.