Lightweight and fast OCR models for license plate text recognition. You can train models from scratch or use the trained models for inference.

The idea is to use this after a plate object detector, since the OCR expects the cropped plates.

- Keras 3 Backend Support: Compatible with TensorFlow, JAX, and PyTorch backends 🧠

- Augmentation Variety: Diverse augmentations via Albumentations library 🖼️

- Efficient Execution: Lightweight models that are cheap to run 💰

- ONNX Runtime Inference: Fast and optimized inference with ONNX runtime ⚡

- User-Friendly CLI: Simplified CLI for training and validating OCR models 🛠️

- Model HUB: Access to a collection of pre-trained models ready for inference 🌟

| Model Name | Time b=1 (ms)[1] |

Throughput (plates/second)[1] |

Accuracy[2] | Dataset |

|---|---|---|---|---|

argentinian-plates-cnn-model |

2.1 | 476 | 94.05% | Non-synthetic, plates up to 2020. Dataset arg_plate_dataset.zip. |

argentinian-plates-cnn-synth-model |

2.1 | 476 | 94.19% | Plates up to 2020 + synthetic plates. Dataset arg_plate_dataset_plus_synth.zip. |

european-plates-mobile-vit-v2-model |

2.9 | 344 | 92.5%[3] | European plates (from +40 countries, trained on 40k+ plates). |

🆕🔥 global-plates-mobile-vit-v2-model |

2.9 | 344 | 93.3%[4] | Worldwide plates (from +65 countries, trained on 85k+ plates). |

Tip

Try fast-plate-ocr pre-trained models in Hugging Spaces.

Notes

[1] Inference on Mac M1 chip using CPUExecutionProvider. Utilizing CoreMLExecutionProvider accelerates speed by 5x in the CNN models.

[2] Accuracy is what we refer to as plate_acc. See metrics section.

[3] For detailed accuracy for each country see results and the corresponding val split used.

[4] For detailed accuracy for each country see results.

Reproduce results

-

Calculate Inference Time:

pip install fast_plate_ocr

from fast_plate_ocr import ONNXPlateRecognizer m = ONNXPlateRecognizer("argentinian-plates-cnn-model") m.benchmark()

-

Calculate Model accuracy:

pip install fast-plate-ocr[train] curl -LO https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_cnn_ocr_config.yaml curl -LO https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_cnn_ocr.keras curl -LO https://github.com/ankandrew/fast-plate-ocr/releases/download/arg-plates/arg_plate_benchmark.zip unzip arg_plate_benchmark.zip fast_plate_ocr valid \ -m arg_cnn_ocr.keras \ --config-file arg_cnn_ocr_config.yaml \ --annotations benchmark/annotations.csv

For inference, install:

pip install fast_plate_ocrTo predict from disk image:

from fast_plate_ocr import ONNXPlateRecognizer

m = ONNXPlateRecognizer('argentinian-plates-cnn-model')

print(m.run('test_plate.png'))To run model benchmark:

from fast_plate_ocr import ONNXPlateRecognizer

m = ONNXPlateRecognizer('argentinian-plates-cnn-model')

m.benchmark()Make sure to check out the docs for more information.

To train or use the CLI tool, you'll need to install:

pip install fast_plate_ocr[train]Important

Make sure you have installed a supported backend for Keras.

To train the model you will need:

- A configuration used for the OCR model. Depending on your use case, you might have more plate slots or different set

of characters. Take a look at the config for Argentinian license plate as an example:

# Config example for Argentinian License Plates # The old license plates contain 6 slots/characters (i.e. JUH697) # and new 'Mercosur' contain 7 slots/characters (i.e. AB123CD) # Max number of plate slots supported. This represents the number of model classification heads. max_plate_slots: 7 # All the possible character set for the model output. alphabet: '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ_' # Padding character for plates which length is smaller than MAX_PLATE_SLOTS. It should still be present in the alphabet. pad_char: '_' # Image height which is fed to the model. img_height: 70 # Image width which is fed to the model. img_width: 140

- A labeled dataset, see arg_plate_dataset.zip for the expected data format.

- Run train script:

# You can set the backend to either TensorFlow, JAX or PyTorch # (just make sure it is installed) KERAS_BACKEND=tensorflow fast_plate_ocr train \ --annotations path_to_the_train.csv \ --val-annotations path_to_the_val.csv \ --config-file config.yaml \ --batch-size 128 \ --epochs 750 \ --dense \ --early-stopping-patience 100 \ --reduce-lr-patience 50

You will probably want to change the augmentation pipeline to apply to your dataset.

In order to do this define an Albumentations pipeline:

import albumentations as A

transform_pipeline = A.Compose(

[

# ...

A.RandomBrightnessContrast(brightness_limit=0.1, contrast_limit=0.1, p=1),

A.MotionBlur(blur_limit=(3, 5), p=0.1),

A.CoarseDropout(max_holes=10, max_height=4, max_width=4, p=0.3),

# ... and any other augmentation ...

]

)

# Export to a file (this resultant YAML can be used by the train script)

A.save(transform_pipeline, "./transform_pipeline.yaml", data_format="yaml")And then you can train using the custom transformation pipeline with the --augmentation-path option.

It's useful to visualize the augmentation pipeline before training the model. This helps us to identify if we should apply more heavy augmentation or less, as it can hurt the model.

You might want to see the augmented image next to the original, to see how much it changed:

fast_plate_ocr visualize-augmentation \

--img-dir benchmark/imgs \

--columns 2 \

--show-original \

--augmentation-path '/transform_pipeline.yaml'You will see something like:

After finishing training you can validate the model on a labeled test dataset.

Example:

fast_plate_ocr valid \

--model arg_cnn_ocr.keras \

--config-file arg_plate_example.yaml \

--annotations benchmark/annotations.csvOnce you finish training your model, you can view the model predictions on raw data with:

fast_plate_ocr visualize-predictions \

--model arg_cnn_ocr.keras \

--img-dir benchmark/imgs \

--config-file arg_cnn_ocr_config.yamlYou will see something like:

Exporting the Keras model to ONNX format might be beneficial to speed-up inference time.

fast_plate_ocr export-onnx \

--model arg_cnn_ocr.keras \

--output-path arg_cnn_ocr.onnx \

--opset 18 \

--config-file arg_cnn_ocr_config.yamlTo train the model, you can install the ML Framework you like the most. Keras 3 has support for TensorFlow, JAX and PyTorch backends.

To change the Keras backend you can either:

- Export

KERAS_BACKENDenvironment variable, i.e. to use JAX for training:KERAS_BACKEND=jax fast_plate_ocr train --config-file ...

- Edit your local config file at

~/.keras/keras.json.

Note: You will probably need to install your desired framework for training.

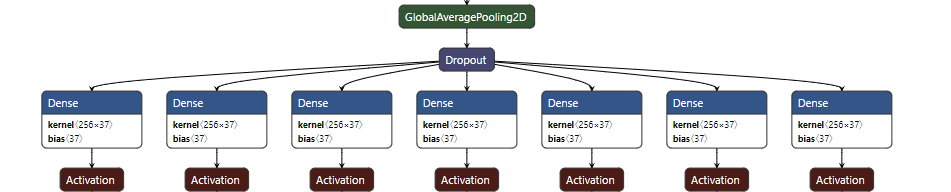

The current model architecture is quite simple but effective. See cnn_ocr_model for implementation details.

The model output consists of several heads. Each head represents the prediction of a character of the

plate. If the plate consists of 7 characters at most (max_plate_slots=7), then the model would have 7 heads.

Example of Argentinian plates:

Each head will output a probability distribution over the vocabulary specified during training. So the output

prediction for a single plate will be of shape (max_plate_slots, vocabulary_size).

During training, you will see the following metrics

-

plate_acc: Compute the number of license plates that were fully classified. For a single plate, if the ground truth is

ABC123and the prediction is alsoABC123, it would score 1. However, if the prediction wasABD123, it would score 0, as not all characters were correctly classified. -

cat_acc: Calculate the accuracy of individual characters within the license plates that were correctly classified. For example, if the correct label is

ABC123and the prediction isABC133, it would yield a precision of 83.3% (5 out of 6 characters correctly classified), rather than 0% as in plate_acc, because it's not completely classified correctly. -

top_3_k: Calculate how frequently the true character is included in the top-3 predictions (the three predictions with the highest probability).

Contributions to the repo are greatly appreciated. Whether it's bug fixes, feature enhancements, or new models, your contributions are warmly welcomed.

To start contributing or to begin development, you can follow these steps:

- Clone repo

git clone https://github.com/ankandrew/fast-plate-ocr.git

- Install all dependencies using Poetry:

poetry install --all-extras

- To ensure your changes pass linting and tests before submitting a PR:

make checks

If you want to train a model and share it, we'll add it to the HUB 🚀

If you look to contribute to the repo, some cool things are in the backlog: