This is a simple guide to running Llama3 locally on your NAS using Open WebUI and Ollama.

- UGREEN NASync DXP480T Plus

- CPU: Intel® Core™ i5-1235U Processor (12M Cache, up to 4.40 GHz, 10 Cores 12 Threads)

- RAM: 8 GB

Open a terminal and run the following commands to create directories for your AI models and the web interface:

$ mkdir -p /home/ansonhe/AI/ollama

$ mkdir -p /home/ansonhe/AI/Llama3/open-webui

Copy the provided docker-compose.yml file to your directory, then start the services using Docker:

$ sudo docker-compose up -d

Open a web browser and go to YOUR_NAS_IP:8080.

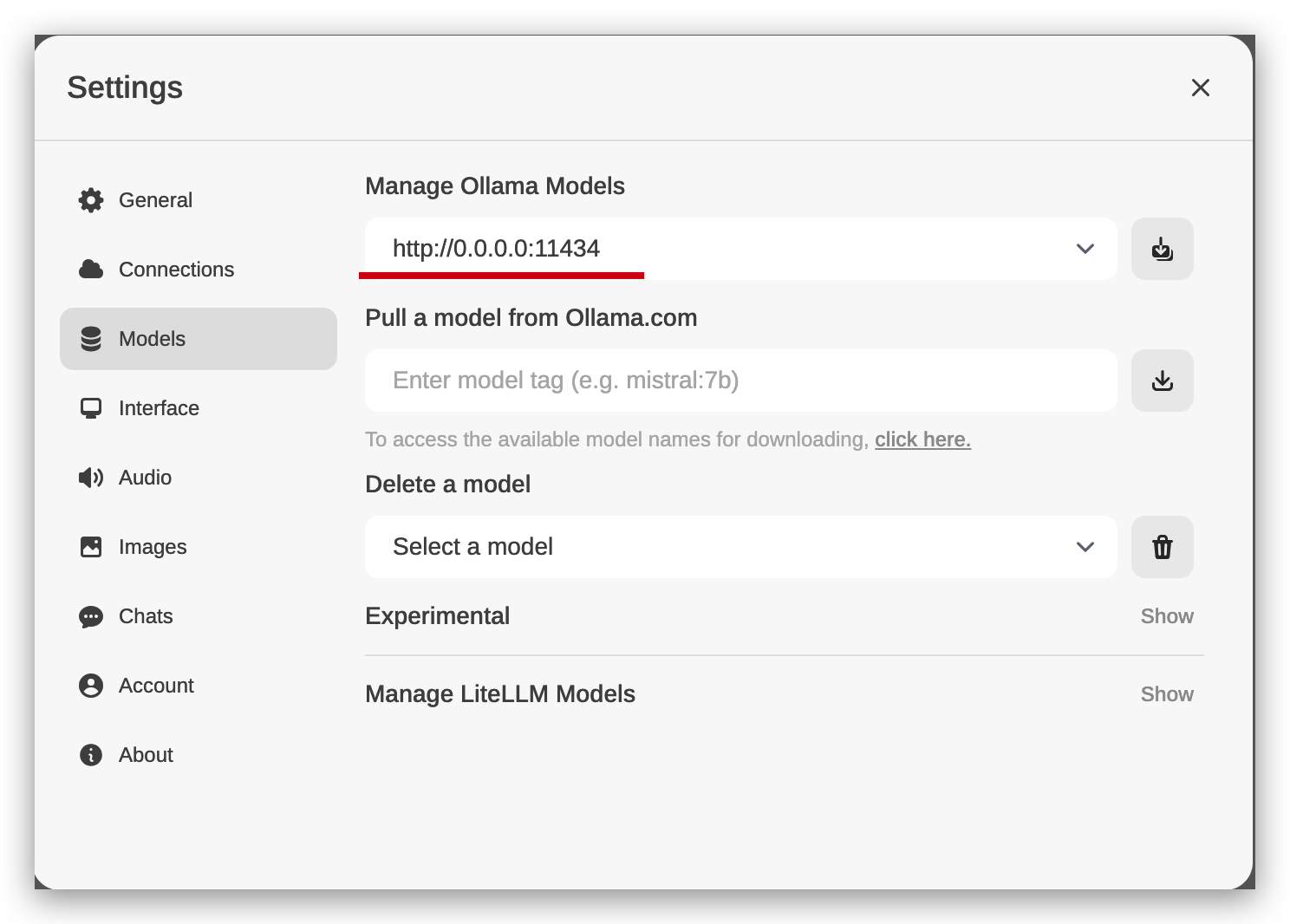
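The docker-compose.yml file used in the step above is not reproduced in this guide. As a rough idea of what such a file could contain, here is a minimal sketch written out with a shell heredoc. It is an assumption, not the original file: it uses the public `ollama/ollama` and `ghcr.io/open-webui/open-webui:main` images, host networking (so that Open WebUI is served on port 8080 and Ollama on port 11434, matching the addresses used in this guide), and the two directories created earlier mounted at each image's usual data path. The container names are hypothetical.

```shell
# Hypothetical docker-compose.yml, NOT the original file from this guide.
# Run this in the directory where you want the file; it overwrites any
# existing docker-compose.yml there.
cat > docker-compose.yml <<'EOF'
version: "3"   # needed by older docker-compose releases; newer ones ignore it

services:
  ollama:
    image: ollama/ollama
    container_name: ollama          # hypothetical name
    network_mode: host              # Ollama API on port 11434
    volumes:
      - /home/ansonhe/AI/ollama:/root/.ollama
    restart: unless-stopped

  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui      # hypothetical name
    network_mode: host              # web interface on port 8080
    volumes:
      - /home/ansonhe/AI/Llama3/open-webui:/app/backend/data
    restart: unless-stopped
    depends_on:
      - ollama
EOF

echo "Wrote $(pwd)/docker-compose.yml"
```

If you already have the provided file, use that one instead; this sketch is only meant to show which images, ports and volumes are involved.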

In the web interface, change the Ollama Models address to 0.0.0.0:11434.

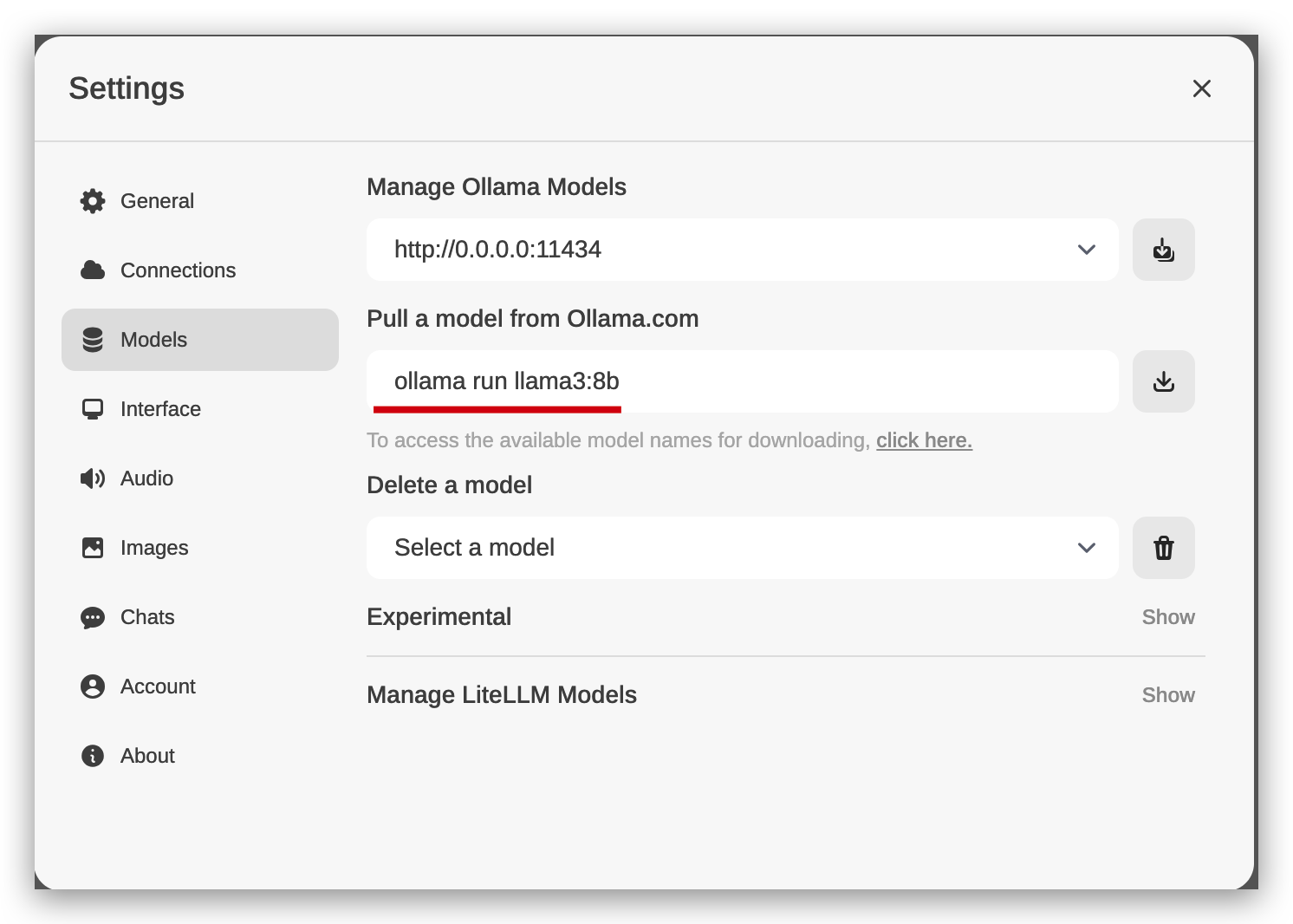
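If the web interface cannot connect after changing the address, it can help to check from a terminal that Ollama is actually answering on port 11434. The snippet below is a small sketch that queries Ollama's `/api/version` endpoint with curl. `NAS_IP` is a placeholder variable introduced here for illustration; it defaults to 127.0.0.1, which assumes you run the check on the NAS itself.

```shell
# NAS_IP is a hypothetical variable; set it to your NAS address if you
# run this from another machine, e.g. NAS_IP=192.168.1.50
NAS_IP="${NAS_IP:-127.0.0.1}"
OLLAMA_URL="http://${NAS_IP}:11434"

# -f: fail on HTTP errors, -sS: quiet but still show real errors
if curl -fsS --connect-timeout 5 "${OLLAMA_URL}/api/version" >/dev/null 2>&1; then
  STATUS="reachable"
else
  STATUS="unreachable"
fi

echo "Ollama at ${OLLAMA_URL}: ${STATUS}"
```

An "unreachable" result usually means the container is not running or the port is blocked, so it is worth checking `sudo docker ps` before changing anything in the web interface.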

Download the Llama3 model: ollama run llama3:8b. Only the 8B version is used here because of the performance limitations of the NAS.

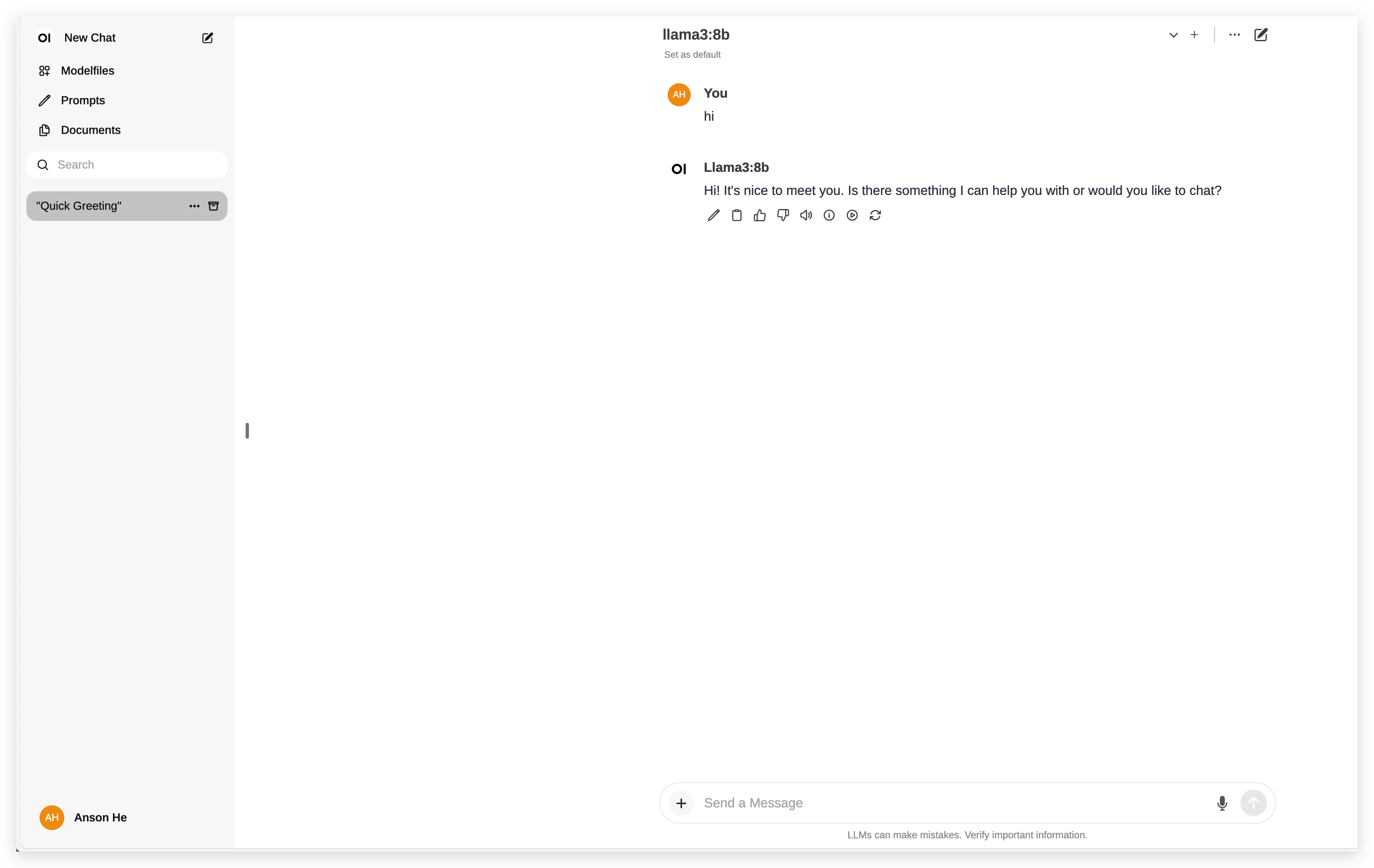

After the model has been downloaded, you can select Llama3 as your default model and host it locally.
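To confirm that the model really answers, you can also send it a short prompt from a terminal instead of the web interface. This is a minimal sketch using Ollama's `/api/generate` endpoint with streaming turned off; `NAS_IP` and the test prompt are placeholders chosen for illustration, and the first reply can take a while on NAS hardware.

```shell
# NAS_IP is a hypothetical variable; it defaults to the local machine
NAS_IP="${NAS_IP:-127.0.0.1}"
MODEL="llama3:8b"
PROMPT="Say hello in one sentence."

# JSON body for /api/generate; "stream": false asks for one complete reply
PAYLOAD=$(printf '{"model": "%s", "prompt": "%s", "stream": false}' "$MODEL" "$PROMPT")
echo "Request body: ${PAYLOAD}"

# Give the model up to two minutes; print a hint instead of failing hard
curl -fsS --connect-timeout 5 --max-time 120 \
  "http://${NAS_IP}:11434/api/generate" -d "$PAYLOAD" \
  || echo "Request failed: is Ollama running and has ${MODEL} been downloaded?"
```

On success, the reply is a JSON object whose `response` field holds the generated text.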

Please note that the CPU of the NAS has limited processing power, so large language models (LLMs) may still run slowly on it, even at the 8B size.