Description

Apache Airflow version

3.1.0

If "Other Airflow 2 version" selected, which one?

No response

What happened?

I upgraded from 3.0.3 to 3.1.0, then my jobs started to fail.

In my configuration, AIRFLOW__CORE__EXECUTOR is set to CeleryExecutor,KubernetesExecutor. This worked fine in 3.0.3: all task instances (TIs) ran on CeleryExecutor, except for operators where I specified executor='KubernetesExecutor'.
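
To make the expected behavior concrete, here is a minimal sketch of how a comma-separated executor setting is meant to resolve per task. It assumes the first listed executor is the default and that a task-level `executor` must match one of the configured names. `parse_executors` and `resolve_executor` are hypothetical helpers written for illustration; they are not Airflow's implementation.

```python
from __future__ import annotations


def parse_executors(config_value: str) -> list[str]:
    """Split a comma-separated executor setting into a list of names."""
    return [name.strip() for name in config_value.split(",") if name.strip()]


def resolve_executor(config_value: str, task_executor: str | None = None) -> str:
    """Pick the executor for a task (hypothetical helper, not Airflow's code).

    The first configured executor acts as the default; a task-level
    ``executor`` must match one of the configured names.
    """
    configured = parse_executors(config_value)
    if not configured:
        raise ValueError("no executor configured")
    if task_executor is None:
        # No per-task override: fall back to the first (default) executor.
        return configured[0]
    if task_executor not in configured:
        raise ValueError(f"executor {task_executor!r} is not configured")
    return task_executor


setting = "CeleryExecutor,KubernetesExecutor"
default_choice = resolve_executor(setting)
override_choice = resolve_executor(setting, "KubernetesExecutor")
```

With the setting from this report, tasks without an override resolve to CeleryExecutor and the task with executor='KubernetesExecutor' resolves to KubernetesExecutor, which matches what 3.0.3 did.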

But in 3.1.0, operators where I specified executor='KubernetesExecutor' always fail with the error below:

[2025-09-30 15:13:00] INFO - Filling up the DagBag from /tmp/s3_dag_bundle_ri5yvvpv/dags/tenant_test/xd_test.py source=airflow.models.dagbag.DagBag loc=dagbag.py:593

[2025-09-30 15:13:00] ERROR - Failed to bag_dag: /tmp/s3_dag_bundle_ri5yvvpv/dags/tenant_test/xd_test.py source=airflow.models.dagbag.DagBag loc=dagbag.py:518

UnknownExecutorException: Task 'xd_asked_for_another_task' specifies executor 'KubernetesExecutor', which is not available. Make sure it is listed in your [core] executors configuration, or update the task's executor to use one of the configured executors.

File "/usr/local/lib/python3.12/site-packages/airflow/models/dagbag.py", line 513 in _process_modules

File "/usr/local/lib/python3.12/site-packages/airflow/models/dagbag.py", line 156 in _validate_executor_fields

UnknownExecutorException: Unknown executor being loaded: KubernetesExecutor

File "/usr/local/lib/python3.12/site-packages/airflow/models/dagbag.py", line 154 in _validate_executor_fields

File "/usr/local/lib/python3.12/site-packages/airflow/executors/executor_loader.py", line 245 in lookup_executor_name_by_str

[2025-09-30 15:13:00] ERROR - Dag not found during start up dag_id=xd_test bundle=BundleInfo(name='s3-dags', version=None) path=dags/tenant_test/xd_test.py source=task loc=task_runner.py:633

[2025-09-30 15:13:05] WARNING - Process exited abnormally exit_code=1 source=task

It seems the second executor listed in AIRFLOW__CORE__EXECUTOR is not being recognized.

This breaks the multi-executor feature.

UPDATE:

Actually, it seems the multi-executor feature itself is not broken; the problem is in the KubernetesExecutor.

If I set AIRFLOW__CORE__EXECUTOR to LocalExecutor,KubernetesExecutor in the pod template file, the task works. But according to the docs, the pod template should only need LocalExecutor: https://airflow.apache.org/docs/apache-airflow-providers-cncf-kubernetes/stable/kubernetes_executor.html#example-pod-templates

So something seems to be broken, and it will affect a lot of KubernetesExecutor users.
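
The failure can be modeled with a short sketch. It assumes that the worker pod re-parses the DAG and checks each task's `executor` against the pod's own AIRFLOW__CORE__EXECUTOR value, which is what the traceback through `_validate_executor_fields` suggests. `UnknownExecutorError` and `validate_task_executor` are hypothetical stand-ins, not the real Airflow code.

```python
from __future__ import annotations


class UnknownExecutorError(Exception):
    """Stand-in for the exception seen in the traceback (hypothetical)."""


def validate_task_executor(env: dict[str, str], task_id: str, task_executor: str | None) -> None:
    """Reject a task whose executor is not listed in the environment's setting."""
    raw = env.get("AIRFLOW__CORE__EXECUTOR", "")
    configured = [name.strip() for name in raw.split(",") if name.strip()]
    if task_executor is not None and task_executor not in configured:
        raise UnknownExecutorError(
            f"Task {task_id!r} specifies executor {task_executor!r}, "
            f"which is not in {configured}"
        )


def parses_ok(env: dict[str, str]) -> bool:
    """Return True if the task from this report passes validation in `env`."""
    try:
        validate_task_executor(env, "xd_asked_for_another_task", "KubernetesExecutor")
    except UnknownExecutorError:
        return False
    return True


# Scheduler side: both executors are configured.
scheduler_env = {"AIRFLOW__CORE__EXECUTOR": "CeleryExecutor,KubernetesExecutor"}
# Worker pod as in the documented pod template: LocalExecutor only.
pod_env_documented = {"AIRFLOW__CORE__EXECUTOR": "LocalExecutor"}
# Worker pod with the workaround described above.
pod_env_workaround = {"AIRFLOW__CORE__EXECUTOR": "LocalExecutor,KubernetesExecutor"}

results = {
    "scheduler": parses_ok(scheduler_env),
    "pod_documented": parses_ok(pod_env_documented),
    "pod_workaround": parses_ok(pod_env_workaround),
}
```

Under this model, the DAG validates on the scheduler and in the workaround pod but fails in the pod configured as documented. That is consistent with the "Dag not found during start up" error coming from the task process rather than from the scheduler.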

What you think should happen instead?

No response

How to reproduce

The DAG I used

from __future__ import annotations

# [START tutorial]

# [START import_module]

import textwrap

from datetime import datetime, timedelta

# Operators; we need this to operate!

from airflow.providers.standard.operators.bash import BashOperator

# The DAG object; we'll need this to instantiate a DAG

from airflow.sdk import DAG

# [END import_module]

# [START instantiate_dag]

with DAG(

"xd_test",

# [START default_args]

# These args will get passed on to each operator

# You can override them on a per-task basis during operator initialization

default_args={

"depends_on_past": False,

"retries": 1,

"retry_delay": timedelta(minutes=5),

# 'queue': 'bash_queue',

# 'pool': 'backfill',

# 'priority_weight': 10,

# 'end_date': datetime(2016, 1, 1),

# 'wait_for_downstream': False,

# 'execution_timeout': timedelta(seconds=300),

# 'on_failure_callback': some_function, # or list of functions

# 'on_success_callback': some_other_function, # or list of functions

# 'on_retry_callback': another_function, # or list of functions

# 'sla_miss_callback': yet_another_function, # or list of functions

# 'on_skipped_callback': another_function, #or list of functions

# 'trigger_rule': 'all_success'

},

# [END default_args]

description="A simple tutorial DAG",

schedule=timedelta(days=1),

start_date=datetime(2021, 1, 1),

catchup=False,

tags=["example"],

) as dag:

# [END instantiate_dag]

# t1, t2 and t3 are examples of tasks created by instantiating operators

# [START basic_task]

t1 = BashOperator(

task_id="print_date",

bash_command="date",

)

t2 = BashOperator(

task_id="sleep",

depends_on_past=False,

bash_command="sleep 5",

retries=3,

)

# [END basic_task]

# [START documentation]

t1.doc_md = textwrap.dedent(

"""\

#### Task Documentation

You can document your task using the attributes `doc_md` (markdown),

`doc` (plain text), `doc_rst`, `doc_json`, `doc_yaml` which gets

rendered in the UI's Task Instance Details page.

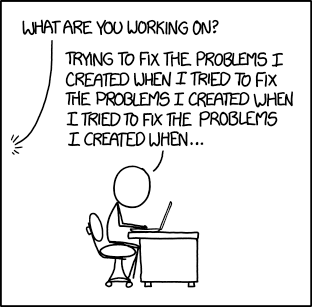

**Image Credit:** Randall Munroe, [XKCD](https://xkcd.com/license.html)

"""

)

dag.doc_md = __doc__ # providing that you have a docstring at the beginning of the DAG; OR

dag.doc_md = """

This is a documentation placed anywhere

""" # otherwise, type it like this

# [END documentation]

# [START jinja_template]

templated_command = textwrap.dedent(

"""

{% for i in range(5) %}

echo "{{ ds }}"

echo "{{ macros.ds_add(ds, 7)}}"

{% endfor %}

"""

)

t3 = BashOperator(

task_id="templated",

depends_on_past=False,

bash_command=templated_command,

)

# [END jinja_template]

t4 = BashOperator(

task_id="xd_asked_for_another_task",

depends_on_past=False,

bash_command="echo Hi_dude~",

executor='KubernetesExecutor'

)

t1 >> [t2, t3]

# [END tutorial]

Operating System

NAME="Red Hat Enterprise Linux" VERSION="9.6 (Plow)" ID="rhel" ID_LIKE="fedora" VERSION_ID="9.6" PLATFORM_ID="platform:el9" PRETTY_NAME="Red Hat Enterprise Linux 9.6 (Plow)"

Versions of Apache Airflow Providers

No response

Deployment

Official Apache Airflow Helm Chart

Deployment details

No response

Anything else?

No response

Are you willing to submit PR?

- Yes I am willing to submit a PR!

Code of Conduct

- I agree to follow this project's Code of Conduct