
Reduce "start-up" time for tasks in LocalExecutor #11327


I was thinking I'd follow up with a separate PR to add the same to CeleryExecutor.


I just noticed that we still need a fork in here.


Updated benchmarks: the fork is basically free.


Hmmm, something is broken in all the kube tests, unrelated to this.

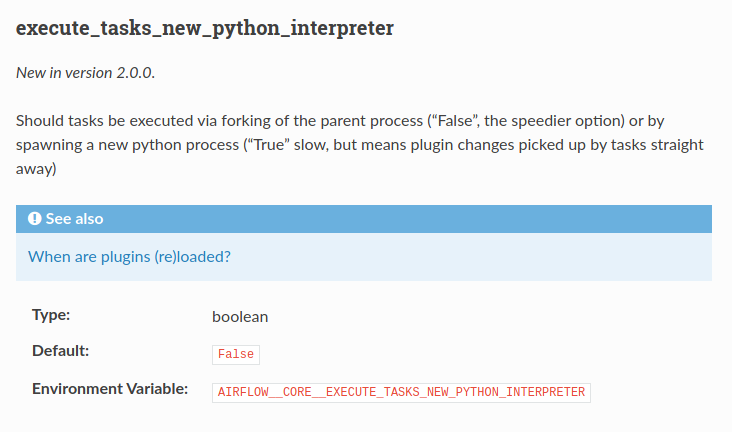


Oh, those kube tests will fail because I haven't re-triggered the image build. If the rest of the tests pass, I'll merge this.

This is similar to apache#11327, but for Celery this time. The impact is not quite as pronounced here (for simple DAGs at least), but it takes the average queued-to-start delay from 1.5s to 0.4s.


Spawning a whole new Python process and then re-loading all of Airflow is expensive. Although this time fades to insignificance for long-running tasks, the delay gives a poor experience to new users who are just trying out Airflow for the first time. For the LocalExecutor, this cuts the "queued time" down from 1.5s to 0.1s on average.

Data on this for a simple 10-task sequential DAG:

I found this during my general benchmarking and profiling of the scheduler for AIP-15, but it isn't tied to any of that work. More improvements of this kind are coming; each is unrelated, but they all add up.
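A minimal sketch of the idea, for illustration only: this is not the LocalExecutor code from this PR, and the function names (`run_task_in_new_interpreter`, `run_task_in_fork`, `average_seconds`) are hypothetical. It contrasts starting a fresh interpreter for each task with forking a process that has already done its imports. `os.fork` is POSIX-only. Note that the spawned child here only pays for bare interpreter start-up; in the scenario described above it would also have to re-import Airflow, which is the expensive part.

```python
import os
import subprocess
import sys
import time
import traceback
from typing import Callable


def run_task_in_new_interpreter(code: str) -> int:
    """Start a brand-new Python interpreter to run `code`.

    Every import the task needs has to happen again in the child,
    which is where the start-up cost comes from.
    """
    result = subprocess.run([sys.executable, "-c", code])
    return result.returncode


def run_task_in_fork(task: Callable[[], None]) -> int:
    """Run `task` in a forked child (POSIX only).

    The child is a copy of the parent, so modules the parent has already
    imported are available immediately without being loaded again.
    """
    pid = os.fork()
    if pid == 0:
        # Child process.
        exit_code = 1
        try:
            task()
            exit_code = 0
        except BaseException:
            traceback.print_exc()
        finally:
            sys.stderr.flush()
            # Leave immediately without running the parent's atexit
            # handlers or flushing its copied stdout buffer.
            os._exit(exit_code)
    # Parent process: wait for the child and decode its exit status.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return -1  # child was killed by a signal


def average_seconds(fn: Callable[[], object], repeats: int = 5) -> float:
    """Average wall-clock time of calling `fn()` `repeats` times."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    return (time.perf_counter() - start) / repeats


if __name__ == "__main__":
    # Placeholder task; a real task would use the already-imported modules.
    def noop_task() -> None:
        pass

    spawn = average_seconds(lambda: run_task_in_new_interpreter("pass"))
    fork = average_seconds(lambda: run_task_in_fork(noop_task))
    print(f"spawn: {spawn * 1000:.1f} ms, fork: {fork * 1000:.1f} ms")
```

Running the script prints the average time per approach on your machine; absolute numbers will vary with hardware and with how much the parent has imported.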
