
Added ZooKeeper instrumentation for enhanced stats #436

Merged


rdhabalia approved these changes on Jun 8, 2017


👍

hangc0276 added a commit to hangc0276/pulsar that referenced this pull request on May 26, 2021

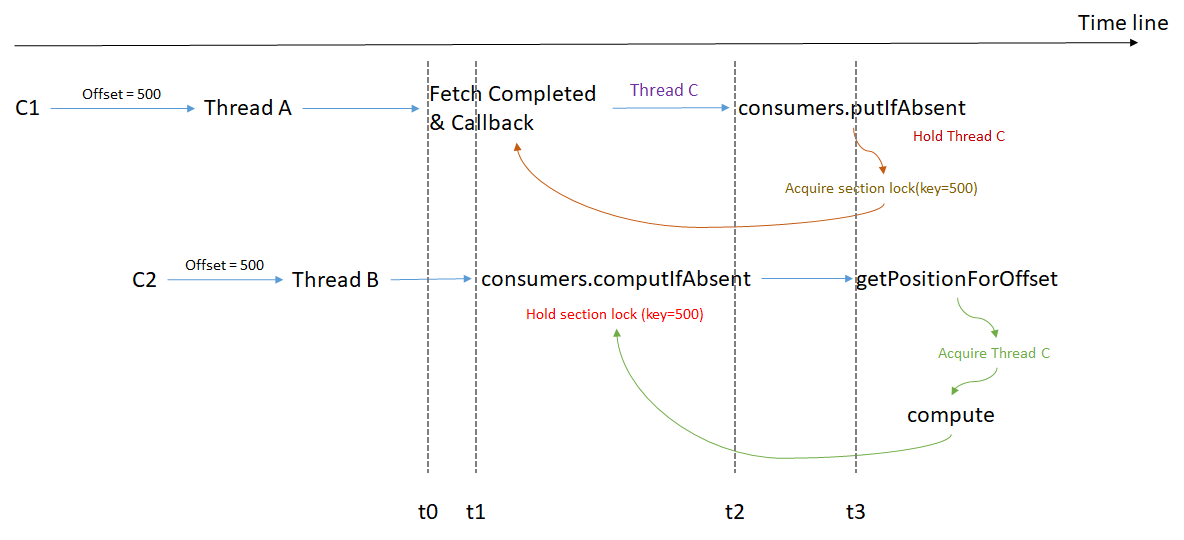

Fix apache#431

### Motivation

When multiple consumers poll the same topic at the same position at the same time, they may trigger a deadlock on the `KafkaTopicConsumerManager#consumers` concurrent hash map and block subsequent read/write operations on that topic.

### Deadlock

Parsed deadlock stack:

```
"bookkeeper-ml-workers-OrderedExecutor-0-0" apache#141 prio=5 os_prio=0 tid=0x00007f4866042000 nid=0x1013 waiting on condition [0x00007f47c9ad6000]
   java.lang.Thread.State: WAITING (parking)
    at sun.misc.Unsafe.park(Native Method)
    - parking to wait for <0x00000000865b0b58> (a org.apache.pulsar.common.util.collections.ConcurrentLongHashMap$Section)
    at java.util.concurrent.locks.StampedLock.acquireWrite(StampedLock.java:1119)
    at java.util.concurrent.locks.StampedLock.writeLock(StampedLock.java:354)
    at org.apache.pulsar.common.util.collections.ConcurrentLongHashMap$Section.put(ConcurrentLongHashMap.java:272)
    at org.apache.pulsar.common.util.collections.ConcurrentLongHashMap.putIfAbsent(ConcurrentLongHashMap.java:129)
    at io.streamnative.pulsar.handlers.kop.KafkaTopicConsumerManager.add(KafkaTopicConsumerManager.java:258)
    at io.streamnative.pulsar.handlers.kop.MessageFetchContext.lambda$null$10(MessageFetchContext.java:342)
    at io.streamnative.pulsar.handlers.kop.MessageFetchContext$$Lambda$1021/1882277105.accept(Unknown Source)
    at java.util.concurrent.CompletableFuture.uniAccept(CompletableFuture.java:656)
    at java.util.concurrent.CompletableFuture.uniAcceptStage(CompletableFuture.java:669)
    at java.util.concurrent.CompletableFuture.thenAccept(CompletableFuture.java:1997)
    at io.streamnative.pulsar.handlers.kop.MessageFetchContext.lambda$null$12(MessageFetchContext.java:337)
    at io.streamnative.pulsar.handlers.kop.MessageFetchContext$$Lambda$1020/843113083.accept(Unknown Source)
    at java.util.concurrent.ConcurrentHashMap$EntrySetView.forEach(ConcurrentHashMap.java:4795)
    at io.streamnative.pulsar.handlers.kop.MessageFetchContext.lambda$readMessagesInternal$13(MessageFetchContext.java:328)
    at io.streamnative.pulsar.handlers.kop.MessageFetchContext$$Lambda$1015/1232352552.accept(Unknown Source)
    at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:760)
    at java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:736)
    at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:474)
    at java.util.concurrent.CompletableFuture.complete(CompletableFuture.java:1962)
    at io.streamnative.pulsar.handlers.kop.MessageFetchContext$2.readEntriesComplete(MessageFetchContext.java:479)
    at org.apache.bookkeeper.mledger.impl.OpReadEntry.lambda$checkReadCompletion$2(OpReadEntry.java:156)
    at org.apache.bookkeeper.mledger.impl.OpReadEntry$$Lambda$845/165410888.run(Unknown Source)
    at org.apache.bookkeeper.mledger.util.SafeRun$1.safeRun(SafeRun.java:36)
    at org.apache.bookkeeper.common.util.SafeRunnable.run(SafeRunnable.java:36)
    at org.apache.bookkeeper.common.util.OrderedExecutor$TimedRunnable.run(OrderedExecutor.java:204)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)
    at java.lang.Thread.run(Thread.java:748)
```

The deadlock happens as illustrated in the picture. When consumer C1 sends a poll request with `offset = 500`, KoP dispatches thread A to fetch data from BookKeeper. Consumer C2 then also sends a poll request with `offset = 500`, and KoP dispatches thread B to fetch data.

1. At `t0`, thread A finishes fetching data and runs its callback. KoP then uses **thread C** to fetch data from BookKeeper.
2. At `t1`, thread B calls `consumers.computeIfAbsent` with `key = 500` to get the related cursor. In the concurrent hash map implementation, `computeIfAbsent` **holds the section write lock for `key = 500`** while it runs the computation.
3. At `t2`, thread C finishes fetching data and, in its callback, calls `consumers.putIfAbsent` with `key = 500`. It tries to **acquire the section write lock for `key = 500`**, but thread B has held that lock since `t1`, so thread C waits for it.
4. At `t3`, thread B calls `getPositionForOffset` inside the `computeIfAbsent` operation. `getPositionForOffset` needs thread C to read an entry from BookKeeper, but thread C has been occupied since `t0` and has been blocked on the section write lock since `t2`. Thread B therefore waits for thread C while still holding the section write lock for `key = 500`, and the deadlock occurs.

### Modification

1. Move `getPositionForOffset` out of the concurrent hash map's section write lock.
2. Add a test that simulates the situation above: multiple consumers polling the same topic at the same offset at the same time.
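Modification 1 follows a general rule: don't run slow work that depends on another thread while holding a map's per-key lock. The JDK's `ConcurrentHashMap.computeIfAbsent` Javadoc carries the same warning (the computation should be short and simple, since other threads' updates may block while it runs). Below is a minimal sketch of the fixed shape, assuming the JDK `ConcurrentHashMap` in place of Pulsar's `ConcurrentLongHashMap`; `Position`, `CursorCache`, `lookupPosition`, and `getOrCreate` are hypothetical names, not KoP's actual code, and a single-thread executor stands in for thread C.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;

// Hypothetical stand-in for a cursor position; not a KoP or Pulsar class.
final class Position {
    final long offset;

    Position(long offset) {
        this.offset = offset;
    }
}

// Hypothetical per-offset cache illustrating "do the slow lookup outside the map lock".
final class CursorCache {
    private final ConcurrentHashMap<Long, CompletableFuture<Position>> cursors = new ConcurrentHashMap<>();
    // Plays the role of thread C: the executor that completes BookKeeper reads.
    private final ExecutorService readExecutor;

    CursorCache(ExecutorService readExecutor) {
        this.readExecutor = readExecutor;
    }

    // Hypothetical stand-in for getPositionForOffset: the result is produced on readExecutor.
    private CompletableFuture<Position> lookupPosition(long offset) {
        return CompletableFuture.supplyAsync(() -> new Position(offset), readExecutor);
    }

    // Deadlock-prone shape (do not use): join() waits for readExecutor while the map holds the
    // lock for this key. If the read thread is itself blocked on that lock, neither side progresses.
    //   cursors.computeIfAbsent(offset, k -> CompletableFuture.completedFuture(lookupPosition(k).join()));

    // Fixed shape: start the lookup while holding no map lock, then publish it atomically.
    CompletableFuture<Position> getOrCreate(long offset) {
        CompletableFuture<Position> existing = cursors.get(offset);
        if (existing != null) {
            return existing;
        }
        CompletableFuture<Position> created = lookupPosition(offset);
        CompletableFuture<Position> winner = cursors.putIfAbsent(offset, created);
        // If another consumer published first, reuse its future and drop ours.
        return winner != null ? winner : created;
    }

    int size() {
        return cursors.size();
    }
}
```

The trade-off is that two racing consumers may both start a lookup for the same offset; only one future is cached and the other result is discarded, which is usually cheaper than serializing lookups under the lock.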


Labels

type/enhancement



Motivation

The current ZooKeeper (ZK) stats are very basic, which makes it difficult to get real latency numbers or to understand which requests are being made to the ZK servers.

Modifications

Break down the ZK stats by request type.

http://0.0.0.0:8080/metrics

Result

This makes it possible to build better monitoring dashboards. Since the code is instrumented at load time, there is no performance penalty at runtime.
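The description says the stats are broken down by request type but doesn't show the recording code. As a minimal sketch of such a breakdown, assume a hypothetical hook that the load-time instrumentation calls after each ZK request with the request type name and its latency in microseconds; `ZkRequestStats`, `record`, and the type strings are illustrative names, not Pulsar's actual classes or metric names.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.LongAdder;

// Hypothetical per-request-type stats; names are illustrative, not Pulsar's metric names.
final class ZkRequestStats {
    // Count and total latency for one request type.
    static final class OpStats {
        final LongAdder count = new LongAdder();
        final LongAdder totalMicros = new LongAdder();
    }

    private final ConcurrentHashMap<String, OpStats> byType = new ConcurrentHashMap<>();

    // Called by an assumed instrumentation hook after each ZK request completes.
    void record(String requestType, long latencyMicros) {
        // The mapping function only allocates an empty holder, so it stays short under the map lock.
        OpStats s = byType.computeIfAbsent(requestType, k -> new OpStats());
        s.count.increment();
        s.totalMicros.add(latencyMicros);
    }

    // Average latency per request type, sorted by name for stable output.
    // Count and total are read separately, so a snapshot taken during updates is approximate.
    Map<String, Double> averageMicros() {
        Map<String, Double> out = new TreeMap<>();
        byType.forEach((type, s) -> {
            long n = s.count.sum();
            if (n > 0) {
                out.put(type, (double) s.totalMicros.sum() / n);
            }
        });
        return out;
    }

    long count(String requestType) {
        OpStats s = byType.get(requestType);
        return s == null ? 0 : s.count.sum();
    }
}
```

`LongAdder` keeps contention low when many threads record the same request type, and a dashboard can scrape the per-type averages and counts instead of a single aggregate.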