[SPARK-24215][PySpark] Implement eager evaluation for DataFrame APIs in PySpark #21370

Not sure who the right reviewer is, maybe @rdblue @gatorsmile?

Test build #90834 has finished for PR 21370 at commit

We will wait for the tests to be fixed first. @xuanyuanking could you update the PR description to clarify which screenshot is "before" and which is "after"?

felixcheung left a comment

this will need to escape the values to make sure it is legal html too right?
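
A minimal sketch of the escaping concern raised here, written in plain Python rather than the Scala code under review. Each cell goes through the standard library's `html.escape` before it is wrapped in table tags. The helper name `rows_to_html` and the sample data are hypothetical and do not come from the PR.

```python
from html import escape


def rows_to_html(header, rows):
    """Render a header and rows of strings as an HTML table, escaping every cell."""
    lines = ["<table border='1'>"]
    # Header cells are escaped too, since column names are user-controlled.
    lines.append("<tr><th>" + "</th><th>".join(escape(h) for h in header) + "</th></tr>")
    for row in rows:
        lines.append("<tr><td>" + "</td><td>".join(escape(c) for c in row) + "</td></tr>")
    lines.append("</table>")
    return "\n".join(lines)


html = rows_to_html(["name", "note"], [["a<b", "x & y"]])
```

Without the `escape` calls, a value such as `a<b` would be emitted verbatim and could break the surrounding markup.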

why all these comment changes? could you revert them

Sorry for this, I'll revert the IDE changes.

python/pyspark/sql/dataframe.py

Outdated

let's add all these to documentation too

Got it. Do it in next commit. Thanks for reminding.

when the output is truncated, does jupyter handle that properly?

or does that depend on the html output on L300?

Yes, the truncated string will be shown in the table row, and the truncation is controlled by spark.jupyter.default.truncate.

retest this please

docs/configuration.md

Outdated

Jupyter ..

Copy.

nit: Open -> Enable

docs/configuration.md

Outdated

HTML

Got it.

Yes, you're right, thanks for your guidance. The new patch handles the escaping and adds a new UT. @felixcheung

We don't need .map(_.toString)?

We need this toString because, in the original logic, the appendRowString method handles both column names and data, and it calls toString on the data part.

https://github.com/apache/spark/pull/21370/files/f2bb8f334631734869ddf5d8ef1eca1fa29d334a#diff-7a46f10c3cedbf013cf255564d9483cdL312

I know this is not your change, but the rows is already Seq[Seq[String]] and the row is Seq[String], so I think we can remove it.

I see, the cell.toString has been called here. https://github.com/apache/spark/pull/21370/files/f2bb8f334631734869ddf5d8ef1eca1fa29d334a#diff-7a46f10c3cedbf013cf255564d9483cdR271

Got it, I'll fix this in next commit.

nit: add \n?

The "\n" is added in seperatedLine: https://github.com/apache/spark/pull/21370/files#diff-7a46f10c3cedbf013cf255564d9483cdR300

Hmm, the header looks okay, but the data section will be a long line without \n? How about adding \n here and the data section, and just using "" for the seperatedLine?

Ah, I understand your consideration. I'll add this in next commit.

Thanks, done. feb5f4a.

ditto.

docs/configuration.md

Outdated

spark.jupyter.eagerEval.showRows or something?

Changed to spark.jupyter.eagerEval.showRows, thanks.

docs/configuration.md

Outdated

spark.jupyter.eagerEval.truncate or something?

Yep, change to spark.jupyter.eagerEval.truncate

python/pyspark/sql/dataframe.py

Outdated

What will be shown if spark.jupyter.eagerEval.enabled is False? Fallback the original automatically?

We need to return None if self._eager_eval is False.

What will be shown if spark.jupyter.eagerEval.enabled is False? Fallback the original automatically?

Yes, it will fall back to calling __repr__.

We need to return None if self._eager_eval is False.

Got it, more clear in code logic.
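
The fallback discussed in this thread can be sketched as follows. This is an illustration only: `FakeFrame` is a hypothetical stand-in, not the PySpark `DataFrame`, and the sketch assumes the usual IPython convention that a `_repr_html_` returning `None` means no HTML representation is available, so the frontend uses another representation such as `repr()`.

```python
class FakeFrame:
    """Hypothetical stand-in for a DataFrame; not the PySpark class."""

    def __init__(self, eager_eval):
        self.eager_eval = eager_eval

    def __repr__(self):
        # Plain-text representation that is always available.
        return "FakeFrame[id: bigint]"

    def _repr_html_(self):
        # Returning None signals that there is no HTML representation,
        # which lets the frontend fall back to another one.
        if not self.eager_eval:
            return None
        return "<table><tr><th>id</th></tr></table>"


disabled = FakeFrame(eager_eval=False)
enabled = FakeFrame(eager_eval=True)
```

Returning `None` explicitly, rather than falling off the end of the method, keeps the disabled branch visible in the code.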

python/pyspark/sql/dataframe.py

Outdated

spark.jupyter.default.truncate?

My bad, sorry for this.

python/pyspark/sql/dataframe.py

Outdated

I guess we shouldn't hold these three values but define as @property or refer each time in _repr_html_. Otherwise, we'll hit unexpected behavior, e.g.:

df = ...

spark.conf.set("spark.jupyter.eagerEval.enabled", True)

df

won't show the html.
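
The stale-value problem described in this comment can be reproduced without Spark. In the sketch below, the dictionary `conf` is a hypothetical stand-in for the session's runtime configuration, and both classes are illustrative only. One class reads the flag once at construction time; the other reads it on every access through `@property`.

```python
conf = {}  # hypothetical stand-in for the session's runtime config


class CachedFrame:
    def __init__(self):
        # Read once at construction time: later conf changes are not seen.
        self.eager_eval = conf.get("spark.jupyter.eagerEval.enabled", "false") == "true"


class PropertyFrame:
    @property
    def eager_eval(self):
        # Read on every access: always reflects the current conf.
        return conf.get("spark.jupyter.eagerEval.enabled", "false") == "true"


cached, live = CachedFrame(), PropertyFrame()
# The flag is enabled only after both objects already exist.
conf["spark.jupyter.eagerEval.enabled"] = "true"
```

After the flag is set, `cached.eager_eval` still holds the old value while `live.eager_eval` picks up the change, which is the behavior the reviewer asks for.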

docs/configuration.md

Outdated

true instead of yes?

true is better since the default value is false.

Got it, thanks.

Test build #90872 has finished for PR 21370 at commit

Test build #90871 has finished for PR 21370 at commit

So one thing we might want to take a look at is application/vnd.dataresource+json for tables in the notebooks (see https://github.com/nteract/improved-spark-viz ).

docs/configuration.md

Outdated

nit: Open -> Enable

docs/configuration.md

Outdated

Is it usually we include a JIRA ticket in user document like this? I think we should just briefly describe this feature here.

Yea, I felt the same thing too but there were the same few instances in this page.

docs/configuration.md

Outdated

true is better since the default value is false.

hmm, should we do this html thing in python side?

We can do this on the Python side. I implemented it on the Scala side mainly to reuse the code and logic of show(); it seemed more natural for showing a DataFrame as HTML to call showString.

This should not be done in Dataset.scala.

Can we refactor showString to make it reusable for both show and repr_html?

+1 for reusing relevant parts of show.

Thanks for guidance, I will do this in next commit.

@viirya @gatorsmile @rdblue Sorry for the late commit; the refactor is done in 94f3414. I spent some time testing and implementing the transfer of rows between Python and Scala.

Thanks for all the reviewers' comments; I have addressed them in this commit. Please have a look.

python/pyspark/sql/dataframe.py

Outdated

Add a simple test for _repr_html too?

No problem, I'll add it to SQLTests in the next commit.

Thanks, done. feb5f4a.

docs/configuration.md

Outdated

Should we add sql? E.g., spark.sql.jupyter.eagerEval.enabled? Because this is just for SQL Dataset. @HyukjinKwon @ueshin

Oh, yes, I'd prefer to add sql.

Got it, fix it in next commit.

Is this Jupyter specific? Can we make it more general?

btw the config flag isn't jupyter specific.

Maybe rename to spark.sql.repl.eagerEval.enabled instead? It should also be documented that this is a hint. There's no guarantee that the repl you're using supports eager evaluation.

Thanks, done. feb5f4a.

Test build #90896 has finished for PR 21370 at commit

python/pyspark/sql/dataframe.py

Outdated

Add the function descriptions above this line,

Thanks, done. feb5f4a.

Can we also do something a bit more generic that works for non-Jupyter notebooks as well? For example, in IPython or just plain Python REPL. This is in addition to the repl_html stuff which is really cool and useful.

@rxin,

I'm sorry, but I don't think we need the middle \n, it's okay with only the last one.

"<tr><th>", "</th><th>", "</th></tr>\n"

Got it, I'll change it.

I change the format in python _repr_html_ in 94f3414.

ditto.

Test build #91022 has finished for PR 21370 at commit

Can we accept

I tested offline and it works in both the Python shell and Jupyter. If we agree on this approach, I'll add this support in the next commit, together with the refactor of showString in the Scala Dataset.

python/pyspark/sql/dataframe.py

Outdated

repr -> repl?

I think it works either way. REPL is better in my opinion because these settings should (ideally) apply when using any REPL.

Thanks, change to REPL in 94f3414.

python/pyspark/sql/tests.py

Outdated

We don't have to compile the pattern each time here since it's not going to be reused. You could just put this into re.sub I believe.

Thanks! Fix it in 94f3414.
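
For reference, the suggestion in this thread amounts to the following. The pattern and input string are made up for illustration and are not the ones used in the PR's test.

```python
import re

text = "<td>  1 </td>"

# Compiling first is only worthwhile when the pattern object is reused.
pattern = re.compile(r"\s+")
compiled_result = pattern.sub("", text)

# For a one-off substitution, re.sub accepts the pattern string directly.
direct_result = re.sub(r"\s+", "", text)
```

Both calls produce the same string; the direct form simply drops the intermediate name.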

docs/configuration.md

Outdated

I don't think it is obvious what "showRows" does. I would assume that it is a boolean, but it is a limit instead. What about calling this "limit" or "numRows"?

maxNumRows

Thanks, change it in 94f3414.

docs/configuration.md

Outdated

What is the difference between this and showRows? Why are there two properties?

maybe he wants to follow what dataframe.show does, which truncates num characters within a cell. That's useful for console output, but not so much for notebooks.

Yep, I just want to keep the same behavior of dataframe.show.

That's useful for console output, but not so much for notebooks.

Notebooks have no problem with many characters in a cell, so shall I just delete this?
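
To make the difference between the two properties concrete: the row limit bounds how many rows are shown, while truncate bounds how many characters of each cell are shown. The sketch below illustrates per-cell truncation. It assumes a rule in the style of `show()`, where long cells are cut and end in `...`; that exact rule is not stated in this thread, and `truncate_cell` is a hypothetical helper.

```python
def truncate_cell(cell, truncate):
    """Shorten a cell to at most `truncate` characters; 0 or less disables truncation."""
    if truncate <= 0 or len(cell) <= truncate:
        return cell
    if truncate < 4:
        # Too short to fit an ellipsis, so cut hard.
        return cell[:truncate]
    # Reserve three characters for the ellipsis.
    return cell[:truncate - 3] + "..."


short = truncate_cell("abcdefghij", 5)
untouched = truncate_cell("abcdefghij", 0)
```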

python/pyspark/sql/dataframe.py

Outdated

I agree that it would be better to respect spark.sql.repr.eagerEval.enabled here as well.

Thanks for your reply, this is implemented in 94f3414.

python/pyspark/sql/dataframe.py

Outdated

use named arguments for boolean flags

Thanks, fix in 94f3414.

Test build #91449 has finished for PR 21370 at commit

Merged to master

Thanks @HyukjinKwon and all reviewers.

<td>20</td>
<td>
  Default number of rows in eager evaluation output HTML table generated by <code>_repr_html_</code> or plain text,
  this only take effect when <code>spark.sql.repl.eagerEval.enabled</code> is set to true.

take -> takes

Got it, thanks.

<td>20</td>
<td>
  Default number of truncate in eager evaluation output HTML table generated by <code>_repr_html_</code> or
  plain text, this only take effect when <code>spark.sql.repl.eagerEval.enabled</code> set to true.

take -> takes

Got it, thanks, address in next follow up PR.

@xuanyuanking @HyukjinKwon Sorry for the delay. Super busy in the week of Spark summit. Will carefully review this PR today or tomorrow.

        finally:
            shutil.rmtree(path)

    def test_repr_html(self):

This function only covers the most basic positive case. We need also add more test cases. For example, the results when spark.sql.repl.eagerEval.enabled is set to false.

Got it, the follow up pr will enhance this test.

    truncate: Int,
    vertical: Boolean): Array[Any] = {
  EvaluatePython.registerPicklers()
  val numRows = _numRows.max(0).min(Int.MaxValue - 1)

This should be also part of the conf description.

Got it, will be fixed in another pr.
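
The clamping in the Scala line under review, `_numRows.max(0).min(Int.MaxValue - 1)`, bounds the requested row count to the range 0 through `Int.MaxValue - 1`. A Python rendering of the same arithmetic is sketched below; `clamp_num_rows` is a hypothetical name. The upper bound presumably leaves room for fetching one extra row, but that reading is an assumption and is not stated in the thread.

```python
INT_MAX = 2**31 - 1  # value of Scala's Int.MaxValue


def clamp_num_rows(num_rows):
    """Mirror `_numRows.max(0).min(Int.MaxValue - 1)` from the Scala snippet."""
    return min(max(num_rows, 0), INT_MAX - 1)


negative = clamp_num_rows(-5)
typical = clamp_num_rows(20)
huge = clamp_num_rows(INT_MAX)
```

This is the behavior the reviewer asks to have reflected in the configuration description: negative values behave like 0, and very large values are capped.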

self._support_repr_html = True
if self._eager_eval:
    max_num_rows = max(self._max_num_rows, 0)
    vertical = False

Any discussion about this?

Yes, the discussion before linked below:

#21370 (comment)

and

#21370 (comment)

We need named arguments for boolean flags here, but named arguments don't work when Python calls a _jdf function, so we use this workaround.

  from JVM to Python worker for every task.
  </td>
</tr>
<tr>

All the SQL configurations should follow what we did in the section of Spark SQL https://spark.apache.org/docs/latest/configuration.html#spark-sql.

These confs are not part of spark.sql("SET -v").show(numRows = 200, truncate = false).

Got it, I'll add these configurations to SQLConf.scala in the follow-up PR.

"""Returns true if the eager evaluation enabled.
"""
return self.sql_ctx.getConf(
    "spark.sql.repl.eagerEval.enabled", "false").lower() == "true"

Is that possible we can avoid hard-coding these conf key values? cc @ueshin @HyukjinKwon

Probably, we should access to SQLConf object. 1. Agree with not hardcoding it in general but 2. IMHO I want to avoid Py4J JVM accesses in the test because the test can likely be more flaky up to my knowledge, on the other hand (unlike Scala or Java side).

Maybe we should try to take a look about this hardcoding if we see more occurrences next time

In the ongoing release, a nice-to-have refactoring is to move all the Core Confs into a single file just like what we did in Spark SQL Conf. Default values, boundary checking, types and descriptions. Thus, in PySpark, it would be better to do it starting from now.

    }
  }

  private[sql] def getRowsToPython(

In DataFrameSuite, we have multiple test cases for showString instead of getRows , which is introduced in this PR.

We also need the unit test cases for getRowsToPython.

Got it, the follow up pr I'm working on will add more test for getRows and getRowsToPython.

@xuanyuanking Thanks for your contributions! Test coverage is the most critical when we refactor the existing code and add new features. Hopefully, when you submit new PRs in the future, could you also improve this part?

<td><code>spark.sql.repl.eagerEval.enabled</code></td>
<td>false</td>
<td>
  Enable eager evaluation or not. If true and the REPL you are using supports eager evaluation,

Just a question. When the REPL does not support eager evaluation, could we do anything better instead of silently ignoring the user inputs?

It may be hard to find a better way, because from within the Dataset we can hardly detect that the REPL does not support eager evaluation.

I don't completely follow actually - what makes a "REPL does not support eager evaluation"?

in fact, this "eager evaluation" is just the object rendering support built into the REPL, notebook etc (like print), it's not really designed to be "eager evaluation"

That is true. We are not adding eager execution to our Dataset/DataFrame. We just rely on the REPL to trigger it. We need to update the parameter description to emphasize this.

        return int(self.sql_ctx.getConf(
            "spark.sql.repl.eagerEval.truncate", "20"))

    def __repr__(self):

This PR also changed __repr__. Thus, we need to update the PR title and description. A better PR title should be like Implement eager evaluation for DataFrame APIs in PySpark

Done for this and also the PR description as your comment, sorry for this and I'll pay more attention to that next time when the implementation changes during PR review. Is there any way to change the committed git log? Sorry for this.

Of course, and sorry for the late reply. I'll do this in a follow-up PR and answer all of Xiao's questions tonight. Thanks for all your comments.

@xuanyuanking Just for your reference, for this PR, the PR description can be improved to something like

val toJava: (Any) => Any = EvaluatePython.toJava(_, ArrayType(ArrayType(StringType)))
val iter: Iterator[Array[Byte]] = new SerDeUtil.AutoBatchedPickler(
  rows.iterator.map(toJava))
PythonRDD.serveIterator(iter, "serve-GetRows")

PythonRDD.serveIterator(iter, "serve-GetRows") returns Int, but the return type of getRowsToPython is Array[Any]. How does it work? cc @xuanyuanking @HyukjinKwon

I think we return Array[Any] for PythonRDD.serveIterator too.

def serveIterator(items: Iterator[_], threadName: String): Array[Any] = {

Did I maybe miss something?

Same answer with @HyukjinKwon about the return type, and actually the exact return type we need here is Array[Array[String]], this defined in toJava func.

The changes of the return types were made in a commit [PYSPARK] Update py4j to version 0.10.7.... No PR was opened...

Yup ..

re the py4j commit - there's a good reason for it @gatorsmile

not sure if the change to return type is required with the py4j change though

I think we'd better not discuss this here, though.

…Frame APIs in PySpark

## What changes were proposed in this pull request?

Address comments in #21370 and add more tests.

## How was this patch tested?

Enhance tests in pyspark/sql/test.py and DataFrameSuite.

Author: Yuanjian Li <xyliyuanjian@gmail.com>

Closes #21553 from xuanyuanking/SPARK-24215-follow.

* [SPARK-24381][TESTING] Add unit tests for NOT IN subquery around null values

## What changes were proposed in this pull request?

This PR adds several unit tests along the `cols NOT IN (subquery)` pathway. There is a scattering of tests here and there which cover this codepath, but there doesn't seem to be a unified unit test of the correctness of null-aware anti joins anywhere. I have also added a brief explanation of how this expression behaves in SubquerySuite. Lastly, I made some clarifying changes in the NOT IN pathway in RewritePredicateSubquery.

## How was this patch tested?

Added unit tests! There should be no behavioral change in this PR.

Author: Miles Yucht <miles@databricks.com>

Closes #21425 from mgyucht/spark-24381.

* [SPARK-24334] Fix race condition in ArrowPythonRunner causes unclean shutdown of Arrow memory allocator

## What changes were proposed in this pull request?

There is a race condition of closing Arrow VectorSchemaRoot and Allocator in the writer thread of ArrowPythonRunner.

The race results in a memory leak exception when closing the allocator. This patch removes the closing routine from the TaskCompletionListener and makes the writer thread responsible for cleaning up the Arrow memory.

This issue can be reproduced by this test:

```
def test_memory_leak(self):
    from pyspark.sql.functions import pandas_udf, col, PandasUDFType, array, lit, explode

    # Have all data in a single executor thread so it can trigger the race condition easier
    with self.sql_conf({'spark.sql.shuffle.partitions': 1}):
        df = self.spark.range(0, 1000)
        df = df.withColumn('id', array([lit(i) for i in range(0, 300)])) \
               .withColumn('id', explode(col('id'))) \
               .withColumn('v', array([lit(i) for i in range(0, 1000)]))

        @pandas_udf(df.schema, PandasUDFType.GROUPED_MAP)
        def foo(pdf):
            # Undefined name: raises NameError inside the UDF so the task fails
            xxx
            return pdf

        result = df.groupby('id').apply(foo)

        with QuietTest(self.sc):
            with self.assertRaises(py4j.protocol.Py4JJavaError) as context:
                result.count()
            self.assertTrue('Memory leaked' not in str(context.exception))
```

Note: Because of the race condition, the test case cannot reproduce the issue reliably so it's not added to test cases.

## How was this patch tested?

Because of the race condition, the bug cannot be unit tested easily. So far it has only happened on large amounts of data. This is currently tested manually.

Author: Li Jin <ice.xelloss@gmail.com>

Closes #21397 from icexelloss/SPARK-24334-arrow-memory-leak.

* [SPARK-24373][SQL] Add AnalysisBarrier to RelationalGroupedDataset's and KeyValueGroupedDataset's child

## What changes were proposed in this pull request?

When we create a `RelationalGroupedDataset` or a `KeyValueGroupedDataset` we set its child to the `logicalPlan` of the `DataFrame` we need to aggregate. Since the `logicalPlan` is already analyzed, we should not analyze it again. But this happens when the new plan of the aggregate is analyzed.

The current behavior is likely harmless in most cases, but in other cases re-analyzing an analyzed plan can change it, since the analysis is not idempotent. This can cause issues like the one described in the JIRA (failing to find a cached plan).

The PR adds an `AnalysisBarrier` to the `logicalPlan` that is used as the child of a `RelationalGroupedDataset` or a `KeyValueGroupedDataset`.

## How was this patch tested?

added UT

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #21432 from mgaido91/SPARK-24373.

* [SPARK-24392][PYTHON] Label pandas_udf as Experimental

## What changes were proposed in this pull request?

The pandas_udf functionality was introduced in 2.3.0, but is not completely stable and still evolving. This adds a label to indicate it is still an experimental API.

## How was this patch tested?

NA

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #21435 from BryanCutler/arrow-pandas_udf-experimental-SPARK-24392.

* [SPARK-19613][SS][TEST] Random.nextString is not safe for directory namePrefix

## What changes were proposed in this pull request?

`Random.nextString` is good for generating random string data, but it is not suitable as a directory name prefix in `Utils.createDirectory(tempDir, Random.nextString(10))`. This PR uses a safer directory namePrefix.

```scala

scala> scala.util.Random.nextString(10)

res0: String = 馨쭔ᎰႻ穚䃈兩㻞藑並

```

```scala

StateStoreRDDSuite:

- versioning and immutability

- recovering from files

- usage with iterators - only gets and only puts

- preferred locations using StateStoreCoordinator *** FAILED ***

java.io.IOException: Failed to create a temp directory (under /.../spark/sql/core/target/tmp/StateStoreRDDSuite8712796397908632676) after 10 attempts!

at org.apache.spark.util.Utils$.createDirectory(Utils.scala:295)

at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13$$anonfun$apply$6.apply(StateStoreRDDSuite.scala:152)

at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13$$anonfun$apply$6.apply(StateStoreRDDSuite.scala:149)

at org.apache.spark.sql.catalyst.util.package$.quietly(package.scala:42)

at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13.apply(StateStoreRDDSuite.scala:149)

at org.apache.spark.sql.execution.streaming.state.StateStoreRDDSuite$$anonfun$13.apply(StateStoreRDDSuite.scala:149)

...

- distributed test *** FAILED ***

java.io.IOException: Failed to create a temp directory (under /.../spark/sql/core/target/tmp/StateStoreRDDSuite8712796397908632676) after 10 attempts!

at org.apache.spark.util.Utils$.createDirectory(Utils.scala:295)

```

## How was this patch tested?

Pass the existing tests, including `StateStoreRDDSuite`.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #21446 from dongjoon-hyun/SPARK-19613.

* [SPARK-24377][SPARK SUBMIT] make --py-files work in non pyspark application

## What changes were proposed in this pull request?

Some Spark applications, although they are Java programs, require not only jar dependencies but also Python dependencies. One example is the Livy remote SparkContext application: it is actually an embedded REPL for Scala/Python/R, so it loads not only jar dependencies but also Python and R dependencies, and we need to specify not only "--jars" but also "--py-files".

Currently, --py-files only works for a PySpark application, so it does not work in the case above. This PR proposes removing that restriction.

We also found in testing that "spark.submit.pyFiles" only supports a quite limited scenario (client mode with local deps), so this PR also expands "spark.submit.pyFiles" to be an alternative to --py-files.

## How was this patch tested?

UT added.

Author: jerryshao <sshao@hortonworks.com>

Closes #21420 from jerryshao/SPARK-24377.

* [SPARK-24250][SQL][FOLLOW-UP] support accessing SQLConf inside tasks

## What changes were proposed in this pull request?

We should not stop users from calling `getActiveSession` and `getDefaultSession` in executors. To not break the existing behaviors, we should simply return None.

## How was this patch tested?

N/A

Author: Xiao Li <gatorsmile@gmail.com>

Closes #21436 from gatorsmile/followUpSPARK-24250.

* [SPARK-23991][DSTREAMS] Fix data loss when WAL write fails in allocateBlocksToBatch

When blocks are being allocated to a batch and the WAL write fails, the blocks are still removed from the received block queue. This causes data loss because the next allocation will not find those blocks in the queue.

In this PR, blocks are removed from the received queue only if the WAL write succeeded.
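The ordering change can be illustrated with a minimal sketch, written in Python rather than the Scala of the actual tracker. The names `allocate_blocks_to_batch` and `write_to_wal` and the queue layout are hypothetical; the only point is that blocks leave the queue after the WAL write has succeeded, not before.

```python
from collections import deque


def allocate_blocks_to_batch(queue, write_to_wal):
    """Allocate all queued blocks to a batch, or leave the queue untouched.

    `queue` is a deque of received blocks and `write_to_wal` is a callable
    returning True on success. Both are hypothetical stand-ins.
    """
    candidate = list(queue)      # peek only; do not dequeue yet
    if write_to_wal(candidate):
        queue.clear()            # remove blocks only after a successful write
        return candidate
    return None                  # write failed: blocks stay queued for a retry


received = deque(["block-1", "block-2"])
failed = allocate_blocks_to_batch(received, lambda blocks: False)
still_queued = list(received)
retried = allocate_blocks_to_batch(received, lambda blocks: True)
```

After the failed attempt the two blocks are still queued, so the retry can allocate them.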

Additional unit test.

Author: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Closes #21430 from gaborgsomogyi/SPARK-23991.

Change-Id: I5ead84f0233f0c95e6d9f2854ac2ff6906f6b341

* [SPARK-24371][SQL] Added isInCollection in DataFrame API for Scala and Java.

## What changes were proposed in this pull request?

Implemented **`isInCollection`** in DataFrame API for both Scala and Java, so users can do

```scala
val profileDF = Seq(
  Some(1), Some(2), Some(3), Some(4),
  Some(5), Some(6), Some(7), None
).toDF("profileID")

val validUsers: Seq[Any] = Seq(6, 7.toShort, 8L, "3")

val result = profileDF.withColumn("isValid", $"profileID".isInCollection(validUsers))

result.show(10)
"""
+---------+-------+
|profileID|isValid|
+---------+-------+
|        1|  false|
|        2|  false|
|        3|   true|
|        4|  false|
|        5|  false|
|        6|   true|
|        7|   true|
|     null|   null|
+---------+-------+
""".stripMargin
```

## How was this patch tested?

Several unit tests are added.

Author: DB Tsai <d_tsai@apple.com>

Closes #21416 from dbtsai/optimize-set.

* [SPARK-24365][SQL] Add Data Source write benchmark

## What changes were proposed in this pull request?

Add a Data Source write benchmark so that it is easier to measure writer performance.

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes #21409 from gengliangwang/parquetWriteBenchmark.

* [SPARK-24331][SPARKR][SQL] Adding arrays_overlap, array_repeat, map_entries to SparkR

## What changes were proposed in this pull request?

The PR adds functions `arrays_overlap`, `array_repeat`, `map_entries` to SparkR.

## How was this patch tested?

Tests added into R/pkg/tests/fulltests/test_sparkSQL.R

## Examples

### arrays_overlap

```

df <- createDataFrame(list(list(list(1L, 2L), list(3L, 1L)),

list(list(1L, 2L), list(3L, 4L)),

list(list(1L, NA), list(3L, 4L))))

collect(select(df, arrays_overlap(df[[1]], df[[2]])))

```

```

arrays_overlap(_1, _2)

1 TRUE

2 FALSE

3 NA

```

### array_repeat

```

df <- createDataFrame(list(list("a", 3L), list("b", 2L)))

collect(select(df, array_repeat(df[[1]], df[[2]])))

```

```

array_repeat(_1, _2)

1 a, a, a

2 b, b

```

```

collect(select(df, array_repeat(df[[1]], 2L)))

```

```

array_repeat(_1, 2)

1 a, a

2 b, b

```

### map_entries

```

df <- createDataFrame(list(list(map = as.environment(list(x = 1, y = 2)))))

collect(select(df, map_entries(df$map)))

```

```

map_entries(map)

1 x, 1, y, 2

```

Author: Marek Novotny <mn.mikke@gmail.com>

Closes #21434 from mn-mikke/SPARK-24331.

* [SPARK-23754][PYTHON] Re-raising StopIteration in client code

## What changes were proposed in this pull request?

Make sure that `StopIteration`s raised in users' code do not silently interrupt processing by spark, but are raised as exceptions to the users. The users' functions are wrapped in `safe_iter` (in `shuffle.py`), which re-raises `StopIteration`s as `RuntimeError`s
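Why this matters can be shown in plain Python, without Spark. The sketch below is not Spark's code: `fail_on_stop_iteration` and `user_func` are hypothetical names, and the wrapper only illustrates the idea of converting a `StopIteration` raised in user code into a `RuntimeError`.

```python
import functools


def fail_on_stop_iteration(f):
    """Wrap `f` so a StopIteration raised inside it surfaces as a RuntimeError."""
    @functools.wraps(f)
    def wrapper(*args, **kwargs):
        try:
            return f(*args, **kwargs)
        except StopIteration as exc:
            raise RuntimeError("StopIteration raised in user code") from exc
    return wrapper


def user_func(x):
    if x == 2:
        raise StopIteration()   # a user bug, e.g. an unguarded next() call
    return x * 10


# Without the wrapper, map() treats the exception as normal exhaustion
# and the remaining elements are silently dropped.
truncated = list(map(user_func, range(5)))

# With the wrapper, the same bug becomes a visible error.
try:
    list(map(fail_on_stop_iteration(user_func), range(5)))
    surfaced = False
except RuntimeError:
    surfaced = True
```

Here `truncated` ends up as `[0, 10]` instead of five elements, which is the kind of silent data loss the wrapping is meant to prevent.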

## How was this patch tested?

Unit tests, making sure that the exceptions are indeed raised. I am not sure how to check whether a `Py4JJavaError` contains my exception, so I simply looked for the exception message in the java exception's `toString`. Can you propose a better way?

## License

This is my original work, licensed in the same way as spark

Author: e-dorigatti <emilio.dorigatti@gmail.com>

Author: edorigatti <emilio.dorigatti@gmail.com>

Closes #21383 from e-dorigatti/fix_spark_23754.

* [SPARK-24419][BUILD] Upgrade SBT to 0.13.17 with Scala 2.10.7 for JDK9+

## What changes were proposed in this pull request?

Upgrade SBT to 0.13.17 with Scala 2.10.7 for JDK9+

## How was this patch tested?

Existing tests

Author: DB Tsai <d_tsai@apple.com>

Closes #21458 from dbtsai/sbt.

* [SPARK-24369][SQL] Correct handling for multiple distinct aggregations having the same argument set

## What changes were proposed in this pull request?

This PR fixes an issue that occurs when multiple distinct aggregations have the same argument set, e.g.,

```
scala> :paste
val df = sql(
  s"""SELECT corr(DISTINCT x, y), corr(DISTINCT y, x), count(*)
     | FROM (VALUES (1, 1), (2, 2), (2, 2)) t(x, y)
   """.stripMargin)

java.lang.RuntimeException
You hit a query analyzer bug. Please report your query to Spark user mailing list.
```

The root cause is that `RewriteDistinctAggregates` can't detect multiple distinct aggregations if they have the same argument set. This pr modified code so that `RewriteDistinctAggregates` could count the number of aggregate expressions with `isDistinct=true`.
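A toy model of that detection problem, in Python and not taken from `RewriteDistinctAggregates` itself. It assumes the decision is based on how many argument-set groups are seen; the tuple representation of an aggregate is hypothetical.

```python
# Each distinct aggregate is modelled as (function name, ordered arguments).
distinct_aggs = [("corr", ("x", "y")), ("corr", ("y", "x"))]

# Grouping by the *set* of arguments collapses both expressions into one group.
groups = {}
for name, args in distinct_aggs:
    groups.setdefault(frozenset(args), []).append((name, args))

num_groups = len(groups)                 # a single group: {x, y}
num_distinct_aggs = len(distinct_aggs)   # two distinct aggregate expressions

# A check on the group count alone misses the second aggregate;
# counting the distinct aggregate expressions does not.
detected_by_group_count = num_groups > 1
detected_by_expression_count = num_distinct_aggs > 1
```

In this model only the expression count reports that more than one distinct aggregation is present.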

## How was this patch tested?

Added tests in `DataFrameAggregateSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #21443 from maropu/SPARK-24369.

* [SPARK-24384][PYTHON][SPARK SUBMIT] Add .py files correctly into PythonRunner in submit with client mode in spark-submit

## What changes were proposed in this pull request?

In client mode specifically, a .py file passed via --py-files cannot be imported before context initialization when the application is a Python file. See below:

```
$ cat /home/spark/tmp.py
def testtest():
    return 1
```

This works:

```

$ cat app.py

import pyspark

pyspark.sql.SparkSession.builder.getOrCreate()

import tmp

print("************************%s" % tmp.testtest())

$ ./bin/spark-submit --master yarn --deploy-mode client --py-files /home/spark/tmp.py app.py

...

************************1

```

but this doesn't:

```

$ cat app.py

import pyspark

import tmp

pyspark.sql.SparkSession.builder.getOrCreate()

print("************************%s" % tmp.testtest())

$ ./bin/spark-submit --master yarn --deploy-mode client --py-files /home/spark/tmp.py app.py

Traceback (most recent call last):

File "/home/spark/spark/app.py", line 2, in <module>

import tmp

ImportError: No module named tmp

```

### How did it happen?

In client mode specifically, the paths are added into PythonRunner as they are:

https://github.com/apache/spark/blob/628c7b517969c4a7ccb26ea67ab3dd61266073ca/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala#L430

https://github.com/apache/spark/blob/628c7b517969c4a7ccb26ea67ab3dd61266073ca/core/src/main/scala/org/apache/spark/deploy/PythonRunner.scala#L49-L88

The problem is that a .py file shouldn't be added as is, since `PYTHONPATH` expects a directory or an archive like zip or egg.

### How does this PR fix?

We shouldn't simply just add its parent directory because other files in the parent directory could also be added into the `PYTHONPATH` in client mode before context initialization.

Therefore, we copy .py files into a temp directory for .py files and add it to `PYTHONPATH`.

## How was this patch tested?

Unit tests are added, and the change was manually tested in both standalone and yarn client modes with submit.

Author: hyukjinkwon <gurwls223@apache.org>

Closes #21426 from HyukjinKwon/SPARK-24384.

* [SPARK-23161][PYSPARK][ML] Add missing APIs to Python GBTClassifier

## What changes were proposed in this pull request?

Add featureSubsetStrategy in GBTClassifier and GBTRegressor. Also make GBTClassificationModel inherit from JavaClassificationModel instead of prediction model so it will have numClasses.

## How was this patch tested?

Add tests in doctest

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes #21413 from huaxingao/spark-23161.

* [SPARK-23901][SQL] Add masking functions

## What changes were proposed in this pull request?

The PR adds the masking functions as they are described in Hive's documentation: https://cwiki.apache.org/confluence/display/Hive/LanguageManual+UDF#LanguageManualUDF-DataMaskingFunctions.

This means that only `string`s are accepted as parameter for the masking functions.

## How was this patch tested?

added UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #21246 from mgaido91/SPARK-23901.

* [SPARK-24276][SQL] Order of literals in IN should not affect semantic equality

## What changes were proposed in this pull request?

When two `In` operators are created with the same list of values, but different order, we are considering them as semantically different. This is wrong, since they have the same semantic meaning.

The PR adds a canonicalization rule which orders the literals in the `In` operator so the semantic equality works properly.
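A minimal Python sketch of that idea, assuming a toy `In` class with integer literals. It is not Catalyst code; the class and method names are hypothetical and only show how ordering the literals makes the comparison order-insensitive.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class In:
    """Toy stand-in for an `In` expression: a column name plus literal values."""
    column: str
    values: Tuple[int, ...]

    def canonicalized(self) -> "In":
        # Order the literals so that lists differing only in order compare equal.
        return In(self.column, tuple(sorted(self.values)))

    def semantic_equals(self, other: "In") -> bool:
        return self.canonicalized() == other.canonicalized()


a = In("id", (1, 2, 3))
b = In("id", (3, 1, 2))
plain_equal = a == b
semantically_equal = a.semantic_equals(b)
```

Plain equality still distinguishes the two expressions, while the canonicalized comparison treats them as the same.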

## How was this patch tested?

added UT

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #21331 from mgaido91/SPARK-24276.

* [SPARK-24337][CORE] Improve error messages for Spark conf values

## What changes were proposed in this pull request?

Improve the exception messages when retrieving Spark conf values to include the key name when the value is invalid.
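As a rough illustration in Python (not the `SparkConf` implementation), a getter can catch the parse failure and re-raise it with the key name attached. `ToyConf`, `get_int`, and the message wording are hypothetical.

```python
class ToyConf:
    """Hypothetical stand-in for a key/value configuration object."""

    def __init__(self, settings):
        self._settings = dict(settings)

    def get_int(self, key):
        raw = self._settings[key]
        try:
            return int(raw)
        except ValueError as exc:
            # Re-raise with the key name so the user knows which setting is wrong.
            raise ValueError(
                "Illegal value for config key %s: %s" % (key, exc)
            ) from exc


conf = ToyConf({"spark.executor.cores": "four"})
try:
    conf.get_int("spark.executor.cores")
    message = None
except ValueError as exc:
    message = str(exc)
```

The resulting message names `spark.executor.cores`, whereas the bare `int()` error would only mention the bad value.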

## How was this patch tested?

Unit tests for all get* operations in SparkConf that require a specific value format

Author: William Sheu <william.sheu@databricks.com>

Closes #21454 from PenguinToast/SPARK-24337-spark-config-errors.

* [SPARK-24146][PYSPARK][ML] spark.ml parity for sequential pattern mining - PrefixSpan: Python API

## What changes were proposed in this pull request?

spark.ml parity for sequential pattern mining - PrefixSpan: Python API

## How was this patch tested?

doctests

Author: WeichenXu <weichen.xu@databricks.com>

Closes #21265 from WeichenXu123/prefix_span_py.

* [WEBUI] Avoid possibility of script in query param keys

As discussed separately, this avoids the possibility of XSS on certain request param keys.

CC vanzin

Author: Sean Owen <srowen@gmail.com>

Closes #21464 from srowen/XSS2.

* [SPARK-24414][UI] Calculate the correct number of tasks for a stage.

This change takes into account all non-pending tasks when calculating

the number of tasks to be shown. This also means that when the stage

is pending, the task table (or, in fact, most of the data in the stage

page) will not be rendered.

I also fixed the label when the known number of tasks is larger than

the recorded number of tasks (it was inverted).

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #21457 from vanzin/SPARK-24414.

* [SPARK-24397][PYSPARK] Added TaskContext.getLocalProperty(key) in Python

## What changes were proposed in this pull request?

This adds a new API `TaskContext.getLocalProperty(key)` to the Python TaskContext. It mirrors the Java TaskContext API of returning a string value if the key exists, or None if the key does not exist.

## How was this patch tested?

New test added.

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #21437 from tdas/SPARK-24397.

* [SPARK-23900][SQL] format_number support user specifed format as argument

## What changes were proposed in this pull request?

`format_number` supports a user-specified format as an argument. For example:

```sql

spark-sql> SELECT format_number(12332.123456, '##################.###');

12332.123

```

## How was this patch tested?

unit test

Author: Yuming Wang <yumwang@ebay.com>

Closes #21010 from wangyum/SPARK-23900.

* [SPARK-24232][K8S] Add support for secret env vars

## What changes were proposed in this pull request?

* Allows referring to a secret as an env var.

* Introduces new config properties in the form: spark.kubernetes.{driver,executor}.secretKeyRef.ENV_NAME=name:key

ENV_NAME is case sensitive.

* Updates docs.

* Adds required unit tests.

## How was this patch tested?

Manually tested and confirmed that the secrets exist in driver's and executor's container env.

Also job finished successfully.

First created a secret with the following yaml:

```
apiVersion: v1
kind: Secret
metadata:
  name: test-secret
data:
  username: c3RhdnJvcwo=
  password: Mzk1MjgkdmRnN0pi
-------
$ echo -n 'stavros' | base64
c3RhdnJvcw==
$ echo -n '39528$vdg7Jb' | base64
Mzk1MjgkdmRnN0pi
```
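Values under `data` in a Kubernetes `Secret` are base64-encoded, so it can help to check the encodings independently. The following Python sketch uses only the standard library and the literal values from the manifest above; the variable names are arbitrary.

```python
import base64

# `echo -n` omits the trailing newline; a plain `echo` would include it.
username_no_newline = base64.b64encode(b"stavros").decode()      # "c3RhdnJvcw=="
username_with_newline = base64.b64encode(b"stavros\n").decode()  # "c3RhdnJvcwo="
password = base64.b64encode(b"39528$vdg7Jb").decode()            # "Mzk1MjgkdmRnN0pi"

# Decoding the manifest values shows what the containers will see.
decoded_username = base64.b64decode("c3RhdnJvcwo=").decode()
decoded_password = base64.b64decode("Mzk1MjgkdmRnN0pi").decode()
```

Note that the `username` value in the manifest decodes to `stavros` followed by a newline, which is what a plain `echo` without `-n` would produce.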

Run a job as follows:

```
./bin/spark-submit \
  --master k8s://http://localhost:9000 \
  --deploy-mode cluster \
  --name spark-pi \
  --class org.apache.spark.examples.SparkPi \
  --conf spark.executor.instances=1 \
  --conf spark.kubernetes.container.image=skonto/spark:k8envs3 \
  --conf spark.kubernetes.driver.secretKeyRef.MY_USERNAME=test-secret:username \
  --conf spark.kubernetes.driver.secretKeyRef.My_password=test-secret:password \
  --conf spark.kubernetes.executor.secretKeyRef.MY_USERNAME=test-secret:username \
  --conf spark.kubernetes.executor.secretKeyRef.My_password=test-secret:password \
  local:///opt/spark/examples/jars/spark-examples_2.11-2.4.0-SNAPSHOT.jar 10000
```

Secret loaded correctly at the driver container:

Also if I log into the exec container:

kubectl exec -it spark-pi-1526555613156-exec-1 bash

bash-4.4# env

> SPARK_EXECUTOR_MEMORY=1g

> SPARK_EXECUTOR_CORES=1

> LANG=C.UTF-8

> HOSTNAME=spark-pi-1526555613156-exec-1

> SPARK_APPLICATION_ID=spark-application-1526555618626

> **MY_USERNAME=stavros**

>

> JAVA_HOME=/usr/lib/jvm/java-1.8-openjdk

> KUBERNETES_PORT_443_TCP_PROTO=tcp

> KUBERNETES_PORT_443_TCP_ADDR=10.100.0.1

> JAVA_VERSION=8u151

> KUBERNETES_PORT=tcp://10.100.0.1:443

> PWD=/opt/spark/work-dir

> HOME=/root

> SPARK_LOCAL_DIRS=/var/data/spark-b569b0ae-b7ef-4f91-bcd5-0f55535d3564

> KUBERNETES_SERVICE_PORT_HTTPS=443

> KUBERNETES_PORT_443_TCP_PORT=443

> SPARK_HOME=/opt/spark

> SPARK_DRIVER_URL=spark://CoarseGrainedSchedulerspark-pi-1526555613156-driver-svc.default.svc:7078

> KUBERNETES_PORT_443_TCP=tcp://10.100.0.1:443

> SPARK_EXECUTOR_POD_IP=9.0.9.77

> TERM=xterm

> SPARK_EXECUTOR_ID=1

> SHLVL=1

> KUBERNETES_SERVICE_PORT=443

> SPARK_CONF_DIR=/opt/spark/conf

> PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/lib/jvm/java-1.8-openjdk/jre/bin:/usr/lib/jvm/java-1.8-openjdk/bin

> JAVA_ALPINE_VERSION=8.151.12-r0

> KUBERNETES_SERVICE_HOST=10.100.0.1

> **My_password=39528$vdg7Jb**

> _=/usr/bin/env

>

Author: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com>

Closes #21317 from skonto/k8s-fix-env-secrets.

* [MINOR][YARN] Add YARN-specific credential providers in debug logging message

This PR adds a debugging log for YARN-specific credential providers which is loaded by service loader mechanism.

It took me a while to debug if it's actually loaded or not. I had to explicitly set the deprecated configuration and check if that's actually being loaded.

The change scope is manually tested. Logs are like:

```

Using the following builtin delegation token providers: hadoopfs, hive, hbase.

Using the following YARN-specific credential providers: yarn-test.

```

Author: hyukjinkwon <gurwls223@apache.org>

Closes #21466 from HyukjinKwon/minor-log.

Change-Id: I18e2fb8eeb3289b148f24c47bb3130a560a881cf

* [SPARK-24330][SQL] Refactor ExecuteWriteTask and Use `while` in writing files

## What changes were proposed in this pull request?

1. Refactor ExecuteWriteTask in FileFormatWriter to reduce common logic and improve readability.

After the change, callers only need to call `commit()` or `abort()` at the end of the task.

Also there is less code in `SingleDirectoryWriteTask` and `DynamicPartitionWriteTask`.

Definitions of related classes are moved to a new file, and `ExecuteWriteTask` is renamed to `FileFormatDataWriter`.

2. As per code style guide: https://github.com/databricks/scala-style-guide#traversal-and-zipwithindex , we avoid using `for` for looping in [FileFormatWriter](https://github.com/apache/spark/pull/21381/files#diff-3b69eb0963b68c65cfe8075f8a42e850L536) , or `foreach` in [WriteToDataSourceV2Exec](https://github.com/apache/spark/pull/21381/files#diff-6fbe10db766049a395bae2e785e9d56eL119).

In such a critical code path, using `while` is better for performance.

## How was this patch tested?

Existing unit test.

I tried the microbenchmark in https://github.com/apache/spark/pull/21409

| Workload | Before changes (Best/Avg Time (ms)) | After changes (Best/Avg Time (ms)) |
| --- | --- | --- |
| Output Single Int Column | 2018 / 2043 | 2096 / 2236 |
| Output Single Double Column | 1978 / 2043 | 2013 / 2018 |
| Output Int and String Column | 6332 / 6706 | 6162 / 6298 |
| Output Partitions | 4458 / 5094 | 3792 / 4008 |
| Output Buckets | 5695 / 6102 | 5120 / 5154 |

Also a microbenchmark on my laptop for general comparison among while/foreach/for :

```
class Writer {
  var sum = 0L
  def write(l: Long): Unit = sum += l
}

def testWhile(iterator: Iterator[Long]): Long = {
  val w = new Writer
  while (iterator.hasNext) {
    w.write(iterator.next())
  }
  w.sum
}

def testForeach(iterator: Iterator[Long]): Long = {
  val w = new Writer
  iterator.foreach(w.write)
  w.sum
}

def testFor(iterator: Iterator[Long]): Long = {
  val w = new Writer
  for (x <- iterator) {
    w.write(x)
  }
  w.sum
}

val data = 0L to 100000000L

val start = System.nanoTime
(0 to 10).foreach(_ => testWhile(data.iterator))
println("benchmark while: " + (System.nanoTime - start)/1000000)

val start2 = System.nanoTime
(0 to 10).foreach(_ => testForeach(data.iterator))
println("benchmark foreach: " + (System.nanoTime - start2)/1000000)

val start3 = System.nanoTime
(0 to 10).foreach(_ => testFor(data.iterator))
println("benchmark for: " + (System.nanoTime - start3)/1000000)
```

Benchmark result:

`while`: 15401 ms

`foreach`: 43034 ms

`for`: 41279 ms

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes #21381 from gengliangwang/refactorExecuteWriteTask.

* [SPARK-24444][DOCS][PYTHON] Improve Pandas UDF docs to explain column assignment

## What changes were proposed in this pull request?

Added sections to pandas_udf docs, in the grouped map section, to indicate columns are assigned by position.

## How was this patch tested?

NA

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #21471 from BryanCutler/arrow-doc-pandas_udf-column_by_pos-SPARK-21427.

* [SPARK-24326][MESOS] add support for local:// scheme for the app jar

## What changes were proposed in this pull request?

* Adds support for local:// scheme like in k8s case for image based deployments where the jar is already in the image. Affects cluster mode and the mesos dispatcher. Covers also file:// scheme. Keeps the default case where jar resolution happens on the host.

## How was this patch tested?

Dispatcher image with the patch, use it to start DC/OS Spark service:

skonto/spark-local-disp:test

Test image with my application jar located at the root folder:

skonto/spark-local:test

Dockerfile for that image.

From mesosphere/spark:2.3.0-2.2.1-2-hadoop-2.6

COPY spark-examples_2.11-2.2.1.jar /

WORKDIR /opt/spark/dist

Tests:

The following work as expected:

* local normal example

```

dcos spark run --submit-args="--conf spark.mesos.appJar.local.resolution.mode=container --conf spark.executor.memory=1g --conf spark.mesos.executor.docker.image=skonto/spark-local:test

--conf spark.executor.cores=2 --conf spark.cores.max=8

--class org.apache.spark.examples.SparkPi local:///spark-examples_2.11-2.2.1.jar"

```

* make sure the flag does not affect other uris

```

dcos spark run --submit-args="--conf spark.mesos.appJar.local.resolution.mode=container --conf spark.executor.memory=1g --conf spark.executor.cores=2 --conf spark.cores.max=8

--class org.apache.spark.examples.SparkPi https://s3-eu-west-1.amazonaws.com/fdp-stavros-test/spark-examples_2.11-2.1.1.jar"

```

* normal example no local

```

dcos spark run --submit-args="--conf spark.executor.memory=1g --conf spark.executor.cores=2 --conf spark.cores.max=8

--class org.apache.spark.examples.SparkPi https://s3-eu-west-1.amazonaws.com/fdp-stavros-test/spark-examples_2.11-2.1.1.jar"

```

The following fails

* uses local with no setting, default is host.

```

dcos spark run --submit-args="--conf spark.executor.memory=1g --conf spark.mesos.executor.docker.image=skonto/spark-local:test

--conf spark.executor.cores=2 --conf spark.cores.max=8

--class org.apache.spark.examples.SparkPi local:///spark-examples_2.11-2.2.1.jar"

```

Author: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com>

Closes #21378 from skonto/local-upstream.

* [SPARK-23920][SQL] add array_remove to remove all elements that equal element from array

## What changes were proposed in this pull request?

add array_remove to remove all elements that equal element from array

## How was this patch tested?

add unit tests

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes #21069 from huaxingao/spark-23920.

* [SPARK-24351][SS] offsetLog/commitLog purge thresholdBatchId should be computed with current committed epoch but not currentBatchId in CP mode

## What changes were proposed in this pull request?

Compute the thresholdBatchId to purge metadata based on current committed epoch instead of currentBatchId in CP mode to avoid cleaning all the committed metadata in some case as described in the jira [SPARK-24351](https://issues.apache.org/jira/browse/SPARK-24351).

## How was this patch tested?

Add new unit test.

Author: Huang Tengfei <tengfei.h@gmail.com>

Closes #21400 from ivoson/branch-cp-meta.

* Revert "[SPARK-24369][SQL] Correct handling for multiple distinct aggregations having the same argument set"

This reverts commit 1e46f92f956a00d04d47340489b6125d44dbd47b.

* [INFRA] Close stale PRs.

Closes #21444

* [SPARK-24340][CORE] Clean up non-shuffle disk block manager files following executor exits on a Standalone cluster

## What changes were proposed in this pull request?

Currently we only clean up the local directories when an application is removed. However, when executors die and restart repeatedly, many temp files are left untouched in the local directories, which is undesirable and can gradually use up disk space.

We can detect executor death in the Worker and clean up the non-shuffle files (files not ending with ".index" or ".data") in the local directories. We should not touch the shuffle files, since they are expected to be used by the external shuffle service.

The scope of this PR is limited to implementing the cleanup logic on a Standalone cluster. We defer to experts familiar with other cluster managers (YARN/Mesos/K8s) to determine whether it is worth adding similar support.
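The file-selection rule can be sketched in a few lines of Python. This is an illustration of the suffix filter only, not the Worker code: `clean_non_shuffle_files` and the demo file names are hypothetical.

```python
import os
import tempfile

SHUFFLE_SUFFIXES = (".index", ".data")


def clean_non_shuffle_files(local_dir):
    """Delete every file under `local_dir` that is not a shuffle file."""
    removed = []
    for root, _dirs, files in os.walk(local_dir):
        for name in files:
            if not name.endswith(SHUFFLE_SUFFIXES):
                os.remove(os.path.join(root, name))
                removed.append(name)
    return sorted(removed)


# Demo on a throwaway directory with two shuffle files and one temp file.
demo_dir = tempfile.mkdtemp()
for name in ("shuffle_0_0_0.index", "shuffle_0_0_0.data", "temp_local_1234"):
    open(os.path.join(demo_dir, name), "w").close()

removed = clean_non_shuffle_files(demo_dir)
remaining = sorted(os.listdir(demo_dir))
```

Only the temp file is removed; the `.index` and `.data` files stay in place for the external shuffle service.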

## How was this patch tested?

Add new test suite to cover.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #21390 from jiangxb1987/cleanupNonshuffleFiles.

* [SPARK-23668][K8S] Added missing config property in running-on-kubernetes.md

## What changes were proposed in this pull request?

PR https://github.com/apache/spark/pull/20811 introduced a new Spark configuration property `spark.kubernetes.container.image.pullSecrets` for specifying image pull secrets. However, the documentation wasn't updated accordingly. This PR adds the property introduced into running-on-kubernetes.md.

## How was this patch tested?

N/A.

foxish mccheah please help merge this. Thanks!

Author: Yinan Li <ynli@google.com>

Closes #21480 from liyinan926/master.

* [SPARK-24356][CORE] Duplicate strings in File.path managed by FileSegmentManagedBuffer

This patch eliminates duplicate strings that come from the 'path' field of

java.io.File objects created by FileSegmentManagedBuffer. That is, we want

to avoid the situation when multiple File instances for the same pathname

"foo/bar" are created, each with a separate copy of the "foo/bar" String

instance. In some scenarios such duplicate strings may waste a lot of memory

(~ 10% of the heap). To avoid that, we intern the pathname with

String.intern(), and before that we make sure that it's in a normalized

form (contains no "//", "///" etc.) Otherwise, the code in java.io.File

would normalize it later, creating a new "foo/bar" String copy.

Unfortunately, the normalization code that java.io.File uses internally

is in the package-private class java.io.FileSystem, so we cannot call it

here directly.

## What changes were proposed in this pull request?

Added code to ExternalShuffleBlockResolver.getFile(), that normalizes and then interns the pathname string before passing it to the File() constructor.
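A rough Python analogue of normalize-then-intern, using `sys.intern` in place of Java's `String.intern()`. The helper name is hypothetical, and the normalization here only collapses repeated slashes, which is an assumption about what "normalized" needs to cover.

```python
import re
import sys


def normalized_interned_path(path):
    """Collapse repeated slashes, then intern the result."""
    normalized = re.sub(r"/{2,}", "/", path)
    return sys.intern(normalized)


# Build two pathnames at runtime so they start out as separate objects.
first = normalized_interned_path("/".join(["foo", "", "bar"]))        # from "foo//bar"
second = normalized_interned_path("/".join(["foo", "", "", "bar"]))   # from "foo///bar"
same_object = first is second
```

Both calls return the same string object, so many path holders can share a single copy of the pathname.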

## How was this patch tested?

Added unit test

Author: Misha Dmitriev <misha@cloudera.com>

Closes #21456 from countmdm/misha/spark-24356.

* [SPARK-24455][CORE] fix typo in TaskSchedulerImpl comment

Change `runTasks` to `submitTasks` in a comment in TaskSchedulerImpl.scala.

Author: xueyu <xueyu@yidian-inc.com>

Author: Xue Yu <278006819@qq.com>

Closes #21485 from xueyumusic/fixtypo1.

* [SPARK-24369][SQL] Correct handling for multiple distinct aggregations having the same argument set

## What changes were proposed in this pull request?

bring back https://github.com/apache/spark/pull/21443

This is a different approach: just change the check to count distinct columns with `toSet`
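One possible reading of the `toSet` change, sketched in plain Python rather than the planner's Scala: distinct aggregates whose arguments differ only in order should count as sharing one argument set. The function name and the column names below are hypothetical and are not taken from the patch.

```python
from typing import Iterable, Sequence


def count_distinct_argument_sets(distinct_aggs: Iterable[Sequence[str]]) -> int:
    """Count how many different argument *sets* the distinct aggregates use.

    Hypothetical illustration: each aggregate is represented only by the
    names of the columns it is applied to.
    """
    # frozenset ignores argument order and repeated arguments.
    return len({frozenset(args) for args in distinct_aggs})


# Two distinct aggregates over the same columns, listed in different order.
aggs = [("a", "b"), ("b", "a")]
print(count_distinct_argument_sets(aggs))   # compared as sets
print(len({tuple(args) for args in aggs}))  # compared as ordered sequences
```

Comparing ordered sequences would treat the two aggregates as using different arguments, while comparing sets treats them as the same.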

## How was this patch tested?

a new test to verify the planner behavior.

Author: Wenchen Fan <wenchen@databricks.com>

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #21487 from cloud-fan/back.

* [SPARK-23786][SQL] Checking column names of csv headers

## What changes were proposed in this pull request?

Currently, column names in CSV file headers are not checked against the provided schema of the CSV data. This could cause errors like those shown in [SPARK-23786](https://issues.apache.org/jira/browse/SPARK-23786) and https://github.com/apache/spark/pull/20894#issuecomment-375957777. I introduced a new CSV option, `enforceSchema`. If it is enabled (the default is `true`), Spark forcibly applies the provided or inferred schema to CSV files. In that case, CSV headers are ignored and not checked against the schema. If `enforceSchema` is set to `false`, additional checks can be performed. For example, if the columns in the CSV header and in the schema are ordered differently, the following exception is thrown:

```

java.lang.IllegalArgumentException: CSV file header does not contain the expected fields

Header: depth, temperature

Schema: temperature, depth

CSV file: marina.csv

```
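The behaviour described above can be sketched in plain Python. This is a simplified stand-in, not Spark's implementation: `check_header` and its parameters are hypothetical, `ValueError` stands in for `IllegalArgumentException`, and the comparison is a plain ordered, case-sensitive match.

```python
from typing import Sequence


def check_header(
    header: Sequence[str],
    schema_fields: Sequence[str],
    file_name: str,
    enforce_schema: bool = True,
) -> None:
    """Validate a CSV header against the schema unless the schema is enforced.

    Hypothetical helper mirroring the description of `enforceSchema`.
    """
    if enforce_schema:
        # The schema is applied forcibly; the header is ignored.
        return
    if list(header) != list(schema_fields):
        raise ValueError(
            "CSV file header does not contain the expected fields\n"
            f" Header: {', '.join(header)}\n"
            f" Schema: {', '.join(schema_fields)}\n"
            f"CSV file: {file_name}"
        )


# With the default (enforce_schema=True) the mismatch goes unnoticed.
check_header(["depth", "temperature"], ["temperature", "depth"], "marina.csv")
```

Passing `enforce_schema=False` with the same arguments raises the error, which is the case the exception above illustrates.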

## How was this patch tested?

The changes were tested by existing tests of CSVSuite and by 2 new tests.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Author: Maxim Gekk <max.gekk@gmail.com>

Closes #20894 from MaxGekk/check-column-names.

* [SPARK-23903][SQL] Add support for date extract

## What changes were proposed in this pull request?

Add support for date `extract` function:

```sql

spark-sql> SELECT EXTRACT(YEAR FROM TIMESTAMP '2000-12-16 12:21:13');

2000

```

The supported fields are the same as in [Hive](https://github.com/apache/hive/blob/rel/release-2.3.3/ql/src/java/org/apache/hadoop/hive/ql/parse/IdentifiersParser.g#L308-L316): `YEAR`, `QUARTER`, `MONTH`, `WEEK`, `DAY`, `DAYOFWEEK`, `HOUR`, `MINUTE`, `SECOND`.

## How was this patch tested?

unit tests

Author: Yuming Wang <yumwang@ebay.com>

Closes #21479 from wangyum/SPARK-23903.

* [SPARK-21896][SQL] Fix StackOverflow caused by window functions inside aggregate functions

## What changes were proposed in this pull request?

This PR explicitly prohibits window functions inside aggregates. Currently, this will cause StackOverflow during analysis. See PR #19193 for previous discussion.

## How was this patch tested?

This PR comes with a dedicated unit test.

Author: aokolnychyi <anton.okolnychyi@sap.com>

Closes #21473 from aokolnychyi/fix-stackoverflow-window-funcs.

* [SPARK-24290][ML] add support for Array input for instrumentation.logNamedValue

## What changes were proposed in this pull request?

Extend `instrumentation.logNamedValue` to support Array input, and change the logging for "clusterSizes" to use the new method.

## How was this patch tested?

N/A

Please review http://spark.apache.org/contributing.html before opening a pull request.

Author: Lu WANG <lu.wang@databricks.com>

Closes #21347 from ludatabricks/SPARK-24290.

* [SPARK-24300][ML] change the way to set seed in ml.cluster.LDASuite.generateLDAData

## What changes were proposed in this pull request?

Use a different RNG in each partition.

## How was this patch tested?

manually


Author: Lu WANG <lu.wang@databricks.com>

Closes #21492 from ludatabricks/SPARK-24300.

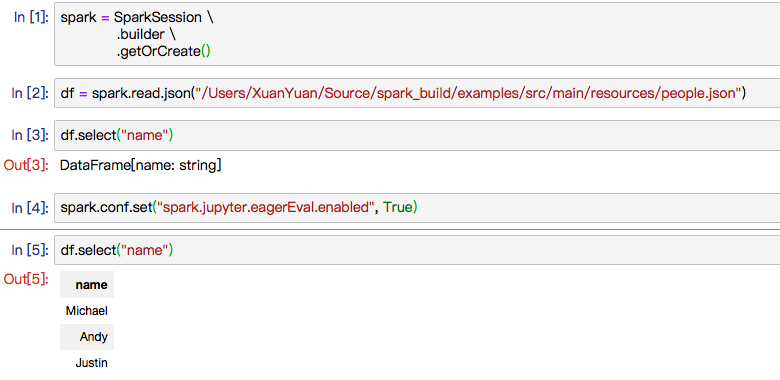

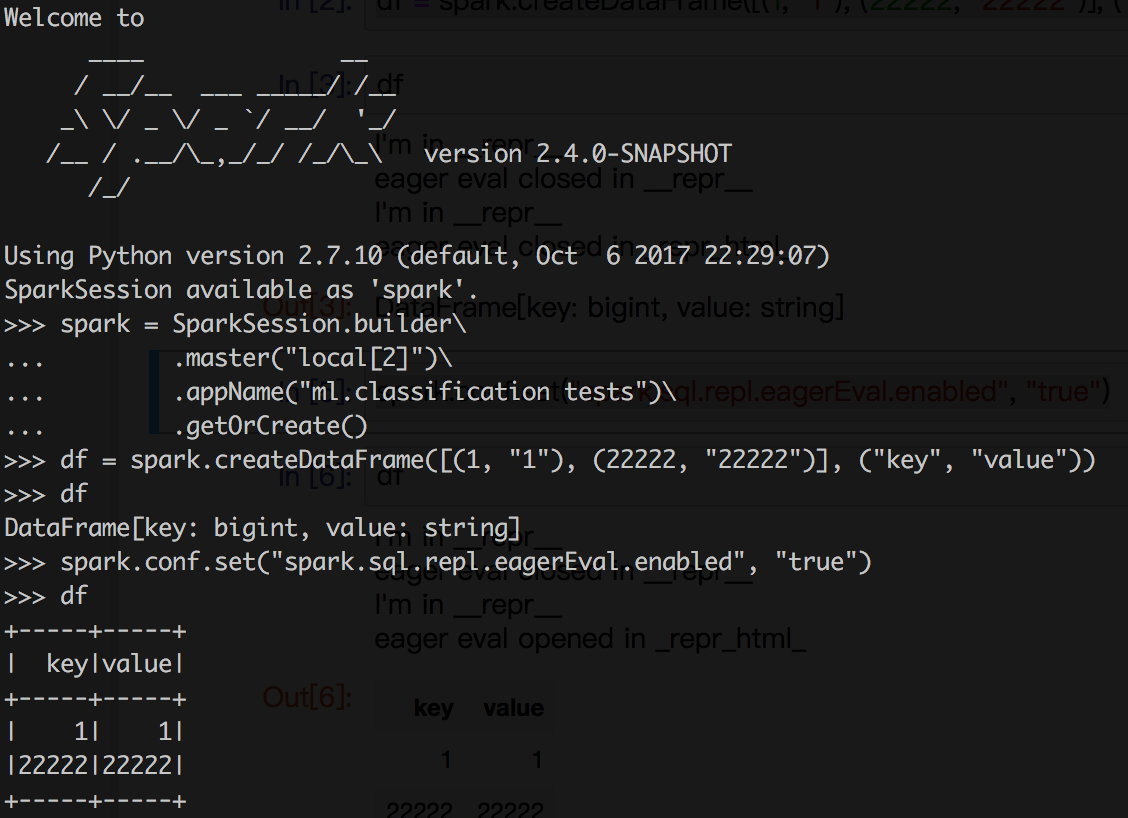

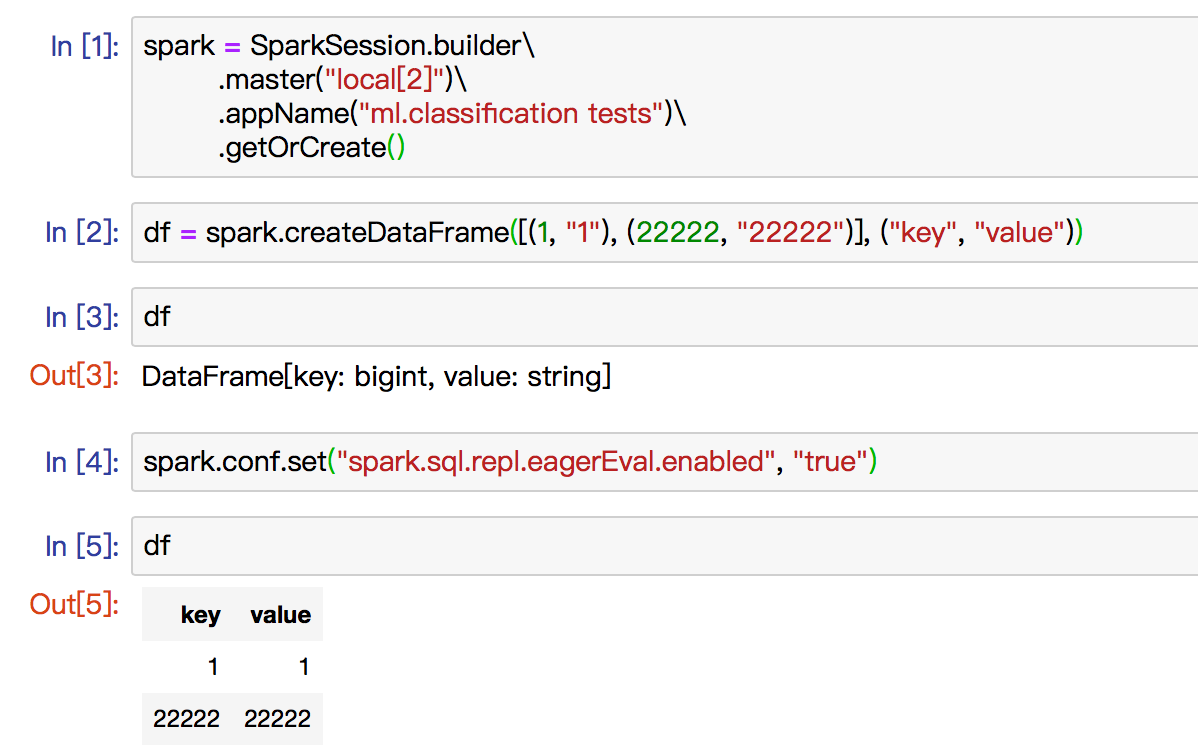

* [SPARK-24215][PYSPARK] Implement _repr_html_ for dataframes in PySpark

## What changes were proposed in this pull request?

Implement `_repr_html_` for PySpark while in notebook and add config named "spark.sql.repl.eagerEval.enabled" to control this.
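For readers unfamiliar with the notebook hook, here is a minimal sketch of how a `_repr_html_` method gated by a flag might look. `TablePreview`, `eager_eval_enabled`, and `max_rows` are hypothetical stand-ins and not the PySpark implementation; the HTML escaping follows the concern raised in review that values must be legal HTML.

```python
from html import escape
from typing import Optional, Sequence


class TablePreview:
    """Hypothetical stand-in for a DataFrame, holding rows in memory."""

    def __init__(
        self,
        columns: Sequence[str],
        rows: Sequence[Sequence[object]],
        eager_eval_enabled: bool = False,
        max_rows: int = 20,
    ) -> None:
        self.columns = list(columns)
        self.rows = [list(r) for r in rows]
        self.eager_eval_enabled = eager_eval_enabled
        self.max_rows = max_rows

    def _repr_html_(self) -> Optional[str]:
        # Returning None tells the notebook to fall back to the plain repr.
        if not self.eager_eval_enabled:
            return None
        head = "".join(f"<th>{escape(str(c))}</th>" for c in self.columns)
        body = "".join(
            "<tr>" + "".join(f"<td>{escape(str(v))}</td>" for v in row) + "</tr>"
            for row in self.rows[: self.max_rows]
        )
        return f"<table border='1'><tr>{head}</tr>{body}</table>"


preview = TablePreview(["name"], [["<b>x</b>"]], eager_eval_enabled=True)
print(preview._repr_html_())
```

Jupyter calls `_repr_html_` automatically when an object is the last expression in a cell, so no explicit call is needed in a notebook.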

The dev list thread for context: http://apache-spark-developers-list.1001551.n3.nabble.com/eager-execution-and-debuggability-td23928.html

## How was this patch tested?

New unit test in DataFrameSuite and manual test in Jupyter. Screenshots below.

**After:**

**Before:**

Author: Yuanjian Li <xyliyuanjian@gmail.com>

Closes #21370 from xuanyuanking/SPARK-24215.

* [SPARK-16451][REPL] Fail shell if SparkSession fails to start.

Currently, in spark-shell, if the session fails to start, the user sees a bunch of unrelated errors which are caused by code in the shell initialization that references the "spark" variable, which does not exist in that case. Things like:

```

<console>:14: error: not found: value spark

import spark.sql

```

The user is also left with a non-working shell (unless they want to just write non-Spark Scala or Python code, that is).

This change fails the whole shell session at the point where the failure occurs, so that the last error message is the one with the actual information about the failure.

For the python error handling, I moved the session initialization code to session.py, so that traceback.print_exc() only shows the last error. Otherwise, the printed exception would contain all previous exceptions with a message "During handling of the above exception, another exception occurred", making the actual error kinda hard to parse.

Tested with spark-shell, pyspark (with 2.7 and 3.5), by forcing an error during SparkContext initialization.
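The chained-traceback effect mentioned for the Python side can be reproduced in isolation. The sketch below is not the code from this change; `start_session` and both report functions are hypothetical, and they only show why an error raised while another exception is being handled produces the longer "During handling of the above exception" output in Python 3.

```python
import traceback


def start_session() -> None:
    """Hypothetical stand-in for a session start that fails."""
    raise RuntimeError("session failed to start")


def chained_report() -> str:
    """A second error raised inside the except block is chained to the first."""
    try:
        try:
            start_session()
        except RuntimeError:
            # Stand-in for init code touching a 'spark' variable that was never set.
            raise NameError("name 'spark' is not defined")
    except NameError:
        return traceback.format_exc()


def single_report() -> str:
    """Reporting straight from the original failure shows only that error."""
    try:
        start_session()
    except RuntimeError:
        return traceback.format_exc()


marker = "During handling of the above exception"
print(marker in chained_report(), marker in single_report())
```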

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #21368 from vanzin/SPARK-16451.

* [SPARK-15784] Add Power Iteration Clustering to spark.ml

## What changes were proposed in this pull request?

Following the discussion on JIRA, I rewrote the Power Iteration Clustering API in `spark.ml`.

## How was this patch tested?

Unit test.


Author: WeichenXu <weichen.xu@databricks.com>

Closes #21493 from WeichenXu123/pic_api.

* [SPARK-24453][SS] Fix error recovering from the failure in a no-data batch

## What changes were proposed in this pull request?

The error occurs when we are recovering from a failure in a no-data batch (say X) that has been planned (i.e. written to the offset log) but not executed (i.e. not written to the commit log). Upon recovery, the following sequence of events happens.

1. `MicroBatchExecution.populateStartOffsets` sets `currentBatchId` to X. Since there was no data in the batch, the `availableOffsets` is same as `committedOffsets`, so `isNewDataAvailable` is `false`.

2. When `MicroBatchExecution.constructNextBatch` is called, ideally it should immediately return true because the next batch has already been constructed. However, the check of whether the batch has been constructed was `if (isNewDataAvailable) return true`. Since the planned batch is a no-data batch, it escaped this check and proceeded to plan the same batch X *once again*.

The solution is to have an explicit flag that signifies whether a batch has already been constructed or not. `populateStartOffsets` is going to set the flag appropriately.
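The explicit-flag idea can be sketched as a small state machine. This is a simplified Python model under stated assumptions, not the Scala code in `MicroBatchExecution`: the class, the `should_plan` parameter, and the `planned` list are hypothetical, and offsets are reduced to batch ids.

```python
from typing import List, Optional


class MicroBatchPlanner:
    """Hypothetical model of batch planning with an explicit 'constructed' flag."""

    def __init__(self) -> None:
        self.current_batch_id = 0
        self.is_current_batch_constructed = False
        self.planned: List[int] = []  # batch ids planned by this instance

    def populate_start_offsets(
        self, last_planned_id: Optional[int], last_committed_id: Optional[int]
    ) -> None:
        """Recover state from the offset log (planned) and commit log (executed)."""
        if last_planned_id is not None and last_planned_id != last_committed_id:
            # Batch was planned but never committed: rerun it without replanning.
            self.current_batch_id = last_planned_id
            self.is_current_batch_constructed = True
        elif last_committed_id is not None:
            self.current_batch_id = last_committed_id + 1
            self.is_current_batch_constructed = False

    def construct_next_batch(self, should_plan: bool) -> bool:
        """Return True if there is a constructed batch ready to run."""
        if self.is_current_batch_constructed:
            return True  # already planned, even if it carries no data
        if not should_plan:
            return False
        self.planned.append(self.current_batch_id)
        self.is_current_batch_constructed = True
        return True


planner = MicroBatchPlanner()
planner.populate_start_offsets(last_planned_id=5, last_committed_id=4)
print(planner.construct_next_batch(should_plan=True), planner.planned)
```

The point of the flag is that the "already constructed" decision no longer depends on whether new data is available, so a recovered no-data batch is not planned a second time.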

## How was this patch tested?

new unit test

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #21491 from tdas/SPARK-24453.

* [SPARK-22384][SQL] Refine partition pruning when attribute is wrapped in Cast

## What changes were proposed in this pull request?

The SQL below fetches all partitions from the metastore, which puts a heavy burden on the metastore:

```

CREATE TABLE `partition_test`(`col` int) PARTITIONED BY (`pt` byte)

SELECT * FROM partition_test WHERE CAST(pt AS INT)=1

```

The reason is that the analyzed attribute `pt` is wrapped in `Cast`, and `HiveShim` fails to generate a proper partition filter.

This PR proposes to take `Cast` into consideration when generating the partition filter.

## How was this patch tested?

Test added.

This PR also proposes to use analyzed expressions in `HiveClientSuite`.

Author: jinxing <jinxing6042@126.com>

Closes #19602 from jinxing64/SPARK-22384.

* [SPARK-24187][R][SQL] Add array_join function to SparkR

## What changes were proposed in this pull request?

This PR adds the `array_join` function to SparkR.

## How was this patch tested?

Add unit test in test_sparkSQL.R

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes #21313 from huaxingao/spark-24187.

* [SPARK-23803][SQL] Support bucket pruning