
[Streaming][DOC] Fix typo & formatting for JavaDoc #22593


|

ok to test |

|

Test build #96805 has finished for PR 22593 at commit

|

| * - `OutputMode.Update()`: only the rows that were updated in the streaming DataFrame/Dataset | ||

| * <ul> | ||

| * <li> `OutputMode.Append()`: only the new rows in the streaming DataFrame/Dataset will be | ||

| * written to the sink.</li> |


I would just format this similarly with

spark/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

Lines 338 to 366 in e06da95

| * <ul> | |

| * <li>`primitivesAsString` (default `false`): infers all primitive values as a string type</li> | |

| * <li>`prefersDecimal` (default `false`): infers all floating-point values as a decimal | |

| * type. If the values do not fit in decimal, then it infers them as doubles.</li> | |

| * <li>`allowComments` (default `false`): ignores Java/C++ style comment in JSON records</li> | |

| * <li>`allowUnquotedFieldNames` (default `false`): allows unquoted JSON field names</li> | |

| * <li>`allowSingleQuotes` (default `true`): allows single quotes in addition to double quotes | |

| * </li> | |

| * <li>`allowNumericLeadingZeros` (default `false`): allows leading zeros in numbers | |

| * (e.g. 00012)</li> | |

| * <li>`allowBackslashEscapingAnyCharacter` (default `false`): allows accepting quoting of all | |

| * character using backslash quoting mechanism</li> | |

| * <li>`allowUnquotedControlChars` (default `false`): allows JSON Strings to contain unquoted | |

| * control characters (ASCII characters with value less than 32, including tab and line feed | |

| * characters) or not.</li> | |

| * <li>`mode` (default `PERMISSIVE`): allows a mode for dealing with corrupt records | |

| * during parsing. | |

| * <ul> | |

| * <li>`PERMISSIVE` : when it meets a corrupted record, puts the malformed string into a | |

| * field configured by `columnNameOfCorruptRecord`, and sets other fields to `null`. To | |

| * keep corrupt records, an user can set a string type field named | |

| * `columnNameOfCorruptRecord` in an user-defined schema. If a schema does not have the | |

| * field, it drops corrupt records during parsing. When inferring a schema, it implicitly | |

| * adds a `columnNameOfCorruptRecord` field in an output schema.</li> | |

| * <li>`DROPMALFORMED` : ignores the whole corrupted records.</li> | |

| * <li>`FAILFAST` : throws an exception when it meets corrupted records.</li> | |

| * </ul> | |

| * </li> | |

| * <li>`columnNameOfCorruptRecord` (default is the value specified in |

|

ping @niofire |

|

This is fine, although unfortunately there are lots of instances of this type of formatting error. We don't need to fix them all, but what about looking for similar instances in |

|

Ping @niofire to update or close |

|

@srowen Guessing you're referring to some other files? Could you link? |

|

Test build #97204 has finished for PR 22593 at commit

|

|

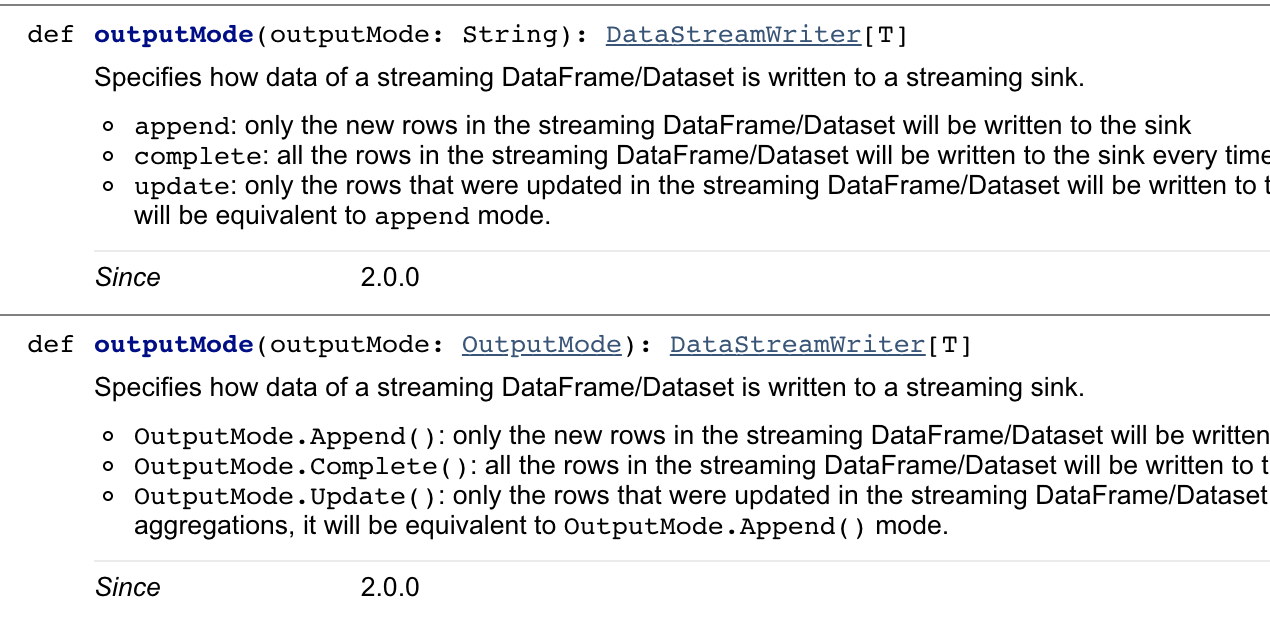

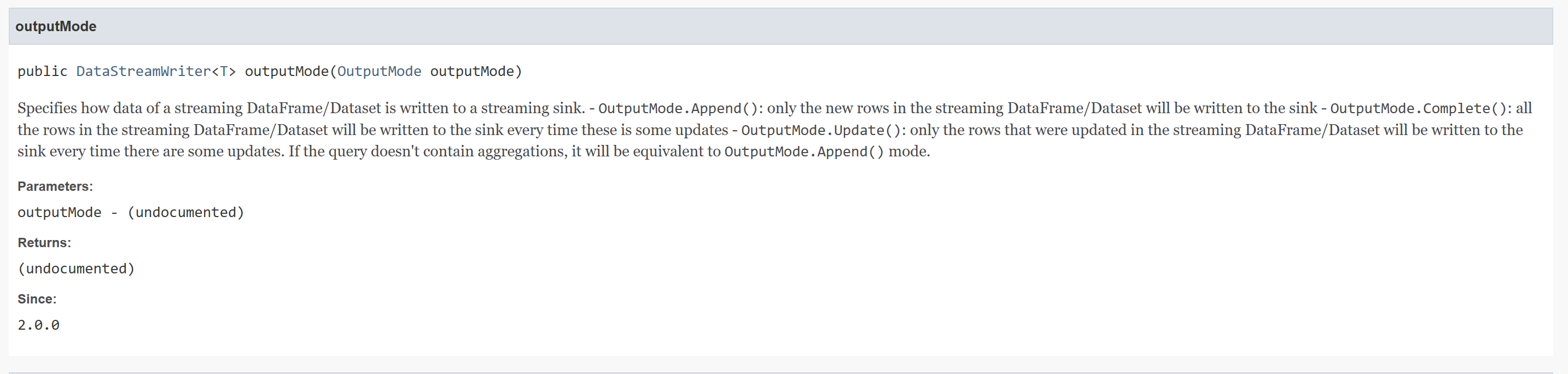

From https://spark.apache.org/docs/2.3.2/api/java/org/apache/spark/sql/streaming/DataStreamWriter.html |

|

Although it's the Scala API, it's callable from Java just as well. There's no Java-specific API here. So, yeah, it actually makes sense to have both javadoc and scaladoc for this. And I think there are probably many markdown-like things that work in scaladoc but not in javadoc. While you're welcome to fix all of them, that may be way too much. OK, I'd say go ahead, but at least fix all the instances of bullet lists in the .sql package. It's easy enough to search for something like |

|

Test build #97205 has finished for PR 22593 at commit

|

|

Seems like this wasn't able to build due to a transient error with CI |

|

org.apache.spark.sql.hive.client.HiveClientSuites.(It is not a test it is a sbt.testing.SuiteSelector) |

|

retest this please |

|

Test build #97268 has finished for PR 22593 at commit

|

|

Hm, that's a weird error. Big javadoc failures from unrelated classes. This looks like errors you get when you run javadoc on translated Scala classes. No idea why it's just popped up. Let me run again. |

|

Test build #4371 has finished for PR 22593 at commit

|

|

Huh, I'm really confused why this would fail, or at least, start failing right now. We use these HTML tags elsewhere. You could try updating the unidoc plugin version, but I think it's a genjavadoc issue if anything. But we're on the latest genjavadoc. Really confused! |

|

Is this happening for other PRs as well? |

|

Usually no. I've only seen this once before, in Tdas's PR; at that time it was related to the order of methods in the change.

|

|

||

| /** | ||

| * <ul> | ||

| * Specifies the behavior when data or table already exists. Options include: |


This one looks wrongly formatted, by the way. It looks like the `<ul>` should go below this line.


Crap yeah, will move it down

|

Test build #97265 has finished for PR 22593 at commit

|

|

Also, let's mention in the PR description and/or title that this PR targets fixing the javadoc. |

|

Test build #97311 has finished for PR 22593 at commit

|

|

Merged to master/2.4 |

## What changes were proposed in this pull request?

- Fixed typo for function outputMode

- OutputMode.Complete(), changed `these is some updates` to `there are some updates`

- Replaced hyphenated lists with HTML unordered list tags in comments to fix the Javadoc documentation.

Current render from most recent [Spark API Docs](https://spark.apache.org/docs/2.3.1/api/java/org/apache/spark/sql/streaming/DataStreamWriter.html):

#### outputMode(OutputMode) - List rendered as prose.

#### outputMode(String) - List rendered as prose.

#### partitionBy(String*) - List rendered as prose.
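To illustrate why the hyphenated lists render as prose, here is a minimal sketch in Java. The class `OutputModeDocExample` and its two methods are hypothetical and exist only for this illustration; they are not part of Spark. The doc comment syntax is the same `/** ... */` form used in the Scala sources, and the only difference between the two methods is how the list in the comment is written.

```java
import java.util.Locale;

// Hypothetical class for illustration only; this is not Spark's DataStreamWriter.
final class OutputModeDocExample {
    private OutputModeDocExample() {}

    /**
     * Specifies how data is written to the sink.
     *   - {@code append}: only the new rows will be written to the sink
     *   - {@code complete}: all the rows will be written to the sink every time
     *     there are some updates
     *
     * Javadoc output is HTML, where line breaks and indentation collapse, so the
     * hyphenated items above end up as one run-on paragraph.
     */
    static String hyphenList(String mode) {
        return mode.toLowerCase(Locale.ROOT);
    }

    /**
     * Specifies how data is written to the sink.
     * <ul>
     * <li>{@code append}: only the new rows will be written to the sink</li>
     * <li>{@code complete}: all the rows will be written to the sink every time
     * there are some updates</li>
     * </ul>
     *
     * The explicit HTML list tags are passed through, so each item is rendered
     * as its own bullet.
     */
    static String htmlList(String mode) {
        return mode.toLowerCase(Locale.ROOT);
    }

    public static void main(String[] args) {
        // Both methods behave identically; only their generated documentation differs.
        System.out.println(hyphenList("Append"));
        System.out.println(htmlList("Append"));
    }
}
```

Running `javadoc` on a file like this should show the first comment as a single paragraph and the second as a bulleted list, which matches the rendering problem described above.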

## How was this patch tested?

This PR contains a documentation-only patch, so no functional testing is required.

Closes #22593 from niofire/fix-typo-datastreamwriter.

Authored-by: Mathieu St-Louis <mastloui@microsoft.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

(cherry picked from commit 4e141a4)

Signed-off-by: Sean Owen <sean.owen@databricks.com>
