-

Notifications

You must be signed in to change notification settings - Fork 29k

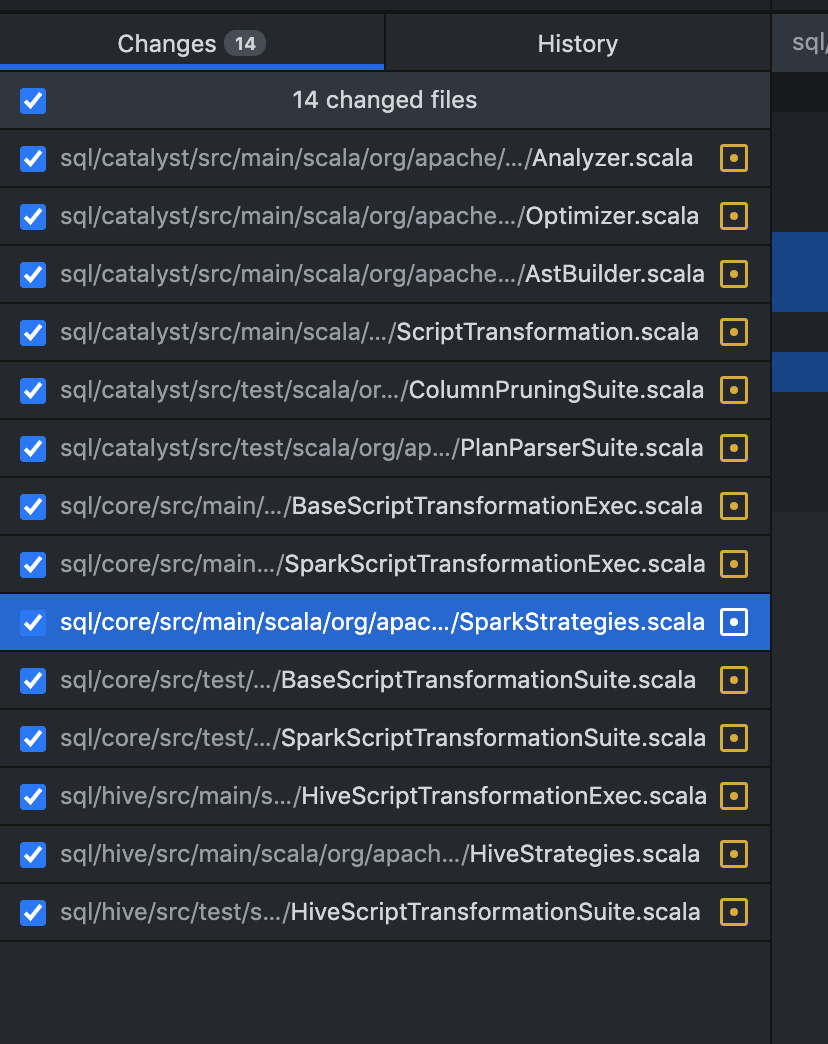

[SPARK-28227][SQL] Support projection, aggregate/window functions, and lateral view in the TRANSFORM clause #29087




| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -150,7 +150,11 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg | |

| withTransformQuerySpecification( | ||

| ctx, | ||

| ctx.transformClause, | ||

| ctx.lateralView, | ||

| ctx.whereClause, | ||

| ctx.aggregationClause, | ||

| ctx.havingClause, | ||

| ctx.windowClause, | ||

| plan | ||

| ) | ||

| } else { | ||

|

|

@@ -587,7 +591,16 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg | |

| val from = OneRowRelation().optional(ctx.fromClause) { | ||

| visitFromClause(ctx.fromClause) | ||

| } | ||

| withTransformQuerySpecification(ctx, ctx.transformClause, ctx.whereClause, from) | ||

| withTransformQuerySpecification( | ||

| ctx, | ||

| ctx.transformClause, | ||

| ctx.lateralView, | ||

| ctx.whereClause, | ||

| ctx.aggregationClause, | ||

| ctx.havingClause, | ||

| ctx.windowClause, | ||

| from | ||

| ) | ||

| } | ||

|

|

||

| override def visitRegularQuerySpecification( | ||

|

|

@@ -641,14 +654,12 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg | |

| private def withTransformQuerySpecification( | ||

| ctx: ParserRuleContext, | ||

| transformClause: TransformClauseContext, | ||

| lateralView: java.util.List[LateralViewContext], | ||

| whereClause: WhereClauseContext, | ||

| relation: LogicalPlan): LogicalPlan = withOrigin(ctx) { | ||

| // Add where. | ||

| val withFilter = relation.optionalMap(whereClause)(withWhereClause) | ||

|

|

||

| // Create the transform. | ||

| val expressions = visitNamedExpressionSeq(transformClause.namedExpressionSeq) | ||

|

|

||

| aggregationClause: AggregationClauseContext, | ||

| havingClause: HavingClauseContext, | ||

| windowClause: WindowClauseContext, | ||

| relation: LogicalPlan): LogicalPlan = withOrigin(ctx) { | ||

| // Create the attributes. | ||

| val (attributes, schemaLess) = if (transformClause.colTypeList != null) { | ||

| // Typed return columns. | ||

|

|

@@ -664,12 +675,22 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg | |

| AttributeReference("value", StringType)()), true) | ||

| } | ||

|

|

||

| // Create the transform. | ||

| val plan = visitCommonSelectQueryClausePlan( | ||

| relation, | ||

| lateralView, | ||

| transformClause.namedExpressionSeq, | ||

| whereClause, | ||

| aggregationClause, | ||

| havingClause, | ||

| windowClause, | ||

| isDistinct = false) | ||

AngersZhuuuu marked this conversation as resolved.


|

||

|

|

||

| ScriptTransformation( | ||

| expressions, | ||

| // TODO: Remove this logic and see SPARK-34035 | ||

| Seq(UnresolvedStar(None)), | ||

maropu marked this conversation as resolved.


Member

Hm, on second thought, we cannot remove this param.

Contributor

Author


It looks like this. We can replace

Member

Nice. Does just removing the param cause a big diff? I'd like to remove the current weird Analyzer code that handles the unresolved star in this PR, though.

Contributor

Author

Yea, it will change a lot of files. Compared to the original code here (the current weird Analyzer code), the current change does not seem so weird, but for the whole process it is really weird. I created a ticket for this: https://issues.apache.org/jira/browse/SPARK-34035

Member

How many lines of code would change there?

Contributor

Author

Nearly 100 lines; most of the changes are in unit tests (UT).

Member

Okay, I don't have a strong opinion on it, so please follow the other reviewers' comments.

Contributor

Author


Yea |

||

| string(transformClause.script), | ||

| attributes, | ||

| withFilter, | ||

| plan, | ||

| withScriptIOSchema( | ||

| ctx, | ||

| transformClause.inRowFormat, | ||

|

|

@@ -697,13 +718,40 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg | |

| havingClause: HavingClauseContext, | ||

| windowClause: WindowClauseContext, | ||

| relation: LogicalPlan): LogicalPlan = withOrigin(ctx) { | ||

| val isDistinct = selectClause.setQuantifier() != null && | ||

| selectClause.setQuantifier().DISTINCT() != null | ||

|

|

||

| val plan = visitCommonSelectQueryClausePlan( | ||

| relation, | ||

| lateralView, | ||

| selectClause.namedExpressionSeq, | ||

| whereClause, | ||

| aggregationClause, | ||

| havingClause, | ||

| windowClause, | ||

| isDistinct) | ||

|

|

||

| // Hint | ||

| selectClause.hints.asScala.foldRight(plan)(withHints) | ||

| } | ||

|

|

||

| def visitCommonSelectQueryClausePlan( | ||

| relation: LogicalPlan, | ||

| lateralView: java.util.List[LateralViewContext], | ||

| namedExpressionSeq: NamedExpressionSeqContext, | ||

| whereClause: WhereClauseContext, | ||

| aggregationClause: AggregationClauseContext, | ||

| havingClause: HavingClauseContext, | ||

| windowClause: WindowClauseContext, | ||

| isDistinct: Boolean): LogicalPlan = { | ||

| // Add lateral views. | ||

| val withLateralView = lateralView.asScala.foldLeft(relation)(withGenerate) | ||

|

|

||

| // Add where. | ||

| val withFilter = withLateralView.optionalMap(whereClause)(withWhereClause) | ||

|

|

||

| val expressions = visitNamedExpressionSeq(selectClause.namedExpressionSeq) | ||

| val expressions = visitNamedExpressionSeq(namedExpressionSeq) | ||

|

|

||

| // Add aggregation or a project. | ||

| val namedExpressions = expressions.map { | ||

| case e: NamedExpression => e | ||

|

|

@@ -737,9 +785,7 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg | |

| } | ||

|

|

||

| // Distinct | ||

| val withDistinct = if ( | ||

| selectClause.setQuantifier() != null && | ||

| selectClause.setQuantifier().DISTINCT() != null) { | ||

| val withDistinct = if (isDistinct) { | ||

| Distinct(withProject) | ||

| } else { | ||

| withProject | ||

|

|

@@ -748,8 +794,7 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg | |

| // Window | ||

| val withWindow = withDistinct.optionalMap(windowClause)(withWindowClause) | ||

|

|

||

| // Hint | ||

| selectClause.hints.asScala.foldRight(withWindow)(withHints) | ||

| withWindow | ||

| } | ||

|

|

||

| // Script Transform's input/output format. | ||

|

|

||
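The refactoring in the `AstBuilder` diff above routes both the regular `SELECT` path and the `TRANSFORM` path through one shared helper, which stacks plan nodes in a fixed order: lateral views, then the filter, then the projection, then an optional `Distinct`, then the window clause. The sketch below models only that ordering with toy types. Everything in it (`Plan`, `Relation`, `Generate`, `WithWindow`, `ScriptTransform`, `commonSelectPlan`, `transformPlan`) is a hypothetical stand-in rather than Spark's actual classes, and the aggregation and `HAVING` handling is left out for brevity.

```scala
// Toy model of the plan-building order. These types are hypothetical
// stand-ins for illustration, not Spark's LogicalPlan classes.
sealed trait Plan
case class Relation(name: String) extends Plan
case class Generate(generator: String, child: Plan) extends Plan
case class Filter(condition: String, child: Plan) extends Plan
case class Project(exprs: Seq[String], child: Plan) extends Plan
case class Distinct(child: Plan) extends Plan
case class WithWindow(spec: String, child: Plan) extends Plan
case class ScriptTransform(script: String, child: Plan) extends Plan

object TransformSketch {
  // Shared helper: in this sketch both SELECT and TRANSFORM go through it.
  // Aggregation and HAVING are omitted (assumption made to keep it short).
  def commonSelectPlan(
      relation: Plan,
      lateralViews: Seq[String],
      exprs: Seq[String],
      where: Option[String],
      window: Option[String],
      isDistinct: Boolean): Plan = {
    // 1. Lateral views wrap the relation, left to right.
    val withLateralView: Plan =
      lateralViews.foldLeft(relation)((child, gen) => Generate(gen, child))
    // 2. The WHERE clause, if present, filters the result.
    val withFilter: Plan =
      where.fold(withLateralView)(cond => Filter(cond, withLateralView))
    // 3. The projection evaluates the expression list.
    val withProject: Plan = Project(exprs, withFilter)
    // 4. DISTINCT is decided by the caller instead of by the SELECT clause.
    val withDistinct: Plan = if (isDistinct) Distinct(withProject) else withProject
    // 5. The WINDOW clause, if present, goes on top.
    window.fold(withDistinct)(spec => WithWindow(spec, withDistinct))
  }

  // TRANSFORM wraps the common plan and always passes isDistinct = false.
  def transformPlan(
      script: String,
      exprs: Seq[String],
      where: Option[String],
      relation: Plan): Plan =
    ScriptTransform(
      script,
      commonSelectPlan(relation, Nil, exprs, where, None, isDistinct = false))

  def main(args: Array[String]): Unit = {
    val plan = transformPlan(
      "cat", Seq("b", "a", "c + 1"), Some("a <= 4"), Relation("script_trans"))
    println(plan)
  }
}
```

Passing `isDistinct` as a plain flag mirrors the signature change in the diff: the shared helper no longer needs the `SELECT` clause context, so the `TRANSFORM` path can reuse it and simply pass `false`.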

| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -5,6 +5,12 @@ CREATE OR REPLACE TEMPORARY VIEW t AS SELECT * FROM VALUES | |

| ('3', true, unhex('537061726B2053514C'), tinyint(3), 3, smallint(300), bigint(3), float(3.0), 3.0, Decimal(3.0), timestamp('1997-02-10 17:32:01-08'), date('2000-04-03')) | ||

| AS t(a, b, c, d, e, f, g, h, i, j, k, l); | ||

|

|

||

| CREATE OR REPLACE TEMPORARY VIEW script_trans AS SELECT * FROM VALUES | ||

| (1, 2, 3), | ||

| (4, 5, 6), | ||

| (7, 8, 9) | ||

| AS script_trans(a, b, c); | ||

|

|

||

| SELECT TRANSFORM(a) | ||

| USING 'cat' AS (a) | ||

| FROM t; | ||

|

|

@@ -184,6 +190,132 @@ SELECT a, b, decode(c, 'UTF-8'), d, e, f, g, h, i, j, k, l FROM ( | |

| FROM t | ||

| ) tmp; | ||

|

|

||

| SELECT TRANSFORM(b, a, CAST(c AS STRING)) | ||

| USING 'cat' AS (a, b, c) | ||

| FROM script_trans | ||

| WHERE a <= 4; | ||

|

|

||

| SELECT TRANSFORM(1, 2, 3) | ||

| USING 'cat' AS (a, b, c) | ||

| FROM script_trans | ||

| WHERE a <= 4; | ||

|

|

||

| SELECT TRANSFORM(1, 2) | ||

| USING 'cat' AS (a INT, b INT) | ||

| FROM script_trans | ||

| LIMIT 1; | ||

|

|

||

| SELECT TRANSFORM( | ||

| b AS d5, a, | ||

| CASE | ||

| WHEN c > 100 THEN 1 | ||

| WHEN c < 100 THEN 2 | ||

| ELSE 3 END) | ||

| USING 'cat' AS (a, b, c) | ||

| FROM script_trans | ||

| WHERE a <= 4; | ||

|

|

||

| SELECT TRANSFORM(b, a, c + 1) | ||

| USING 'cat' AS (a, b, c) | ||

| FROM script_trans | ||

| WHERE a <= 4; | ||

|

|

||

| SELECT TRANSFORM(*) | ||

| USING 'cat' AS (a, b, c) | ||

| FROM script_trans | ||

| WHERE a <= 4; | ||

|

Comment on lines +193 to +226

Member

Are the queries above not supported in the current master?

Contributor

Author

They are supported. What's wrong? They return correct answers.

Member

I just want to know why these tests are added in this PR, because they do not seem to be related to aggregation/window/lateralView.

Contributor

Author

Yea, here I want to show that after this PR's change, each kind of expression works well, such as |

||

|

|

||

| SELECT TRANSFORM(b AS d, MAX(a) as max_a, CAST(SUM(c) AS STRING)) | ||

| USING 'cat' AS (a, b, c) | ||

| FROM script_trans | ||

| WHERE a <= 4 | ||

| GROUP BY b; | ||

|

|

||

| SELECT TRANSFORM(b AS d, MAX(a) FILTER (WHERE a > 3) AS max_a, CAST(SUM(c) AS STRING)) | ||

| USING 'cat' AS (a,b,c) | ||

| FROM script_trans | ||

| WHERE a <= 4 | ||

| GROUP BY b; | ||

|

|

||

| SELECT TRANSFORM(b, MAX(a) as max_a, CAST(sum(c) AS STRING)) | ||

| USING 'cat' AS (a, b, c) | ||

| FROM script_trans | ||

| WHERE a <= 2 | ||

| GROUP BY b; | ||

|

|

||

| SELECT TRANSFORM(b, MAX(a) as max_a, CAST(SUM(c) AS STRING)) | ||

| USING 'cat' AS (a, b, c) | ||

| FROM script_trans | ||

| WHERE a <= 4 | ||

| GROUP BY b | ||

| HAVING max_a > 0; | ||

|

|

||

| SELECT TRANSFORM(b, MAX(a) as max_a, CAST(SUM(c) AS STRING)) | ||

| USING 'cat' AS (a, b, c) | ||

| FROM script_trans | ||

| WHERE a <= 4 | ||

| GROUP BY b | ||

| HAVING max(a) > 1; | ||

|

|

||

| SELECT TRANSFORM(b, MAX(a) OVER w as max_a, CAST(SUM(c) OVER w AS STRING)) | ||

| USING 'cat' AS (a, b, c) | ||

| FROM script_trans | ||

| WHERE a <= 4 | ||

| WINDOW w AS (PARTITION BY b ORDER BY a); | ||

|

|

||

| SELECT TRANSFORM(b, MAX(a) as max_a, CAST(SUM(c) AS STRING), myCol, myCol2) | ||

| USING 'cat' AS (a, b, c, d, e) | ||

| FROM script_trans | ||

| LATERAL VIEW explode(array(array(1,2,3))) myTable AS myCol | ||

| LATERAL VIEW explode(myTable.myCol) myTable2 AS myCol2 | ||

| WHERE a <= 4 | ||

| GROUP BY b, myCol, myCol2 | ||

| HAVING max(a) > 1; | ||

|

|

||

| FROM( | ||

| FROM script_trans | ||

| SELECT TRANSFORM(a, b) | ||

| USING 'cat' AS (`a` INT, b STRING) | ||

| ) t | ||

| SELECT a + 1; | ||

|

|

||

| FROM( | ||

| SELECT TRANSFORM(a, SUM(b) b) | ||

| USING 'cat' AS (`a` INT, b STRING) | ||

| FROM script_trans | ||

| GROUP BY a | ||

| ) t | ||

| SELECT (b + 1) AS result | ||

| ORDER BY result; | ||

|

|

||

| MAP k / 10 USING 'cat' AS (one) FROM (SELECT 10 AS k); | ||

|

|

||

| FROM (SELECT 1 AS key, 100 AS value) src | ||

| MAP src.*, src.key, CAST(src.key / 10 AS INT), CAST(src.key % 10 AS INT), src.value | ||

| USING 'cat' AS (k, v, tkey, ten, one, tvalue); | ||

|

|

||

| SELECT TRANSFORM(1) | ||

| USING 'cat' AS (a) | ||

| FROM script_trans | ||

| HAVING true; | ||

|

|

||

| SET spark.sql.legacy.parser.havingWithoutGroupByAsWhere=true; | ||

|

|

||

| SELECT TRANSFORM(1) | ||

| USING 'cat' AS (a) | ||

| FROM script_trans | ||

| HAVING true; | ||

|

|

||

AngersZhuuuu marked this conversation as resolved.


|

||

| SET spark.sql.legacy.parser.havingWithoutGroupByAsWhere=false; | ||

|

|

||

| SET spark.sql.parser.quotedRegexColumnNames=true; | ||

|

|

||

| SELECT TRANSFORM(`(a|b)?+.+`) | ||

| USING 'cat' AS (c) | ||

|

Contributor

nit: 2-space indentation |

||

| FROM script_trans; | ||

|

|

||

| SET spark.sql.parser.quotedRegexColumnNames=false; | ||

|

|

||

|

Contributor

Can we test something like

Contributor

Author

Will raise a follow-up soon. |

||

| -- SPARK-34634: self join using CTE contains transform | ||

| WITH temp AS ( | ||

| SELECT TRANSFORM(a) USING 'cat' AS (b string) FROM t | ||

|

|

||


Please do not forget to fix this, @AngersZhuuuu