
[SPARK-33454][INFRA] Add GitHub Action job for Hadoop 2 #30378


.github/workflows/build_and_test.yml


./build/sbt -Pyarn -Pmesos -Pkubernetes -Phive -Phive-thriftserver -Phadoop-cloud -Pkinesis-asl -Djava.version=11 -Pscala-2.13 compile test:compile

hadoop-2:

name: Hadoop 2 build


We may later upgrade from Hadoop 2.7.4 to Hadoop 2.10.x, so the job name mentions only the major version.
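For context, a compile-only Hadoop 2 job of this shape might be declared in `.github/workflows/build_and_test.yml` roughly as follows. This is a hedged sketch, not the merged workflow: the runner label `ubuntu-20.04`, the action versions, and the build profile name `hadoop-2.7` are assumptions. It pins Java 8 and reuses the `compile test:compile` goal so that main and test sources are compiled but no tests run, which is what keeps the job cheap.

```yaml
jobs:
  hadoop-2:
    # Only the major version appears in the name, so a later 2.x upgrade needs no rename.
    name: Hadoop 2 build
    # Assumption: runner label; any supported Ubuntu runner would do.
    runs-on: ubuntu-20.04
    steps:
      - name: Checkout Spark repository
        uses: actions/checkout@v2
      # Assumption: Java 8 via setup-java v1.
      - name: Install Java 8
        uses: actions/setup-java@v1
        with:
          java-version: 1.8
      # Compile main and test sources against Hadoop 2; no tests are executed.
      # Assumption: the Hadoop 2 profile is named `hadoop-2.7`.
      - name: Build with SBT
        run: |
          ./build/sbt -Pyarn -Pmesos -Pkubernetes -Phive -Phive-thriftserver -Phadoop-cloud -Pkinesis-asl -Phadoop-2.7 compile test:compile
```

Because the job stops at `test:compile`, it catches API mismatches between Hadoop 2 and Hadoop 3 at review time without adding a full test run.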

Kubernetes integration test starting

Kubernetes integration test status success

Thanks for making this change, @dongjoon-hyun! Or does Jenkins leverage the same?

Yes, that's true. However, for Jenkins, IIUC, it's difficult to apply this to the PR Builder. Currently, all PR Builders (PRBuilder, K8s IT PRBuilder) focus on the default profile because the runs are too heavy. You may ask Shane about a new PR Builder for Hadoop 2; he knows the available capacity better than I do.

BTW, I only have read-only access to the Jenkins configuration.

+CC @shaneknapp

I am concerned that we might sometimes just not see it, given that it is listed at the bottom of the list (if Jenkins reports green).

Sure! Given the importance of Hadoop 2, it sounds good to me.

Test build #131096 has finished for PR 30378 at commit


Kubernetes integration test starting

Kubernetes integration test status failure

Test build #131098 has finished for PR 30378 at commit


There looks to be a legitimate failure, but I like this idea. +1

Yes, @HyukjinKwon. That failure is the motivation for this PR. We should prevent it from happening again.

Since #30375 is now merged, I retriggered the GitHub Action. cc @mridulm, @HyukjinKwon, @wangyum

viirya left a comment


Looks okay, just one question.


It seems there is some delay on GitHub Actions. I retriggered the GitHub Action because the previous run failed with a compilation error again.

BTW, @HyukjinKwon, do you still want to use Java 8 in this PR?

Thank you, @wangyum. I'm looking at both this PR and the vanilla master branch, too. The previous failure might have had another, unknown cause rather than the API issue.

It's weird. The new code was not picked up when the GitHub Action was retriggered. I'll rebase this onto master.

Thank you, @dongjoon-hyun.

Sorry for rebasing after your approval, @HyukjinKwon, @wangyum, @viirya. It was necessary to bring in the latest master branch. (I hadn't noticed that the GitHub Action doesn't use the latest master.) Also, I switched to Java 8 following @HyukjinKwon's advice.

Could you approve once more, please?

Merged to master.

Yay! Thank you, @viirya and @HyukjinKwon! 😄

Kubernetes integration test starting

Kubernetes integration test status success

Test build #131132 has finished for PR 30378 at commit


Another related question: do you think we should test Java 14 with GitHub Actions?

FYI, there's a discussion thread about JDK 14 on the mailing list: http://apache-spark-developers-list.1001551.n3.nabble.com/Spark-on-JDK-14-td30348.html

Although it's important to track upstream, Java 14 is already EOL. |

What changes were proposed in this pull request?

This PR aims to protect the compilation of the `Hadoop 2.x` profile in Apache Spark 3.1+.

Why are the changes needed?

Since Apache Spark 3.1+ switches the default profile to Hadoop 3, we had better at least prevent compilation errors with the `Hadoop 2.x` profile during the PR review phase. Although this adds workload, the job finishes quickly because it only compiles.

Does this PR introduce any user-facing change?

No.

How was this patch tested?

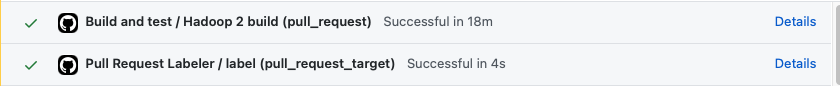

Pass the GitHub Action.