-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-36598][SHUFFLE][SQL] Avoid the memory leak of Guava cache by add maximumWeight limit #33848

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

| File indexFile = new File("shuffle_" + 0 + "_" + i + "_0.index"); | ||

| ShuffleIndexInformation indexInfo = Mockito.mock(ShuffleIndexInformation.class); | ||

| Mockito.when(indexInfo.getSize()).thenReturn(unitSize); | ||

| shuffleIndexCache.get(indexFile, () -> indexInfo); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Not easy to mock the init behavior of ShuffleIndexInformation, so there re-write CacheLoader.

| try{ | ||

| Assert.assertTrue(size <= maxCacheCount); | ||

| Assert.assertTrue(totalWeight < maximumWeight); | ||

| fail("The tests code should not enter this line now."); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should enter line 153, but it won't now

|

Maybe there the following ways can solve the problem:

I will try to upgrade Guava version first after 98458fd build successful Do you have any better suggestions ? @HyukjinKwon @dongjoon-hyun @srowen |

| public void testShuffleIndexCacheEvictionBehavior() throws IOException, ExecutionException { | ||

| Map<String, String> config = new HashMap<>(); | ||

| String indexCacheSize = "8192m"; | ||

| config.put("spark.shuffle.service.index.cache.size", indexCacheSize); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

configure spark.shuffle.service.index.cache.size with 8g and the default concurrencyLevel is 4, so the maxSegmentWeight Local Cache is Int.MAX_VALUE, these condition will trigger the bug

|

Kubernetes integration test unable to build dist. exiting with code: 1 |

|

Test build #142802 has finished for PR 33848 at commit

|

|

8ec7525 upgrade Guava to 30.1.1-jre to fix |

| datanucleus-rdbms/4.1.19//datanucleus-rdbms-4.1.19.jar | ||

| derby/10.14.2.0//derby-10.14.2.0.jar | ||

| dropwizard-metrics-hadoop-metrics2-reporter/0.1.2//dropwizard-metrics-hadoop-metrics2-reporter-0.1.2.jar | ||

| error_prone_annotations/2.5.1//error_prone_annotations-2.5.1.jar |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@HyukjinKwon @dongjoon-hyun @srowen It seems that upgrading the Guava version can solve the problem, but it will also add additional dependencies. I'm not sure whether it is valuable

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This new dep is fine, it's ALv2 https://github.com/google/error-prone

yes the problem is that it can potentially interfere with other libs like Hadoop

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ya, I agree with @srowen 's comment.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If upgradingGuava version is not a good way, it seems that it is not easy to fix this potential bug. I have manually verified that Caffeine does not have this problem, but maybe we are not willing to accept it because #33784

So can we prevent users from using a problematic config by adding some config value check?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@srowen @dongjoon-hyun

As comments above:

- Upgrading the Guava version will have potential interfere with other libs like Hadoop

- Use Caffeine will introduce new dependent libs. (Revert "[SPARK-34309][BUILD][CORE][SQL][K8S] Use Caffeine instead of Guava Cache" #33784)

Therefore, I added a maximum limit to the related configurations to avoid the potential bug of Guava Cache Guava#1761

|

Kubernetes integration test unable to build dist. exiting with code: 1 |

This reverts commit 8ec7525.

|

Kubernetes integration test unable to build dist. exiting with code: 1 |

|

Test build #142818 has finished for PR 33848 at commit

|

|

Test build #142819 has finished for PR 33848 at commit

|

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

|

Test build #142888 has finished for PR 33848 at commit

|

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

|

Kubernetes integration test starting |

|

Test build #142956 has finished for PR 33848 at commit

|

|

Kubernetes integration test status failure |

|

Test build #142960 has finished for PR 33848 at commit

|

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

|

Test build #143256 has finished for PR 33848 at commit

|

|

We're closing this PR because it hasn't been updated in a while. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable. |

What changes were proposed in this pull request?

The Guava Cache use in Spark with weight eviction mechanism has risk of memory leak because

LocalCacheweight eviction does not work whenmaxSegmentWeight is >= Int.MAX_VALUEGuava#1761The main change of this pr is add the

maximumWeightvalue limit for Guava cache instances that use the weight eviction strategy, include:spark.shuffle.service.index.cache.sizeshould <= 8g bytesspark.shuffle.push.server.mergedIndexCacheSizeshould <= 8g bytesspark.sql.hive.filesourcePartitionFileCacheSizeshould <= 256gWhy are the changes needed?

Avoiding the weight eviction bug of Guava Cache(Guava#1761)

Before this pr, the following test will fail, some cache entries was not evicted as expected and memory leak will occur

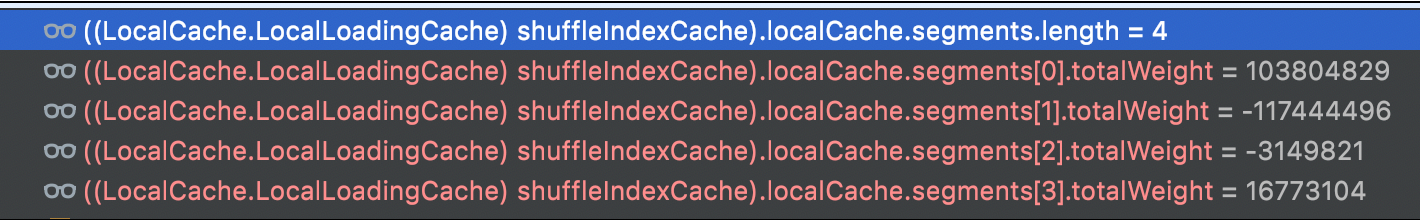

And through the debug view, there are 2

segments.totalWeightoverflowed.After this pr, the above issue are avoided through the maximum limit because

maximumWeightcan no longer be greater than 2g.Does this PR introduce any user-facing change?

The following 3 configs add the maximum limit:

spark.shuffle.service.index.cache.sizeshould <= 8g bytesspark.shuffle.push.server.mergedIndexCacheSizeshould <= 8g bytesspark.sql.hive.filesourcePartitionFileCacheSizeshould <= 256gHow was this patch tested?

Pass GA or Jenkins Tests.