
[SPARK-40331][DOCS] Recommend use Java 11/17 as the runtime environment of Spark #37799


In addition, on the ARM architecture, Java 11 (with NUMA binding of CPU and memory) also performs better than Java 8 (with the same NUMA binding), with a general performance improvement of about 5% to 10%.

cc @rednaxelafx FYI

Spark runs on both Windows and UNIX-like systems (e.g. Linux, Mac OS), and it should run on any platform that runs a supported version of Java. This should include JVMs on x86_64 and ARM64. It's easy to run locally on one machine --- all you need is to have `java` installed on your system `PATH`, or the `JAVA_HOME` environment variable pointing to a Java installation.

Spark runs on Java 8/11/17, Scala 2.12/2.13, Python 3.7+ and R 3.5+.

Java 11/17 is the recommended version to run Spark on.


I wonder if we should explicitly mention this though. Some benchmark results weren't good actually IIRC.


I meant that the performance gain you mentioned looks limited to whole-stage codegen and the JIT compiler only. I remember we saw slower performance in some cases (e.g., at https://github.com/apache/spark/tree/master/core/benchmarks).


For compatibility reasons, is the current code more inclined toward Java 8 best practices? Similarly, the current use of Scala 2.13 has not yet reached best practice. I think this should be improved through some refactoring work. @rednaxelafx, WDYT?


Yes, we should move forward. But we should deprecate Java 8 first, which I think would mean encouraging users to use JDK 11 and 17.

What I am unsure about is whether it's better to recommend something slower (JDK 11/17) when the old option (JDK 8) is not even deprecated. To end users, JDK 8 is still the faster option in general, if I am not mistaken.


+1 for @HyukjinKwon's comment. Yes, it does. Our benchmark shows JDK 8 is faster in general.
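The `index.md` text quoted at the start of this thread says Spark only needs `java` on the system `PATH`, or `JAVA_HOME` pointing to a Java installation. Below is a minimal sketch of how the `java` executable that will actually run Spark might be resolved. It assumes `JAVA_HOME` takes precedence over `PATH`, which is our reading of how Spark's launcher scripts behave. `resolve_java` is a hypothetical helper, not a Spark API.

```python
import os
import shutil
from typing import Callable, Mapping, Optional


def resolve_java(
    env: Mapping[str, str] = os.environ,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> Optional[str]:
    """Return the path of the `java` executable to use, or None if none is found.

    Assumption: a non-empty JAVA_HOME wins over PATH. `env` and `which`
    are injectable so the lookup order can be tested without a real JDK.
    """
    java_home = env.get("JAVA_HOME", "")
    if java_home:
        # Use the binary inside the configured Java installation.
        return os.path.join(java_home, "bin", "java")
    # Otherwise use whichever `java` comes first on PATH (None if absent).
    return which("java")


if __name__ == "__main__":
    print(resolve_java() or "no java found: install a JDK or set JAVA_HOME")
```

Injecting `env` and `which` keeps the precedence rule testable without touching the real environment.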

Spark runs on Java 8/11/17, Scala 2.12/2.13, Python 3.7+ and R 3.5+.

Java 11/17 is the recommended version to run Spark on.

Python 3.7 support is deprecated as of Spark 3.4.0.

Java 8 prior to version 8u201 support is deprecated as of Spark 3.2.0.


Hm, why do we remove this deprecation BTW?


I am ok to keep this line


Yes, we should not remove this, @LuciferYang. Please revert this part of the PR.


ok
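The support matrix in the hunk discussed here (Java 8/11/17, with Java 8 prior to 8u201 deprecated as of Spark 3.2.0, the line this thread agreed to keep) can be checked mechanically. Below is a minimal sketch. It assumes version strings in the `java.version` style (`1.8.0_201` for Java 8, `11.0.16` or `17` for later releases) and a `java -version` banner containing `version "<string>"`. `version_from_banner`, `parse_java_version` and `support_status` are hypothetical helpers, not part of Spark. Versions outside 8/11/17 are reported as "not listed" rather than as unsupported.

```python
import re
from typing import Tuple

# Java 8 reports "1.8.0_<update>"; Java 9+ uses "<feature>.<interim>.<update>".
LEGACY = re.compile(r"^1\.(\d+)\.(\d+)(?:_(\d+))?")
MODERN = re.compile(r"^(\d+)(?:\.(\d+))?(?:\.(\d+))?")


def version_from_banner(banner: str) -> str:
    """Pull the quoted version string out of a `java -version` line."""
    m = re.search(r'version "([^"]+)"', banner)
    if m is None:
        raise ValueError(f"no version found in {banner!r}")
    return m.group(1)


def parse_java_version(version: str) -> Tuple[int, int]:
    """Return (major, update), e.g. "1.8.0_201" -> (8, 201), "17" -> (17, 0)."""
    m = LEGACY.match(version)
    if m:
        return int(m.group(1)), int(m.group(3) or 0)
    m = MODERN.match(version)
    if m:
        return int(m.group(1)), int(m.group(3) or 0)
    raise ValueError(f"unrecognised Java version {version!r}")


def support_status(version: str) -> str:
    """Classify a version against the documented Java 8/11/17 matrix."""
    major, update = parse_java_version(version)
    if major == 8:
        # Java 8 prior to 8u201 is deprecated as of Spark 3.2.0.
        return "supported" if update >= 201 else "deprecated"
    if major in (11, 17):
        return "supported"
    return "not listed"  # not covered by the documentation line


if __name__ == "__main__":
    for v in ("1.8.0_192", "1.8.0_201", "11.0.16", "17"):
        print(v, support_status(v))
```

Java 8 needs its own pattern because its version string keeps the legacy `1.x` prefix, while Java 9 and later start with the feature release number.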

also cc @Yikun

Some info FYI:

Above all, I personally think that unless we see Java 11 having advantages in most scenarios in Apache Spark, users should, for now, choose the appropriate JDK among 8, 11 and 17 according to their own situation.

To clarify, the NUMA binding is done through the capabilities of the container, not through the capabilities of Java 11.


The Apache Spark community still officially supports the Hadoop 2.7.4 distribution. What do you think about that, @LuciferYang?

Is this question related to the current PR, or is it a separate question? Is there any bad case with

@dongjoon-hyun 20% of the SQL jobs in our production environment use "Spark 3.1 (or Spark 3.2) + Hadoop 2.7.3 + Java 11" on the ARM architecture, and no fatal problems have been found in the last 8 months. However, our production environment is not strongly dependent on Hadoop, so I may have missed some fatal cases.

Yes, it is related, because this PR is about a general recommendation for Spark, not a specific distribution.

I want to confirm that we didn't miss anything there. So, what about

@dongjoon-hyun Java 17 has not been used in our production environment, but for the scenarios mentioned in the PR description, the performance with Java 11 and 17 is clearly better than with Java 8. Maybe @wangyum has some Java 17 performance data from a production environment that can be shared?

Just out of curiosity: are we already using Java 11 to run tests in GitHub Actions?

Currently, Java 11/17 only have daily tests. For each PR, there is only a build, no tests, am I right? @HyukjinKwon

If this is at all controversial, let's just not make this change.

yup.

The performance issue is fixed by JDK-8159720.

It seems to be fixed only in Java 9+?

What changes were proposed in this pull request?

This PR suggests using Java 11/17 as the runtime of Spark in `index.md`.

Why are the changes needed?

The JIT optimizer in Java 11/17 is better than the one in Java 8, so running Spark on Java 11/17 brings some performance benefits.

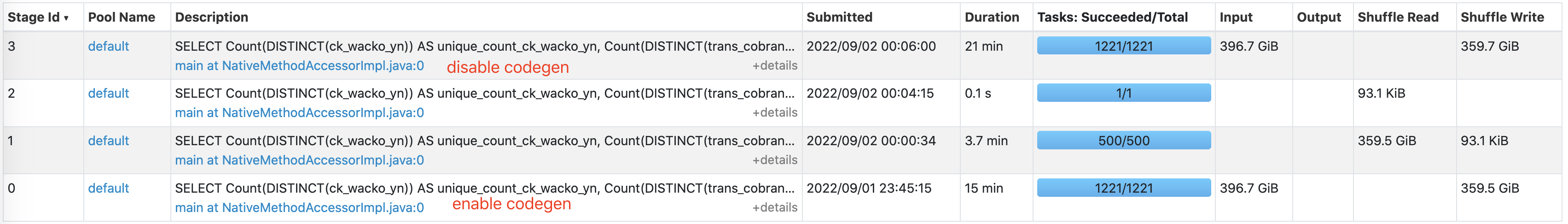

Spark uses

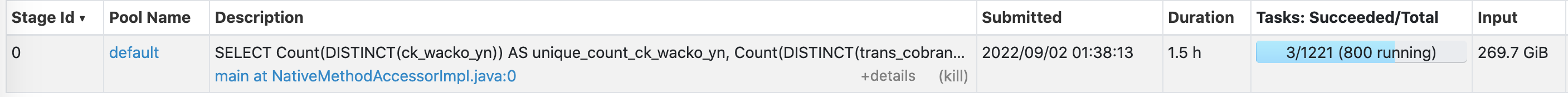

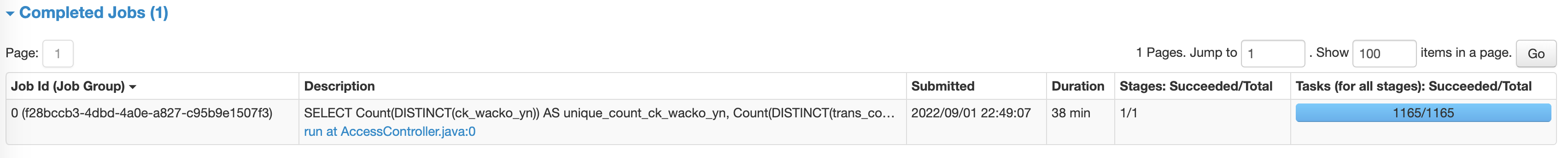

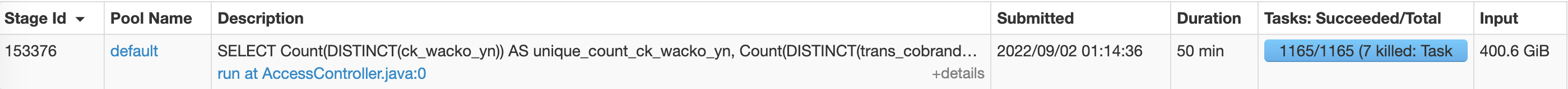

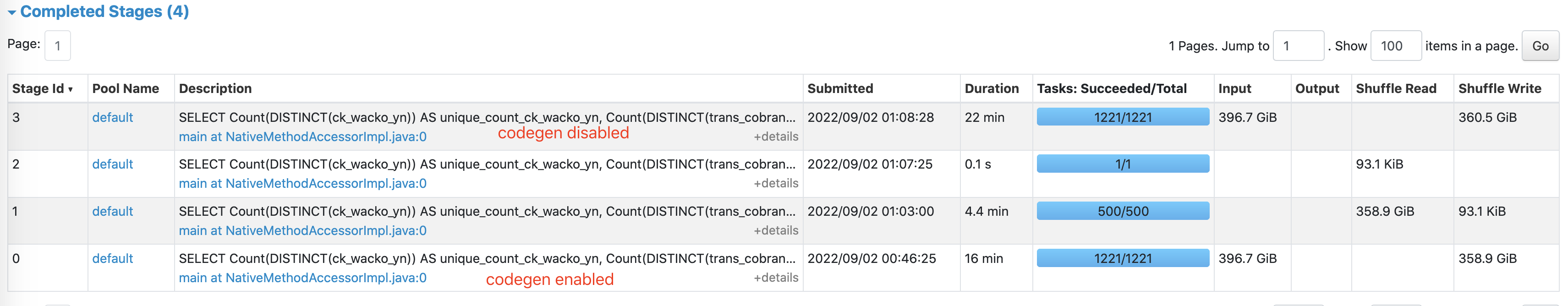

Whole Stage Code Gento improve performance, but the generated code may not be friendly to the JIT optimizer. For example, the case mentioned in SPARK-40303 by @wangyum :When use Java 8 to run the above case in

local[2]mode, there will be obvious negative effects whencntis 35, 40, 50, 60 or 70 withcodegenenabled. (the performance aftercnt > 70is meet expectations due to fallback with InternalCompilerException: Code grows beyond 64 KB):But run the above cases using Java 11 or Java 17, there will be no obvious negative effects:

Java 11

Java 17
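The `cnt > 70` behaviour above comes from a fallback. Once a generated method no longer fits the JVM's 64 KB bytecode limit, compilation fails with `Code grows beyond 64 KB` and the stage runs without whole-stage codegen. The toy model below only illustrates that control flow. `compile_generated`, `CodeGrowsBeyond64KB`, `choose_execution_path` and the `fallback_enabled` flag are hypothetical stand-ins, not Spark internals.

```python
# Toy model only: a JVM method's bytecode must be smaller than 64 KB (65536 bytes).
JVM_METHOD_LIMIT = 64 * 1024


class CodeGrowsBeyond64KB(Exception):
    """Stand-in for the compiler error raised when a generated method is too large."""


def compile_generated(bytecode_size: int) -> str:
    """Hypothetical compiler: succeeds only if the method fits the JVM limit."""
    if bytecode_size >= JVM_METHOD_LIMIT:
        raise CodeGrowsBeyond64KB(f"Code grows beyond 64 KB ({bytecode_size} bytes)")
    return "codegen"


def choose_execution_path(bytecode_size: int, fallback_enabled: bool = True) -> str:
    """Use generated code when it compiles, otherwise fall back if allowed."""
    try:
        return compile_generated(bytecode_size)
    except CodeGrowsBeyond64KB:
        if not fallback_enabled:
            raise
        # Oversized stages run without whole-stage codegen instead.
        return "fallback"


if __name__ == "__main__":
    for size in (10_000, 65_535, 65_536):
        print(size, choose_execution_path(size))
```

The model deliberately says nothing about how the JIT treats generated methods below the limit. That is exactly where the Java 8 slowdowns for `cnt` between 35 and 70 appear.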

After turning on the `-XX:+PrintCompilation` option, we can find log lines related to the C2 compiler failing to compile the `operatorName_doConsume` methods. When using Java 8, these failures are marked as `not retryable`. This indicates that the compiler decided the method should not be compiled again at any tier of compilation. Because this is an OSR compilation (i.e. loop compilation), the method is marked as "never try to perform OSR compilation again on any tier". When using Java 11/17, the failures are marked as `retry at different tier`, so tier 1 is tried instead, which keeps the performance of the above cases acceptable.

So this PR suggests using Java 11/17 as the runtime environment of Spark in the documentation.
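To look for the same symptom in other runs, the `-XX:+PrintCompilation` output can be scanned for `COMPILE SKIPPED` lines and grouped by the retry hint described above (`not retryable` vs `retry at different tier`). This is a minimal sketch. It assumes each failure line contains the method as `Class::method`, an ` @ <bci>` suffix for OSR compilations, and the retry hint in trailing parentheses. The column layout differs between JDK versions, so the sample lines are illustrative and not copied from a real log. `scan_compile_failures`, `CompileFailure` and the class name in the samples are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Optional

# One -XX:+PrintCompilation failure line (layout assumed, see lead-in).
FAILURE_LINE = re.compile(
    r"(?P<method>\S+::\S+)"             # method printed as Class::method
    r"(?P<osr> @ \d+)?"                 # OSR compilations append " @ <bci>"
    r".*COMPILE SKIPPED: (?P<reason>.*?)"
    r"(?: \((?P<retry>not retryable|retry at different tier)\))?\s*$"
)


@dataclass
class CompileFailure:
    method: str
    osr: bool                   # True for on-stack-replacement (loop) compilations
    reason: str
    retryable: Optional[bool]   # True: retried at another tier; None: no hint printed


def scan_compile_failures(lines: Iterable[str], name_filter: str = "doConsume") -> List[CompileFailure]:
    """Collect skipped compilations whose method name contains `name_filter`."""
    failures = []
    for line in lines:
        m = FAILURE_LINE.search(line)
        if m is None or name_filter not in m.group("method"):
            continue
        retry = m.group("retry")
        failures.append(CompileFailure(
            method=m.group("method"),
            osr=m.group("osr") is not None,
            reason=m.group("reason"),
            retryable=None if retry is None else retry == "retry at different tier",
        ))
    return failures


# Illustrative lines only; not copied from a real log.
SAMPLE = [
    "  5012 1234 %     4       GeneratedIterator::operatorName_doConsume_0 @ 52 (2100 bytes)"
    "   COMPILE SKIPPED: example reason (not retryable)",
    "  5013 1240 %     4       GeneratedIterator::operatorName_doConsume_0 @ 52 (2100 bytes)"
    "   COMPILE SKIPPED: example reason (retry at different tier)",
    "  5020 1250       3       java.lang.String::hashCode (55 bytes)",
]

if __name__ == "__main__":
    for failure in scan_compile_failures(SAMPLE):
        print(failure)
```

Matching only on `COMPILE SKIPPED` and the parenthesised hint, rather than on fixed columns, keeps the scan tolerant of layout differences between Java 8 and Java 11/17 logs.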

Does this PR introduce any user-facing change?

No

How was this patch tested?

Documentation change only.