
[SPARK-40739][SPARK-40738] Fixes for cygwin/msys2/mingw sbt build and bash scripts #38228


RESOURCE_DIR="$1"
mkdir -p "$RESOURCE_DIR"
-SPARK_BUILD_INFO="${RESOURCE_DIR}"/spark-version-info.properties
+SPARK_BUILD_INFO="${RESOURCE_DIR%/}"/spark-version-info.properties


Is the "%" Windows-specific? This script isn't.

The change is portable. It removes a trailing slash, if present, to avoid having two consecutive slashes.
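To illustrate the point, here is a minimal sketch of how `%/` behaves in any POSIX shell. The function name `build_info_path` and the sample directory names are made up for this example and are not part of `build/spark-build-info`.

```bash
#!/bin/sh
# Hypothetical helper, for illustration only (not in the Spark script).
build_info_path() {
  resource_dir="$1"
  # "${var%/}" removes the shortest suffix matching "/", i.e. one trailing
  # slash if there is one. This is POSIX parameter expansion, not a Windows feature.
  printf '%s\n' "${resource_dir%/}/spark-version-info.properties"
}

# With or without a trailing slash, the result has a single separator.
build_info_path "target/extra-resources"
build_info_path "target/extra-resources/"
```

Both calls should print the same path, so the change only matters when the caller passes a directory that already ends in a slash.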

…On Thu, Oct 13, 2022, 07:11 Sean Owen ***@***.***> wrote:

***@***.**** commented on this pull request.

------------------------------

In build/spark-build-info

<#38228 (comment)>:

> @@ -24,7 +24,7 @@

RESOURCE_DIR="$1"

mkdir -p "$RESOURCE_DIR"

-SPARK_BUILD_INFO="${RESOURCE_DIR}"/spark-version-info.properties

+SPARK_BUILD_INFO="${RESOURCE_DIR%/}"/spark-version-info.properties

Is the "%" Windows-specific? this script isn't.


Looks fine, though we may need to investigate the test failure to see if it's related.

Can one of the admins verify this patch?

@vitaliili-db is this error something you faced before? (https://github.com/philwalk/spark/actions/runs/3237799999/jobs/5317149214) It is about write permission within your fork.

@HyukjinKwon Yes, this is the exact error I encountered. Solved by going to my fork Here is a blog post about it: https://seekdavidlee.medium.com/how-to-fix-post-repos-check-runs-403-error-on-github-action-workflow-f2c5a9bb67d

cc @Yikun FYI

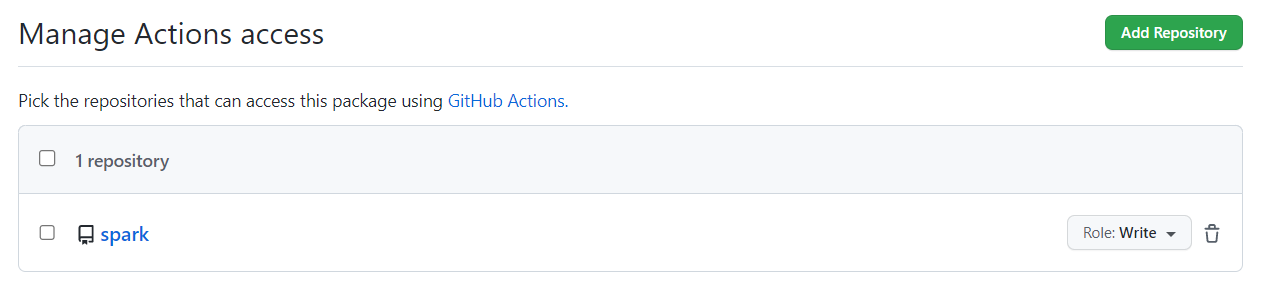

https://github.com/users/philwalk/packages/container/package/apache-spark-ci-image I just saw that the local image was generated successfully once before, but now it does not show under

@vitaliili-db Did you set any permissions manually before? I just tried forking another Apache project, and the default permission is

The

Okay, I was able to add

Seems OK now

object Core {
  import scala.sys.process.Process
  def buildenv = Process(Seq("uname")).!!.trim.replaceFirst("[^A-Za-z0-9].*", "").toLowerCase
  def bashpath = Process(Seq("where", "bash")).!!.split("[\r\n]+").head.replace('\\', '/')


You could inline this where used below, but not sure it matters


I originally had it inline, but it was not as readable, so I pulled it out.
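For readers less familiar with the Scala one-liner under discussion, the same three steps (split the output on line endings, keep the first entry, turn backslashes into forward slashes) can be sketched in POSIX shell. This is only an illustration: the function name `first_path_with_slashes` and the sample paths are assumptions, and the input is simulated rather than taken from a real `where bash` call.

```bash
#!/bin/sh
# Hypothetical shell counterpart of the bashpath helper, for illustration only.
first_path_with_slashes() {
  # stdin: candidate paths, one per line, possibly CRLF-terminated.
  # 1. drop carriage returns, 2. keep the first line,
  # 3. convert every backslash to a forward slash.
  tr -d '\r' | head -n 1 | tr '\\' '/'
}

# Simulated output listing two bash executables (made-up locations).
printf 'C:\\cygwin64\\bin\\bash.exe\r\nC:\\Windows\\System32\\bash.exe\r\n' |
  first_path_with_slashes
```

Taking the first line mirrors `.head` in the Scala version, so whichever `bash` is listed first is the one used.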

Merged to master

… bash scripts

This fixes two problems that affect development in a Windows shell environment, such as `cygwin` or `msys2`.

### The fixed build error

Running `./build/sbt packageBin` from a Windows cygwin `bash` session fails.

This occurs if `WSL` is installed, because `project\SparkBuild.scala` creates a `bash` process, but `WSL bash` is called, even though `cygwin bash` appears earlier in the `PATH`. In addition, file path arguments to bash contain backslashes. The fix is to ensure that the correct `bash` is called, and that arguments passed to `bash` are passed with slashes rather than **backslashes.**

### The build error message:

```bash
./build/sbt packageBin
```

<pre>
[info] compiling 9 Java sources to C:\Users\philwalk\workspace\spark\common\sketch\target\scala-2.12\classes ...
/bin/bash: C:Usersphilwalkworkspacesparkcore/../build/spark-build-info: No such file or directory
[info] compiling 1 Scala source to C:\Users\philwalk\workspace\spark\tools\target\scala-2.12\classes ...
[info] compiling 5 Scala sources to C:\Users\philwalk\workspace\spark\mllib-local\target\scala-2.12\classes ...
[info] Compiling 5 protobuf files to C:\Users\philwalk\workspace\spark\connector\connect\target\scala-2.12\src_managed\main
[error] stack trace is suppressed; run last core / Compile / managedResources for the full output
[error] (core / Compile / managedResources) Nonzero exit value: 127
[error] Total time: 42 s, completed Oct 8, 2022, 4:49:12 PM
sbt:spark-parent>
sbt:spark-parent> last core /Compile /managedResources
last core /Compile /managedResources
[error] java.lang.RuntimeException: Nonzero exit value: 127
[error]         at scala.sys.package$.error(package.scala:30)
[error]         at scala.sys.process.ProcessBuilderImpl$AbstractBuilder.slurp(ProcessBuilderImpl.scala:138)
[error]         at scala.sys.process.ProcessBuilderImpl$AbstractBuilder.$bang$bang(ProcessBuilderImpl.scala:108)
[error]         at Core$.$anonfun$settings$4(SparkBuild.scala:604)
[error]         at scala.Function1.$anonfun$compose$1(Function1.scala:49)
[error]         at sbt.internal.util.$tilde$greater.$anonfun$$u2219$1(TypeFunctions.scala:62)
[error]         at sbt.std.Transform$$anon$4.work(Transform.scala:68)
[error]         at sbt.Execute.$anonfun$submit$2(Execute.scala:282)
[error]         at sbt.internal.util.ErrorHandling$.wideConvert(ErrorHandling.scala:23)
[error]         at sbt.Execute.work(Execute.scala:291)
[error]         at sbt.Execute.$anonfun$submit$1(Execute.scala:282)
[error]         at sbt.ConcurrentRestrictions$$anon$4.$anonfun$submitValid$1(ConcurrentRestrictions.scala:265)
[error]         at sbt.CompletionService$$anon$2.call(CompletionService.scala:64)
[error]         at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)
[error]         at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515)
[error]         at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)
[error]         at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)
[error]         at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)
[error]         at java.base/java.lang.Thread.run(Thread.java:834)
[error] (core / Compile / managedResources) Nonzero exit value: 127
</pre>

### bash scripts fail when run from `cygwin` or `msys2`

The other fix in this PR addresses problems preventing the `bash` scripts (`spark-shell`, `spark-submit`, etc.) from being used in Windows `SHELL` environments.

The problem is that the bash version of `spark-class` fails in a Windows shell environment. This is the result of `launcher/src/main/java/org/apache/spark/launcher/Main.java` not following the convention expected by `spark-class`, and also appending CR to line endings. The resulting error message is not helpful.

There are two parts to this fix:
1. modify `Main.java` to treat a `SHELL` session on Windows as a `bash` session
2. remove the appended CR character when parsing the output produced by `Main.java`

### Does this PR introduce _any_ user-facing change?

These changes should NOT affect anyone who is not trying to build or run bash scripts from a Windows SHELL environment.

### How was this patch tested?

Manual tests were performed to verify both changes.

### related JIRA issues

The following 2 JIRA issues were created. Both are fixed by this PR. They are both linked to this PR.
- Bug SPARK-40739 "sbt packageBin" fails in cygwin or other windows bash session
- Bug SPARK-40738 spark-shell fails with "bad array"

Closes apache#38228 from philwalk/windows-shell-env-fixes.

Authored-by: Phil <philwalk9@gmail.com>
Signed-off-by: Sean Owen <srowen@gmail.com>
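The second part of the launcher fix, removing the appended CR before the output is parsed, can be sketched as follows. This is not the code from `spark-class`; the function name `strip_cr` and the simulated launcher output are assumptions made for the example, which only shows why a stray CR matters and one portable way to drop it.

```bash
#!/bin/sh
# Hypothetical helper, for illustration only (not taken from spark-class).
strip_cr() {
  # Delete every carriage return so CRLF-terminated lines become LF-terminated.
  tr -d '\r'
}

# Simulated CRLF output standing in for what a JVM on Windows might print.
# Without strip_cr, each value read below would end in an invisible CR.
printf 'java\r\n-cp\r\nexample.jar\r\n' | strip_cr | while IFS= read -r arg; do
  printf '[%s]\n' "$arg"
done
```

Bracketing each value makes a leftover CR visible, since it would otherwise move the cursor back and overwrite the start of the line.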


This fixes two problems that affect development in a Windows shell environment, such as cygwin or msys2.

The fixed build error

Running ./build/sbt packageBin from a Windows cygwin bash session fails.

This occurs if WSL is installed, because project\SparkBuild.scala creates a bash process, but WSL bash is called, even though cygwin bash appears earlier in the PATH. In addition, file path arguments to bash contain backslashes. The fix is to ensure that the correct bash is called, and that arguments passed to bash are passed with slashes rather than backslashes.

The build error message:

bash scripts fail when run from cygwin or msys2

The other fix in this PR addresses problems preventing the bash scripts (spark-shell, spark-submit, etc.) from being used in Windows SHELL environments.

The problem is that the bash version of spark-class fails in a Windows shell environment. This is the result of launcher/src/main/java/org/apache/spark/launcher/Main.java not following the convention expected by spark-class, and also appending CR to line endings. The resulting error message is not helpful.

There are two parts to this fix:

1. modify Main.java to treat a SHELL session on Windows as a bash session
2. remove the appended CR character when parsing the output produced by Main.java

Does this PR introduce any user-facing change?

These changes should NOT affect anyone who is not trying to build or run bash scripts from a Windows SHELL environment.

How was this patch tested?

Manual tests were performed to verify both changes.

related JIRA issues

The following 2 JIRA issues were created. Both are fixed by this PR. They are both linked to this PR.