[SPARK-40910][SQL] Replace UnsupportedOperationException with SparkUnsupportedOperationException #38387

    parseAndResolve(query)
  },
  errorClass = "_LEGACY_ERROR_TEMP_2067",
  parameters = Map("transform" -> transform))

In fact, it throws SparkUnsupportedOperationException.

cc @MaxGekk

itholic left a comment

LGTM once CI passes.

    "Not enough memory to build and broadcast the table to all worker nodes. As a workaround, you can either disable broadcast by setting <autoBroadcastjoinThreshold> to -1 or increase the spark driver memory by setting <driverMemory> to a higher value<analyzeTblMsg>"
  ]
},
"_LEGACY_ERROR_TEMP_2251" : {

Let's introduce an appropriate name while you are here.

Done.

Can one of the admins verify this patch?

    "Literal for '<value>' of <type>."
  ]
},
"MULTIPLE_BUCKET_TRANSFORM" : {

MULTIPLE_BUCKET_TRANSFORM -> MULTIPLE_BUCKET_TRANSFORMS

Done

},
"MULTIPLE_BUCKET_TRANSFORM" : {
  "message" : [
    "TRANSFORM on multiple bucket."

Multiple bucket TRANSFORMs

Done

MaxGekk left a comment

Waiting for CI.

+1, LGTM. Merging to master.

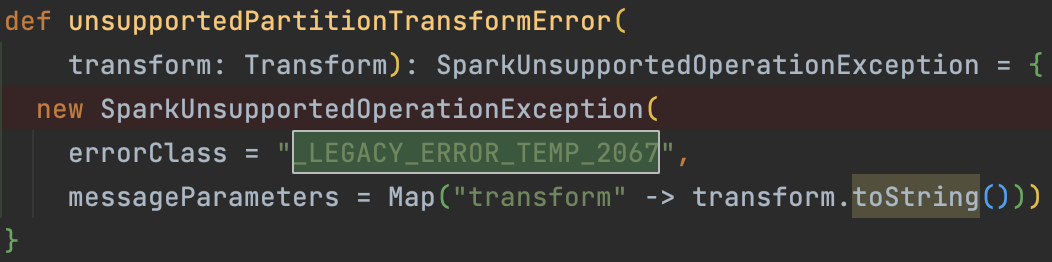

### What changes were proposed in this pull request?
This PR aims to replace UnsupportedOperationException with SparkUnsupportedOperationException.

### Why are the changes needed?
1. While working on https://issues.apache.org/jira/browse/SPARK-40889, I found that `QueryExecutionErrors.unsupportedPartitionTransformError` throws **UnsupportedOperationException** (not **SparkUnsupportedOperationException**), which does not seem to fit into the new error framework.
https://github.com/apache/spark/blob/a27b459be3ca2ad2d50b9d793b939071ca2270e2/sql/catalyst/src/main/scala/org/apache/spark/sql/connector/catalog/CatalogV2Implicits.scala#L71-L72

2. `QueryExecutionErrors.unsupportedPartitionTransformError` throws SparkUnsupportedOperationException, but the UT catches `UnsupportedOperationException`.
https://github.com/apache/spark/blob/a27b459be3ca2ad2d50b9d793b939071ca2270e2/sql/core/src/test/scala/org/apache/spark/sql/execution/command/PlanResolutionSuite.scala#L288-L301
https://github.com/apache/spark/blob/a27b459be3ca2ad2d50b9d793b939071ca2270e2/sql/catalyst/src/main/scala/org/apache/spark/sql/errors/QueryExecutionErrors.scala#L904-L909
https://github.com/apache/spark/blob/a27b459be3ca2ad2d50b9d793b939071ca2270e2/core/src/main/scala/org/apache/spark/SparkException.scala#L144-L154

### Does this PR introduce _any_ user-facing change?
No.

### How was this patch tested?
Existing UTs.

Closes apache#38387 from panbingkun/replace_UnsupportedOperationException.

Authored-by: panbingkun <pbk1982@gmail.com>
Signed-off-by: Max Gekk <max.gekk@gmail.com>

What changes were proposed in this pull request?

This PR aims to replace UnsupportedOperationException with SparkUnsupportedOperationException.

Why are the changes needed?

1. While working on https://issues.apache.org/jira/browse/SPARK-40889, I found that `QueryExecutionErrors.unsupportedPartitionTransformError` throws `UnsupportedOperationException` (not `SparkUnsupportedOperationException`), which does not seem to fit into the new error framework.

spark/sql/catalyst/src/main/scala/org/apache/spark/sql/connector/catalog/CatalogV2Implicits.scala

Lines 71 to 72 in a27b459

2. `QueryExecutionErrors.unsupportedPartitionTransformError` throws `SparkUnsupportedOperationException`, but the UT catches `UnsupportedOperationException`.

spark/sql/core/src/test/scala/org/apache/spark/sql/execution/command/PlanResolutionSuite.scala

Lines 288 to 301 in a27b459

spark/sql/catalyst/src/main/scala/org/apache/spark/sql/errors/QueryExecutionErrors.scala

Lines 904 to 909 in a27b459

spark/core/src/main/scala/org/apache/spark/SparkException.scala

Lines 144 to 154 in a27b459
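
Point 2 can be illustrated with a minimal, self-contained sketch. It assumes, as the previously passing unit test implies, that `SparkUnsupportedOperationException` is a subclass of the JDK `UnsupportedOperationException`. `DemoSparkUnsupportedOperationException`, `InterceptDemo`, and the helper names below are hypothetical stand-ins, not Spark's actual classes; only the error class `_LEGACY_ERROR_TEMP_2067` and the `transform` parameter are taken from the test diff in this PR.

```scala
// Hypothetical stand-in for SparkUnsupportedOperationException (assumption:
// the real class extends the JDK exception and carries an error class
// plus message parameters).
class DemoSparkUnsupportedOperationException(
    val errorClass: String,
    val messageParameters: Map[String, String])
  extends UnsupportedOperationException(
    s"[$errorClass] " +
      messageParameters.map { case (k, v) => s"$k=$v" }.mkString(", "))

object InterceptDemo {
  // Hypothetical helper shaped like an error-factory method: it builds the
  // exception and leaves the throwing to the caller.
  def unsupportedTransform(transform: String): Throwable =
    new DemoSparkUnsupportedOperationException(
      "_LEGACY_ERROR_TEMP_2067", Map("transform" -> transform))

  // Catching the JDK supertype succeeds, but says nothing about the
  // error class or its parameters.
  def caughtAsJdkType(transform: String): Boolean =
    try {
      throw unsupportedTransform(transform)
    } catch {
      case _: UnsupportedOperationException => true
    }

  // Catching the specific type exposes the error class and parameters,
  // so a test can assert on both.
  def caughtErrorClass(transform: String): (String, Map[String, String]) =
    try {
      throw unsupportedTransform(transform)
    } catch {
      case e: DemoSparkUnsupportedOperationException =>
        (e.errorClass, e.messageParameters)
    }
}
```

Under these assumptions, a test that catches only the supertype keeps passing even if the error class or its parameters change, whereas a test that catches the specific type can check them explicitly.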

Does this PR introduce any user-facing change?

No.

How was this patch tested?

Existing UTs.