This repository contains a Python notebook that uses Google Earth Engine (via its Python API) to preprocess Land Surface Temperature (LST) data for a machine learning project focused on wildfire prediction. LST measures the temperature of the Earth's surface, including soil, vegetation, and built-up areas. It is derived from satellite thermal infrared data and reflects the heat emitted from the ground. This thermal information helps assess how different surfaces interact with heat, a critical factor in determining wildfire risk.

This repo includes my own data collection and EDA for a group machine learning project. You can view the main project here.

To better predict how fires might behave in the future, we analyzed data from January 1, 2012 to June 30, 2024, focusing on the Indian state of Uttarakhand (you can modify the geographic region to suit your own research).

The analysis uses Google Earth Engine (GEE) to process the MODIS/061/MOD11A1 dataset. The data is clipped to any user-defined region using a shapefile, resampled to 500m resolution, and exported for further analysis.

The mean daytime LST file and the 2024 mean daytime and nighttime LST files are too large to include directly in this repository. You can view or download them from my DagsHub repository: Uttarakhand-Land-Surface-Temperature-LST-Analysis-Using-GEE

Before starting work on this project, ensure you have:

- A Google account with access to Google Colab

- A Google Earth Engine account with approved access

- A Google Cloud Console project set up

- The Google Drive API enabled (if planning to export results)

- Access to the project's shapefile assets

- Tested your GEE authentication in Colab

- Objective

- Requirements

- Setup Instructions

- Data Workflow

- How to Use

- Output

- Handling Task Queue Errors

- Further Exploration

- Data Visualization

- Glossary & Terms - definitions of key terms like LST and other technical concepts used in this project

This project provides a framework for analyzing daily LST data for any user-defined region. It is designed to:

- Extract daytime and nighttime LST data from the MODIS `MOD11A1` dataset

- Allow users to specify their region of interest (ROI) by uploading a shapefile

- Resample the data from 1 km to 500m resolution for finer-grained analysis (bilinear resampling interpolates between the native 1 km values; it does not add new measurements)

To reproduce the results, the following tools and libraries are required:

- A Google account with access to Google Earth Engine, Google Colab, and Google Drive

- Python libraries: `earthengine-api`, `geemap`

-

Register for Google Earth Engine:

- Visit Google Earth Engine and sign in with your Google account

- Apply for access (if not already approved)

-

Choose Your Account Type: For academic research purposes, select "Unpaid Usage":

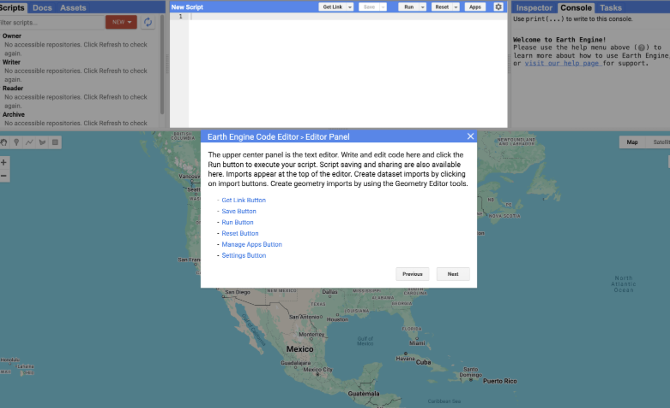

- Once approved, you'll see the Code Editor interface:

-

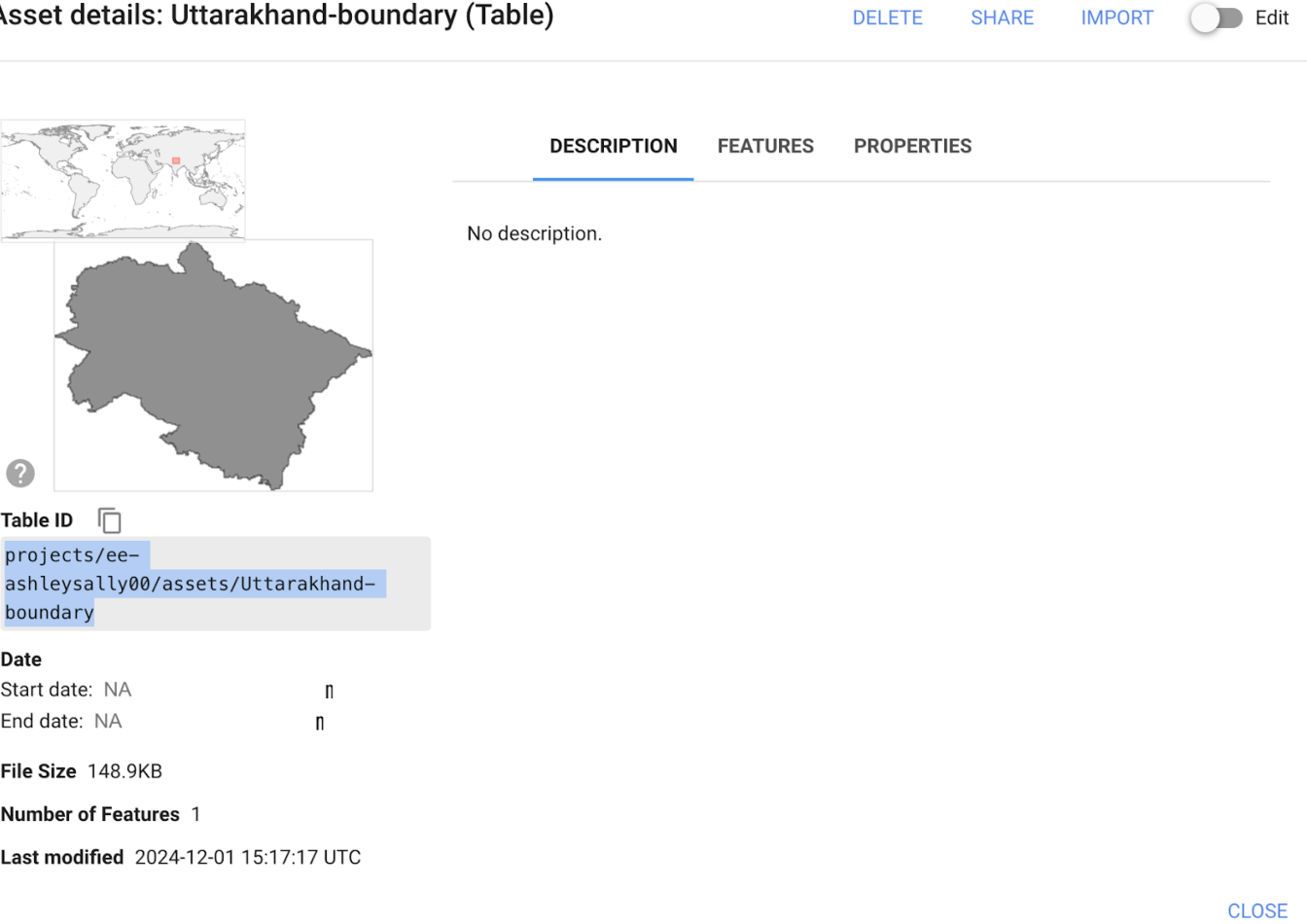

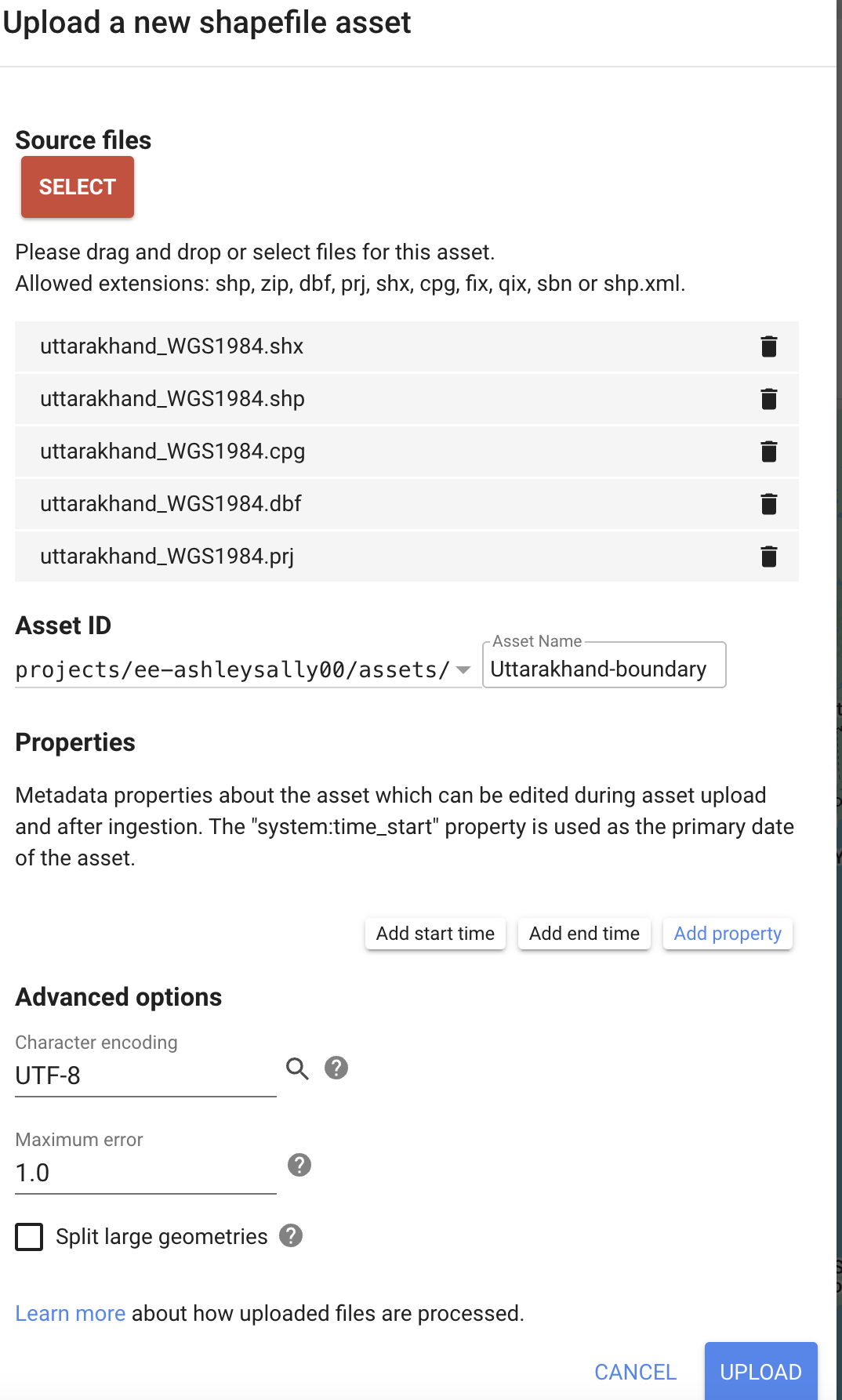

Upload a Shapefile:

-

Go to the Earth Engine Code Editor

-

In the Assets tab, click the "NEW" button and select "Shape files"

-

You'll see this upload interface:

-

- After successfully uploading, you'll see your shapefile in the Assets tab:

- Note the Asset ID for use in your code

-

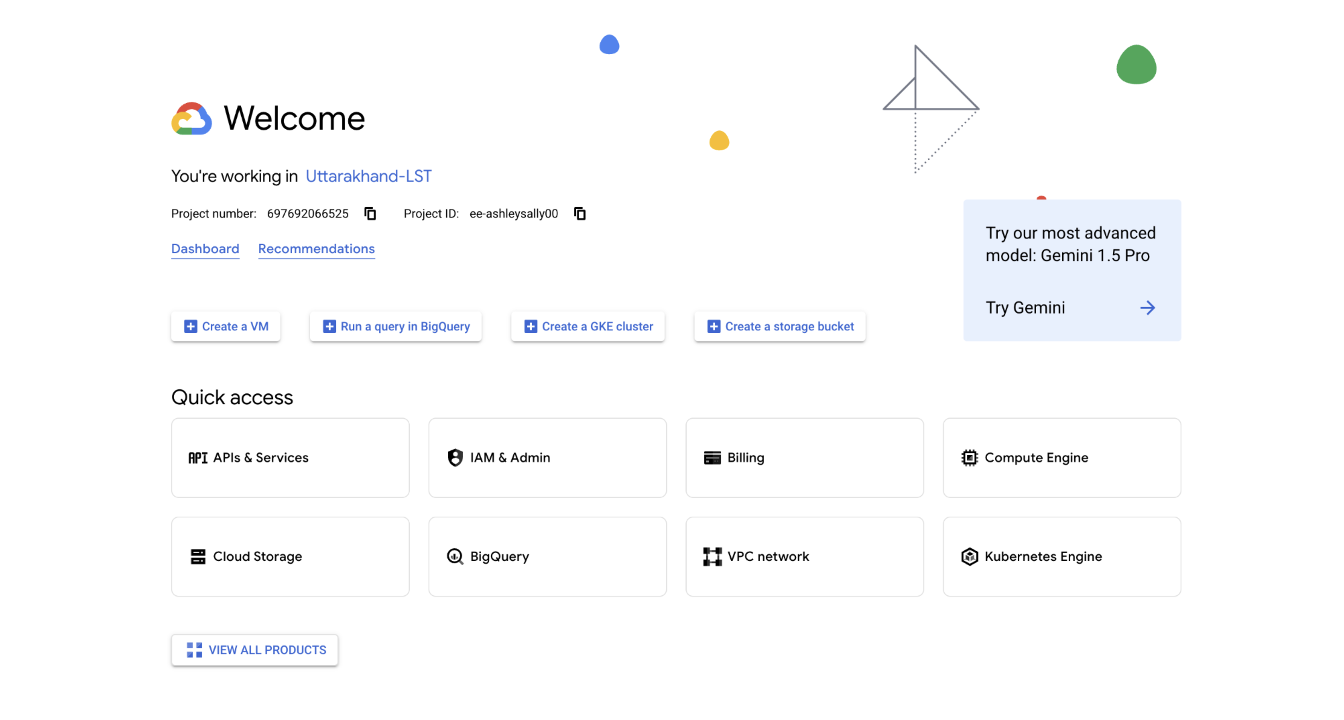

Create a Project:

- Go to Google Cloud Console

- Create a new project or select an existing one:


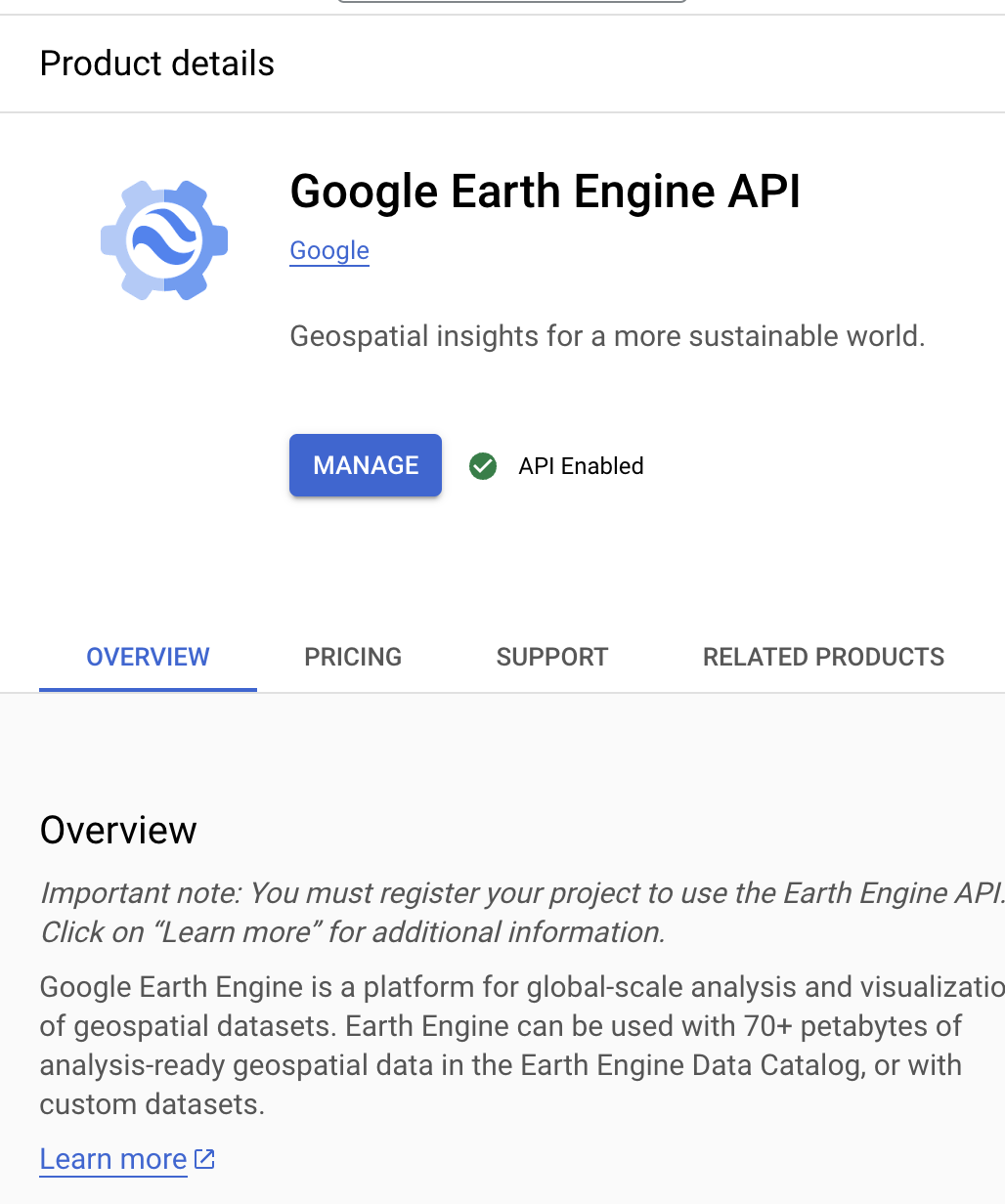

Enable Earth Engine API:

- In the APIs & Services > Library section, search for Earth Engine API

- You should see the following screen:

- Click "MANAGE" or "ENABLE" (if not already enabled)

- When successfully enabled, you'll see a green checkmark with "API Enabled" as shown in the image above

Optional: Enable Google Drive API:

- If exporting results to Google Drive, enable the Google Drive API

Open a new notebook in Colab

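Install required libraries:

```
!pip install earthengine-api geemap
```

Authenticate Earth Engine:

```python
import ee

ee.Authenticate()
# Recent versions of the Earth Engine API require a Cloud project ID here;
# use the project created in the Google Cloud Console step above
ee.Initialize(project="ee-your-project-id")
```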

Load MODIS Dataset:

- The `MODIS/061/MOD11A1` dataset is filtered for the user-specified time range

Clip to User-Defined ROI:

- The dataset is clipped to the boundary defined by the uploaded shapefile

Resample to 500m:

- The data is resampled from 1 km to 500m resolution using bilinear interpolation

Extract and Scale LST:

- Daytime (LST_Day_1km) and nighttime (LST_Night_1km) bands are extracted

- Data is scaled to Kelvin (value * 0.02)
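To make the scaling step concrete, here is a small pure-Python sketch of the conversion for a single raw pixel value. It assumes the 0.02 scale factor stated above and treats a raw value of 0 as "no data" (the fill value documented for the MOD11A1 LST bands). The helper names are illustrative, not part of the notebook.

```python
from typing import Optional

MODIS_LST_SCALE = 0.02       # raw digital number -> Kelvin (scale factor from the text above)
MODIS_LST_FILL = 0           # assumed fill value: no valid LST retrieval for this pixel
KELVIN_TO_CELSIUS = 273.15   # offset between Kelvin and degrees Celsius


def raw_to_kelvin(raw: int) -> Optional[float]:
    """Convert one raw MOD11A1 LST value to Kelvin, or None for the fill value."""
    if raw == MODIS_LST_FILL:
        return None
    return raw * MODIS_LST_SCALE


def kelvin_to_celsius(kelvin: float) -> float:
    """Convert a temperature from Kelvin to degrees Celsius."""
    return kelvin - KELVIN_TO_CELSIUS


# The equivalent server-side operations in Earth Engine would be
#   image.select('LST_Day_1km').multiply(0.02)                   # Kelvin
#   image.select('LST_Day_1km').multiply(0.02).subtract(273.15)  # degrees Celsius

if __name__ == "__main__":
    kelvin = raw_to_kelvin(15000)
    print(kelvin, round(kelvin_to_celsius(kelvin), 2))
```

Converting to Celsius is optional; the exports described in this README stay in Kelvin.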


Export:

- Mean LST for the time range or daily time-series data is exported as GeoTIFF or CSV to Google Drive
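As a rough illustration of these steps for the mean LST export, the sketch below expresses the workflow with the Earth Engine Python API. It assumes `ee.Authenticate()` and `ee.Initialize()` have already run and that `roi_asset_id` is your uploaded shapefile's Asset ID; the function names are hypothetical, and output names follow the `Mean_LST_Day.tif` / `Mean_LST_Night.tif` convention described under Output. One detail worth handling explicitly: `filterDate()` treats its end date as exclusive, so the sketch adds one day to an inclusive end date such as 2024-06-30.

```python
import datetime


def exclusive_end(inclusive_end: str) -> str:
    """Return the day after `inclusive_end` (YYYY-MM-DD), since filterDate() excludes its end date."""
    day = datetime.date.fromisoformat(inclusive_end)
    return (day + datetime.timedelta(days=1)).isoformat()


def export_mean_lst(roi_asset_id: str, start: str, inclusive_end: str,
                    folder: str = "EarthEngineExports"):
    """Start Drive exports of mean daytime and nighttime LST (Kelvin) for an ROI.

    Assumes Earth Engine is already authenticated and initialized.
    """
    import ee  # imported lazily so exclusive_end() works without Earth Engine installed

    roi = ee.FeatureCollection(roi_asset_id)
    collection = (
        ee.ImageCollection("MODIS/061/MOD11A1")
        .filterDate(start, exclusive_end(inclusive_end))
        .filterBounds(roi)
        .select(["LST_Day_1km", "LST_Night_1km"])
        # Use bilinear interpolation when 1 km pixels are sampled onto the 500 m export grid
        .map(lambda img: img.resample("bilinear"))
    )
    # Temporal mean (band names are kept), scaled from raw values to Kelvin, clipped to the ROI
    mean_kelvin = collection.mean().multiply(0.02).clip(roi)

    tasks = []
    for band, name in [("LST_Day_1km", "Mean_LST_Day"), ("LST_Night_1km", "Mean_LST_Night")]:
        task = ee.batch.Export.image.toDrive(
            image=mean_kelvin.select(band),
            description=name,
            folder=folder,
            fileNamePrefix=name,
            region=roi.geometry(),
            scale=500,
            crs="EPSG:4326",
            maxPixels=1e13,
        )
        task.start()
        tasks.append(task)
    return tasks
```

For example, `export_mean_lst("users/your_username/Region_Boundary", "2012-01-01", "2024-06-30")` would start two Drive export tasks covering the full study period.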

Open the Colab Notebook:

- Download the .ipynb file from the shared repository or run it directly in Colab

Update the Parameters:

- Replace `users/your_username/Region_Boundary` with your shapefile's Asset ID

- Specify the date range (e.g., 2012-01-01 to 2024-06-30)

Run the Notebook:

- Authenticate GEE and Google Drive when prompted

Download the Outputs:

- Access the exported files from your Google Drive folder (default: EarthEngineExports)

- The Colab notebook processes and exports the mean daytime and nighttime LST data for the entire time range (January 2012 to June 30, 2024).

- These GeoTIFF files summarize the average Land Surface Temperature for the given period.

- Accessing the Output:

- The files are saved in Google Drive under the folder `EarthEngineExports`.

- The filenames are `Mean_LST_Day.tif` (for the daytime mean) and `Mean_LST_Night.tif` (for the nighttime mean).

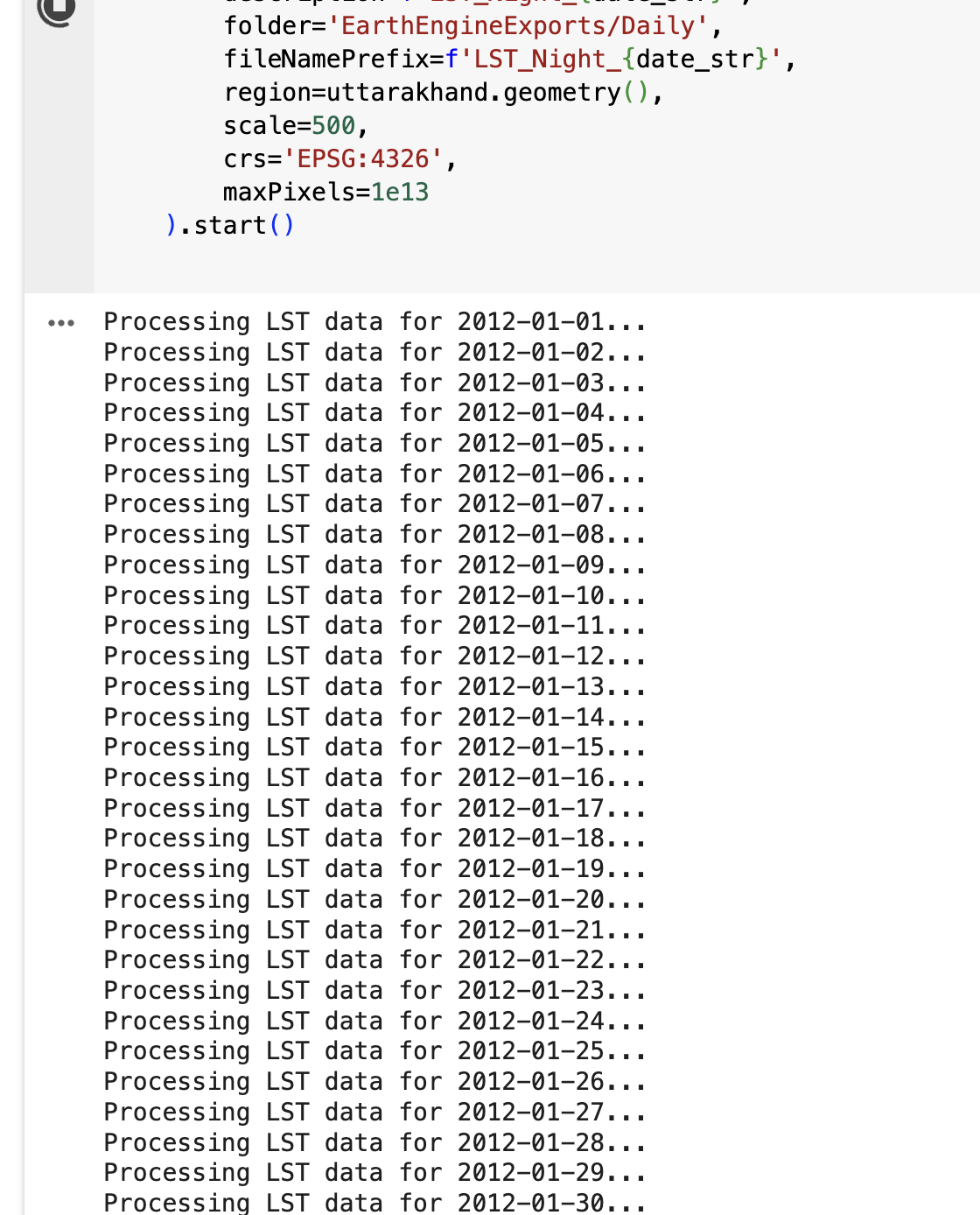

To extract daily LST data for the specified time range (January 2012 to June 30, 2024), additional Python code needs to be added to the Colab notebook. This processes and exports daily LST data as GeoTIFF files for both daytime and nighttime.


Google Drive Location:

- Daily LST outputs are saved in the folder `EarthEngineExports/Daily` in your Google Drive. (Earth Engine's Drive export treats a `folder` value containing `/` as a literal folder name rather than a nested path, so this may appear as a single top-level folder named `EarthEngineExports/Daily`.)

- Each file is named in the format:
  - `LST_Day_YYYY-MM-DD.tif` (e.g., `LST_Day_2012-01-01.tif` for daytime LST on January 1, 2012)
  - `LST_Night_YYYY-MM-DD.tif` (e.g., `LST_Night_2012-01-01.tif` for nighttime LST on January 1, 2012)
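After downloading the daily files, it can help to check for dates with no file (for example, days where an export failed). The sketch below assumes all files sit in one local folder and follow the naming format above exactly; files that Earth Engine split into tiles may carry extra numeric suffixes and would not match the pattern. `missing_daily_files` is a hypothetical helper, not part of the notebook.

```python
import datetime
import re
from pathlib import Path
from typing import Dict, List

# Matches names like LST_Day_2012-01-01.tif and LST_Night_2012-01-01.tif
DAILY_NAME = re.compile(r"^LST_(Day|Night)_(\d{4}-\d{2}-\d{2})\.tif$")


def missing_daily_files(folder, start: datetime.date,
                        end: datetime.date) -> Dict[str, List[datetime.date]]:
    """For each of Day/Night, list the dates in [start, end] with no matching GeoTIFF in `folder`."""
    found = {"Day": set(), "Night": set()}
    for path in Path(folder).glob("*.tif"):
        match = DAILY_NAME.match(path.name)
        if match:
            found[match.group(1)].add(datetime.date.fromisoformat(match.group(2)))

    n_days = (end - start).days + 1
    expected = [start + datetime.timedelta(days=i) for i in range(n_days)]
    return {kind: [d for d in expected if d not in dates] for kind, dates in found.items()}


# Example (hypothetical local folder):
#   gaps = missing_daily_files("Downloads/Daily", datetime.date(2012, 1, 1), datetime.date(2024, 6, 30))
#   print(len(gaps["Day"]), "daytime files missing")
```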

Processing Time:

- Exporting daily LST data for an extended time range (January 2012 to June 30, 2024) may take a while, because the code submits two export tasks (day and night) for every date in the range.

- Below is an example of the Colab output during the process:

Add the following Python code to the end of your Colab notebook to generate and export the daily LST data:

import datetime

import ee

# Authenticate and initialize Earth Engine (if not already done)

ee.Authenticate()

ee.Initialize()

# Load the MODIS LST dataset

modis = ee.ImageCollection("MODIS/061/MOD11A1")

# Load the Uttarakhand shapefile (replace with your shapefile's Asset ID)

uttarakhand = ee.FeatureCollection("projects/ee-your-project-id/assets/Uttarakhand-boundary")

# Generate a list of dates from 2012-01-01 to 2024-06-30

start_date = datetime.date(2012, 1, 1)

end_date = datetime.date(2024, 6, 30)

dates = [start_date + datetime.timedelta(days=i) for i in range((end_date - start_date).days + 1)]

# Iterate through each date and process LST data

for date in dates:

date_str = date.strftime('%Y-%m-%d')

print(f"Processing LST data for {date_str}...")

# Filter MODIS dataset for the specific date

daily_modis = modis.filterDate(date_str, (date + datetime.timedelta(days=1)).strftime('%Y-%m-%d'))

# Clip and resample to 500m

daily_modis_clipped = daily_modis.map(lambda img: img.clip(uttarakhand))

daily_modis_resampled = daily_modis_clipped.map(

lambda img: img.resample('bilinear').reproject(crs='EPSG:4326', scale=500)

)

# Extract Daytime and Nighttime LST

daily_lst_day = daily_modis_resampled.select('LST_Day_1km').mean().multiply(0.02).rename('LST_Day_K')

daily_lst_night = daily_modis_resampled.select('LST_Night_1km').mean().multiply(0.02).rename('LST_Night_K')

# Export daily data to Google Drive

ee.batch.Export.image.toDrive(

image=daily_lst_day,

description=f'LST_Day_{date_str}',

folder='EarthEngineExports/Daily',

fileNamePrefix=f'LST_Day_{date_str}',

region=uttarakhand.geometry(),

scale=500,

crs='EPSG:4326',

maxPixels=1e13

).start()

ee.batch.Export.image.toDrive(

image=daily_lst_night,

description=f'LST_Night_{date_str}',

folder='EarthEngineExports/Daily',

fileNamePrefix=f'LST_Night_{date_str}',

region=uttarakhand.geometry(),

scale=500,

crs='EPSG:4326',

maxPixels=1e13

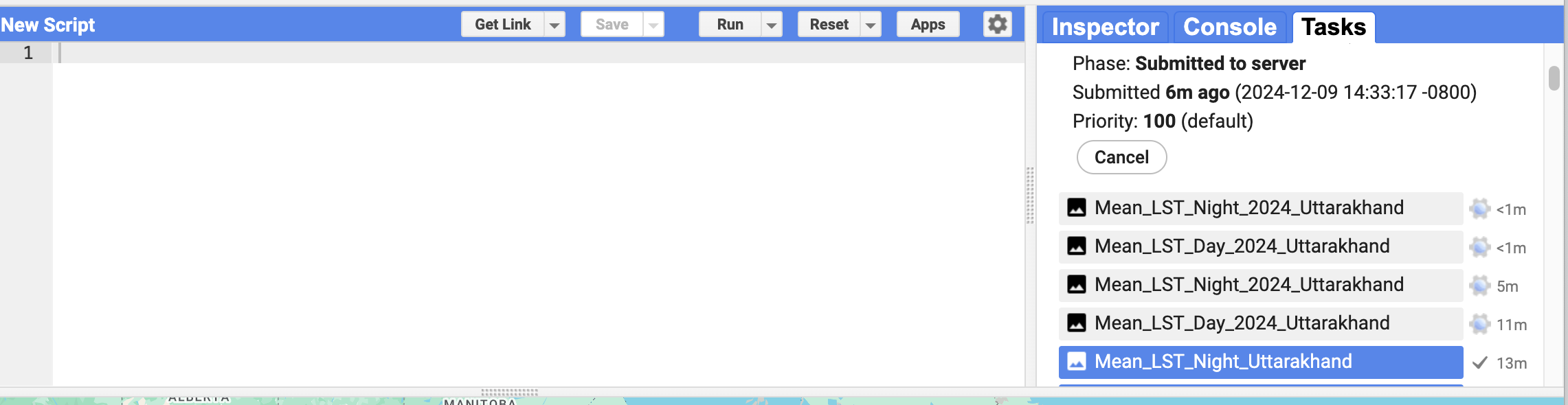

).start()When processing large datasets, it's helpful to monitor the progress of your export tasks in the Inspector Console of the Google Earth Engine Code Editor. After you run the code to export files, you can check the Tasks tab to see the status of each export. This allows you to:

- Confirm that the export has started.

- See the approximate time remaining for the export (if available).

- Identify and debug any errors if the task fails.

Below is an example of what the Tasks tab looks like while a task is running:

- Go to the Tasks tab in the Google Earth Engine Code Editor.

- Look for your export task(s). Each task will have:

- Phase: This shows whether the task is queued, running, or completed.

- Submitted Time: When the task was initiated.

- Estimated Time: The approximate time left for completion (if available).

- Wait for the task's status to change to Completed.

- Once completed, the exported file(s) can be found in the folder you specified in your code (e.g., `EarthEngineExports` in Google Drive).
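Tasks can also be checked from the notebook instead of the Code Editor. The sketch below assumes the Python API's `ee.batch.Task.list()` (recent tasks for the current user) and each task's `status()` dictionary with its `state` and `description` keys. `summarize_tasks` is a hypothetical helper that counts tasks whose description starts with `LST_`, matching the daily export descriptions above. Since `status()` makes one request per task, this may be slow with thousands of tasks.

```python
from collections import Counter
from typing import Dict, Iterable


def summarize_tasks(tasks: Iterable, prefix: str = "LST_") -> Dict[str, int]:
    """Count tasks by state (e.g. READY, RUNNING, COMPLETED, FAILED) for descriptions starting with prefix."""
    counts = Counter()
    for task in tasks:
        status = task.status()  # dict with keys such as 'state' and 'description'
        if status.get("description", "").startswith(prefix):
            counts[status.get("state", "UNKNOWN")] += 1
    return dict(counts)


# Usage in Colab, after ee.Initialize():
#   import ee
#   print(summarize_tasks(ee.batch.Task.list()))
```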

The following outputs are generated:

Mean LST (Day and Night):

- GeoTIFF files summarizing mean daytime and nighttime LST over the specified time range

Daily Time-Series:

- A stack of daily LST data as GeoTIFF or CSV files for analysis

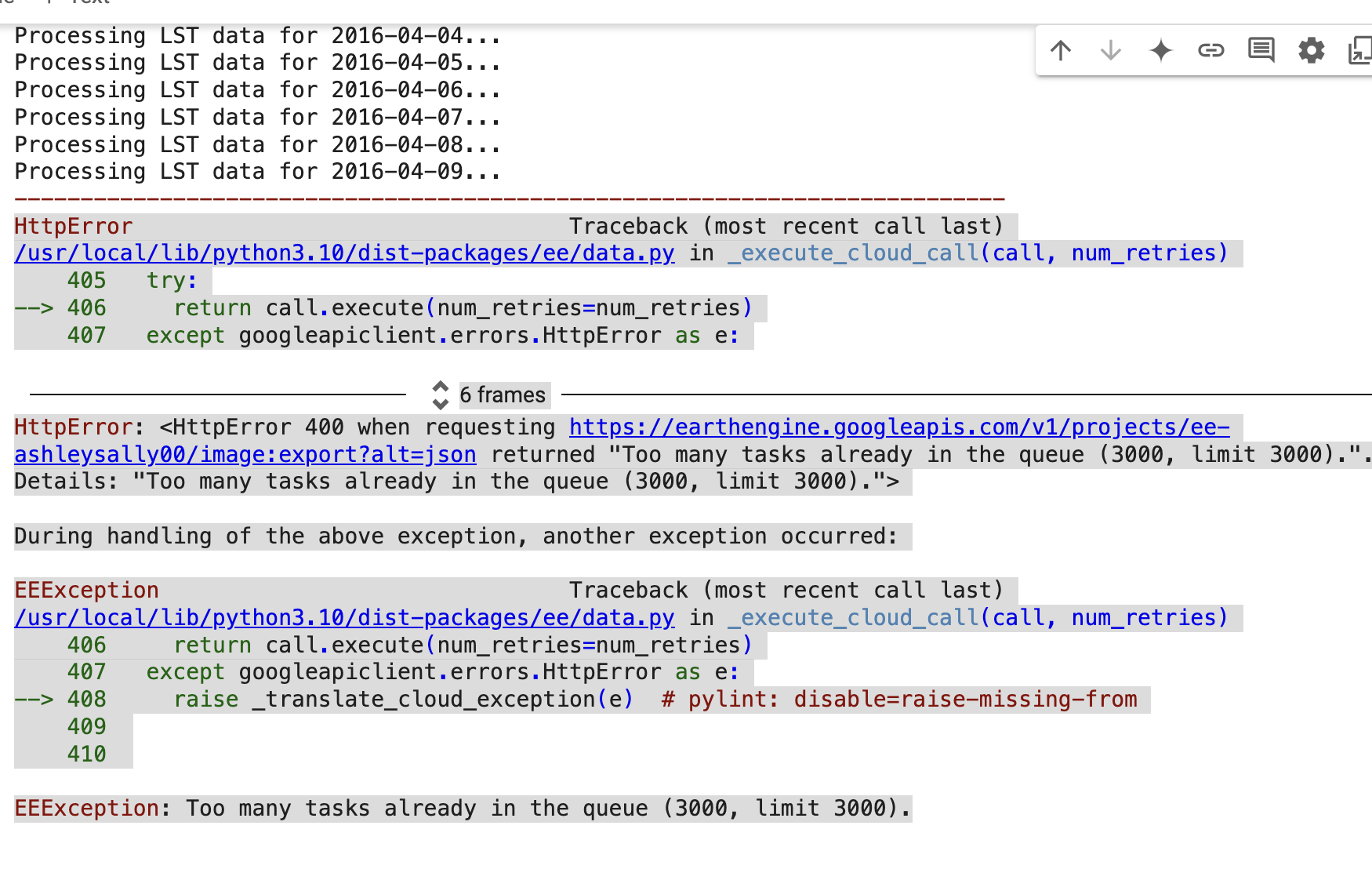

When processing and downloading large datasets such as daily LST data over a long time range, you may encounter the following error:

This error occurs because Google Earth Engine enforces a limit of 3000 queued tasks per user. When exporting data for each day across multiple years, the number of tasks can easily exceed this limit.
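A quick count shows why the full-range daily export runs into this limit: the loop submits one daytime and one nighttime task per date. `count_export_tasks` is an illustrative helper.

```python
import datetime

QUEUE_LIMIT = 3000  # per-user queued-task limit described above


def count_export_tasks(start: datetime.date, end: datetime.date, exports_per_day: int = 2) -> int:
    """Number of export tasks the daily loop submits for the inclusive range [start, end]."""
    n_days = (end - start).days + 1
    return n_days * exports_per_day


total = count_export_tasks(datetime.date(2012, 1, 1), datetime.date(2024, 6, 30))
print(total, total > QUEUE_LIMIT)  # prints: 9130 True
```

That is 4,565 days with two exports each, roughly three times the queue limit, while a single leap year produces only 732 tasks.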

Filter by Year and Process One Year at a Time

To avoid submitting too many tasks at once, you can filter the dataset to process and export data one year at a time:

```python
# Define the year to process
year = 2012
start_date = datetime.date(year, 1, 1)
end_date = datetime.date(year, 12, 31)  # for 2024, use datetime.date(2024, 6, 30) to match the study period
dates = [start_date + datetime.timedelta(days=i) for i in range((end_date - start_date).days + 1)]

# Iterate through each date and process LST data for the selected year
for date in dates:
    date_str = date.strftime('%Y-%m-%d')
    print(f"Processing LST data for {date_str}...")
    # ... the filtering, resampling, and export steps stay the same as in the daily export code
```
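One way to apply this without editing the year by hand is to split the full range into calendar-year chunks, clipping the first and last chunks to the study period. The sketch below uses hypothetical helpers (`yearly_ranges`, `dates_in`); you would run the export loop for one chunk, wait for its tasks to finish, and then move on to the next. Each chunk yields at most 732 tasks (366 days, two exports each), well under the 3000-task limit.

```python
import datetime
from typing import List, Tuple


def yearly_ranges(start: datetime.date, end: datetime.date) -> List[Tuple[datetime.date, datetime.date]]:
    """Split the inclusive range [start, end] into calendar-year (first_day, last_day) chunks."""
    chunks = []
    for year in range(start.year, end.year + 1):
        first = max(start, datetime.date(year, 1, 1))
        last = min(end, datetime.date(year, 12, 31))
        chunks.append((first, last))
    return chunks


def dates_in(first: datetime.date, last: datetime.date) -> List[datetime.date]:
    """All dates from first to last, inclusive."""
    return [first + datetime.timedelta(days=i) for i in range((last - first).days + 1)]


# Show each chunk and how many export tasks it would submit (two per date)
for first, last in yearly_ranges(datetime.date(2012, 1, 1), datetime.date(2024, 6, 30)):
    print(first, last, len(dates_in(first, last)) * 2, "tasks")
```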

Use Task Monitoring

To avoid overwhelming the task queue, modify the code to start each day's two export tasks and wait for both to finish before moving on to the next date. This keeps at most two of these tasks in the queue at a time, at the cost of a longer total run time:

```python
import time  # needed for time.sleep (add this at the top of the notebook)

# Inside the `for date in dates:` loop, replace the two export calls with:

# Export daily data to Google Drive with task monitoring
export_task_day = ee.batch.Export.image.toDrive(
    image=daily_lst_day,
    description=f'LST_Day_{date_str}',
    folder='EarthEngineExports/Daily',
    fileNamePrefix=f'LST_Day_{date_str}',
    region=uttarakhand.geometry(),
    scale=500,
    crs='EPSG:4326',
    maxPixels=1e13
)
export_task_day.start()

export_task_night = ee.batch.Export.image.toDrive(
    image=daily_lst_night,
    description=f'LST_Night_{date_str}',
    folder='EarthEngineExports/Daily',
    fileNamePrefix=f'LST_Night_{date_str}',
    region=uttarakhand.geometry(),
    scale=500,
    crs='EPSG:4326',
    maxPixels=1e13
)
export_task_night.start()

# Wait for both tasks to complete before starting the next iteration
while export_task_day.active() or export_task_night.active():
    print(f"Waiting for tasks to complete for {date_str}...")
    time.sleep(60)  # wait 60 seconds before checking again
```
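Waiting after every date limits throughput to two tasks at a time. An alternative, sketched below, is to keep a bounded number of tasks in flight: start tasks one after another and pause only when `max_active` of them are still active. `submit_throttled` is a hypothetical helper, not part of the notebook; it relies only on the `start()` and `active()` methods of `ee.batch.Task`. Each `active()` call is a separate request, so a modest `max_active` and a polling interval of about a minute keep status checks reasonable. The queue limit likely also counts tasks you started elsewhere, so leave headroom below 3000.

```python
import time
from typing import Callable, Iterable, List


def submit_throttled(tasks: Iterable, max_active: int = 200, poll_seconds: float = 60,
                     sleep: Callable[[float], None] = time.sleep) -> List:
    """Start tasks in order, pausing while `max_active` started tasks are still active.

    `tasks` can be any iterable of unstarted ee.batch.Task objects, e.g. a generator
    of ee.batch.Export.image.toDrive(...) calls. Returns the tasks still tracked as
    in flight after the last one was started.
    """
    in_flight = []
    for task in tasks:
        # Poll only when the cap is reached, to limit the number of status requests
        while len(in_flight) >= max_active:
            in_flight = [t for t in in_flight if t.active()]
            if len(in_flight) >= max_active:
                sleep(poll_seconds)
        task.start()
        in_flight.append(task)
    return in_flight


# Usage sketch: build the Export.image.toDrive(...) tasks lazily (for example in a
# generator over `dates`) and pass them here instead of calling .start() directly.
```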

- Large File Support: Handle files that are too large for GitHub

- Version Control for Data: Manage your data with DVC (Data Version Control) or Git

- Collaboration-Friendly: Integrates seamlessly with GitHub for efficient teamwork

- Go to DagsHub Sign Up and create an account if you don't already have one

- You can sign up using your GitHub or Google account, or by providing your email address

- Verify your email address to activate your account

- Once logged in, create a new repository for your project or use an existing one

- After exporting data from GEE, navigate to your Google Drive

- Find the folder with exported data. The files will typically have a `.tif` (GeoTIFF) file extension

- Download the `.tif` files to your local machine
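Before choosing between a plain Git push and DagsHub's large-file support, you can list which downloaded GeoTIFFs exceed GitHub's 100 MiB per-file limit. `oversized_files` is a hypothetical helper, and the folder is whatever directory you downloaded the files to.

```python
from pathlib import Path
from typing import List, Tuple

GITHUB_FILE_LIMIT = 100 * 1024 * 1024  # GitHub blocks files larger than 100 MiB


def oversized_files(folder, limit: int = GITHUB_FILE_LIMIT,
                    pattern: str = "*.tif") -> List[Tuple[str, float]]:
    """Return (file name, size in MiB) for files matching `pattern` that exceed `limit` bytes."""
    results = []
    for path in sorted(Path(folder).glob(pattern)):
        size = path.stat().st_size
        if size > limit:
            results.append((path.name, round(size / (1024 * 1024), 1)))
    return results


# Example (hypothetical local folder):
#   for name, mib in oversized_files("Downloads/EarthEngineExports"):
#       print(f"{name}: {mib} MiB, too large for a plain GitHub push")
```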

Connect Your GitHub Repository:

- Link your GitHub repository to DagsHub

- Synchronize files and changes between the two platforms

Using the Command Line Interface (CLI):

- Open your terminal or the integrated CLI in Visual Studio Code

- Navigate to the folder containing your `.tif` files

- Add, commit, and push your files to DagsHub:

```bash
git add your_file.tif
git commit -m "Add LST data from GEE"
git push origin main
```

DagsHub GUI:

- Log in to DagsHub and navigate to your repository

- Use the Upload button to add your `.tif` files

Note: Some file types may not upload properly through the GUI. For large files or unsupported formats, use the CLI.

If you'd like to delve deeper into the significance of this data and its role in wildfire prediction, explore the following resources:

- This resource includes a detailed glossary to clarify key terms and concepts relevant to the analysis.

Interview with a Wildfire PIO:

- Gain insights from a public information officer and volunteer firefighter about the importance of LST data in wildfire management.