
Add default source nodes rendering #661


| task_full_name = node.unique_id[len("source.") :] | ||

| task_id = f"{task_full_name}_source" | ||

| args["select"] = f"source:{node.unique_id[len('source.'):]}" | ||

| args["models"] = None |


@tatiana I have a feeling that these lines could be written somewhere else.

My dbt testing showed that `dbt source freshness --models [any]` fails, while using `--select` works. I ended up manually setting the `models` argument to `None`.

Any thoughts?
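One way to move this logic out of the rendering path is a small helper. The following is only a sketch, assuming a source node's `unique_id` always starts with `source.`; the function name `build_source_freshness_args` and the sample `unique_id` are hypothetical and not part of Cosmos:

```python
from typing import Any, Dict, Tuple

SOURCE_PREFIX = "source."


def build_source_freshness_args(
    unique_id: str, base_args: Dict[str, Any]
) -> Tuple[str, Dict[str, Any]]:
    """Return (task_id, args) for a `dbt source freshness` task (hypothetical helper)."""
    if not unique_id.startswith(SOURCE_PREFIX):
        raise ValueError(f"not a source node: {unique_id}")
    task_full_name = unique_id[len(SOURCE_PREFIX):]
    args = dict(base_args)  # copy so the caller's dict is not mutated
    # Per the testing described above, `dbt source freshness` works with
    # --select but fails with --models, so `models` is cleared.
    args["select"] = f"source:{task_full_name}"
    args["models"] = None
    return f"{task_full_name}_source", args


task_id, args = build_source_freshness_args(
    "source.jaffle_shop.raw.orders", {"models": "orders"}
)
print(task_id)
print(args["select"])
```

Keeping the prefix handling in one place avoids repeating `len("source.")` at each call site.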

| if node.has_freshness is False: | ||

| return TaskMetadata( | ||

| id=task_id, | ||

| # arguments=args, |


@tatiana Returning an EmptyOperator just seemed "grayish" in the DAG UI. Looking at all the cool Cosmos-related operators ("DbtLocal...", "Dbt...", etc.), having an EmptyOperator didn't seem to go with the "cosmos pattern". Should we create a dummySourceOperator that inherits from EmptyOperator, just for the sake of having all operators follow the same naming pattern?

| @@ -742,3 +742,15 @@ def __init__(self, **kwargs: str) -> None: | |||

| raise DeprecationWarning( | |||

| "The DbtDepsOperator has been deprecated. " "Please use the `install_deps` flag in dbt_args instead." | |||

| ) | |||


| class DbtSourceLocalOperator(DbtLocalBaseOperator): | |||


Hi @arojasb3, it looks like you've made great progress on this feature! Would you be able to rebase it so it can be reviewed and merged? Thanks!

<!--pre-commit.ci start--> updates: - [github.com/astral-sh/ruff-pre-commit: v0.1.3 → v0.1.4](astral-sh/ruff-pre-commit@v0.1.3...v0.1.4) <!--pre-commit.ci end--> Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>

(cherry picked from commit a6cea8f)

Bug fixes

* Support ProjectConfig.dbt_project_path = None & different paths for Rendering and Execution by @MrBones757 in astronomer#634
* Fix adding test nodes to DAGs built using LoadMethod.DBT_MANIFEST and LoadMethod.CUSTOM by @edgga in astronomer#615

Others

* Add pre-commit hook for McCabe max complexity check and fix errors by @jbandoro in astronomer#629
* Update contributing docs for running integration tests by @jbandoro in astronomer#638
* Fix CI issue running integration tests by @tatiana in astronomer#640 and astronomer#644
* pre-commit updates in astronomer#637

(cherry picked from commit fa0620a)

…ronomer#649) Adds a snowflake mapping for encrypted private key using an environment variable Closes: astronomer#632 Breaking Change? This does rename the previous SnowflakeEncryptedPrivateKeyFilePemProfileMapping to SnowflakeEncryptedPrivateKeyFilePemProfileMapping but this makes it clearer as a new SnowflakeEncryptedPrivateKeyPemProfileMapping is added which supports the env variable. Also was only released as a pre-release change

This allows you to fully refresh a model from the console.

Full-refresh/backfill is a common task. Using Airflow parameters makes

this easy. Without this, you'd have to trigger an entire deployment. In our

setup, company analysts manage their models without modifying

the DAG code. This empowers such users.

Example of usage:

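```python
from airflow import DAG
from airflow.models.param import Param
from cosmos import DbtTaskGroup

with DAG(
    dag_id="jaffle",
    # Boolean param that can be toggled when triggering the DAG from the UI
    params={"full_refresh": Param(default=False, type="boolean")},
    # Render the templated value as a native bool instead of a string
    render_template_as_native_obj=True,
):
    task = DbtTaskGroup(
        # Other DbtTaskGroup arguments are omitted here, as in the original example
        operator_args={"full_refresh": "{{ params.get('full_refresh') }}", "install_deps": True},
    )
```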

Closes: astronomer#151

…opagation if desired (astronomer#648) Add Airflow config check for cosmos/propagate_logs to allow override of default propagation behavior. Expose entry-point so that Airflow can theoretically detect configuration default. Closes astronomer#639 ## Breaking Change? This is backward-compatible as it falls back to default behavior if the `cosmos` section or `propagate_logs` option don't exist. ## Checklist - [X] I have made corresponding changes to the documentation (if required) - [X] I have added tests that prove my fix is effective or that my feature works --------- Co-authored-by: Andrew Greenburg <agreenburg@vergeventures.net>
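The fallback described above can be illustrated with the standard library. This is a sketch rather than the Cosmos implementation: it uses `configparser` as a stand-in for Airflow's configuration object, the helper name `get_logger` is hypothetical, and it assumes the default behavior is to propagate:

```python
import configparser
import logging


def get_logger(name: str, config: configparser.ConfigParser) -> logging.Logger:
    """Return a logger whose propagation follows [cosmos] propagate_logs."""
    logger = logging.getLogger(name)
    # If the `cosmos` section or the `propagate_logs` option is missing,
    # fall back to propagating (assumed default), which keeps old setups working.
    logger.propagate = config.getboolean("cosmos", "propagate_logs", fallback=True)
    return logger


default_config = configparser.ConfigParser()  # no [cosmos] section at all
override_config = configparser.ConfigParser()
override_config.read_string("[cosmos]\npropagate_logs = False\n")

default_logger = get_logger("cosmos.example.default", default_config)
quiet_logger = get_logger("cosmos.example.quiet", override_config)
print(default_logger.propagate, quiet_logger.propagate)
```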

If execution_config was reused, Cosmos 1.2.2 would raise:

```
astronomer-cosmos/dags/basic_cosmos_task_group.py
Traceback (most recent call last):
  File "/Users/tati/Code/cosmos-clean/astronomer-cosmos/venv-38/lib/python3.8/site-packages/airflow/models/dagbag.py", line 343, in parse
    loader.exec_module(new_module)
  File "<frozen importlib._bootstrap_external>", line 848, in exec_module
  File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed
  File "/Users/tati/Code/cosmos-clean/astronomer-cosmos/dags/basic_cosmos_task_group.py", line 74, in <module>
    basic_cosmos_task_group()
  File "/Users/tati/Code/cosmos-clean/astronomer-cosmos/venv-38/lib/python3.8/site-packages/airflow/models/dag.py", line 3817, in factory
    f(**f_kwargs)
  File "/Users/tati/Code/cosmos-clean/astronomer-cosmos/dags/basic_cosmos_task_group.py", line 54, in basic_cosmos_task_group
    orders = DbtTaskGroup(
  File "/Users/tati/Code/cosmos-clean/astronomer-cosmos/cosmos/airflow/task_group.py", line 26, in __init__
    DbtToAirflowConverter.__init__(self, *args, **specific_kwargs(**kwargs))
  File "/Users/tati/Code/cosmos-clean/astronomer-cosmos/cosmos/converter.py", line 113, in __init__
    raise CosmosValueError(
cosmos.exceptions.CosmosValueError: ProjectConfig.dbt_project_path is mutually exclusive with RenderConfig.dbt_project_path and ExecutionConfig.dbt_project_path.If using RenderConfig.dbt_project_path or ExecutionConfig.dbt_project_path, ProjectConfig.dbt_project_path should be None
```

This has been raised by an Astro customer and our field engineer, who

tried to run: https://github.com/astronomer/cosmos-demo

Adds the `aws_session_token` argument to Athena, which was added to dbt-athena 1.6.4 in dbt-labs/dbt-athena#459 Closes: astronomer#609 Also addresses this comment: astronomer#578 (comment)

…AL` (astronomer#659) Extends the local operator when running `dbt deps` with the provides profile flags. This makes the logic consistent between DAG parsing and task running as referenced below https://github.com/astronomer/astronomer-cosmos/blob/8e2d5908ce89aa98813af6dfd112239e124bd69a/cosmos/dbt/graph.py#L247-L266 Closes: astronomer#658

Since Cosmos 1.2.2, users who used `LoadMode.DBT_LS` (directly or via `LoadMode.AUTOMATIC`) and set `ExecutionConfig.dbt_executable_path` (most, if not all, Astro CLI users), like:

```
execution_config = ExecutionConfig(
    dbt_executable_path = f"{os.environ['AIRFLOW_HOME']}/dbt_venv/bin/dbt",
)
```

Started facing the issue:

```
Broken DAG: [/usr/local/airflow/dags/my_example.py] Traceback (most recent call last):
  File "/usr/local/lib/python3.11/site-packages/cosmos/dbt/graph.py", line 178, in load
    self.load_via_dbt_ls()
  File "/usr/local/lib/python3.11/site-packages/cosmos/dbt/graph.py", line 233, in load_via_dbt_ls
    raise CosmosLoadDbtException(f"Unable to find the dbt executable: {self.dbt_cmd}")
cosmos.dbt.graph.CosmosLoadDbtException: Unable to find the dbt executable: dbt
```

This issue was initially reported in the Airflow #airflow-astronomer

Slack channel:

https://apache-airflow.slack.com/archives/C03T0AVNA6A/p1699584315506629

The workaround to avoid this error in Cosmos 1.2.2 and 1.2.3 is to set

the `dbt_executable_path` in the `RenderConfig`:

```
render_config=RenderConfig(dbt_executable_path = f"{os.environ['AIRFLOW_HOME']}/dbt_venv/bin/dbt",),
```

This PR solves the bug from Cosmos 1.2.4 onwards.

<!--pre-commit.ci start--> updates: - [github.com/astral-sh/ruff-pre-commit: v0.1.4 → v0.1.5](astral-sh/ruff-pre-commit@v0.1.4...v0.1.5) - [github.com/psf/black: 23.10.1 → 23.11.0](psf/black@23.10.1...23.11.0) - [github.com/pre-commit/mirrors-mypy: v1.6.1 → v1.7.0](pre-commit/mirrors-mypy@v1.6.1...v1.7.0) <!--pre-commit.ci end--> Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com> Co-authored-by: Tatiana Al-Chueyr <tatiana.alchueyr@gmail.com>

astronomer#660) This PR refactors the `create_symlinks` function that was previously used in load via dbt ls so that it can be used in `DbtLocalBaseOperator.run_command` instead of copying the entire directory. Closes: astronomer#614
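The idea of linking instead of copying can be sketched as follows. This is an illustration only: the signature and the set of ignored entries are assumptions, not necessarily what the refactored `create_symlinks` in Cosmos does:

```python
import os
import tempfile
from pathlib import Path

# Assumption: entries that each run should own rather than share via a link.
IGNORED = {"logs", "target", "dbt_packages", "profiles.yml"}


def create_symlinks(project_path: Path, tmp_dir: Path) -> None:
    """Symlink each top-level entry of the dbt project into tmp_dir."""
    for entry in os.listdir(project_path):
        if entry not in IGNORED:
            os.symlink(project_path / entry, tmp_dir / entry)


with tempfile.TemporaryDirectory() as src, tempfile.TemporaryDirectory() as dst:
    src_path, dst_path = Path(src), Path(dst)
    (src_path / "dbt_project.yml").write_text("name: demo\n")
    (src_path / "models").mkdir()
    (src_path / "target").mkdir()  # ignored, so it is not linked
    create_symlinks(src_path, dst_path)
    linked = sorted(p.name for p in dst_path.iterdir())
    all_links = all(p.is_symlink() for p in dst_path.iterdir())
    print(linked, all_links)
```

Creating a handful of links costs roughly the same regardless of project size, whereas copying the directory grows with the number and size of files.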

…stronomer#671) Update `DbtLocalBaseOperator` code to store `compiled_sql` prior to exception handling so that when a task fails, the `compiled_sql` can still be reviewed. In the process found and fixed a related bug where `compiled_sql` was being dropped on some operations due to the way that the `full_refresh` field was being added to the `template_fields`. Closes astronomer#369 Fixes bug introduced in astronomer#623 where compiled_sql was being lost in `DbtSeedLocalOperator` and `DbtRunLocalOperator` Co-authored-by: Andrew Greenburg <agreenburg@vergeventures.net>

…stronomer#674) Add execution config to MWAA code example document. Closes: astronomer#667

Follow up from: astronomer#1055

…stronomer#1054) Using the Airflow metadata database connection as an example connection is misleading. The mismatch in the environment variable value used in the Cosmos integration tests, particularly with sqlite as the Airflow metadata database, is an issue that can hide other underlining problems. This PR decouples the test connection used by Cosmos example DAGs from the Airflow metadata Database connection. Since this change affects the Github action configuration, it will only work for the branch-triggered GH action runs, such as: https://github.com/astronomer/astronomer-cosmos/actions/runs/9596066209 Because this is a breaking change to the CI script itself, all the tests `pull_request_target` are expected to fail during the PR - and will pass once this is merged to `main`. This improvement was originally part of astronomer#1014 --------- Co-authored-by: Pankaj Koti <pankajkoti699@gmail.com>

As part of the CI build, we create a Python virtual environment with the dependencies necessary to run the tests. Currently, we recreate this environment every time a GitHub Actions job is run. This PR caches the folder where hatch stores the Python virtualenv. It seems to have reduced the run time a little, although the jobs are still very slow:

- Unit tests execution from [~2:40](https://github.com/astronomer/astronomer-cosmos/actions/runs/9550554350/job/26322778438) to [~2:25](https://github.com/astronomer/astronomer-cosmos/actions/runs/9598977261/job/26471650029)
- Integration tests execution from [~11:07](https://github.com/astronomer/astronomer-cosmos/actions/runs/9550554350/job/26322894839) to [~10:27](https://github.com/astronomer/astronomer-cosmos/actions/runs/9598977261/job/26471677561)

## Description ~shutil.copy includes permission copying via chmod. If the user lacks permission to run chmod, a PermissionError occurs. To avoid this, we split the operation into two steps: first, copy the file contents; then, copy metadata if feasible without raising exceptions. Step 1: Copy file contents (no metadata) Step 2: Copy file metadata (permission bits and other metadata) without raising exception~ use shutil.copyfile(...) instead of shutil.copy(...) to avoid running chmod ## Related Issue(s) closes: astronomer#1008 ## Breaking Change? No ## Checklist - [ ] I have made corresponding changes to the documentation (if required) - [ ] I have added tests that prove my fix is effective or that my feature works
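The difference between the two standard-library calls is that `shutil.copy` copies the contents and then the permission bits, while `shutil.copyfile` copies the contents only. A minimal sketch, with hypothetical file names:

```python
import os
import shutil
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "profiles.yml")
    dst = os.path.join(tmp, "profiles_copy.yml")
    with open(src, "w") as f:
        f.write("default:\n  target: dev\n")
    # copyfile copies only the file contents; it does not copy the
    # permission bits, so no chmod is attempted on the destination.
    shutil.copyfile(src, dst)
    with open(dst) as f:
        copied = f.read()

print(copied)
```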

Add the node's attributes (config, tags, etc, ...) into a TaskInstance context for retrieval by callback functions in Airflow through the use of `pre_execute` to store these attributes into a task's context. As [this PR](https://github.com/astronomer/astronomer-cosmos/pull/700/files) seems to be closed, and I have a use case for this feature, I attempt to recreate the needed feature. We leverage the `context_merge` utility function from Airflow to merge the extra context into the `Context` object of a `TaskInstance`. Closes astronomer#698

…#1063)

Add ability to specify `host`/`port` for Snowflake connection.

At LocalStack, we have recently started building a Snowflake emulator that allows running SF queries entirely on the local machine: https://blog.localstack.cloud/2024-05-22-introducing-localstack-for-snowflake/ . As part of a sample application we're building, we have an Apache Airflow DAG that uses Cosmos (and DBT) to connect to the local Snowflake emulator running on `localhost`. Here is a link to the sample app: localstack-samples/localstack-snowflake-samples#12

Currently, we're hardcoding this integration in the user DAG file itself, [see here](https://github.com/localstack-samples/localstack-snowflake-samples/pull/12/files#diff-559d4f883ad589522b8a9d33f87fe95b0da72ac29b775e98b273a8eb3ede9924R10-R19):

```
...
from cosmos.profiles.snowflake.user_pass import SnowflakeUserPasswordProfileMapping
...
SnowflakeUserPasswordProfileMapping.airflow_param_mapping["host"] = "extra.host"
SnowflakeUserPasswordProfileMapping.airflow_param_mapping["port"] = "extra.port"
...
```

<!--pre-commit.ci start--> updates: - [github.com/astral-sh/ruff-pre-commit: v0.4.9 → v0.4.10](astral-sh/ruff-pre-commit@v0.4.9...v0.4.10) <!--pre-commit.ci end--> Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>

…le (astronomer#1014)

Significantly improve the `LoadMode.DBT_LS` performance. The example DAGs tested reduced the task queueing time significantly (from ~30s to ~0.5s) and the total DAG run time for Jaffle Shop from 1 min 25s to 40s (by more than 50%). Some users [reported improvements of 84%](astronomer#1014 (comment)) in the DAG run time when trying out these changes. This difference can be even more significant on larger dbt projects.

The improvement was accomplished by caching the dbt ls output as an Airflow Variable. This is an alternative to astronomer#992, when we cached the pickled DAG/TaskGroup into a local file in the Airflow node. Unlike astronomer#992, this approach works well for distributed deployments of Airflow.

As with any caching solution, this strategy does not guarantee optimal performance on every run; whenever the cache is regenerated, the scheduler or DAG processor will experience a delay. It was also observed that the key value could change across platforms (e.g., `Darwin` and `Linux`). Therefore, if using a deployment with heterogeneous OS, the key may be regenerated often.

Closes: astronomer#990
Closes: astronomer#1061

**Enabling/disabling this feature**

This feature is enabled by default. Users can disable it by setting the environment variable `AIRFLOW__COSMOS__ENABLE_CACHE_DBT_LS=0`.

**How the cache is refreshed**

Users can purge or delete the cache via Airflow UI by identifying and deleting the cache key.

The cache will be automatically refreshed in case any files of the dbt project change. Changes are calculated using the SHA256 of all the files in the directory. Initially, this feature was implemented using the files' modified timestamp, but this did not work well for some Airflow deployments (e.g., `astro --dags` since the timestamp was changed during deployments).

Additionally, if any of the following DAG configurations are changed, we'll automatically purge the cache of the DAGs that use that specific configuration:

* `ProjectConfig.dbt_vars`
* `ProjectConfig.env_vars`
* `ProjectConfig.partial_parse`
* `RenderConfig.env_vars`
* `RenderConfig.exclude`
* `RenderConfig.select`
* `RenderConfig.selector`

The following argument was introduced in case users would like to define Airflow variables that could be used to refresh the cache (it expects a list with Airflow variable names):

* `RenderConfig.airflow_vars_to_purge_cache`

Example:

```
RenderConfig(
    airflow_vars_to_purge_cache=["refresh_cache"]
)
```

**Cache key**

The Airflow variables that represent the dbt ls cache are prefixed by `cosmos_cache`. When using `DbtDag`, the keys use the DAG name. When using `DbtTaskGroup`, they consider the TaskGroup and parent task groups and DAG.

Examples:

1. The `DbtDag` "basic_cosmos_dag" will have the cache represented by `"cosmos_cache__basic_cosmos_dag"`.
2. The `DbtTaskGroup` "customers" declared inside the DAG "basic_cosmos_task_group" will have the cache key `"cosmos_cache__basic_cosmos_task_group__customers"`.

**Cache value**

The cache values contain a few properties:

- `last_modified` timestamp, represented using the ISO 8601 format.
- `version` is a hash that represents the version of the dbt project and arguments used to run dbt ls by the time the cache was created
- `dbt_ls_compressed` represents the dbt ls output compressed using zlib and encoded to base64 to be recorded as a string to the Airflow metadata database.

Steps used to compress:

```
compressed_data = zlib.compress(dbt_ls_output.encode("utf-8"))
encoded_data = base64.b64encode(compressed_data)
dbt_ls_compressed = encoded_data.decode("utf-8")
```

We are compressing this value because it will be significant for larger dbt projects, depending on the selectors used, and we wanted this approach to be safe and not clutter the Airflow metadata database.

Some numbers on the compression:

* A dbt project with 100 models can lead to a dbt ls output of 257k characters when using JSON. Zlib could compress it by 20x.
* Another [real-life dbt project](https://gitlab.com/gitlab-data/analytics/-/tree/master/transform/snowflake-dbt?ref_type=heads) with 9,285 models led to a dbt ls output of 8.4 MB, uncompressed. It reduces to 489 KB after being compressed using `zlib` and encoded using `base64` - to 6% of the original size.
* Maximum cell size in Postgres: 20MB

The latency used to compress is in the order of milliseconds, not interfering in the performance of this solution.

**Future work**

* How this will affect the Airflow db in the long term
* How does this performance compare to `ObjectStorage`?

**Example of results before and after this change**

Task queue times in Astro before the change:

<img width="1488" alt="Screenshot 2024-06-03 at 11 15 26" src="https://github.com/astronomer/astronomer-cosmos/assets/272048/20f6ae8f-02e0-4974-b445-740925ab1b3c">

Task queue times in Astro after the change on the second run of the DAG:

<img width="1624" alt="Screenshot 2024-06-03 at 11 15 44" src="https://github.com/astronomer/astronomer-cosmos/assets/272048/c7b8a821-8751-4d2c-8feb-1d0c9bbba97e">

This feature will be available in `astronomer-cosmos==1.5.0a8`.
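For reference, the compression steps quoted in this commit message can be paired with their inverse to read the cached value back. This is a sketch: the function names `encode_dbt_ls` and `decode_dbt_ls` and the sample output are hypothetical, and only the standard-library `zlib` and `base64` calls are taken from the text:

```python
import base64
import zlib


def encode_dbt_ls(dbt_ls_output: str) -> str:
    """Compress with zlib, then base64-encode so the value can be stored as a string."""
    compressed_data = zlib.compress(dbt_ls_output.encode("utf-8"))
    return base64.b64encode(compressed_data).decode("utf-8")


def decode_dbt_ls(dbt_ls_compressed: str) -> str:
    """Reverse the steps: base64-decode, then decompress."""
    compressed_data = base64.b64decode(dbt_ls_compressed.encode("utf-8"))
    return zlib.decompress(compressed_data).decode("utf-8")


# Made-up, highly repetitive sample; real dbt ls output will compress differently.
sample_output = '{"name": "stg_customers", "resource_type": "model"}\n' * 100
stored = encode_dbt_ls(sample_output)
restored = decode_dbt_ls(stored)
print(len(sample_output), len(stored))
```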

## Description It appears there was an accident resolving conflicts in the changelog, which resulted in 1.4.2 and 1.4.1 (with the content for 1.4.3) being listed twice. ## Related Issue(s) N/A ## Breaking Change? No ## Checklist - [ ] I have made corresponding changes to the documentation (if required) - [ ] I have added tests that prove my fix is effective or that my feature works

Look like rendering for conf `enable_cache_dbt_ls` is broken in docs **Before change** <img width="834" alt="Screenshot 2024-06-27 at 1 36 27 AM" src="https://github.com/astronomer/astronomer-cosmos/assets/98807258/38565e3c-0b23-4764-936a-be40c53c0a00"> **After change** <img width="815" alt="Screenshot 2024-06-27 at 1 37 09 AM" src="https://github.com/astronomer/astronomer-cosmos/assets/98807258/1c301d6a-c233-440d-801f-f9475435fc69">

Add dbt profile caching mechanism.

1. Introduced env `enable_cache_profile` to enable or disable profile

caching. This will be enabled only if global `enable_cache` is enabled.

2. Users can set the env `profile_cache_dir_name`. This will be the name

of a sub-dir inside `cache_dir` where cached profiles will be stored.

This is optional, and the default name is `profile`

3. Example Path for versioned profile:

`{cache_dir}/{profile_cache_dir}/592906f650558ce1dadb75fcce84a2ec09e444441e6af6069f19204d59fe428b/profiles.yml`

4. Implemented profile mapping hashing: first, the profile is serialized

using pickle. Then, the profile_name and target_name are appended before

hashing the data using the SHA-256 algorithm
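Steps 3 and 4 above can be sketched with the standard library. The helper names, the sample profile, and the `/tmp/cosmos` cache directory are hypothetical; the sketch only mirrors the described order of operations (pickle the profile, append the profile and target names, hash with SHA-256):

```python
import hashlib
import pickle
from pathlib import Path
from typing import Any, Dict


def profile_version(profile: Dict[str, Any], profile_name: str, target_name: str) -> str:
    """Hash the pickled profile followed by the profile and target names."""
    payload = pickle.dumps(profile) + profile_name.encode("utf-8") + target_name.encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def cached_profile_path(cache_dir: Path, profile_cache_dir_name: str, version: str) -> Path:
    """Build {cache_dir}/{profile_cache_dir}/{version}/profiles.yml."""
    return cache_dir / profile_cache_dir_name / version / "profiles.yml"


profile = {"type": "postgres", "host": "localhost", "port": 5432}
version = profile_version(profile, "default", "dev")
path = cached_profile_path(Path("/tmp/cosmos"), "profile", version)
print(path)
```

Because the target name is part of the hashed payload, the same mapping used with a different target resolves to a different cached file.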

**Perf test result:**

In local dev env with command

```

AIRFLOW_HOME=`pwd` AIRFLOW_CONN_EXAMPLE_CONN="postgres://postgres:postgres@0.0.0.0:5432/postgres" AIRFLOW_HOME=`pwd` AIRFLOW__CORE__DAGBAG_IMPORT_TIMEOUT=20000 AIRFLOW__CORE__DAG_FILE_PROCESSOR_TIMEOUT=20000 hatch run tests.py3.10-2.8:test-performance

```

NUM_MODELS=100

- TIME=167.45248413085938 (with profile cache enabled)

- TIME=173.94845390319824 (with profile cache disabled)

NUM_MODELS=200

- TIME=376.2585120201111 (with profile cache enabled)

- TIME=418.14210200309753 (with profile cache disabled)
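To put these numbers in relative terms, the saving is `(disabled - enabled) / disabled`. A small worked computation using the figures reported above gives roughly 3.7% for 100 models and roughly 10% for 200 models:

```python
# TIME values copied from the performance test results above.
timings = {
    100: {"enabled": 167.45248413085938, "disabled": 173.94845390319824},
    200: {"enabled": 376.2585120201111, "disabled": 418.14210200309753},
}


def relative_saving(enabled: float, disabled: float) -> float:
    """Percentage of run time saved with the profile cache enabled."""
    return (disabled - enabled) / disabled * 100


savings = {n: relative_saving(t["enabled"], t["disabled"]) for n, t in timings.items()}
for num_models, pct in savings.items():
    print(f"NUM_MODELS={num_models}: {pct:.1f}% less time with profile cache enabled")
```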

Closes: astronomer#925

Closes: astronomer#647

Partial parsing support was introduced in astronomer#800 and improved in astronomer#904 (caching). However, as the caching layer was introduced, we removed support to use partial parsing if the cache was disabled. This PR solves the issue. Fix: astronomer#1041

New Features

* Speed up ``LoadMode.DBT_LS`` by caching dbt ls output in Airflow Variable by @tatiana in astronomer#1014
* Support to cache profiles created via ``ProfileMapping`` by @pankajastro in astronomer#1046
* Support for running dbt tasks in AWS EKS in astronomer#944 by @VolkerSchiewe
* Add Clickhouse profile mapping by @roadan and @pankajastro in astronomer#353 and astronomer#1016
* Add node config to TaskInstance Context by @linchun3 in astronomer#1044

Bug fixes

* Support partial parsing when cache is disabled by @tatiana in astronomer#1070
* Fix disk permission error in restricted env by @pankajastro in astronomer#1051
* Add CSP header to iframe contents by @dwreeves in astronomer#1055
* Stop attaching log adaptors to root logger to reduce logging costs by @glebkrapivin in astronomer#1047

Enhancements

* Support ``static_index.html`` docs by @dwreeves in astronomer#999
* Support deep linking dbt docs via Airflow UI by @dwreeves in astronomer#1038
* Add ability to specify host/port for Snowflake connection by @whummer in astronomer#1063

Docs

* Fix rendering for env ``enable_cache_dbt_ls`` by @pankajastro in astronomer#1069

Others

* Update documentation for DbtDocs generator by @arjunanan6 in astronomer#1043
* Use uv in CI by @dwreeves in astronomer#1013
* Cache hatch folder in the CI by @tatiana in astronomer#1056
* Change example DAGs to use ``example_conn`` as opposed to ``airflow_db`` by @tatiana in astronomer#1054
* Mark plugin integration tests as integration by @tatiana in astronomer#1057
* Ensure compliance with linting rule D300 by using triple quotes for docstrings by @pankajastro in astronomer#1049
* Pre-commit hook updates in astronomer#1039, astronomer#1050, astronomer#1064
* Remove duplicates in changelog by @jedcunningham in astronomer#1068

<!--pre-commit.ci start--> updates: - [github.com/astral-sh/ruff-pre-commit: v0.4.10 → v0.5.0](astral-sh/ruff-pre-commit@v0.4.10...v0.5.0) - [github.com/asottile/blacken-docs: 1.16.0 → 1.18.0](adamchainz/blacken-docs@1.16.0...1.18.0) - [github.com/pre-commit/mirrors-mypy: v1.10.0 → v1.10.1](pre-commit/mirrors-mypy@v1.10.0...v1.10.1) <!--pre-commit.ci end--> Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>

Teradata has a [Provider](https://airflow.apache.org/docs/apache-airflow-providers-teradata/stable/index.html) in Airflow and an [adapter](https://github.com/Teradata/dbt-teradata) in dbt. The Cosmos library doesn't have a profile configuration with mapping support for it. This PR addresses this issue. Closes: astronomer#1053

When Airflow gets temporary AWS credentials by assuming a role with `role_arn` as the only `Connection` parameter, the task fails due to missing credentials. This is due to the latest changes related to profile caching. The `env_vars` are accessed before `profile`, which, in this case, means the required values are not populated yet.

<!--pre-commit.ci start--> updates: - [github.com/astral-sh/ruff-pre-commit: v0.5.0 → v0.5.1](astral-sh/ruff-pre-commit@v0.5.0...v0.5.1) <!--pre-commit.ci end--> Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>

…ofile", target "dev" invalid: Runtime Error Must specify `schema` in Teradata profile (astronomer#1088)

`TeradataUserPassword` profile mapping throws the error below for the mock profile:

```
Credentials in profile "generated_profile", target "dev" invalid: Runtime Error Must specify the schema in Teradata profile
```

Closes astronomer#1087


I messed up the rebase, I'll just open a new PR

No worries! Please go ahead and open a new PR; I’d be happy to review and test it.

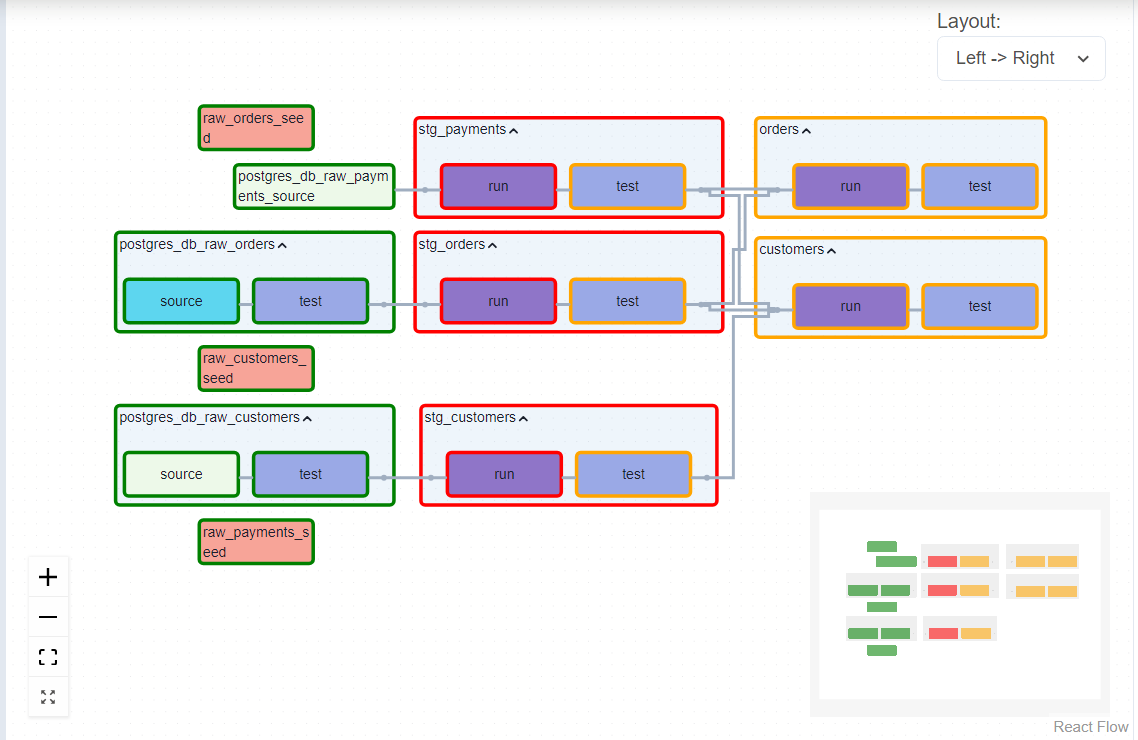

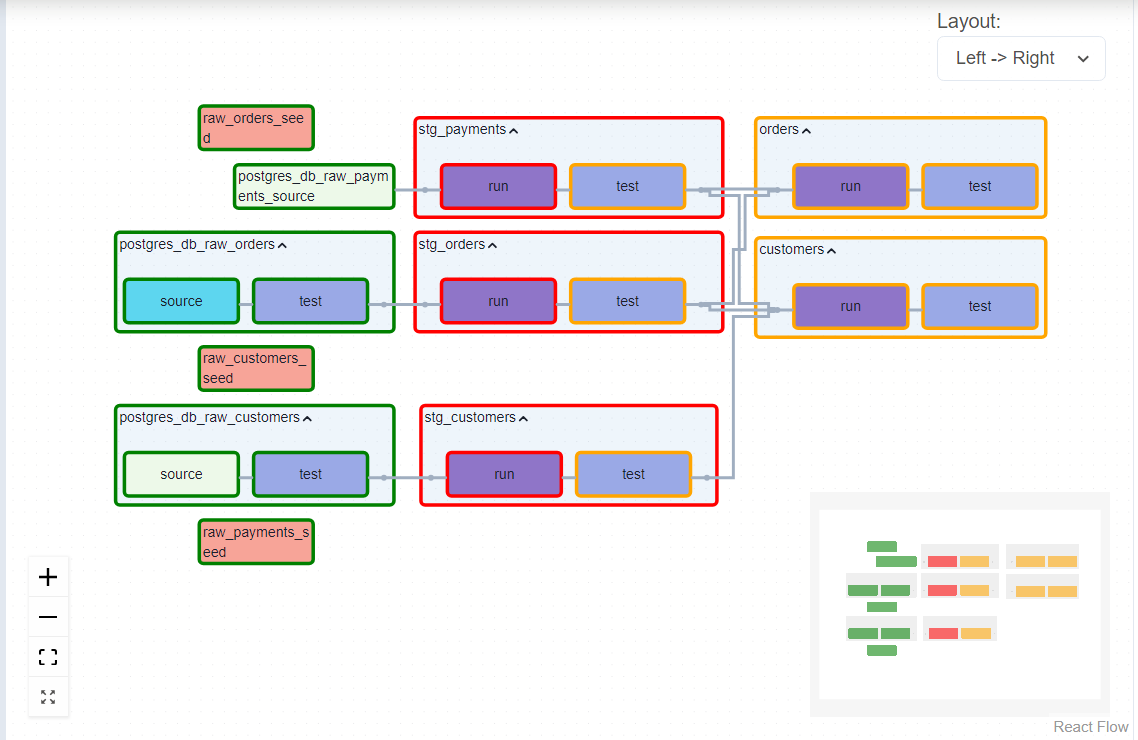

Re-Opening of PR #661

This PR features a new way of rendering source nodes:

- Check freshness for sources with freshness checks
- Source tests
- Empty operators for nodes without tests or freshness.

One of the main limitations I found while using the `custom_callback` functions on source nodes to check freshness is that nodes were being created for 100% of sources, although not all of them required freshness checks. This made workers waste compute time.

I'm adding a new variable to the DbtNode class called `has_freshness`, which is True for sources with freshness checks and False for any other resource type.

If this feature is enabled with the option `ALL`, all sources with `has_freshness == False` will be rendered as Empty Operators, to keep dbt's behavior of showing sources, as suggested in issue #630.

A new rendered template field is included too: `freshness`, which is the sources.json generated by dbt when running `dbt source freshness`.

This adds a new node type (source), which changes the behavior of some tests.

This PR also updates the dev dbt project jaffle_shop to include source nodes when enabled.

As seen in the image, source nodes with freshness checks are rendered with a blue color, while the ones rendered as EmptyOperator show a white/light green color.

## Related Issue(s)

Closes: #630
Closes: #572
Closes: #875

## Breaking Change?

This won't be a breaking change, since the default behavior will still be to ignore this new feature. That can be changed with the new RenderConfig variable called `source_rendering_behavior`.

Co-authored-by: Pankaj <pankaj.singh@astronomer.io>
Co-authored-by: Pankaj Singh <98807258+pankajastro@users.noreply.github.com>
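The rendering rule described in this PR can be summarized as a small decision function. This is a sketch under assumptions, not the Cosmos implementation: the `Node` dataclass and `choose_source_task` are hypothetical, the `NONE` option is assumed to represent the default of not rendering sources, and operators are represented by their class names as strings to keep the example free of Airflow imports:

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class SourceRenderingBehavior(Enum):
    NONE = "none"  # assumption: default, source nodes are not rendered
    ALL = "all"    # option named in the PR description


@dataclass
class Node:
    unique_id: str
    resource_type: str
    has_freshness: bool = False


def choose_source_task(node: Node, behavior: SourceRenderingBehavior) -> Optional[str]:
    """Return the operator name to render for a node, or None to skip it."""
    if node.resource_type != "source" or behavior is SourceRenderingBehavior.NONE:
        return None
    # Sources with freshness checks run `dbt source freshness`;
    # the rest are placeholders so they still appear in the DAG.
    return "DbtSourceLocalOperator" if node.has_freshness else "EmptyOperator"


fresh = Node("source.jaffle_shop.raw.orders", "source", has_freshness=True)
plain = Node("source.jaffle_shop.raw.payments", "source")
print(choose_source_task(fresh, SourceRenderingBehavior.ALL))
print(choose_source_task(plain, SourceRenderingBehavior.ALL))
```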


Description

I'm aiming to give a default behaviour to source nodes for checking their source freshness and their tests.

One of the main limitations I found while using the custom_callback functions on source nodes to check freshness is that nodes were being created for 100% of sources, although not all of them required freshness checks. This made workers waste compute time.

I'm adding a new variable to the DbtNode class called `has_freshness`, which would be True for sources with freshness checks and False for sources without them and for any other resource type.

All sources with `has_freshness == False` will be rendered as Empty Operators, to keep dbt's behavior of showing sources, as suggested in issue #630.

Related Issue(s)

#630

Breaking Change?

Checklist