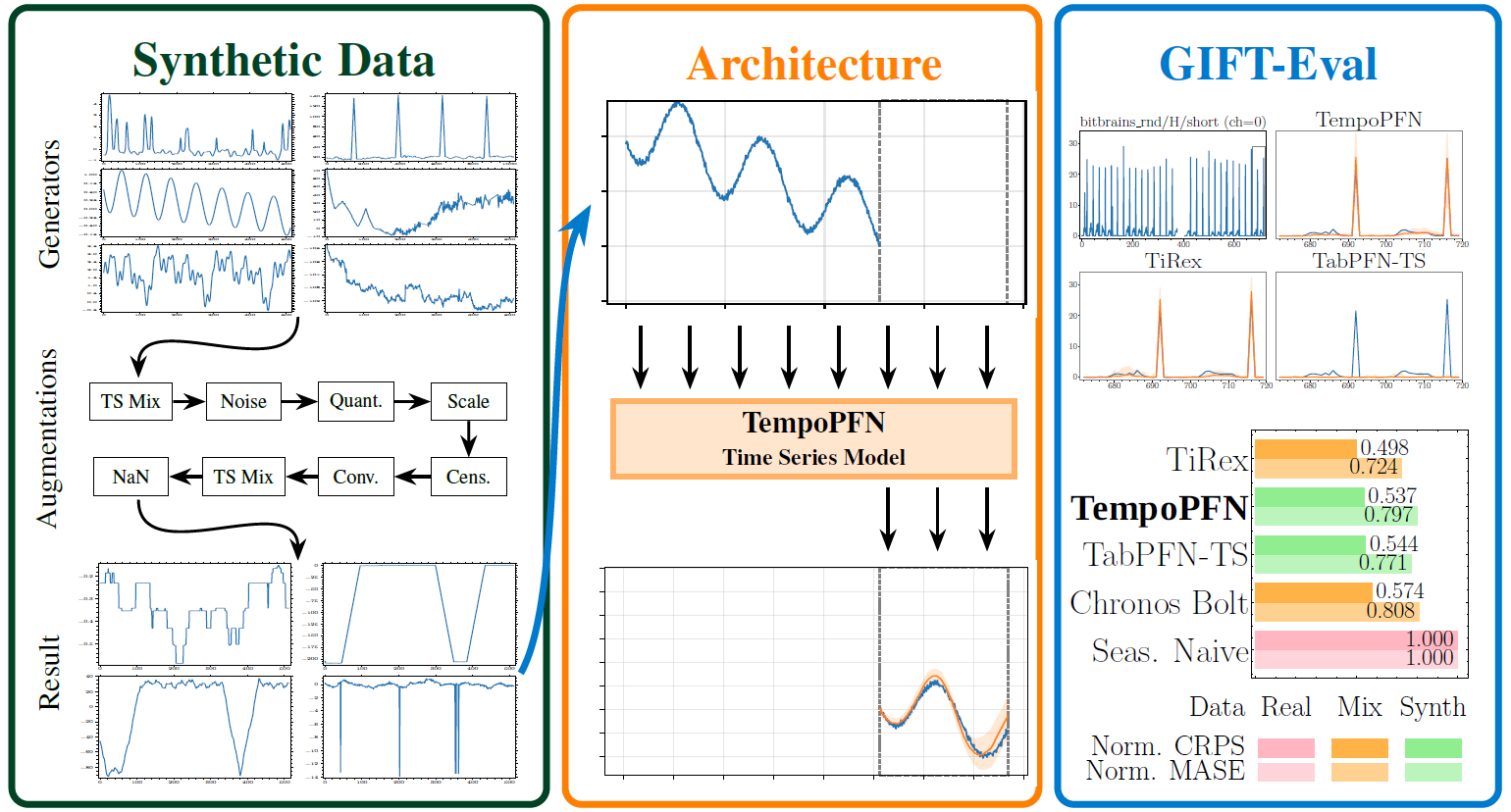

TempoPFN, introduced in "TempoPFN: Synthetic Pre-Training of Linear RNNs for Zero-Shot Time Series Forecasting", is a univariate time series foundation model pretrained entirely on synthetic data. It delivers top-tier zero-shot forecasting accuracy while remaining fully reproducible and free from real-data leakage.

Built on a Linear RNN (GatedDeltaProduct) backbone, TempoPFN performs end-to-end forecasting without patching or windowing. Its design enables fully parallelizable training and inference while maintaining stable temporal state-tracking across long sequences. The GatedDeltaProduct architecture is based on DeltaProduct, extended with state-weaving for time series forecasting. For detailed information about the architecture and custom modifications, see src/models/gated_deltaproduct/README.md.

This repository includes the pretrained 38M-parameter model, all training and inference code, and the complete synthetic data generation pipeline used for pretraining.

- High Performance, No Real Data: Achieves competitive results on GIFT-Eval, outperforming all existing synthetic-only approaches and surpassing the vast majority of models trained on real-world data. Because pretraining uses only synthetic data, the results are fully reproducible and free of benchmark leakage.

- Parallel and Efficient: The linear recurrence design enables full-sequence parallelization, combining the linear-time efficiency of an RNN with the training parallelism of a Transformer.

- Open and Reproducible: Includes the full synthetic data pipeline, configurations, and scripts to reproduce training from scratch.

- State-Tracking Stability: The GatedDeltaProduct recurrence and state-weaving mechanism preserve temporal continuity and information flow across long horizons, improving robustness without non-linear recurrence.
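To make the parallelization point concrete, here is a minimal sketch of how a linear recurrence can be evaluated with a parallel scan. It assumes a simplified scalar gated recurrence `h_t = a_t * h_{t-1} + b_t`, chosen only for illustration; it is not the GatedDeltaProduct layer, and the function names are hypothetical.

```python
import random


def sequential_scan(a, b):
    """Reference: evaluate h_t = a_t * h_{t-1} + b_t one step at a time (h_{-1} = 0)."""
    h, state = [], 0.0
    for a_t, b_t in zip(a, b):
        state = a_t * state + b_t
        h.append(state)
    return h


def parallel_scan(a, b):
    """Same recurrence via a Hillis-Steele inclusive scan.

    Each position holds an affine map h -> A*h + B. Composing the map at
    position t - shift with the map at position t is associative, so the
    number of sequential passes is logarithmic in the sequence length.
    """
    a, b = list(a), list(b)
    n = len(b)
    shift = 1
    while shift < n:
        new_a, new_b = a[:], b[:]
        # Every t in this loop reads only values from the previous pass,
        # so on parallel hardware all positions could be updated at once.
        for t in range(shift, n):
            new_a[t] = a[t] * a[t - shift]
            new_b[t] = a[t] * b[t - shift] + b[t]
        a, b = new_a, new_b
        shift *= 2
    return b  # with h_{-1} = 0, the offset B_t equals h_t


if __name__ == "__main__":
    random.seed(0)
    gates = [random.uniform(0.5, 1.0) for _ in range(16)]
    inputs = [random.gauss(0.0, 1.0) for _ in range(16)]
    seq = sequential_scan(gates, inputs)
    par = parallel_scan(gates, inputs)
    print(max(abs(x - y) for x, y in zip(seq, par)))
```

A non-linear recurrence (for example one that applies `tanh` to the state) cannot be rewritten this way, because composing its steps is not associative.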

git clone https://github.com/automl/TempoPFN.git

cd TempoPFN

python -m venv venv && source venv/bin/activate

# 1. Install the PyTorch build that matches your CUDA version

# Example for CUDA 12.6:

pip install torch --index-url https://download.pytorch.org/whl/cu126

# 2. Install TempoPFN and all other dependencies

pip install .

export PYTHONPATH=$PWD

Prerequisites:

- You must have a CUDA-capable GPU with a matching PyTorch version installed.

- You have run export PYTHONPATH=$PWD from the repo's root directory (see Installation).
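A quick way to confirm the first prerequisite is to ask PyTorch what it sees. The snippet below is a small sketch and not part of the repository; the helper name `cuda_status` is hypothetical, and it uses only standard PyTorch attributes (`torch.cuda.is_available`, `torch.version.cuda`, `torch.cuda.get_device_name`).

```python
import importlib.util


def cuda_status():
    """Return a one-line summary of the local PyTorch/CUDA setup."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"
    import torch

    if not torch.cuda.is_available():
        return f"PyTorch {torch.__version__} found, but no CUDA device is visible"
    return (
        f"PyTorch {torch.__version__} (built for CUDA {torch.version.cuda}) "
        f"on {torch.cuda.get_device_name(0)}"
    )


print(cuda_status())
```

If the summary reports no visible CUDA device, reinstalling PyTorch from the wheel index that matches your driver is usually the first thing to try.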

Run a demo forecast on a synthetic sine wave:

python examples/quick_start_tempo_pfn.py

If you have already downloaded the model (e.g., to models/checkpoint.pth), you can point the script to it:

python examples/quick_start_tempo_pfn.py --checkpoint models/checkpoint.pth

Or open the quick-start notebook:

jupyter notebook examples/quick_start_tempo_pfn.ipynb

GPU Required: Inference requires a CUDA-capable GPU. Tested on NVIDIA A100/H100.

First Inference May Be Slow: Initial calls for unseen sequence lengths trigger Triton kernel compilation. Subsequent runs are cached and fast.

Triton Caches: To prevent slowdowns from writing caches to a network filesystem, route caches to a local directory (like /tmp) before running:

LOCAL_CACHE_BASE="${TMPDIR:-/tmp}/tsf-$(date +%s)"

mkdir -p "${LOCAL_CACHE_BASE}/triton" "${LOCAL_CACHE_BASE}/torchinductor"

export TRITON_CACHE_DIR="${LOCAL_CACHE_BASE}/triton"

export TORCHINDUCTOR_CACHE_DIR="${LOCAL_CACHE_BASE}/torchinductor"

python examples/quick_start_tempo_pfn.py

To train on a single GPU:

torchrun --standalone --nproc_per_node=1 src/training/trainer_dist.py --config ./configs/train.yaml

The training script uses PyTorch DistributedDataParallel (DDP). The following example uses 8 GPUs:

torchrun --standalone --nproc_per_node=8 src/training/trainer_dist.py --config ./configs/train.yaml

All training and model parameters are controlled via YAML files in configs/ (architecture, optimizers, paths).
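For readers new to torchrun, the sketch below shows how a DDP-style script typically discovers its place in the job. torchrun sets the `RANK`, `LOCAL_RANK`, and `WORLD_SIZE` environment variables for each process it launches. The `DistEnv` class and `read_torchrun_env` helper are hypothetical names used for illustration and are not taken from src/training/trainer_dist.py.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class DistEnv:
    rank: int        # global process index across all nodes
    local_rank: int  # process index on this node, usually the GPU index
    world_size: int  # total number of processes in the job

    @property
    def is_main(self):
        """True for the process that should handle logging and checkpoints."""
        return self.rank == 0


def read_torchrun_env(environ=None):
    """Read the variables torchrun exports, falling back to single-process defaults."""
    env = os.environ if environ is None else environ
    return DistEnv(
        rank=int(env.get("RANK", 0)),
        local_rank=int(env.get("LOCAL_RANK", 0)),
        world_size=int(env.get("WORLD_SIZE", 1)),
    )


if __name__ == "__main__":
    print(read_torchrun_env())
```

With `--nproc_per_node=8` on one node, eight processes start with `LOCAL_RANK` values 0 through 7 and `WORLD_SIZE` set to 8. Run without torchrun, the helper falls back to a single-process setup.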

A core contribution of this work is our open-source synthetic data pipeline, located in src/synthetic_generation/. It combines diverse generators with a powerful augmentation cascade.

Generators Used:

- Adapted Priors: ForecastPFN, KernelSynth, GaussianProcess (GP), and CauKer (Structural Causal Models).

- Novel Priors: SDE (a flexible regime-switching Ornstein-Uhlenbeck process), Sawtooth, StepFunction, Anomaly, Spikes, SineWave, and Audio-Inspired generators (Stochastic Rhythms, Financial Volatility, Network Topology, Multi-Scale Fractals).
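To give a feel for what a regime-switching Ornstein-Uhlenbeck prior produces, here is a minimal Euler-Maruyama sketch. It is an illustration under assumed settings and not the generator in src/synthetic_generation/. The regime parameters, the switching rule, and the function name are all hypothetical.

```python
import math
import random

# Hypothetical regimes: mean-reversion speed (theta), long-run mean (mu), volatility (sigma).
REGIMES = [
    {"theta": 0.5, "mu": 0.0, "sigma": 0.2},
    {"theta": 2.0, "mu": 3.0, "sigma": 0.6},
]


def simulate_regime_switching_ou(n_steps, dt=0.1, switch_prob=0.02, x0=0.0, seed=None):
    """Simulate dX = theta * (mu - X) dt + sigma dW with randomly switching parameters.

    At every step the process moves to the next regime with probability
    `switch_prob` (an assumed, simple switching rule). Returns the sampled
    path and the regime index used at each step.
    """
    rng = random.Random(seed)
    regime, x = 0, x0
    path, regimes = [], []
    for _ in range(n_steps):
        if rng.random() < switch_prob:
            regime = (regime + 1) % len(REGIMES)
        p = REGIMES[regime]
        drift = p["theta"] * (p["mu"] - x) * dt
        diffusion = p["sigma"] * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        x += drift + diffusion
        path.append(x)
        regimes.append(regime)
    return path, regimes


if __name__ == "__main__":
    series, active = simulate_regime_switching_ou(500, seed=42)
    print(f"{len(series)} points, {sum(1 for i in range(1, len(active)) if active[i] != active[i - 1])} regime switches")
```

Each regime pulls the series toward a different mean at a different speed, so a single sampled path can contain abrupt level shifts and changes in volatility.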

You can easily generate your own data by installing the development dependencies and instantiating a generator wrapper. See examples/generate_synthetic_data.py for a minimal script, or inspect the generator code in src/synthetic_generation/.

This project is licensed under the Apache 2.0 License. See the LICENSE file for details. This permissive license allows for both academic and commercial use.

If you find TempoPFN useful in your research, please consider citing our paper:

@misc{moroshan2025tempopfn,

title={TempoPFN: Synthetic Pre-Training of Linear RNNs for Zero-Shot Time Series Forecasting},

author={Vladyslav Moroshan and Julien Siems and Arber Zela and Timur Carstensen and Frank Hutter},

year={2025},

eprint={2510.25502},

archivePrefix={arXiv},

primaryClass={cs.LG}

}