
Sails memory leak, or memory keeps increasing #3099

Comments

Interesting. Is it running migrations?

Approximately how long does it take for the memory to grow to that level? Are we talking minutes, hours, or days? Does this only happen in cluster mode? If not, what happens if you start it from the command line without pm2?

@shumailarshad Thank you for going the extra mile! This is important information.

Maybe the grunt hook goes haywire in cluster mode? Try starting the Sails app in cluster mode with the grunt hook disabled in the .sailsrc file, and take a look.

@Josebaseba read my mind, I was wondering the same thing. 👍

How do I disable the grunt hook? Can you please help? Or maybe explain how to disable Sails modules that I will not be using at all.

Change the .sailsrc file to:

    {
      "hooks": {
        "grunt": false
      }
    }

That's an important bug then, but I have no idea what the cause could be. It looks like an infinite loop; maybe we could get more info by starting the app in silly or verbose log mode and looking at the logs.

Following are the logs when I run the Sails app with --silly in pm2 cluster mode. There is some extra logging which might be the root cause. Can anyone please have a look at it?

sailsApp-out.0.log:

    verbose: Setting Node environment...

sailsApp-out-1.log:

    verbose: Setting Node environment...

Looks like something is opening a websocket connection over and over. Wondering if: a) a websocket client is attempting to connect; or … You said you're not even hitting the app, so that should rule out a) above. Given the high number of socket connections, the memory usage is probably coming from using sails-memory as the default socket/session adapter, so all this data is being stuffed in RAM rather than in a data store of some kind. Anyone else have a hunch on what could be going on?

It seems like the socket sessions are being saved over and over in a loop; I don't know why. Try disabling sockets this time and check the memory (.sailsrc file again):

    {
      "hooks": {
        "sockets": false,
        "pubsub": false
      }
    }

@shumailarshad do you have a browser window open, pointed at http://localhost:1337? The default

@sgress454 but 4GB of data from empty sessions is too much for just a browser connecting and disconnecting for a few hours... And it only happens in cluster mode; I can't figure out what's going on there.

Not sure if this is the solution, but I found this thread over at PM2: Unitech/pm2#81. Using the memory adapter for socket sessions when clustered could cause issues, since your session would exist in the memory of one process but not the others. Obviously you'd want to use a data store like Redis to solve this, but in @shumailarshad's case, he hasn't gotten far enough to play around with sockets and may not even want them yet. He did say that he wasn't even hitting the URL, so if that's true, the sails.io.js file should not even be running and trying to connect. 4GB is still insane, though, but I wanted to mention the above issue in case it has something to do with it.
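The per-process problem described above can be illustrated with a toy model. This is a hypothetical simulation (`SimulatedWorker` and `simulate` are made-up names), not how PM2 or Sails actually route traffic: each worker keeps sessions in its own `Map`, and requests are assigned round-robin, roughly like Node's cluster module does by default on most platforms. With one worker the client's cookie keeps matching; with two, every request lands on a worker that has never seen the cookie, so a fresh session is created each time.

```javascript
// Hypothetical model: each cluster worker has its own in-memory session store.
class SimulatedWorker {
  constructor(id) {
    this.id = id;
    this.sessions = new Map(); // per-process memory, invisible to other workers
  }

  handle(sid) {
    if (sid && this.sessions.has(sid)) {
      return { sid, found: true };
    }
    // Unknown or missing session id: create a new session in THIS worker only.
    const newSid = `w${this.id}-${this.sessions.size + 1}`;
    this.sessions.set(newSid, { createdBy: this.id });
    return { sid: newSid, found: false };
  }
}

function simulate(workerCount, requestCount) {
  const workers = Array.from({ length: workerCount }, (_, i) => new SimulatedWorker(i));
  let cookie = null; // the client's session cookie
  let hits = 0;
  for (let i = 0; i < requestCount; i++) {
    const worker = workers[i % workerCount]; // round-robin routing
    const res = worker.handle(cookie);
    if (res.found) hits++;
    cookie = res.sid; // the client keeps whatever cookie it receives
  }
  const stored = workers.reduce((n, w) => n + w.sessions.size, 0);
  return { hits, misses: requestCount - hits, stored };
}

console.log(simulate(1, 6)); // → { hits: 5, misses: 1, stored: 1 }
console.log(simulate(2, 6)); // → { hits: 0, misses: 6, stored: 6 }
```

A shared store such as Redis (or sticky routing) removes the misses, because every worker then resolves the same session id.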

Actually, Sails apps using the latest version of sails-hook-sockets do make their own socket connection on the backend, so that in a clustered situation all of the Sails apps in the cluster can communicate over a message bus (see #3008 (comment)). So if the log only has one of those "Could not fetch session" messages per app in the cluster, that's expected. Of course it still doesn't explain the memory leak (or its growth over time).

|

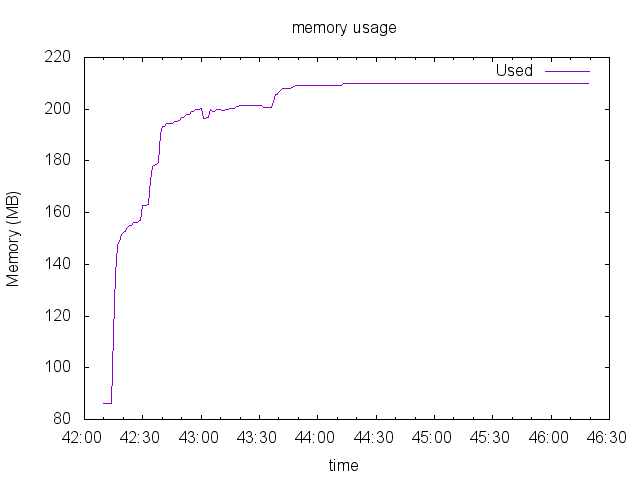

I've done a few tests with express, sails and a basic http server : https://github.com/soyuka/nodejs-http-memtest. Sails seems to be leaking : |
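A comparison like this can be reproduced by sampling `process.memoryUsage()` inside each app at a fixed interval. A minimal sketch; `sampleMemory` and `toMB` are hypothetical helpers, and a real test would run inside the app process being measured, with a much longer interval (minutes) over several hours. Watching `heapUsed` alongside `rss` helps separate JavaScript heap growth from other process memory.

```javascript
// Hypothetical helper: sample process memory `count` times, every `intervalMs` ms.
function sampleMemory(intervalMs, count) {
  return new Promise((resolve) => {
    const samples = [];
    const timer = setInterval(() => {
      const { rss, heapTotal, heapUsed } = process.memoryUsage();
      samples.push({ time: Date.now(), rss, heapTotal, heapUsed });
      if (samples.length >= count) {
        clearInterval(timer);
        resolve(samples);
      }
    }, intervalMs);
  });
}

// Bytes to megabytes, one decimal place.
const toMB = (bytes) => (bytes / 1024 / 1024).toFixed(1);

// Short demo run; a real comparison would sample for hours at a longer interval.
sampleMemory(200, 5).then((samples) => {
  for (const s of samples) {
    console.log(`rss=${toMB(s.rss)}MB heapTotal=${toMB(s.heapTotal)}MB heapUsed=${toMB(s.heapUsed)}MB`);
  }
});
```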

@soyuka take a look at #2779: you probably see that memory increase because of the session storage, since Sails keeps sessions in RAM. To fix it, disable sessions or use an adapter such as a Redis session store. That's not the problem here, though; the problem is that the memory increases in cluster mode with the sockets/pubsub hooks, and that's the weird part.

@Josebaseba What do you mean by session storage? Can you quote docs or some code, please?

@soyuka take a look at this specific comment: #2779 (comment)

@Josebaseba I have read this comment but can't find docs on how to disable sessions. I noticed the

http://stackoverflow.com/questions/28015873/disable-some-built-in-functionality-in-sails-js/28017720#28017720 Try this, but disable just the session hook, and then tell us your results. Thanks!

@Josebaseba thanks for your help on this; definitely an important thing to keep in mind when doing stress tests!
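Why this matters for stress tests: if sessions live in process memory and the load-testing tool never sends the session cookie back, each request can create a brand-new session. A hypothetical toy store (`MemorySessionStore` and `runLoad` are illustrative names, not Sails or Express APIs), assuming a session is created and saved for every request without a valid cookie:

```javascript
// Toy in-memory session store: a new session is saved for every unknown cookie.
class MemorySessionStore {
  constructor() {
    this.sessions = new Map();
    this.nextId = 1;
  }

  // Returns the session id the server would send back in the cookie.
  touch(cookieSid) {
    if (cookieSid && this.sessions.has(cookieSid)) return cookieSid;
    const sid = String(this.nextId++);
    this.sessions.set(sid, { createdAt: Date.now() });
    return sid;
  }
}

// keepCookie = false models a load tester that ignores Set-Cookie.
function runLoad(requests, keepCookie) {
  const store = new MemorySessionStore();
  let cookie = null;
  for (let i = 0; i < requests; i++) {
    const sid = store.touch(cookie);
    if (keepCookie) cookie = sid;
  }
  return store.sessions.size;
}

console.log(runLoad(10000, false)); // → 10000
console.log(runLoad(10000, true)); // → 1
```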

@jacqueslareau @AlejandroJL I know this is a stupid question, but when you say that upgrading Node fixed the problem, are you taking into account the fact that restarting your Sails app will drop the memory usage to zero (or, more accurately, to the baseline amount of memory usage)? In both of your graphs, the memory starts going up again.

Hi @sgress454. The difference in my case is that now the memory is being freed a little bit. Before, it kept climbing to quite large amounts even with very little traffic (we are in a testing phase). It is clear that this is not the solution, but at least an improvement can be seen. Now we need to see how Node.js 4.0.0 behaves. As for my chart, yes, it does take the restart into account, and you can see RAM being freed little by little.

@sgress454 Yes, I took that into account. Before 4.0.0, it didn't take long for the memory to be consumed. See this graph: While it's true that I may have been over-excited, it is still a major improvement. We'll keep you posted.

@AlejandroJL @jacqueslareau I ran the same app on node

@particlebanana FWIW my experience is identical. Previously my app was running on 0.10 and never exceeded about 200MB of memory. Once I upgraded my dyno to 0.12, the leak started. I've been able to mitigate this somewhat on Heroku by using the … Going to attempt a jump to 4.0 and see if this changes things...

Hey @particlebanana, we are currently experimenting with 4.0 and we see improvements. The previous comments include graphs with Node.js 4.0.

Yes, I have experienced a memory leak with Sails on Node 0.12.7, both before and after turning off hooks. Maybe I will try Node.js 4.0. I hope someone can solve this issue. Thanks.

The explanation is as simple as you'd think it is: node 0.12 simply uses more RAM and doesn't garbage collect as aggressively.
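One way to test that explanation is to force a full collection and see whether the heap drops. A minimal sketch, assuming the process is started with `node --expose-gc` (without that flag `global.gc` is undefined and the hypothetical `heapAfterGc` helper only reports the current value). If `heapUsed` falls back near its baseline after a forced GC, the growth was garbage that simply had not been collected yet; if it keeps climbing across repeated forced collections, something is still holding references.

```javascript
// Report heapUsed before and, if possible, after forcing a full garbage collection.
// global.gc only exists when Node is started with --expose-gc.
function heapAfterGc() {
  const before = process.memoryUsage().heapUsed;
  if (typeof global.gc !== 'function') {
    return { before, after: null, forced: false };
  }
  global.gc(); // force a full collection
  const after = process.memoryUsage().heapUsed;
  return { before, after, forced: true };
}

// Create short-lived garbage, drop the only reference, then measure.
let garbage = Array.from({ length: 100000 }, (_, i) => ({ i }));
garbage = null;
console.log(heapAfterGc());
```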

Out of curiosity, how are you folks getting Sails to run with v4.0? Is there a workaround for the segfaults happening with socket.io?

@kennysmoothx see #3211

Having tried it, I don't think Node.js 4.0 will solve the memory issue.

@kennysmoothx I'm not getting those segfaults on v4, either locally or deployed on Heroku. I did have to make some updates to the …

@tjwebb I do think my particular issue (and potentially others too) may be related to garbage collection in Node. I was tipped off to this because my heap dumps were nearly identical in size both at deployment and once memory was pegged out several hours later. I had also already done the suggested steps of moving sessions and sockets off of disk storage. Node v4 wasn't a magic-bullet fix for me. My solution has been to set the

@jacqueslareau @Ignigena thanks for the follow-up, and please keep us posted. Keeping a close eye on this.

Following up on this: are we prepared to chalk this up to a Node issue and close, or should we still be looking at a possible issue in Sails? @jacqueslareau @Ignigena have things continued to be stable?

Still no more memory leaks for me, but my observations are anecdotal at best. Maybe something else I didn't see played a role in my leaks, but I highly doubt it. It would require more debugging and tests.

Ok, closing for now. Thanks again for everyone's hard work on this.

Hi all, I ran into this issue on my staging and production servers. Previously I was using Node v0.12.4 with Passenger for deployment, and on a fresh restart the memory consumption went from 13-14% to 43% without any requests. Using loadtest just to hit the server URL consumed more memory, which I have yet to resolve (maybe it's the sockets and sessions that use RAM for storage). We then migrated to Node v4.0.24, which had a better GC policy and cleared unused memory better than v0.12.4, and I switched from Passenger to PM2, which reduced memory consumption further. Now on a fresh start the consumption is only about 9-10%, and under full load it may increase to 14-18%, but not more than that. If sockets or sessions do use RAM for storage, we would like to know of an alternative so that we can optimize our server further.

@kailashyogeshwar85 Howdy. Please open a new issue. Thanks! Best, Irl

@kailashyogeshwar85 check out #3638 (comment) for more exploration of your question about sessions. More generally, I wrote up some more info/tips/best practices for diagnosing suspected memory leaks in a Node/Sails application here. If you notice issues after running through those troubleshooting steps, please open a new issue to make sure we see it, and we'll look into it ASAP. Thanks again!

An update with more info and tips:

I have created a new Sails.js app and did not change any code in it.

Then I used pm2 to launch two instances of that app. Memory keeps increasing without the app being hit even once.

I have done the same with a basic Express.js app using the following code, but its memory does not increase:

    // Minimal Express baseline: one route, no sessions or sockets
    var express = require('express');
    var app = express();

    app.get('/', function (req, res) {
      res.send('Hello World!');
    });

    var server = app.listen(3000, function () {
      var host = server.address().address;
      var port = server.address().port;
      console.log('Example app listening at http://%s:%s', host, port);
    });
