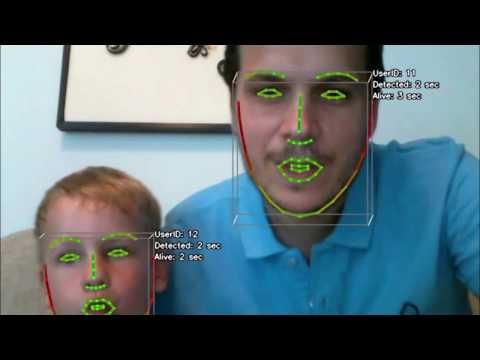

This cross-platform face API enables development driven by facial information (e.g. gesture-based control) in mobile environments. The scheme relies purely on the user's face, or more precisely on the following data:

- Face rectangle in the 2-D pixel coordinate system and face box in the 3-D camera coordinate system
- 66 facial feature points in both the 2-D pixel and the 3-D camera coordinate systems
- 6DoF head pose (relative to the camera) in the 3-D camera coordinate system
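As an illustration only, the per-frame output listed above could be grouped into a plain C++ structure like the one below. The type and field names (`FaceData`, `Point2`, `Point3`, `Rect2`, `HeadPose`) are hypothetical and are not taken from the FaceApi sources, and representing the 3-D face box by its 8 corners and the rotation by Euler angles are assumptions; the sketch only shows how the three kinds of data fit together.

```cpp
#include <array>
#include <cstddef>

// Hypothetical types for illustration only; not the FaceApi data structures.
struct Point2 { float x = 0.f, y = 0.f; };           // 2-D pixel coordinates
struct Point3 { float x = 0.f, y = 0.f, z = 0.f; };  // 3-D camera coordinates
struct Rect2 { float x = 0.f, y = 0.f, width = 0.f, height = 0.f; };

// 6DoF head pose relative to the camera: 3 rotational + 3 translational DoF.
struct HeadPose {
    std::array<float, 3> rotation{};     // assumed: Euler angles in radians
    std::array<float, 3> translation{};  // position in camera coordinates
};

constexpr std::size_t kNumLandmarks = 66;

struct FaceData {
    Rect2 faceRect;                                   // face rectangle (pixels)
    std::array<Point3, 8> faceBox{};                  // assumed: 8 corners of the 3-D face box
    std::array<Point2, kNumLandmarks> landmarks2d{};  // feature points (pixels)
    std::array<Point3, kNumLandmarks> landmarks3d{};  // feature points (camera space)
    HeadPose pose;                                    // head pose relative to the camera
};
```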

All of this information is computed from monocular images, so no special hardware or sensor is required. If you are interested in the technical background, see the following publications: [1], [2] and [3].

The system architecture is designed so that the image processing algorithms can be reused across multiple host platforms. The application logic (AL) is written in C++ and is shared between the platforms. Only a thin layer of platform-specific code is used to transfer data to and from the user interface (UI); on Android, for example, this layer is a JNI bridge.
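A minimal sketch of this split, assuming a hypothetical `FaceEngine` class: the shared C++ core exposes a platform-neutral entry point that takes a raw frame (assumed here to be 8-bit grayscale), and the platform layer only converts its native data into that call and hands the result back to the UI. `Frame`, `FrameResult`, `FaceEngine` and `OnNativeFrame` are illustrative names, not part of the FaceApi sources.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical platform-neutral frame: 8-bit grayscale, row-major.
struct Frame {
    int width = 0;
    int height = 0;
    std::vector<std::uint8_t> pixels;
};

// Hypothetical result passed back to the platform layer.
struct FrameResult {
    bool faceFound = false;
    std::string status;
};

// Shared application logic (AL): compiled unchanged on every host platform.
class FaceEngine {
public:
    FrameResult ProcessFrame(const Frame& frame) {
        if (frame.width <= 0 || frame.height <= 0 ||
            frame.pixels.size() != static_cast<std::size_t>(frame.width) * frame.height) {
            throw std::invalid_argument("frame size does not match pixel buffer");
        }
        ++processedFrames_;
        // Placeholder: the real image processing pipeline would run here.
        return FrameResult{false, "processed frame " + std::to_string(processedFrames_)};
    }

private:
    int processedFrames_ = 0;
};

// Thin platform-specific layer: wraps a native buffer into a Frame and forwards it.
// On Android a JNI function would play this role.
FrameResult OnNativeFrame(FaceEngine& engine, const std::uint8_t* data, int width, int height) {
    Frame frame;
    frame.width = width;
    frame.height = height;
    frame.pixels.assign(data, data + static_cast<std::size_t>(width) * height);
    return engine.ProcessFrame(frame);
}
```

Keeping the platform layer this thin means the only per-platform work is data conversion; all processing stays in the shared C++ code.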

A detailed description of the architecture can be found in [2]:

https://www.researchgate.net/publication/312411829_Face_recognition_on_mobile_platforms

You can see the results in the video below:

https://www.youtube.com/watch?v=iS4eDf775GI

All build dependencies for Windows (Visual Studio 2019) and Android can be downloaded as pre-built libraries from the following repository:

https://github.com/bkornel/3rdparty

Use the following directory structure when building:

    [local_path_of_the_project]
    |- 3rdparty
    |-- opencv-4.1.1
    |-- ...
    |- face-api
    |-- Applications
    |-- FaceApi
    |-- Testing
    |-- ...

The Visual Studio solution file is located in `Applications/Windows`. No further setup is needed; after building, the binaries can be found in `Applications/Windows/Bin`.

The Android Studio project is located in `Applications/Android`. The following must be installed via the SDK Manager:

- Min SDK version: API 23 (Android 6.0)

- Target SDK version: API 28 (Android 9.0)

- LLDB 3.x or newer

- CMake 3.6.x or newer

- NDK 20.x or newer

The C++ part (the image processing algorithms) is configured as a CMake external native build in the Android Studio project, so it is built automatically when you build the project.

The whole module graph is created from the settings file (`settings.json` by default). Modules interact and exchange information with each other via ports. Every module must have exactly one output port and may have any number (zero or more) of input ports.

Ports are defined by implementing the `fw::Port` interface, as in the UserManager class:

    class UserManager :
        public fw::Module,
        public fw::Port<ActiveUsersMessage::Shared(ImageMessage::Shared, RoiMessage::Shared)>
    {
        ...
        ActiveUsersMessage::Shared Main(ImageMessage::Shared iImage, RoiMessage::Shared iDetections) override;
        ...
    };

This class has an `ActiveUsersMessage::Shared` output and `ImageMessage::Shared` and `RoiMessage::Shared` inputs. The `Main` member function of the class must be defined with the signature given as the template argument of `fw::Port`.
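The simplified sketch below shows how a function type such as `ActiveUsersMessage::Shared(ImageMessage::Shared, RoiMessage::Shared)` can fix the signature of `Main`. It is not the actual `fw::Port` definition; the `sketch::Port` template, the message structs and `ToyUserManager` are hypothetical stand-ins that only demonstrate the technique: partial specialization on a function type, with `Main` declared pure virtual so that a derived class must override it with exactly that signature.

```cpp
#include <memory>

namespace sketch {

// Primary template is only declared; the specialization below accepts function types.
template <typename Signature>
class Port;

// R is the output type, Args... are the input types.
template <typename R, typename... Args>
class Port<R(Args...)> {
public:
    virtual ~Port() = default;
    virtual R Main(Args... inputs) = 0;
};

// Hypothetical stand-ins for the real message types.
struct ImageMessage { using Shared = std::shared_ptr<ImageMessage>; int frameId = 0; };
struct RoiMessage { using Shared = std::shared_ptr<RoiMessage>; int numRois = 0; };
struct ActiveUsersMessage {
    using Shared = std::shared_ptr<ActiveUsersMessage>;
    int frameId = 0;
    int numUsers = 0;
};

// Toy module with one output and two inputs, shaped like UserManager.
class ToyUserManager
    : public Port<ActiveUsersMessage::Shared(ImageMessage::Shared, RoiMessage::Shared)> {
public:
    ActiveUsersMessage::Shared Main(ImageMessage::Shared iImage,
                                    RoiMessage::Shared iDetections) override {
        auto out = std::make_shared<ActiveUsersMessage>();
        out->frameId = iImage ? iImage->frameId : -1;              // -1: no image received
        out->numUsers = iDetections ? iDetections->numRois : 0;    // one user per detection
        return out;
    }
};

}  // namespace sketch
```

If `ToyUserManager::Main` were declared with different parameter types, the `override` keyword would turn the mismatch into a compile-time error.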

The module graph is defined in the settings file (`settings.json` by default). For the UserManager module, the entry is:

    "userManager": {
        "port": [ "imageQueue:1", "faceDetection:2" ],
        ...
    }

Here, the ImageQueue module outputs an `ImageMessage::Shared`, which is passed to the first input port of UserManager; likewise, the FaceDetection module's `RoiMessage::Shared` output is passed to the second input port.
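Each entry of the `port` array can be read as `<predecessor module>:<input index>`. The sketch below parses such strings under that reading, assuming a 1-based input index (as `imageQueue:1` feeding the first input suggests). `PortSpec` and `ParsePortSpec` are hypothetical helpers, not the framework's actual settings parser.

```cpp
#include <cstddef>
#include <stdexcept>
#include <string>

// Hypothetical representation of one "module:index" entry from settings.json.
struct PortSpec {
    std::string module;  // predecessor module name, e.g. "imageQueue"
    int inputIndex = 0;  // 1-based input port of the module being configured
};

PortSpec ParsePortSpec(const std::string& text) {
    const auto colon = text.rfind(':');
    if (colon == std::string::npos || colon == 0 || colon + 1 == text.size()) {
        throw std::invalid_argument("expected <module>:<index>, got: " + text);
    }
    PortSpec spec;
    spec.module = text.substr(0, colon);
    std::size_t consumed = 0;
    // std::stoi throws std::invalid_argument if no digits can be parsed.
    spec.inputIndex = std::stoi(text.substr(colon + 1), &consumed);
    if (consumed != text.size() - colon - 1 || spec.inputIndex < 1) {
        throw std::invalid_argument("invalid input index in: " + text);
    }
    return spec;
}
```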

The native side only needs to be extended when a new module is added to the system. The following functions have to be updated, in this order:

- `ModuleFactory::Create(...)` in `ModuleFactory.cpp`, which creates and initializes every module
- `ModuleConnector::Connect(...)` in `ModuleConnector.cpp`, which connects all the inputs (predecessors) to a given module
- `connect(...)` in the anonymous namespace of `ModuleConnector.cpp`, which connects the i-th input to a given module

Each change is just a matter of copying and pasting one or two lines; look for the `// REMARK: Insert new modules here` comments in the files above.
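These extension points follow a familiar pattern: a factory that maps a module name from the settings file to a constructor, and a connector that wires each numbered input to its predecessor. The sketch below is a hypothetical, heavily simplified version of that pattern; `ModuleBase`, `CreateModule` and `ConnectInput` are illustrative names, not the code in `ModuleFactory.cpp` or `ModuleConnector.cpp`, and the 1-based input index is an assumption.

```cpp
#include <cstddef>
#include <functional>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>
#include <vector>

namespace sketch {

// Hypothetical minimal module: remembers which predecessors feed its inputs.
class ModuleBase {
public:
    virtual ~ModuleBase() = default;
    std::vector<ModuleBase*> inputs;  // inputs[i] feeds input port i + 1
};

class ImageQueue : public ModuleBase {};
class FaceDetection : public ModuleBase {};
class UserManager : public ModuleBase {};

using Creator = std::function<std::unique_ptr<ModuleBase>()>;

// Factory: one line per module type (the role of ModuleFactory::Create).
std::unique_ptr<ModuleBase> CreateModule(const std::string& name) {
    static const std::map<std::string, Creator> creators = {
        {"imageQueue", [] { return std::make_unique<ImageQueue>(); }},
        {"faceDetection", [] { return std::make_unique<FaceDetection>(); }},
        {"userManager", [] { return std::make_unique<UserManager>(); }},
        // A new module would be registered with one more line here.
    };
    const auto it = creators.find(name);
    if (it == creators.end()) throw std::invalid_argument("unknown module: " + name);
    return it->second();
}

// Connector: attaches a predecessor to the given 1-based input of the target.
void ConnectInput(ModuleBase& target, ModuleBase& predecessor, int inputIndex) {
    if (inputIndex < 1) throw std::invalid_argument("input index must be >= 1");
    const auto slot = static_cast<std::size_t>(inputIndex - 1);
    if (target.inputs.size() <= slot) target.inputs.resize(slot + 1, nullptr);
    target.inputs[slot] = &predecessor;
}

}  // namespace sketch
```

With the `userManager` entry above, the connector would be called once per `port` element: input 1 from `imageQueue`, input 2 from `faceDetection`.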

[1] K. Bertok and A. Fazekas, "Facial gesture recognition and its applications," In Gesture recognition: performance, applications and features, Nova Science Publishers, New York, pp. 1-30, 2018.

[2] K. Bertok and A. Fazekas, "Face recognition on mobile platforms," In Proceedings of the 7th IEEE International Conference on Cognitive Infocommunications: CogInfoCom 2016, pp. 37-43, 2016.

[3] K. Bertok and A. Fazekas, "Recognizing complex head movements," Australian Journal of Intelligent Information Processing Systems, vol. 14, pp. 3-17, 2016.