
Add Real-Time License Plate Detector Example Project (YOLOv3, CRAFT, CRNN) #803


@RobertLucian this is really awesome! I'll go through it now and let you know if I have any feedback, but at first glance it looks great.


As agreed, the client has been moved to a separate repository here: … To verify that the deployed models work as expected on AWS with Cortex, this half of the project has gotten a … This verification is useful before diving into the client app from the other repository (see the link above).

There will be a follow-up article on this subject in one or two days. I've linked the article in the README, but for now the link leads to a 404.

This PR contains the project I've been working on for the past few months.

It's a system, either physical or purely software-based, that takes a video stream as input and, in real time, broadcasts a feedback stream to the browser. The feedback stream contains overlaid boxes marking the license plates detected in traffic. If a license plate is also recognized, the recognized text is placed next to its bounding box.

Here's a video of it.

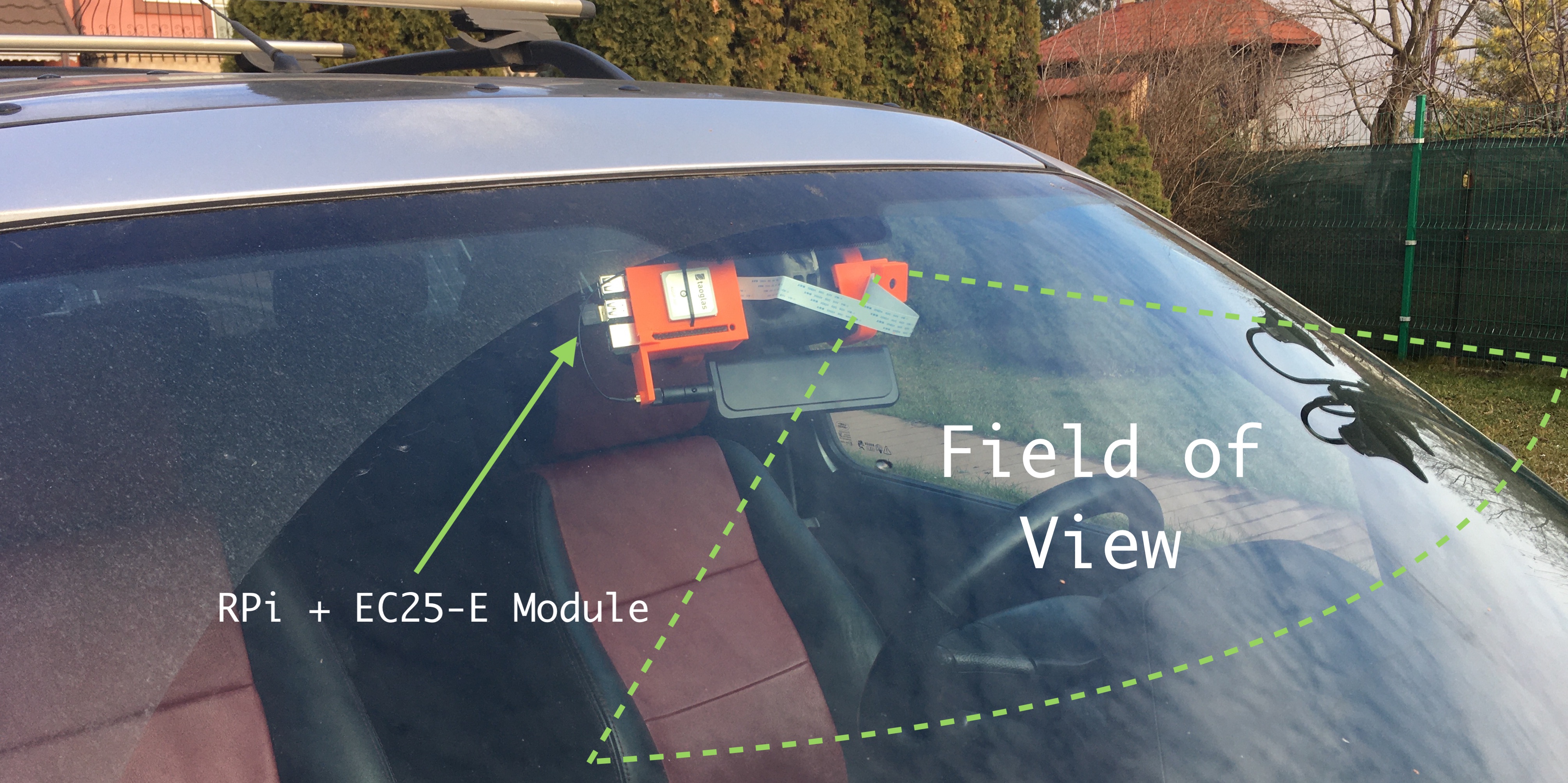

And here's how the embedded system sits on my car's rear-view mirror. All of these parts are custom-built and 3D printed.

More photos of the project can be found here; I've uploaded them to Imgur.

The video source for this app can be either a video file or a camera (more specifically, a Raspberry Pi Camera). The video file is treated as a continuous stream that must be processed immediately, just like a camera feed. Video file support was added for those who don't have a Raspberry Pi, a Pi Camera, and the other parts lying around. I've also linked a video you can download to experiment with.
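The PR doesn't show how the client replays a video file "like a camera". One way to get that behavior is to pace the file by wall-clock time and always hand the consumer the newest frame that has "arrived", dropping the ones it couldn't keep up with instead of queueing them. The sketch below assumes that approach; `live_frames`, `FakeClock`, and `replay` are hypothetical names, not part of the project's client.

```python
import time
from typing import Callable, Iterator, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def live_frames(
    frames: Sequence[T],
    fps: float,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[Tuple[int, T]]:
    """Yield (index, frame) pairs as if `frames` were a live camera feed.

    Frame i "arrives" at start + i / fps. A slow consumer always gets the
    newest frame that has arrived, so frames it missed are dropped instead
    of queued; a fast consumer waits for the next frame to arrive.
    """
    start = clock()
    next_index = 0
    while next_index < len(frames):
        elapsed = clock() - start
        newest = int(elapsed * fps)  # newest frame that has "arrived" so far
        if newest >= len(frames):
            break  # the video is over; unprocessed frames are lost, as with a camera
        if newest < next_index:
            # Consumer is ahead of the stream: wait for the next frame.
            sleep(max(0.0, next_index / fps - elapsed))
            newest = next_index
        yield newest, frames[newest]
        next_index = newest + 1


class FakeClock:
    """Deterministic stand-in for time.monotonic/time.sleep (demo only)."""

    def __init__(self) -> None:
        self.t = 0.0

    def now(self) -> float:
        return self.t

    def sleep(self, dt: float) -> None:
        self.t += dt


def replay(num_frames: int, fps: float, seconds_per_frame: float) -> List[int]:
    """Return the frame indices a consumer with a fixed per-frame cost would process."""
    clk = FakeClock()
    processed = []
    for index, _frame in live_frames(list(range(num_frames)), fps, clk.now, clk.sleep):
        processed.append(index)
        clk.sleep(seconds_per_frame)  # simulate inference time
    return processed


if __name__ == "__main__":
    # A consumer needing 0.25 s per frame on a 10 fps, 20-frame clip.
    print(replay(20, fps=10, seconds_per_frame=0.25))  # [0, 2, 5, 7, 10, 12, 15, 17]
```

Dropping stale frames keeps end-to-end latency bounded when inference falls behind, which matches the camera behavior described above; a queue would instead let latency grow without limit.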

The models used in this project are YOLOv3, the CRAFT text detector, and the CRNN text recognizer.
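The PR names the three models but not exactly how their outputs are chained. Assuming the usual arrangement for this kind of pipeline (YOLOv3 finds plates in the frame, CRAFT finds text regions inside each plate crop, CRNN reads each region), a per-frame sketch could look like the following. The callables stand in for the deployed APIs; `annotate_frame`, `PlateResult`, `crop`, and the toy `Image` type are hypothetical. Making the recognizer optional mirrors the PR's note that leaving the CRNN endpoint empty disables recognition; skipping the CRAFT call in that case is an additional assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels
Image = List[List[int]]          # toy grayscale image: rows of pixel values


def crop(image: Image, box: Box) -> Image:
    """Return the sub-image inside `box` (x2/y2 exclusive)."""
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in image[y1:y2]]


@dataclass
class PlateResult:
    box: Box             # plate bounding box in frame coordinates
    text: Optional[str]  # None when recognition is disabled or finds nothing


def annotate_frame(
    frame: Image,
    detect_plates: Callable[[Image], Sequence[Box]],          # e.g. a YOLOv3 API call
    detect_text: Callable[[Image], Sequence[Box]],            # e.g. a CRAFT API call
    recognize_text: Optional[Callable[[Image], str]] = None,  # e.g. a CRNN API call
) -> List[PlateResult]:
    results = []
    for plate_box in detect_plates(frame):
        if recognize_text is None:
            # Recognition disabled: still report the plate's bounding box.
            results.append(PlateResult(plate_box, None))
            continue
        plate = crop(frame, plate_box)
        # Text boxes are relative to the plate crop, so crop the crop.
        words = [recognize_text(crop(plate, b)) for b in detect_text(plate)]
        text = " ".join(w for w in words if w) or None
        results.append(PlateResult(plate_box, text))
    return results


if __name__ == "__main__":
    frame = [[0] * 10 for _ in range(6)]
    stub_yolo = lambda img: [(1, 1, 9, 5)]
    stub_craft = lambda img: [(0, 0, 4, 4), (4, 0, 8, 4)]
    stub_crnn = lambda img: "W%d" % len(img[0])  # "reads" the region width
    print(annotate_frame(frame, stub_yolo, stub_craft, stub_crnn))
```

Each returned `PlateResult` carries what the browser overlay needs: a box to draw and, if available, the text to place next to it.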

YOLOv3 is the only model that has been fine-tuned. I generated a dataset by driving around town with my dashcam device and then fine-tuned the model to detect license plates. The dataset can be found here. The fine-tuned model has been submitted to this repo in a PR and is now part of it.

When the system is working optimally (i.e., there are enough instances in the pool to handle the incoming frame rate), the average latency I've experienced, from the moment a frame is captured until it's displayed in the browser with all the bounding boxes and recognized text, is about 0.5-0.7 seconds. The downside, for now, is that a single stream at 30 fps and a resolution of 480x270 requires about 20 GPU-equipped instances. With some optimizations (half precision, a multiprocessed web API server, more vCPUs, perhaps tinyYOLOv3), I don't see why a single instance couldn't handle a whole stream (heck, I think even 2 streams would be doable on a single GPU at that point).
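The scaling numbers above can be made concrete with some back-of-the-envelope arithmetic. Assuming frames are spread evenly over interchangeable instances, 30 fps across about 20 instances means each instance sustains roughly 30 / 20 = 1.5 frames/s; a single instance per stream would therefore need about a 20x throughput improvement. Little's law (frames in flight = arrival rate × latency) also implies that at 0.5-0.7 s latency about 15-21 frames are in the pipeline at any moment. The helper names below are hypothetical, and the per-instance figure is derived from the PR's numbers, not measured.

```python
import math


def instances_needed(stream_fps: float, frames_per_sec_per_instance: float) -> int:
    """Smallest number of instances whose combined throughput keeps up with the stream."""
    return math.ceil(stream_fps / frames_per_sec_per_instance)


def frames_in_flight(stream_fps: float, latency_s: float) -> float:
    """Little's law: average number of frames in the pipeline = arrival rate x latency."""
    return stream_fps * latency_s


if __name__ == "__main__":
    # Figures from the PR: 30 fps needs about 20 GPU instances.
    per_instance = 30 / 20
    print(per_instance)                        # 1.5
    print(instances_needed(30, per_instance))  # 20
    # One instance per stream needs per-instance throughput equal to the stream fps.
    print(instances_needed(30, 30))            # 1
    print(frames_in_flight(30, 0.5), frames_in_flight(30, 0.7))
```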

Suggestion: text recognition requests can be disabled within the app by leaving the CRNN API endpoint address empty (`""`). This is useful if you don't want to spin up that many GPUs.

Checklist:

- `make test` and `make lint`
- `summary.md` (view in gitbook after merging)