Botao Ye · Sifei Liu · Haofei Xu · Xueting Li · Marc Pollefeys · Ming-Hsuan Yang · Songyou Peng

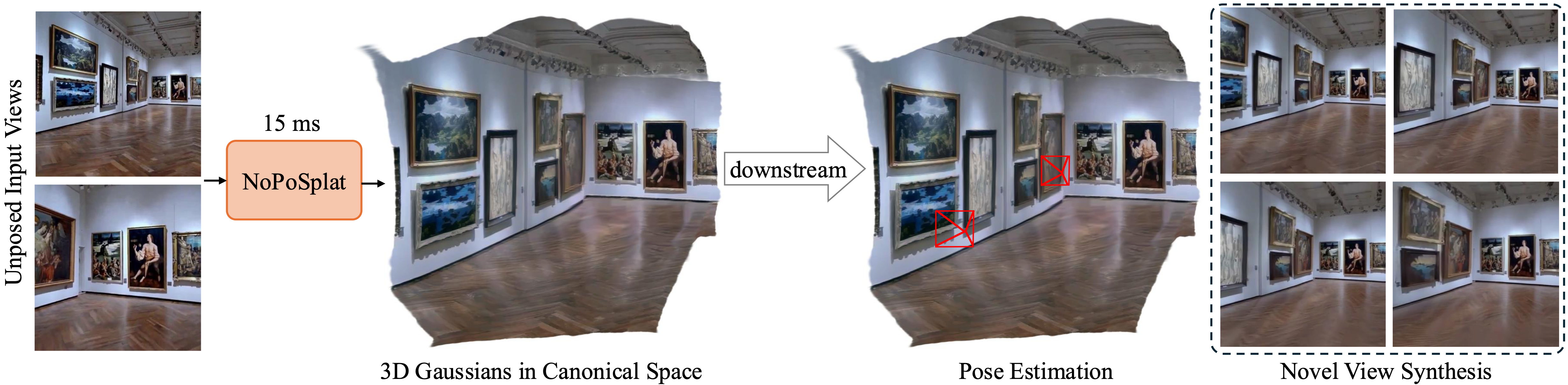

NoPoSplat predicts 3D Gaussians in a canonical space from unposed sparse images, enabling high-quality novel view synthesis and accurate pose estimation.


Our code requires Python 3.10+ and was developed with PyTorch 2.1.2 and CUDA 11.8, but it should also work with newer PyTorch/CUDA versions.
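To check whether an existing environment already matches these versions, a small script like the one below can help. It is a sketch under stated assumptions: it treats Python 3.10 and PyTorch 2.1.2 as minimum versions (the README only says newer versions *should* work), it does not check the CUDA version, and the helper names `parse_version` and `meets_requirements` are hypothetical, not part of the repository.

```python
import re
import sys
from importlib import metadata
from typing import Optional, Tuple

# Treating the development versions as hard minimums is an assumption.
MIN_PYTHON = (3, 10, 0)
MIN_TORCH = (2, 1, 2)


def parse_version(text: str) -> Tuple[int, ...]:
    """Parse '2.1.2+cu118' -> (2, 1, 2); local (+cu118) and pre-release tags are ignored."""
    public = text.split("+", 1)[0]
    numbers = []
    for piece in public.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        numbers.append(int(match.group()))
    # Pad so that '3.10' compares like '3.10.0'.
    return tuple(numbers + [0] * (3 - len(numbers)))


def meets_requirements(python_version: str, torch_version: Optional[str]) -> bool:
    """True if Python and PyTorch are at least the versions the README was developed with."""
    if torch_version is None:
        return False
    return (parse_version(python_version) >= MIN_PYTHON
            and parse_version(torch_version) >= MIN_TORCH)


if __name__ == "__main__":
    python_version = "{}.{}.{}".format(*sys.version_info[:3])
    try:
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        torch_version = None  # PyTorch is not installed in this environment
    ok = meets_requirements(python_version, torch_version)
    print(f"Python {python_version}, torch {torch_version}: requirements met = {ok}")
```

Reading the PyTorch version through `importlib.metadata` avoids importing `torch` itself, so the check stays fast and works even when the CUDA libraries are misconfigured.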

- Clone NoPoSplat.

```bash
git clone https://github.com/cvg/NoPoSplat
cd NoPoSplat
```

- Create the environment; here we show an example using conda.

```bash
conda create -y -n noposplat python=3.10
conda activate noposplat
pip install torch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2 --index-url https://download.pytorch.org/whl/cu118
pip install -r requirements.txt
```

- Optional: compile the CUDA kernels for RoPE (as in CroCo v2).

```bash
# NoPoSplat relies on RoPE positional embeddings, for which you can compile CUDA kernels for faster runtime.
cd src/model/encoder/backbone/croco/curope/
python setup.py build_ext --inplace
cd ../../../../../..
```

Our models are hosted on Hugging Face 🤗

| Model name | Training resolution | Training data |
|---|---|---|
| re10k.ckpt | 256x256 | re10k |
| acid.ckpt | 256x256 | acid |
| mixRe10kDl3dv.ckpt | 256x256 | re10k, dl3dv |
| mixRe10kDl3dv_512x512.ckpt | 512x512 | re10k, dl3dv |

We assume the downloaded weights are located in the pretrained_weights directory.
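Before running the commands, it can be useful to confirm which of the listed checkpoints are actually present. The sketch below assumes you run it from the repository root; the file names and resolutions are copied from the table, while the helper `missing_checkpoints` is hypothetical. The MASt3R weights are not checked because their file name is not given in this README.

```python
from pathlib import Path
from typing import Dict, List, Tuple, Union

# File names and training resolutions (width, height) from the checkpoint table.
EXPECTED_CHECKPOINTS: Dict[str, Tuple[int, int]] = {
    "re10k.ckpt": (256, 256),
    "acid.ckpt": (256, 256),
    "mixRe10kDl3dv.ckpt": (256, 256),
    "mixRe10kDl3dv_512x512.ckpt": (512, 512),
}


def missing_checkpoints(weights_dir: Union[str, Path] = "pretrained_weights") -> List[str]:
    """Return the expected checkpoint files that are not present in weights_dir."""
    directory = Path(weights_dir)
    return sorted(name for name in EXPECTED_CHECKPOINTS
                  if not (directory / name).is_file())


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoints:", ", ".join(missing))
    else:
        print("All listed checkpoints found.")
```

You only need the checkpoints used by the commands you intend to run, so a partial download is fine.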

Our camera conventions are the same as pixelSplat's. The camera intrinsic matrices are normalized (the first row is divided by the image width, and the second row by the image height). The camera extrinsic matrices are OpenCV-style camera-to-world matrices (+X right, +Y down, +Z pointing into the screen, i.e., the camera's viewing direction).
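The conventions above can be illustrated with a short NumPy sketch that converts pixel intrinsics to the normalized form, inverts a camera-to-world matrix, and projects a point. The function names are hypothetical and not taken from the NoPoSplat or pixelSplat code; the sketch only assumes a 3x3 pinhole intrinsic matrix and a rigid 4x4 extrinsic matrix.

```python
import numpy as np


def normalize_intrinsics(K_pixels: np.ndarray, width: int, height: int) -> np.ndarray:
    """Pixel-space 3x3 intrinsics -> normalized intrinsics (row 0 / width, row 1 / height)."""
    K = np.array(K_pixels, dtype=np.float64)  # copy so the input is not modified
    K[0] /= width
    K[1] /= height
    return K


def denormalize_intrinsics(K_normalized: np.ndarray, width: int, height: int) -> np.ndarray:
    """Inverse of normalize_intrinsics, e.g. to recover pixel units for a given resolution."""
    K = np.array(K_normalized, dtype=np.float64)
    K[0] *= width
    K[1] *= height
    return K


def world_to_camera(c2w: np.ndarray) -> np.ndarray:
    """Invert a rigid 4x4 camera-to-world matrix: R -> R^T, t -> -R^T t."""
    R, t = c2w[:3, :3], c2w[:3, 3]
    w2c = np.eye(4)
    w2c[:3, :3] = R.T
    w2c[:3, 3] = -R.T @ t
    return w2c


def project(point_world: np.ndarray, K_normalized: np.ndarray, c2w: np.ndarray) -> np.ndarray:
    """Project a world point to normalized image coordinates (u right, v down)."""
    p_cam = world_to_camera(c2w) @ np.append(point_world, 1.0)
    if p_cam[2] <= 0:
        raise ValueError("point is behind the camera (+Z is the viewing direction)")
    uvw = K_normalized @ p_cam[:3]
    return uvw[:2] / uvw[2]


if __name__ == "__main__":
    # A 256x256 image with a 128 px focal length and the principal point at the center.
    K_px = np.array([[128.0, 0.0, 128.0], [0.0, 128.0, 128.0], [0.0, 0.0, 1.0]])
    K_n = normalize_intrinsics(K_px, width=256, height=256)
    c2w = np.eye(4)  # camera at the world origin, looking along +Z
    print(project(np.array([1.0, 0.0, 2.0]), K_n, c2w))  # u = 0.75, v = 0.5
```

Because the intrinsics are normalized, a visible point maps to coordinates in [0, 1] regardless of image resolution, which is what lets the same camera parameters be reused at 256x256 and 512x512.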

Please refer to DATASETS.md for dataset preparation.

First download the MASt3R pretrained model and put it in the ./pretrained_weights directory.

Then call src/main.py via:

```bash
# 8 GPUs, each with batch size 16. Remove the last two arguments if you don't want to use wandb for logging.
python -m src.main +experiment=re10k wandb.mode=online wandb.name=re10k
```

This default training configuration requires 8 GPUs, each with a batch size of 16 and at least 80GB of memory. Training takes approximately 6 hours. You can adjust the batch size to fit your hardware, but note that changing the total batch size may require adjusting the initial learning rate to maintain performance. Refer to the re10k_1x8 configuration for training on a single A6000 GPU (48GB memory), which produces similar performance.
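The README does not say how the learning rate should change with the total batch size. A common heuristic is the linear scaling rule (scale the learning rate by the ratio of total batch sizes), with square-root scaling as a more conservative alternative. The sketch below implements both relative to the default 8 x 16 = 128 setup; the base learning rate is a placeholder you should read from your experiment config, and neither rule is confirmed to be what the authors used.

```python
import math

# Default setup from this README: 8 GPUs x batch size 16 per GPU.
DEFAULT_NUM_GPUS = 8
DEFAULT_BATCH_PER_GPU = 16


def scaled_learning_rate(base_lr: float, num_gpus: int, batch_per_gpu: int,
                         rule: str = "linear") -> float:
    """Rescale base_lr (tuned for the default setup) to a new total batch size.

    'linear' multiplies by the batch-size ratio, 'sqrt' by its square root.
    Both are generic heuristics, not settings taken from the NoPoSplat configs.
    """
    default_total = DEFAULT_NUM_GPUS * DEFAULT_BATCH_PER_GPU
    ratio = (num_gpus * batch_per_gpu) / default_total
    if rule == "linear":
        return base_lr * ratio
    if rule == "sqrt":
        return base_lr * math.sqrt(ratio)
    raise ValueError(f"unknown rule: {rule!r}")


if __name__ == "__main__":
    base_lr = 1e-4  # placeholder; use the value from your experiment config
    for rule in ("linear", "sqrt"):
        print(rule, scaled_learning_rate(base_lr, num_gpus=2, batch_per_gpu=16, rule=rule))
```

For a single-GPU run, the re10k_1x8 configuration is a better starting point than either heuristic, since it was prepared for that hardware.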

To evaluate novel view synthesis performance, run:

```bash
# RealEstate10K
python -m src.main +experiment=re10k mode=test wandb.name=re10k dataset/view_sampler@dataset.re10k.view_sampler=evaluation dataset.re10k.view_sampler.index_path=assets/evaluation_index_re10k.json checkpointing.load=./pretrained_weights/re10k.ckpt test.save_image=true

# ACID
python -m src.main +experiment=acid mode=test wandb.name=acid dataset/view_sampler@dataset.re10k.view_sampler=evaluation dataset.re10k.view_sampler.index_path=assets/evaluation_index_acid.json checkpointing.load=./pretrained_weights/acid.ckpt test.save_image=true
```

You can set wandb.name=SAVE_FOLDER_NAME to specify the saving path.
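The two test commands differ only in the dataset name, the index file, and the checkpoint, so they can be generated programmatically, for example to loop over datasets or checkpoints. The helper `nvs_test_command` below is hypothetical; it simply reassembles the Hydra overrides shown in the commands above and does not add any new options.

```python
import shlex
from typing import List, Optional


def nvs_test_command(dataset: str, checkpoint: str,
                     save_folder: Optional[str] = None) -> List[str]:
    """Hypothetical helper that rebuilds the test command for 're10k' or 'acid'."""
    if dataset not in ("re10k", "acid"):
        raise ValueError(f"unsupported dataset: {dataset!r}")
    return [
        "python", "-m", "src.main",
        f"+experiment={dataset}",
        "mode=test",
        f"wandb.name={save_folder or dataset}",  # also determines the saving path
        # The ACID command in this README also uses the dataset.re10k.* keys.
        "dataset/view_sampler@dataset.re10k.view_sampler=evaluation",
        f"dataset.re10k.view_sampler.index_path=assets/evaluation_index_{dataset}.json",
        f"checkpointing.load={checkpoint}",
        "test.save_image=true",
    ]


if __name__ == "__main__":
    for name in ("re10k", "acid"):
        cmd = nvs_test_command(name, f"./pretrained_weights/{name}.ckpt")
        print(shlex.join(cmd))
```

To actually launch a run, pass the returned list to `subprocess.run(cmd, check=True)` from the repository root; keeping the command as a list avoids shell quoting issues with the `@` and `/` characters in the overrides.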

To evaluate the pose estimation performance, you can run the following command:

```bash
# RealEstate10K
python -m src.eval_pose +experiment=re10k +evaluation=eval_pose checkpointing.load=./pretrained_weights/mixRe10kDl3dv.ckpt dataset/view_sampler@dataset.re10k.view_sampler=evaluation dataset.re10k.view_sampler.index_path=assets/evaluation_index_re10k.json

# ACID
python -m src.eval_pose +experiment=acid +evaluation=eval_pose checkpointing.load=./pretrained_weights/mixRe10kDl3dv.ckpt dataset/view_sampler@dataset.re10k.view_sampler=evaluation dataset.re10k.view_sampler.index_path=assets/evaluation_index_acid.json

# ScanNet-1500
python -m src.eval_pose +experiment=scannet_pose +evaluation=eval_pose checkpointing.load=./pretrained_weights/mixRe10kDl3dv.ckpt
```

Note that these commands evaluate the mixed model trained on RealEstate10K and DL3DV. You can replace the checkpoint path to evaluate other trained models.
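This README does not list the metrics that `src.eval_pose` reports. For orientation, two-view pose accuracy is commonly measured by the geodesic rotation error and the angle between translation directions (translation magnitude is ignored because two-view pose is only recoverable up to scale). The NumPy sketch below computes these under that assumption, using the OpenCV camera-to-world convention described earlier; all function names are hypothetical and may not match what the evaluation script computes.

```python
import numpy as np


def relative_pose(c2w_a: np.ndarray, c2w_b: np.ndarray) -> np.ndarray:
    """Pose of camera b expressed in camera a's frame (4x4 camera-to-world inputs)."""
    return np.linalg.inv(c2w_a) @ c2w_b


def rotation_error_deg(R_est: np.ndarray, R_gt: np.ndarray) -> float:
    """Geodesic angle between two rotation matrices, in degrees."""
    cos = (np.trace(R_est.T @ R_gt) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def translation_direction_error_deg(t_est: np.ndarray, t_gt: np.ndarray) -> float:
    """Angle between translation directions in degrees; magnitudes are ignored."""
    cos = np.dot(t_est, t_gt) / (np.linalg.norm(t_est) * np.linalg.norm(t_gt))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def rotation_about_z(deg: float) -> np.ndarray:
    """3x3 rotation by `deg` degrees about the Z axis (used only for the demo)."""
    c, s = np.cos(np.radians(deg)), np.sin(np.radians(deg))
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


if __name__ == "__main__":
    # Ground truth: camera b is 1 unit to the right of camera a, same orientation.
    gt = np.eye(4)
    gt[:3, 3] = [1.0, 0.0, 0.0]
    # A hypothetical estimate: rotated by 10 degrees, translation at twice the scale.
    est = np.eye(4)
    est[:3, :3] = rotation_about_z(10.0)
    est[:3, 3] = [2.0, 0.0, 0.0]
    rel_gt = relative_pose(np.eye(4), gt)
    rel_est = relative_pose(np.eye(4), est)
    print("rotation error:", rotation_error_deg(rel_est[:3, :3], rel_gt[:3, :3]))
    print("translation direction error:",
          translation_direction_error_deg(rel_est[:3, 3], rel_gt[:3, 3]))
```

Per-pair errors like these are typically summarized over a test set, for example as the area under the accuracy curve at several angular thresholds; check the evaluation script for the exact protocol used in the paper.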

This project is built on several fantastic repositories: pixelSplat, DUSt3R, and CroCo. We thank the original authors for their excellent work. We also thank David Charatan for kindly providing the evaluation code and the pretrained models for some of the previous methods.

```bibtex
@article{ye2024noposplat,
  title   = {No Pose, No Problem: Surprisingly Simple 3D Gaussian Splats from Sparse Unposed Images},
  author  = {Ye, Botao and Liu, Sifei and Xu, Haofei and Li, Xueting and Pollefeys, Marc and Yang, Ming-Hsuan and Peng, Songyou},
  journal = {arXiv preprint arXiv:2410.24207},
  year    = {2024}
}
```