Avoid KeyError on worker restart #138

@raybellwaves, do you still have your memory use case somewhere? @wgustafson, would you like to give this a try? I don't see how to write a simple unit test for now.

I've been traveling, so apologies for the slow response over the last week. I have a script that was throwing KeyErrors, but I need to clean things up and see if it still behaves the same way. I will try to do this early this coming week.

Yeah, my memory issue use case is https://gist.github.com/raybellwaves/f28777bf840cc40f4c76d88beca528c5. I've added the output to the file. What's the best way to test your branch (this version)? I'm not familiar with cloning branches.

No worries @wgustafson! I was just browsing issues and updating things. I understand perfectly that you've got other things to do, no hurry.

Taking a look at this again this morning. My reproducible KeyError happens with the code I posted in #122 on August 14, 2018, so I just put the log output into that issue to keep things together. In my case, I can be certain the problem is not a lack of memory, so I don't know whether my problem is the same as @raybellwaves's.

@raybellwaves, here is something that should work for checking out this PR's code: … Personally, I have a git alias for this (there are other options as well if you search for them). Here is an excerpt from my … Basically, I can do this to get the code of this PR (it also works for getting the latest version of the PR): …
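As a rough illustration of one common approach (not the alias mentioned above, which isn't reproduced here), a minimal sketch that relies on GitHub exposing each pull request's head commit as `pull/<number>/head`. The helper names `pr_checkout_commands` and `checkout_pr` and the local branch name `pr-<number>` are hypothetical, and `remote` is assumed to point at the main repository (often `upstream` when working from a fork).

```python
import subprocess


def pr_checkout_commands(pr_number, remote="origin"):
    """Build git commands that fetch GitHub PR `pr_number` and check it out
    as a local branch named pr-<number> (hypothetical naming)."""
    branch = f"pr-{pr_number}"
    return [
        # GitHub publishes each PR's head commit under pull/<n>/head.
        ["git", "fetch", remote, f"pull/{pr_number}/head"],
        # -B creates the branch, or resets it to the freshly fetched commit,
        # so re-running picks up the latest version of the PR.
        ["git", "checkout", "-B", branch, "FETCH_HEAD"],
    ]


def checkout_pr(pr_number, remote="origin"):
    """Run the commands above in the current repository."""
    for cmd in pr_checkout_commands(pr_number, remote):
        subprocess.run(cmd, check=True)
```

For this PR, that would be `checkout_pr(138, remote="upstream")` from inside a clone of dask-jobqueue.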

@lesteve, thanks. The alias looks very handy. @guillaumeeb, sorry, I'll be on vacation until mid-September and won't have a chance to try this until then.

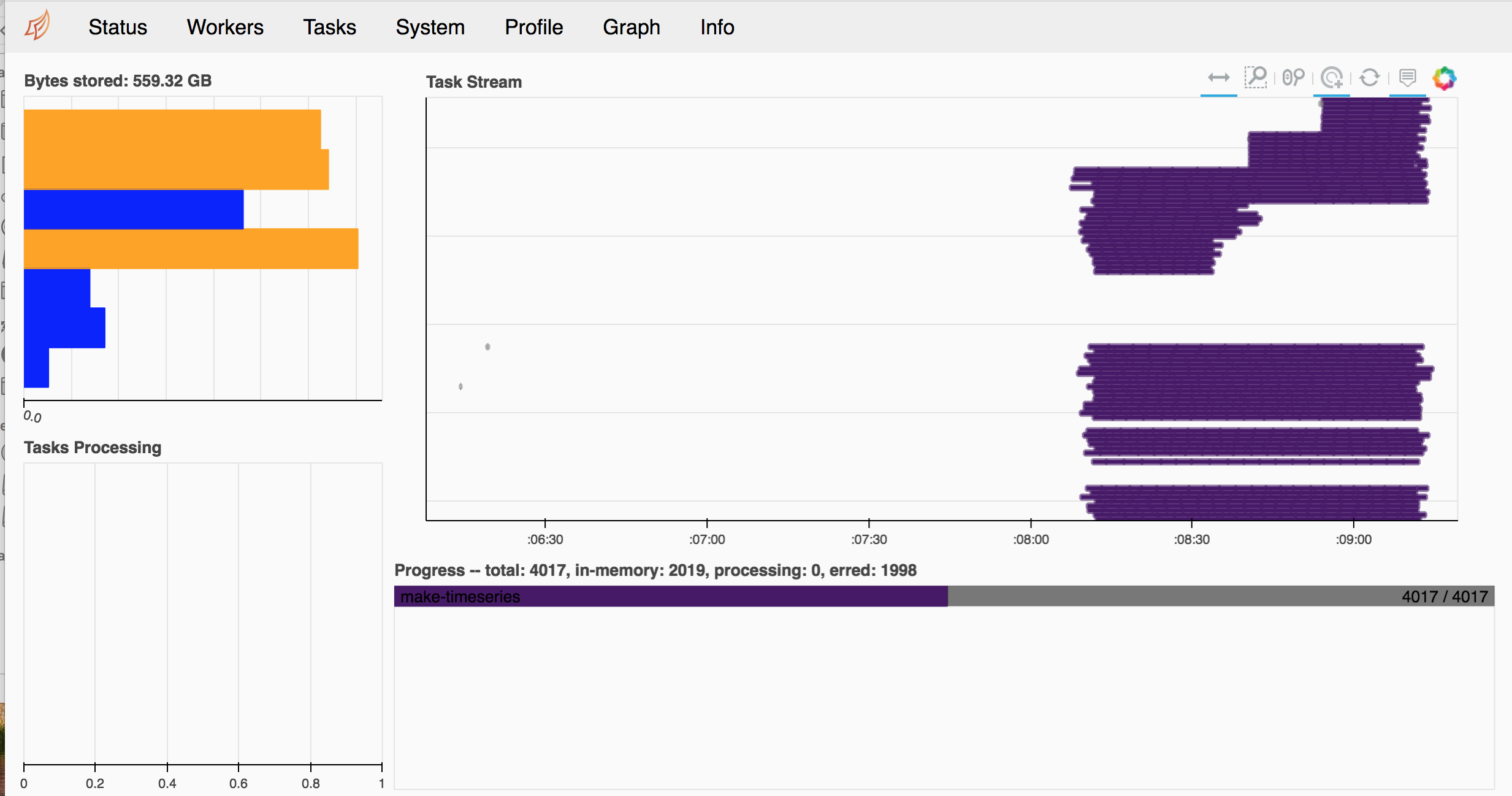

I can confirm the KeyError has gone away. It now says … and, looking at the Dask dashboard, I can see that some of the tasks erred. My output is here: https://gist.github.com/raybellwaves/3033fe1679510ea4d5d64e0658225fc2

Thanks @raybellwaves. Looking at your code, since you're trying to persist the whole dataframe in memory, I assume you got some memory errors on your workers, which seems to be confirmed by the orange bars in your dashboard screenshot. Some code reviews here would be welcome.

According to

Yep. A one-terabyte dataframe is a bit too much for our HPC, as it's often hard to get multiple jobs running. The output of … Is there a way to report memory issues in the output? If I didn't have the dashboard up and just saw …

This message comes from dask-jobqueue, so we can improve it there and give a hint that this may be due to a memory problem. At the same time, there may be something to do upstream to get information about why the worker was restarted.
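A minimal sketch of the kind of hint discussed here. The function `worker_lost_message` and its wording are hypothetical and are not the actual message in dask-jobqueue; it assumes the per-worker memory limit is known in bytes and only adds the hint when it is.

```python
def worker_lost_message(worker_name, memory_limit=None):
    """Hypothetical helper: describe a lost or restarted worker and, when the
    memory limit is known, hint that running out of memory is a likely cause."""
    msg = f"Worker {worker_name} was restarted or lost."
    if memory_limit is not None:
        # Express the limit in GiB so it is easy to compare with job settings.
        msg += (
            " This may be caused by the worker exceeding its memory limit"
            f" of {memory_limit / 2**30:.1f} GiB; check the dashboard or the job's log."
        )
    return msg
```

For example, `worker_lost_message("worker-3", 4 * 2**30)` mentions a 4.0 GiB limit, while omitting `memory_limit` keeps the plain message.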

Closes #117.

Should help diagnose the problem in #122.

I chose not to raise an error, even if the `job_id` is not found in `finished_jobs` of the scheduler plugin: users may want to manually add jobs and workers to the cluster, though this is not good practice.
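To illustrate the choice described above, a simplified sketch of a job-tracking structure that tolerates unknown or already-finished job ids instead of raising `KeyError`. `JobTracker` and its `running_jobs`/`finished_jobs` dictionaries are a hypothetical stand-in, not the actual dask-jobqueue scheduler plugin; the key idea is using `dict.pop(key, None)` and `dict.get` rather than bare `d[key]` lookups.

```python
import logging

logger = logging.getLogger(__name__)


class JobTracker:
    """Hypothetical stand-in for a scheduler plugin mapping batch-job ids
    to the addresses of their workers (not dask-jobqueue's actual code)."""

    def __init__(self):
        self.running_jobs = {}   # job_id -> set of worker addresses
        self.finished_jobs = {}  # job_id -> set of worker addresses

    def add_worker(self, job_id, address):
        # A restarted worker may re-register under a job already marked
        # finished; move that job back to running instead of failing.
        workers = self.finished_jobs.pop(job_id, None)
        if workers is None:
            # Unknown job (e.g. a manually added worker): just start tracking it.
            workers = self.running_jobs.setdefault(job_id, set())
        else:
            self.running_jobs[job_id] = workers
        workers.add(address)

    def remove_worker(self, job_id, address):
        workers = self.running_jobs.get(job_id)
        if workers is None:
            # A bare self.running_jobs[job_id] would raise KeyError here.
            logger.warning("Job %s not found in running jobs; ignoring", job_id)
            return
        workers.discard(address)
        if not workers:
            # Last worker of the job is gone: mark the job as finished.
            self.finished_jobs[job_id] = self.running_jobs.pop(job_id)
```

Logging a warning rather than raising keeps the scheduler running when a worker restart or a manually added worker makes the bookkeeping disagree with reality.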