Closed

Description

I ran the example from the tutorial here, but I got an error.

First, an error occurred unless `dist.init_process_group("nccl", rank=local_rank, world_size=world_size)` was called, so I added that line, which fixed it. With the code below, it now works fine on a single GPU.

# Filename: gpt-neo-2.7b-generation.py

import os

import deepspeed

import torch

import torch.distributed as dist

import transformers

from transformers import pipeline

local_rank = int(os.getenv('LOCAL_RANK', '0'))

world_size = int(os.getenv('WORLD_SIZE', '1'))

dist.init_process_group("nccl", rank=local_rank, world_size=world_size)

generator = pipeline('text-generation', model='EleutherAI/gpt-neo-1.3B', device=local_rank)

deepspeed.init_inference(generator.model,

mp_size=world_size,

dtype=torch.float,

replace_method='auto')

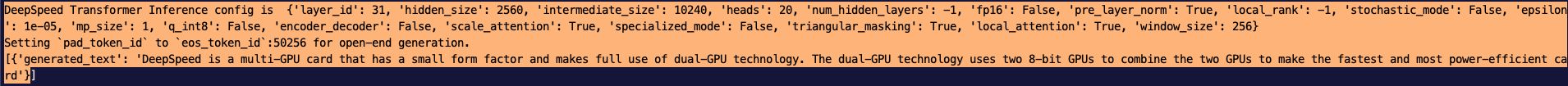

string = generator("DeepSpeed is", num_beams=5, repeation_penalty=2.0, no_repeat_ngram_size=4, min_length=50)

if torch.distributed.get_rank() == 0:

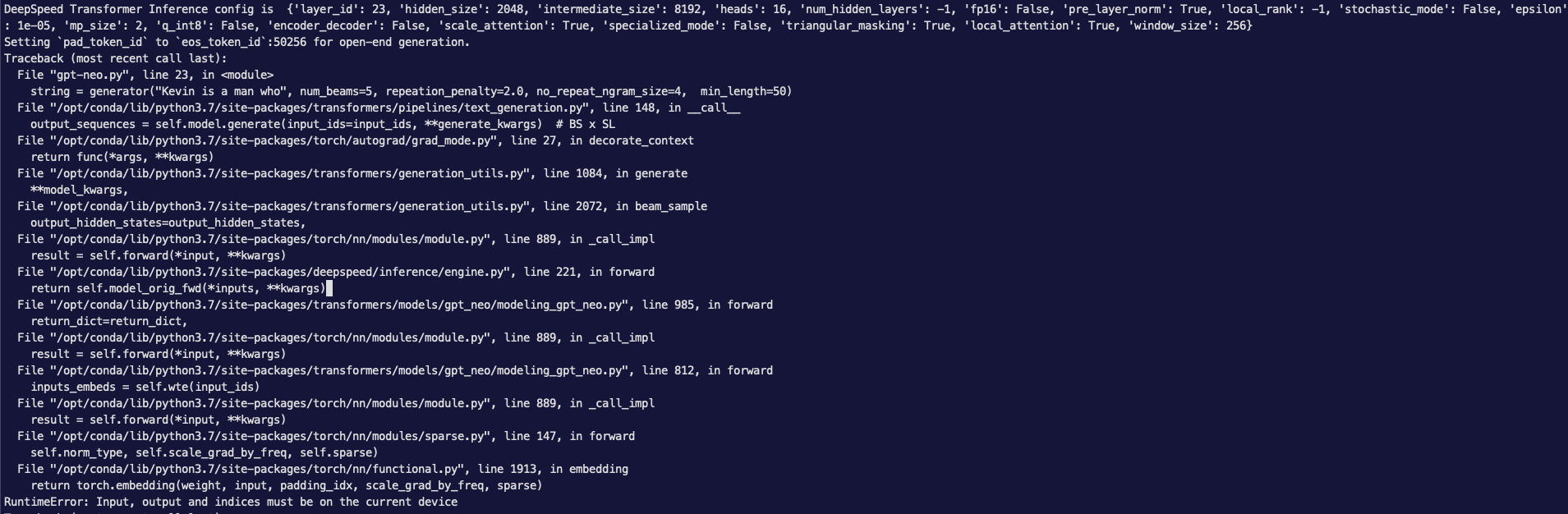

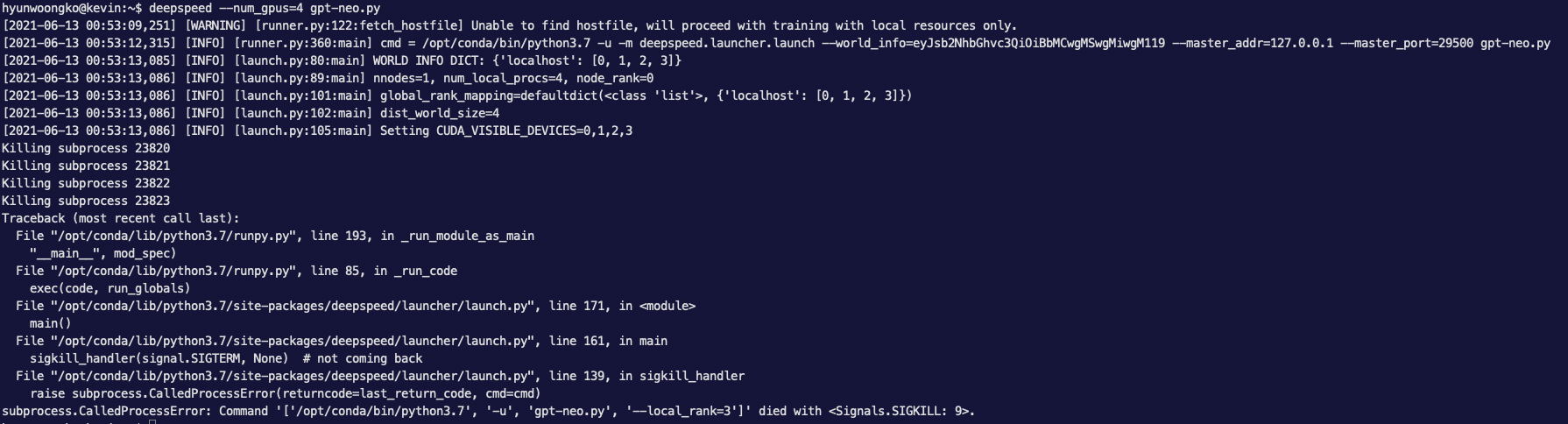

print(string)However, when I try the same thing in multi-GPU, the following error occurs. What's the problem?
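One detail in the script above that may be worth checking, though it is not confirmed as the cause of the multi-GPU error: `rank=local_rank` passes the per-node rank where `init_process_group` expects the global rank. On a single node the two coincide. The sketch below reads both values separately, assuming the launcher exports `RANK`, `LOCAL_RANK`, and `WORLD_SIZE`; `DistEnv` and `read_dist_env` are hypothetical names used only for illustration.

```python
import os
from typing import Mapping, NamedTuple


class DistEnv(NamedTuple):
    rank: int        # global rank across all nodes
    local_rank: int  # rank within this node, used to pick the GPU
    world_size: int  # total number of processes


def read_dist_env(env: Mapping[str, str] = os.environ) -> DistEnv:
    """Read launcher-provided variables, defaulting to a single process."""
    local_rank = int(env.get("LOCAL_RANK", "0"))
    # Assumption: if RANK is unset, fall back to LOCAL_RANK (single-node case).
    rank = int(env.get("RANK", str(local_rank)))
    world_size = int(env.get("WORLD_SIZE", "1"))
    return DistEnv(rank, local_rank, world_size)


if __name__ == "__main__":
    print(read_dist_env())
```

With this, the script could pass `rank=env.rank` to `dist.init_process_group` and `device=env.local_rank` to `pipeline`, keeping the two roles distinct.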
