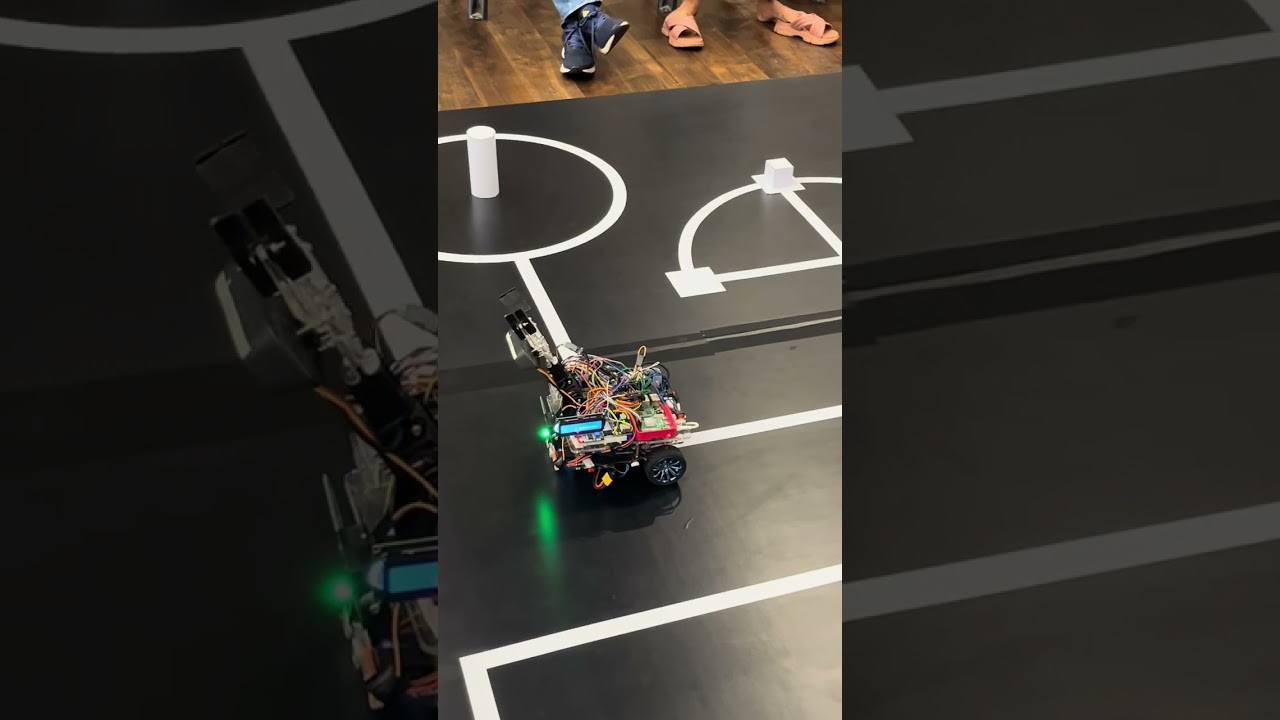

Computer vision system for identifying 3D objects and colors. Made for SLRC 2024. Powered by TensorFlow and OpenCV.

One of the tasks of SLRC 2024 is for the robot to identify whether the object at the center of a ring is a cube or a cylinder. We tackled this task using computer vision. We experimented with the two approaches mentioned below.

The robot carries a Raspberry Pi 4 Model B that runs a TensorFlow model for inference. Frames from a webcam video feed are used for object classification. The deep learning model uses MobileNetV1 as the base model with two dense layers attached, and was trained using transfer learning. The training accuracy of this model reached 99%.

The code for this technique can be found in the ./object_detection folder.
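The architecture described above can be sketched in Keras roughly as follows. This is a minimal sketch and not the code from ./object_detection: the 224x224 input size, the 128-unit hidden layer, the optimizer, the loss, and the function name `build_classifier` are all assumptions chosen for illustration.

```python
import tensorflow as tf


def build_classifier(input_shape=(224, 224, 3), hidden_units=128, weights="imagenet"):
    """MobileNetV1 base with two dense layers on top (layer sizes are assumed)."""
    # Pretrained MobileNetV1 without its ImageNet classification head.
    base = tf.keras.applications.MobileNet(
        input_shape=input_shape, include_top=False, weights=weights
    )
    base.trainable = False  # transfer learning: keep the base weights frozen

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    # Dense layer 1 (hidden size is an assumption).
    x = tf.keras.layers.Dense(hidden_units, activation="relu")(x)
    # Dense layer 2: two outputs, one per class (cube, cylinder).
    outputs = tf.keras.layers.Dense(2, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

With the ImageNet weights, input frames would normally be passed through `tf.keras.applications.mobilenet.preprocess_input` before training or inference, since MobileNetV1 expects pixel values scaled to the range -1 to 1.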

We also tried an OpenCV-only approach that detects the edges of the object in the center and generates contours. With a hard-coded contour count as the threshold, the cube and the cylinder can be told apart by comparing the number of contours generated. We found this method much more prone to errors, but the experimental code can be found in ./edge_detection.

Another task of SLRC 2024 is to identify the color of the wall in front of the line follower. Only two colors, green and blue, are possible. This could have been easily done with a color sensor module for Arduino, but since a camera was already mounted for the previous task, we implemented this functionality with OpenCV as well. The code is much simpler and uses two color masks, one for green and one for blue. The program then counts the pixel area of each mask separately and outputs the color with the greater pixel area. The code for this task can be found in the ./color_detection folder.

The final code running on the Raspberry Pi 4 Model B is in the main.py file. This file contains separate functions, get_shape() and get_color(), which are called by the Raspberry Pi only when the Arduino Mega sends a request. The main.py file runs automatically as soon as the Raspberry Pi boots up.
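The request-driven structure can be pictured with the sketch below. The README does not describe how the Arduino Mega signals the Raspberry Pi, so `read_request`, `send_reply`, the request names "shape" and "color", and the `None` stop sentinel are all hypothetical placeholders; the real link might use GPIO pins or serial.

```python
def serve_requests(read_request, send_reply, get_shape, get_color):
    """Run a detection function only when a request arrives.

    read_request and send_reply are hypothetical callables standing in for
    the real Arduino link, which the README does not specify.
    """
    handlers = {"shape": get_shape, "color": get_color}  # assumed request names
    while True:
        request = read_request()
        if request is None:  # assumed sentinel used here to stop the loop
            break
        handler = handlers.get(request)
        if handler is not None:  # unknown requests are ignored
            send_reply(handler())
```

Keeping the detection functions idle until a request arrives means the camera and model only do work at the points on the track where a result is actually needed.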

- TensorFlow and Keras

- OpenCV

- Google Colab to train the model

- Visual Studio Code

- Raspberry Pi 4 Model B

- Arduino Mega

- Hikvision Webcam

This guide will help you set up your Raspberry Pi 4 Model B to run a project using TensorFlow 2.15.0, Keras 2.15.0, OpenCV with contrib modules, and RPi.GPIO.

- Raspberry Pi 4 Model B with Raspbian OS installed.

- Internet connection.

First, ensure your system is up-to-date:

sudo apt-get update

sudo apt-get upgrade

Make sure Python and pip are installed:

sudo apt-get install python3 python3-pip

Clone the repository to your Raspberry Pi:

git clone https://github.com/devnithw/SLRC-vision-system.git

cd SLRC-vision-system

Install the dependencies from inside the repository folder:

pip install -r requirements.txt

Create a service file for your script:

sudo nano /etc/systemd/system/main.service

Paste the following content into the main.service file:

[Unit]

Description=Main Python Script for Vision System

After=multi-user.target

[Service]

Type=idle

ExecStart=/usr/bin/python3 /home/pi/Documents/SLRC-vision-system/main.py

[Install]

WantedBy=multi-user.target

- In the [Service] section, the ExecStart directive specifies the command to execute when starting the service. The first part of the ExecStart value should be the full path to your Python interpreter, and the second part should be the path to your target script file.

- For example, ExecStart=/usr/bin/python3 /home/pi/Documents/main.py indicates that the Python interpreter is /usr/bin/python3 and the target script is located at /home/pi/Documents/main.py.

Save the file and close the editor. Then, enable the service:

sudo systemctl enable main.service

To run the script immediately without rebooting:

sudo systemctl start main.service

Verify the service is running:

sudo systemctl status main.service

To temporarily stop the service:

sudo systemctl stop main.service

Ensure the service is configured to start automatically on boot:

sudo systemctl is-enabled main.service

This command should return enabled if the service is set to start at boot.

With these steps, your Python script main.py will now automatically run whenever the Raspberry Pi boots up.
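If the service does not start as expected, one thing worth checking is whether the required packages are visible to the interpreter named in ExecStart. The sketch below lists installed versions using only the standard library. The distribution names in `PACKAGES` are assumptions based on the packages named in this guide and should be adjusted to match requirements.txt.

```python
from importlib import metadata

# Assumed distribution names; adjust to match requirements.txt.
PACKAGES = ["tensorflow", "keras", "opencv-contrib-python", "RPi.GPIO"]


def installed_versions(packages=PACKAGES):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'not installed'}")
```

Running the script with /usr/bin/python3 (the interpreter used in the service file) rather than a different Python on the PATH checks the same environment the service will use.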

This project was developed for SLRC 2024, the Sri Lankan Robotics Challenge.

Contributions are welcome!

- Bug Fixes: If you find any bugs or issues, feel free to create an issue or submit a pull request.

- Feature Enhancements: If you have ideas for new features or improvements, don't hesitate to share them.

This project is licensed under the MIT License.