
Deploy to Local Kubernetes

CONTENT

- Specifications

- Previous Versions

- Install Docker Desktop

- Enable Kubernetes

- Install Helm

- Install NGINX Ingress Controller

- Install eShopOnContainers Using Helm

- Known Behaviours

- Explore eShopOnContainers

- Optional

- Additional Resources

We have upgraded the K8s scripts to work with the latest stable versions of Docker Desktop and Kubernetes. The current dev branch has been tested with the following specifications:

| Component | Version |
|---|---|
| OS | Windows 11 / macOS / Linux |
| Docker Desktop | 4.4.4 |
| Docker Engine | 20.10.12 |
| kubectl | 1.22.5 |
| Kubernetes | 1.22.5 |
| Kubernetes-Dashboard | 2.0 |
| Helm | 3.8.0 |
| Nginx Ingress controller | 1.1.1 |
| Nginx | 4.0.15 |

You can still refer to the older version of the scripts under the k8s/archived directory. For more details, see the archived page.

IMPORTANT: Directory k8s/archived will not be supported in the future.

You can install Docker Desktop by following:

- Install Docker Desktop on Windows

- Install Docker Desktop on Mac

To enable Kubernetes (k8s), click the Enable Kubernetes checkbox in the Kubernetes tab in Docker Settings and then click the "Apply" button.

If you also enable the "Show system containers" checkbox, you can see the Kubernetes system containers running by using docker ps.

Your Docker Desktop Kubernetes installation already contains kubectl, the CLI for running Kubernetes commands, which you'll need for the rest of the steps here.

IMPORTANT: You'll also have to increase the memory allocated to Docker to at least 6.25 GB, because you'll have 70+ containers running after deploying eShopOnContainers. On Windows, however, if you are using the Docker Desktop WSL 2 backend you don't need to do any manual configuration: it automatically uses the required CPU and memory resources while building and running containers. For more details, refer to: Docker Desktop WSL 2 backend
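As a rough sanity check of the memory setting, the sketch below compares a byte count against the 6.25 GB threshold mentioned above. It assumes the threshold means 6.25 GiB (6.25 x 1024^3 bytes) and that docker info reports the memory available to the Docker engine in bytes through its MemTotal field. The function name has_enough_memory is hypothetical and not part of the eShopOnContainers scripts.

```shell
# Succeeds when the given byte count is at least 6.25 GiB.
# Assumption: 6.25 GB in the text is read as 6.25 * 1024^3 = 6710886400 bytes.
has_enough_memory() {
  [ "$1" -ge 6710886400 ]
}

# Usage (requires a running Docker daemon):
#   has_enough_memory "$(docker info --format '{{.MemTotal}}')" && echo "memory OK"
```

With the WSL 2 backend this check is informational only, since memory is managed automatically there.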

Helm is the package manager for Kubernetes.

- For detailed installation steps, refer to the official documentation page.

- After a successful installation, you can check the installed version by running helm version.

Note that from Helm 3 onwards the Tiller server component has been removed, so you don't need to install the client and server separately.

Ingress is an API object that allows access to your clustered services from the outside. It's like a reverse proxy, that can handle load balancing, TLS, virtual hosting and the like.

NGINX is the Ingress controller used for eShopOnContainers.

To install the NGINX Ingress controller, run the following command:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.1.1/deploy/static/provider/cloud/deploy.yaml

Alternatively, you can also install it using Helm. Refer to the Quick Start Guide for details.

Navigate to the folder deploy\k8s\nginx-ingress and run the following command:

kubectl apply -f .\local-cm.yaml

The above command sets the proxy-buffer size to 128k, which is required by the identity service.

Go to the deploy/k8s/helm folder in your local copy of the eShopOnContainers repo.

At this point you have two options for installing eShopOnContainers:

- Use the public images from DockerHub (eshop) with the tag linux-dev

- Or use your local images with the tag linux-latest

The easiest way to set up eShop on k8s is to use the public images. To do so, run the script below:

- For Windows:

.\deploy-all.ps1 -imageTag linux-dev -useLocalk8s $true

- For Mac:

.\deploy-all-mac.ps1 -imageTag linux-dev -useLocalk8s $true

If you have made local changes to the eShop application and want to deploy them to the cluster, you can do so by building your local Docker images.

- Go to the src directory of your local repo and run the command below:

docker-compose build

- Then go to the deploy\k8s\helm directory and run the command below to deploy your local images:

For Windows:

.\deploy-all.ps1 -imageTag linux-latest -useLocalk8s $true -imagePullPolicy Never

For Mac:

- Install PowerShell on Mac

- Run the script below:

.\deploy-all-mac.ps1 -imageTag linux-latest -useLocalk8s $true -imagePullPolicy Never

Setting the parameter useLocalk8s to $true forces the script to use localhost as the DNS for all Helm charts and also creates the ingress with the correct ingress class.

Note: When the parameter imagePullPolicy is set to Never, only local images will be used (pods will enter an error state if the local images do not exist). If imagePullPolicy is set to IfNotPresent, local images will be used if present, and if not Kubernetes will try to download them from a Docker registry. If imagePullPolicy is set to Always, Kubernetes will never use the local images and will try to download them all from a Docker registry. If the images are not found, pods will enter an error state.
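The three policies in the note above can be summarised as a small decision table. The sketch below only models the behaviour as described in this note. It does not call Kubernetes, and the function name image_source and its yes/no arguments are hypothetical.

```shell
# Prints where the image would come from: "local", "registry", or "error".
#   $1 = imagePullPolicy (Never, IfNotPresent, Always)
#   $2 = "yes" if the image exists locally
#   $3 = "yes" if the image exists in the registry
image_source() {
  policy=$1
  local_image=$2
  registry_image=$3
  case "$policy" in
    Never)
      # Only local images are considered.
      if [ "$local_image" = yes ]; then echo local; else echo error; fi ;;
    IfNotPresent)
      # Prefer the local image, fall back to the registry.
      if [ "$local_image" = yes ]; then echo local
      elif [ "$registry_image" = yes ]; then echo registry
      else echo error; fi ;;
    Always)
      # Local images are ignored, per the description above.
      if [ "$registry_image" = yes ]; then echo registry; else echo error; fi ;;
    *)
      echo "unknown policy: $policy" >&2
      return 1 ;;
  esac
}
```

For example, image_source Never no yes prints error, which matches the case where -imagePullPolicy Never is used without having run docker-compose build first.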

After running the deployment script you can check the deployment status with the command:

kubectl get deployment

After a while (could be ~5 min) you should get an output similar to this one:

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

eshop-apigwmm 1 1 1 1 2h

eshop-apigwms 1 1 1 1 2h

eshop-apigwwm 1 1 1 1 2h

eshop-apigwws 1 1 1 1 2h

eshop-basket-api 1 1 1 1 2h

eshop-basket-data 1 1 1 1 2h

eshop-catalog-api 1 1 1 1 2h

eshop-identity-api 1 1 1 1 2h

eshop-keystore-data 1 1 1 1 2h

eshop-locations-api 1 1 1 1 2h

eshop-marketing-api 1 1 1 1 2h

eshop-mobileshoppingagg 1 1 1 1 2h

eshop-nosql-data 1 1 1 1 2h

eshop-ordering-api 1 1 1 1 2h

eshop-ordering-backgroundtasks 1 1 1 1 2h

eshop-ordering-signalrhub 1 1 1 1 2h

eshop-payment-api 1 1 1 1 2h

eshop-rabbitmq 1 1 1 1 2h

eshop-sql-data 1 1 1 1 2h

eshop-webmvc 1 1 1 1 2h

eshop-webshoppingagg 1 1 1 1 2h

eshop-webspa 1 1 1 1 2h

eshop-webstatus 1 1 1 1 2h

To check the public services exposed, run:

kubectl get ing

You should get an output similar to this one:

NAME HOSTS ADDRESS PORTS AGE

eshop-apigwmm localhost localhost 80 2h

eshop-apigwms localhost localhost 80 2h

eshop-apigwwm localhost localhost 80 2h

eshop-apigwws localhost localhost 80 2h

eshop-identity-api localhost localhost 80 2h

eshop-webmvc localhost localhost 80 2h

eshop-webspa localhost localhost 80 2h

eshop-webstatus localhost localhost 80 2h

Note that the ingresses are bound to the DNS name localhost and the host is also "localhost". So you can access the WebSPA app at http://localhost and the MVC app at http://localhost/webmvc.
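Rather than reading the deployment listing shown earlier by eye, the check can be scripted. The following is a minimal sketch that filters kubectl get deployment output for deployments that are not yet fully available. It looks columns up by header name, and it assumes that newer kubectl versions print a READY column of the form ready/desired instead of DESIRED. The function name not_ready_deployments is hypothetical.

```shell
# Reads "kubectl get deployment" output on stdin and prints the name of every
# deployment whose AVAILABLE count is below its desired replica count.
not_ready_deployments() {
  awk '
    NR == 1 {
      # Map each header name to its column index.
      for (i = 1; i <= NF; i++) col[$i] = i
      next
    }
    NF > 0 {
      if ("DESIRED" in col) {
        desired = $(col["DESIRED"])
      } else {
        # Assumed newer layout: READY holds "ready/desired", e.g. "1/1".
        split($(col["READY"]), parts, "/")
        desired = parts[2]
      }
      if ($(col["AVAILABLE"]) + 0 < desired + 0) print $1
    }
  '
}

# Usage against a running cluster:
#   kubectl get deployment | not_ready_deployments
```

An empty result means every listed deployment reports as many available replicas as desired.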

As this is the Docker local k8s cluster, you can also see the containers running on your machine.

If you type the command:

docker ps

You should see them all (something similar to this):

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

fec1e3499416 a3f21ec4bd11 "/entrypoint.sh /ngi…" 9 minutes ago Up 9 minutes k8s_nginx-ingress-controller_nginx-ingress-controller-f88c75bc6-5xs2n_ingress-nginx_f1cc7094-e68f-11e8-b4b6-00155d016146_0

76485867f032 eshop/payment.api "dotnet Payment.API.…" 2 hours ago Up 2 hours k8s_payment-api_eshop-payment-api-75d5f9bdf6-6zx2v_default_4a3cdab4-e67f-11e8-b4b6-00155d016146_1

c2c4640ed610 eshop/marketing.api "dotnet Marketing.AP…" 2 hours ago Up 2 hours k8s_marketing-api_eshop-marketing-api-6b8c5989fd-jpxqv_default_45780626-e67f-11e8-b4b6-00155d016146_1

85301d538574 eshop/ordering.signalrhub "dotnet Ordering.Sig…" 2 hours ago Up 2 hours k8s_ordering-signalrhub_eshop-ordering-signalrhub-58cf5ff6-cnlm8_default_4932c344-e67f-11e8-b4b6-00155d016146_1

7a408a98000e eshop/ordering.backgroundtasks "dotnet Ordering.Bac…" 2 hours ago Up 2 hours k8s_ordering-backgroundtasks_eshop-ordering-backgroundtasks-cc8f6d4d8-ztfk7_default_47f9cf10-e67f-11e8-b4b6-00155d016146_1

12c64b3a13e0 eshop/basket.api "dotnet Basket.API.d…" 2 hours ago Up 2 hours k8s_basket-api_eshop-basket-api-658546684d-6hlvd_default_4262d022-e67f-11e8-b4b6-00155d016146_1

133fccfeeff3 eshop/webstatus "dotnet WebStatus.dll" 2 hours ago Up 2 hours k8s_webstatus_eshop-webstatus-7f46479dc4-bqnq7_default_4dc13eb2-e67f-11e8-b4b6-00155d016146_0

00c6e4c52135 eshop/webspa "dotnet WebSPA.dll" 2 hours ago Up 2 hours k8s_webspa_eshop-webspa-64cb8df9cb-dcbwg_default_4cd47376-e67f-11e8-b4b6-00155d016146_0

d4507f1f6b1a eshop/webshoppingagg "dotnet Web.Shopping…" 2 hours ago Up 2 hours k8s_webshoppingagg_eshop-webshoppingagg-cc94fc86-sxd2v_default_4be6cdb9-e67f-11e8-b4b6-00155d016146_0

9178e26703da eshop/webmvc "dotnet WebMVC.dll" 2 hours ago Up 2 hours k8s_webmvc_eshop-webmvc-985779684-4br5z_default_4addd4d6-e67f-11e8-b4b6-00155d016146_0

1088c281c710 eshop/ordering.api "dotnet Ordering.API…" 2 hours ago Up 2 hours k8s_ordering-api_eshop-ordering-api-fb8c548cb-k68x9_default_4740958a-e67f-11e8-b4b6-00155d016146_0

12424156d5c9 eshop/mobileshoppingagg "dotnet Mobile.Shopp…" 2 hours ago Up 2 hours k8s_mobileshoppingagg_eshop-mobileshoppingagg-b54645d7b-rlrgh_default_46c00017-e67f-11e8-b4b6-00155d016146_0

65463ffd437d eshop/locations.api "dotnet Locations.AP…" 2 hours ago Up 2 hours k8s_locations-api_eshop-locations-api-577fc94696-dfhq8_default_44929c4b-e67f-11e8-b4b6-00155d016146_0

5b3431873763 eshop/identity.api "dotnet Identity.API…" 2 hours ago Up 2 hours k8s_identity-api_eshop-identity-api-85d9b79f4-s5ks7_default_43d6eb7c-e67f-11e8-b4b6-00155d016146_0

7c8e77252459 eshop/catalog.api "dotnet Catalog.API.…" 2 hours ago Up 2 hours k8s_catalog-api_eshop-catalog-api-59fd444fb-ztvhz_default_4356705a-e67f-11e8-b4b6-00155d016146_0

94d95d0d3653 eshop/ocelotapigw "dotnet OcelotApiGw.…" 2 hours ago Up 2 hours k8s_apigwws_eshop-apigwws-65474b979d-n99jw_default_41395473-e67f-11e8-b4b6-00155d016146_0

bc4bbce71d5f eshop/ocelotapigw "dotnet OcelotApiGw.…" 2 hours ago Up 2 hours k8s_apigwwm_eshop-apigwwm-857c549dd8-8w5gv_default_4098d770-e67f-11e8-b4b6-00155d016146_0

840aabcceaa9 eshop/ocelotapigw "dotnet OcelotApiGw.…" 2 hours ago Up 2 hours k8s_apigwms_eshop-apigwms-5b94dfb54b-dnmr9_default_401fc611-e67f-11e8-b4b6-00155d016146_0

aabed7646f5b eshop/ocelotapigw "dotnet OcelotApiGw.…" 2 hours ago Up 2 hours k8s_apigwmm_eshop-apigwmm-85f96cbdb4-dhfwr_default_3ed7967a-e67f-11e8-b4b6-00155d016146_0

49c5700def5a f06a5773f01e "docker-entrypoint.s…" 2 hours ago Up 2 hours k8s_basket-data_eshop-basket-data-66fbc788cc-csnlw_default_3e0c45fe-e67f-11e8-b4b6-00155d016146_0

a5db4c521807 f06a5773f01e "docker-entrypoint.s…" 2 hours ago Up 2 hours k8s_keystore-data_eshop-keystore-data-5c9c85cb99-8k56s_default_3ce1a273-e67f-11e8-b4b6-00155d016146_0

aae88fd2d810 d69a5113ceae "docker-entrypoint.s…" 2 hours ago Up 2 hours k8s_rabbitmq_eshop-rabbitmq-6b68647bc4-gr565_default_3c37ee6a-e67f-11e8-b4b6-00155d016146_0

65d49ca9589d bbed8d0e01c1 "docker-entrypoint.s…" 2 hours ago Up 2 hours k8s_nosql-data_eshop-nosql-data-579c9d89f8-mtt95_default_3b9c1f89-e67f-11e8-b4b6-00155d016146_0

090e0dde2ec4 bbe2822dfe38 "/opt/mssql/bin/sqls…" 2 hours ago Up 2 hours k8s_sql-data_eshop-sql-data-5c4fdcccf4-bscdb_default_3afd29b8-e67f-11e8-b4b6-00155d016146_0

Login from the webmvc results in the following error: HttpRequestException: Response status code does not indicate success: 404 (Not Found).

The reason is that MVC needs to access the Identity Server from both outside the container (browser) and inside the container (C# code). Thus, the configuration always uses the external URL of the Identity Server, which in this case is just http://localhost/identity-api. But this external URL is incorrect when used from C# code, so Web MVC can't reach the Identity API. This is the only case where this issue happens (and it is the reason why we use 10.0.75.1 as the local address in Web MVC in local development mode).

Solving this requires some manual steps:

From the deploy/k8s folder run the following command:

kubectl apply -f .\nginx-ingress\local-dockerk8s\mvc-fix.yaml

This will create two additional ingresses (for MVC and Identity API) bound to any valid DNS name that points to your machine. This enables the use of the 10.0.75.1 IP.

Update the configmap of Web MVC by typing (line breaks are mandatory):

kubectl patch cm cfg-eshop-webmvc --type strategic --patch @'
data:
  urls__IdentityUrl: http://10.0.75.1/identity
  urls__mvc: http://10.0.75.1/webmvc
'@

Update the configmap of Identity API by typing (line breaks are mandatory):

kubectl patch cm cfg-eshop-identity-api --type strategic --patch @'
data:
  mvc_e: http://10.0.75.1/webmvc
'@

Restart the SQL Server pod to ensure the database is recreated:

kubectl delete pod --selector="app=sql-data"

Wait until the SQL Server pod is ready to accept connections and then restart all other pods:

kubectl delete pod --selector="app!=sql-data"

Note: Pods are deleted to ensure the databases are recreated, because the Identity API stores its client names and URLs in the database. If a client name doesn't get updated properly you may run into: Sorry, there was an error : unauthorized_client

Now you can access the MVC app using http://10.0.75.1/webmvc. All other services (like SPA) must be accessed using http://localhost.

A few environments have issues with the IP 10.0.75.1. This can happen for the following reasons:

- Firewall configuration - https://github.com/docker/for-win/issues/334#issuecomment-297030101

- The Docker subnet is using a different IP segment for communication.

- The host has a changing IP address.

In such scenarios, if either a Docker special DNS name or the host machine IP works, you can use it instead of 10.0.75.1, for example host.docker.internal, docker.for.win.localhost or 192.168.10.20. You will need to repeat the steps above with the new address:

- Update the configmap of Web MVC by typing:

kubectl patch cm cfg-eshop-webmvc --type strategic --patch @'
data:
  urls__IdentityUrl: http://<dns or ip>/identity
  urls__mvc: http://<dns or ip>/webmvc
'@

- Update the configmap of Identity API in the same way.

- Clean up and restart the SQL Server pod again to update the client information.

- After that, you should be able to browse the app using the URLs below.

- Identity Server : http://<dns or ip>/identity

- Web MVC : http://<dns or ip>/webmvc

- Web SPA : http://localhost/

- Web Status: http://localhost/webstatus
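Since the same patch has to be repeated for whichever DNS name or IP works in your environment, the patch bodies can be generated from a single host value. The sketch below is one possible way to do that in a POSIX shell (PowerShell users would keep using the here-string form shown above). The function names webmvc_patch and identity_patch are hypothetical, and the keys are the ones used in the patches above.

```shell
# Prints the strategic-merge patch body for the Web MVC configmap.
#   $1 = DNS name or IP that resolves to the host machine
webmvc_patch() {
  printf 'data:\n  urls__IdentityUrl: http://%s/identity\n  urls__mvc: http://%s/webmvc\n' "$1" "$1"
}

# Prints the strategic-merge patch body for the Identity API configmap.
identity_patch() {
  printf 'data:\n  mvc_e: http://%s/webmvc\n' "$1"
}

# Usage against a running cluster:
#   kubectl patch cm cfg-eshop-webmvc --type strategic --patch "$(webmvc_patch host.docker.internal)"
#   kubectl patch cm cfg-eshop-identity-api --type strategic --patch "$(identity_patch host.docker.internal)"
```

The pods still have to be restarted afterwards, SQL Server first, so that the Identity API client data is recreated with the new URLs.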

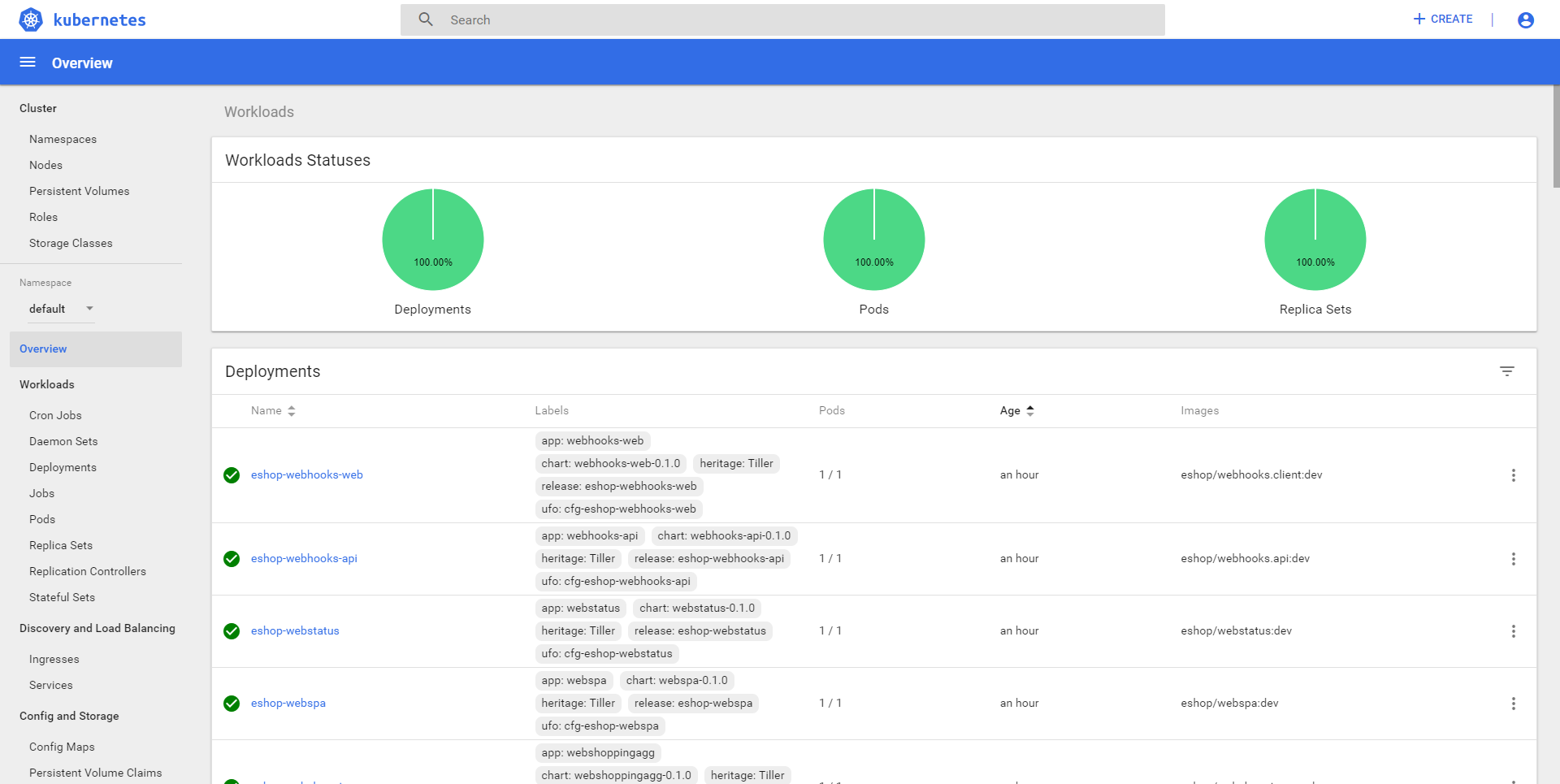

After a while, when all services are running OK, you should get to see something like this:

- WebStatus: http://localhost/webstatus

- WebMVC: http://10.0.75.1/webmvc

- WebSPA: http://10.0.75.1/webspa

To delete eShop related deployments you can use this command:

helm uninstall $(helm ls --filter eshop -q)

If you want to perform a dry run before the actual delete, use the command below:

helm uninstall $(helm ls --filter eshop -q) --dry-run

You can expose internal services by using NodePorts (among other options).

To expose the SQL Server service and the RabbitMQ admin port, when deploying to local Kubernetes, you can run the following commands, from the k8s folder:

kubectl apply -f .\nodeports\rabbitmq-admin.yaml

kubectl apply -f .\nodeports\sql-service.yaml

This will expose the following infrastructure services:

- SQL Server (connect with SSMS to tcp:localhost,31433 with User Id=sa;Password=Pass@word; and explore the databases):

  - Identity: Microsoft.eShopOnContainers.Service.IdentityDb

  - Catalog: Microsoft.eShopOnContainers.Services.CatalogDb

  - Marketing: Microsoft.eShopOnContainers.Services.MarketingDb

  - Ordering: Microsoft.eShopOnContainers.Services.OrderingDb

  - Webhooks: Microsoft.eShopOnContainers.Services.WebhooksDb

- RabbitMQ (Queue management): http://localhost:31672/ (login with username=guest, password=guest)

You can deploy Kubernetes Web UI (Dashboard) to monitor the cluster locally.

To enable the dashboard:

- Go to the k8s folder in your local copy of the eShopOnContainers repo.

- Deploy the dashboard with this command:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta6/aio/deploy/recommended.yaml

- Create a sample admin user and role binding by running the script:

kubectl apply -f dashboard-adminuser.yaml

- Start the dashboard by running this command:

kubectl proxy

- Get the bearer token to log in to the dashboard by running this command:

In PowerShell:

kubectl -n kubernetes-dashboard describe secret $(((kubectl -n kubernetes-dashboard get secret | Select-String 'admin-user') -split " ")[0])

Or in another shell:

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

You should get something like this:

Name:         admin-user-token-95nxr
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name=admin-user
              kubernetes.io/service-account.uid=aec979a2-7cb4-11e9-96aa-00155d013633

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1025 bytes
namespace:  11 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI...(800+ characters)...FkM_tAclj9o8T7ALdPZciaQ

- Copy the token and navigate to: http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/#/login

- Select "Token" and paste the copied token in the "Enter token" field:

You should see something like this:

From there you can explore all the components of your cluster.

IMPORTANT You have to manually start the dashboard and get a new login token every time you restart the cluster.
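Because a fresh token is needed after every cluster restart, it can be convenient to print only the token value instead of the whole secret description. The following is a minimal sketch for a POSIX shell. It assumes the describe output has a line starting with token: as in the sample above, and the function name extract_token is hypothetical.

```shell
# Reads "kubectl describe secret" output on stdin and prints only the token value.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Usage against a running cluster:
#   kubectl -n kubernetes-dashboard describe secret \
#     "$(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')" \
#     | extract_token
```

The printed value can be pasted directly into the "Enter token" field of the dashboard login page.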

To reset the Kubernetes cluster to the initial (new) state, you have to:

- Click the "Reset" tab on the Kubernetes settings dialog

- Click "Reset Kubernetes Cluster..."

- Click the "Reset" button on the "Reset Kubernetes cluster" confirmation dialog.

As shown in the next image:

If you ever want to stop Kubernetes from Docker Desktop, just disable Kubernetes in the Settings > Kubernetes page above and click "Apply & Restart".

- Kubernetes Web UI setup: https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/

- Kubernetes Dashboard: https://github.com/kubernetes/dashboard

- Kubernetes Port Forwarding: https://kubernetes.io/docs/tasks/access-application-cluster/port-forward-access-application-cluster/
