
Perf: use manual masking on state flags to avoid boxing #1197


LGTM.

I thought we were all in favor of … Also, can you link the test you used in this case to compare the difference?

In netfx, flags should always be tested by masking, because the compiler generates box instructions for HasFlag and the JIT wasn't able to understand the pattern well enough to remove them. Masking means that the enum is treated as its integer base type, and integers don't require boxing. Here's an example of simple use:

```csharp
using System;

public class C
{
    [Flags]
    public enum MyFlags
    {
        None = 0,
        Flag1 = 2,
        Flag2 = 4
    }

    public bool Masked(MyFlags flags)
    {
        return (flags & MyFlags.Flag1) == MyFlags.Flag1;
    }

    public bool HasFlag(MyFlags flags)
    {
        return flags.HasFlag(MyFlags.Flag1);
    }
}
```

If you look at the IL generated for the HasFlag case, you see box instructions, and those box instructions are the problem: each one is a short-lived heap allocation. On netfx the generated assembly for the mask and HasFlag approaches differs clearly, and masking is superior.

In netcore 2.1 (I think, certainly around that timeframe) the JIT team made Enum.HasFlag a JIT intrinsic, which means the JIT has a known pattern that it emits for that instruction sequence, and this allows it to simplify away the surrounding box instructions. So the assembly on netcore is considerably better.

That is the general case. Today, however, when profiling under netcore I can see that the HasFlag version is allocating, which means the JIT intrinsic is being placed in an instruction sequence where the boxes can't be eliminated. Changing to masking eliminates the boxes and produces the same good assembly as the masked case.

Reproducing the problem in a way that the JIT team can sensibly investigate would require running SqlClient on a custom-built checked runtime, so that the JIT debug flags for dumping compilation information are available. I'm capable of doing this in theory, but it's a daunting and time-consuming process, and even if it were fixed in .NET 7 that wouldn't improve perf on netcore 3.1 through net6. Masking will.

So the short answer is that HasFlag should work but doesn't, for a reason that is hard to track down. Masking reduces allocations, improving memory performance, and the cost is in readability. I think everyone working on this library is used to dealing with much more complex code patterns than masking vs HasFlag.

Are you sure you weren't running in Debug or something? I'm not seeing any allocations with HasFlag, at least not on .NET 6; see the benchmark code below (with MemoryDiagnoser). I mean, if the idea is to avoid HasFlag because you want to share code between netfx and netcore, and want netfx to be optimized, that's one thing (though, as I wrote previously, given the general perf you get from netfx, I doubt this would be significant). But if you really think you're seeing a problem with HasFlag on netcore, it's probably worth confirming exactly what's going on before making a decision based on that.

BenchmarkDotNet=v0.13.0, OS=ubuntu 21.04

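Benchmark code:

```csharp
using System;
using BenchmarkDotNet.Attributes;
using BenchmarkDotNet.Running;

BenchmarkRunner.Run<FlagBenchmarks>();

// Named FlagBenchmarks rather than Program so it cannot clash with the Program
// class that newer C# versions synthesize for top-level statements.
[MemoryDiagnoser]
public class FlagBenchmarks
{
    public MyFlags Flags { get; set; }

    [Benchmark]
    public bool HasFlag()
        => Flags.HasFlag(MyFlags.Flag1);

    [Benchmark]
    public bool Bitwise()
        => (Flags & MyFlags.Flag1) == MyFlags.Flag1;
}

[Flags]
public enum MyFlags
{
    None = 0,
    Flag1 = 2,
    Flag2 = 4
}
```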

Yes, I'm sure I'm using the release build. I know HasFlag is supposed to generate non-boxing code on netcore, but I can see that in this case it is generating boxing code. In every non-SqlClient case I've tried, it has generated non-boxing code. I've discussed it with some of the runtime team, and debugging it needs a clear reproduction, which is why I'd need a checked runtime. This method is portable between netfx and netcore and, despite being slightly less readable, it will perform well. I don't really understand why I'm getting so much pushback. If you don't trust my profiling results, do your own and see what you find.

@Wraith2 regardless of the specific discussion here, in some Npgsql internal discussions (with @NinoFloris and @vonzshik) we have seen cases where a profiler shows allocations that BDN does not; this may be due to tiered compilation somehow not working under the profiler (while definitely working under BDN). Bottom line: when in doubt, I'd advise confirming via BDN that the allocations really occur.

How do I use BDN to identify whether one specific allocation, among all the other required allocations, is taking place? If the profiler(s) aren't working, then that needs to be identified and reported; it's their job to get this stuff right. I'm profiling against a release build from the repo build script, consumed through the NuGet package, so there are no weird debug or Visual Studio things going on. It's an as-release environment.

You're right about that - that needs a close-to-minimal repro, where you can compare two pieces of code which are identical except for the thing you're trying to test (or a really minimal piece of code which should have zero allocations).

Agreed, but the first step for that is to make a reproducible code sample - which only you can do as you're the one running into it. The moment some sample program using SqlClient shows that particular allocation when profiled, that can be investigated. Note that I don't have a confirmed case of profiler malfunction, just some possible weirdness.
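One way to build the kind of close-to-minimal comparison suggested above, outside BDN, is to count the managed bytes allocated on the current thread around two otherwise identical calls. This is a sketch under stated assumptions: it needs .NET Core 3.0 or later (for `GC.GetAllocatedBytesForCurrentThread`), and `ProbeFlags`, `AllocationProbe`, `ViaHasFlag` and `ViaMask` are hypothetical names for illustration, not part of SqlClient.

```csharp
using System;
using System.Runtime.CompilerServices;

// Hypothetical enum mirroring the MyFlags example earlier in the thread.
[Flags]
public enum ProbeFlags
{
    None = 0,
    Flag1 = 2,
    Flag2 = 4,
}

public static class AllocationProbe
{
    // Returns the managed bytes allocated on the calling thread while `action`
    // runs `iterations` times. Requires .NET Core 3.0+.
    public static long MeasureAllocatedBytes(Action action, int iterations)
    {
        action(); // one warm-up call so first-call JIT work is not measured
        long before = GC.GetAllocatedBytesForCurrentThread();
        for (int i = 0; i < iterations; i++)
        {
            action();
        }
        return GC.GetAllocatedBytesForCurrentThread() - before;
    }

    // NoInlining keeps each check in its own method so the two are compiled independently.
    [MethodImpl(MethodImplOptions.NoInlining)]
    public static bool ViaHasFlag(ProbeFlags flags) => flags.HasFlag(ProbeFlags.Flag1);

    [MethodImpl(MethodImplOptions.NoInlining)]
    public static bool ViaMask(ProbeFlags flags) => (flags & ProbeFlags.Flag1) == ProbeFlags.Flag1;
}
```

Calling `AllocationProbe.MeasureAllocatedBytes(() => AllocationProbe.ViaHasFlag(ProbeFlags.Flag1), 1_000)` and the same for `ViaMask` gives two numbers to compare. Because tier0 and tier1 code can behave differently, as discussed in this thread, the numbers may change with the iteration count and runtime settings; setting the `DOTNET_TieredCompilation` environment variable to `0` disables tiering so that only fully optimized code is measured.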

@cmeyertons you reproduced a similar behaviour in #1054; is there any chance you could see if you can repro this one? It's a simple benchmark, pasted below, and I can see it boxing and unboxing.

```csharp
using System.Threading;
using System.Threading.Tasks;
using BenchmarkDotNet.Attributes;
using Microsoft.Data.SqlClient;

[MemoryDiagnoser]
public class NonQueryBenchmarks
{
    private string _connectionString = "Data Source=(local);Initial Catalog=Scratch;Trusted_Connection=true;Connect Timeout=1";

    private SqlConnection _connection;
    private SqlCommand _command;
    private CancellationTokenSource _cts;

    [GlobalSetup]
    public void GlobalSetup()
    {
        _connection = new SqlConnection(_connectionString);
        _connection.Open();
        _command = new SqlCommand("SELECT @@version", _connection);
        _cts = new CancellationTokenSource();
    }

    [GlobalCleanup]
    public void GlobalCleanup()
    {
        _command.Dispose();
        _connection.Dispose();
        _cts.Dispose();
    }

    [Benchmark]
    public async ValueTask<int> AsyncWithCancellation()
    {
        return await _command.ExecuteNonQueryAsync(_cts.Token);
    }
}
```

Issue opened on dotMemory: https://youtrack.jetbrains.com/issue/PROF-1157

That code is fairly self-explanatory and clearly doesn't box (no helper calls). Regardless of that, I think this update is portable between netfx and netcore and costs only a small amount of readability, so I'd still like to proceed.

A response from JetBrains, plus careful investigation of the allocations in specific time windows of the traces, shows that tiering does eventually produce optimized non-boxing code, but that it starts off with tier0 code that boxes. We can just make it non-boxing from the start and do the same on netfx. So masking is still the optimal choice in this situation.

CI is green, no conflicts. Anyone?

1 file, 7 lines changed, CI is green; what is blocking this, please?

I'm guessing uncertainty. (Preface: I'm just here trying to mediate. I don't know whether we should merge this or not, but I do appreciate the contribution.)

From what I understand, this reverses a previous perf/readability change, and there is uncertainty around the scope of the perf problem. HasFlag is the forward-looking and recommended pattern for .NET and is much easier to read than masking. If it's incorrectly boxing in some scenarios, it will eventually be fixed not to box, right? Perhaps even in an update? So at some point in the future we would want to reverse this reversal?

I can see that in your run and in the scope you are showing, there appears to be a significant difference in allocations. However, what is the overall perf improvement in an end-to-end use case? Are we arguing over tens of thousands of allocations among millions? 10,000 rows/sec versus 10,001 in some hypothetical scenario?

I'm honestly not sure which way to argue on this PR. I know we try really hard to squeeze out perf where we can, but given the discussion, it doesn't sound like everyone is convinced.

Regards,

|

These flags are checked multiple times per query and on each field access, because they contain various pieces of important state. They are packed together instead of being individual boolean fields for two reasons: 1) it makes the state object smaller, and 2) they are copied as a group into async snapshots, and the save/restore time is noticeable (but not dominant) in profiles. Every field access needs to check whether the reader has advanced to the metadata, and with HasFlag that check boxes an enum value on every call to reader.Get* or reader.GetFieldValue<T>, so the more you read, the more garbage is generated. They're gen0 allocations and very short-lived, so they're cleaned up quickly; however, the optimal case for garbage collection is for it to never happen. The more time spent in GC, the less time is available for user work.

On netfx, up to and including the current and last version, using Enum.HasFlag will box. On netcore 3.1 and later, where tiered compilation is available and enabled, the tier0 code (quickly produced, relatively unoptimized) generated by the JIT boxes in the same way as netfx. The tier1 code is produced once a method's execution count hits a specific threshold, and the tier1 compilation includes an optimization that recognises the HasFlag pattern and removes the box. Manual masking generates optimal code even at tier0 on netcore, and box-free code on netfx.

I would prefer to use HasFlag if readability were the only concern. I acknowledge that the manual masking version is slightly less readable, but I feel that this slight drawback for the small number of people who work on this code is heavily outweighed by the efficiency that all users of this library gain. It's a case of fewer than 10 people taking a few extra seconds to think through the masking, versus many thousands of people spending less electricity to query their data.
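The layout described here (several booleans packed into one flags field, read on every field access and copied as a unit into async snapshots) can be sketched roughly as follows. `ReaderStateFlags`, `ReaderState`, `Save` and `Restore` are hypothetical names chosen for illustration; SqlClient's real state type and flag names are not shown in this thread.

```csharp
using System;

// Hypothetical stand-in for the reader's packed state; real SqlClient names differ.
[Flags]
public enum ReaderStateFlags : byte
{
    None = 0,
    MetaDataConsumed = 1 << 0,
    HasRows = 1 << 1,
    IsClosed = 1 << 2,
}

public sealed class ReaderState
{
    // All booleans live in one byte instead of separate bool fields.
    private ReaderStateFlags _flags;

    // A check like this runs on every field access in the scenario described above.
    // Masking compiles to plain integer ops, so it never boxes, even in tier0 code.
    public bool MetaDataConsumed
    {
        get => (_flags & ReaderStateFlags.MetaDataConsumed) != 0;
        set => SetFlag(ReaderStateFlags.MetaDataConsumed, value);
    }

    public bool HasRows
    {
        get => (_flags & ReaderStateFlags.HasRows) != 0;
        set => SetFlag(ReaderStateFlags.HasRows, value);
    }

    // Taking or restoring an async snapshot copies every flag in a single assignment.
    public ReaderStateFlags Save() => _flags;

    public void Restore(ReaderStateFlags snapshot) => _flags = snapshot;

    // Sets or clears one bit without touching the others.
    private void SetFlag(ReaderStateFlags flag, bool value)
        => _flags = value ? _flags | flag : _flags & ~flag;
}
```

Because the snapshot is a single byte-sized value, saving and restoring all the booleans is one copy rather than one copy per field, which is the second reason given above for packing them.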

This .NET Core angle doesn't make sense to me. Tiered compilation is a completely standard feature of JITing, and trying to micro-optimize something which only occurs in tier0 is, well, odd. The whole point of tiered compilation is that the slow code gets optimized automatically by the JIT after a few iterations, so any perf gains are completely negligible and don't justify worse readability...

For .NET Framework, if you believe reducing these gen0 allocations justifies worse readability, then this may make sense. But I'd argue that .NET Framework is already inefficient in so many ways (compared to .NET Core) that this is again unlikely to matter. Anyone who cares even a little about perf should already be on .NET Core for a thousand reasons which are more important than this allocation.

On netfx we still use individual boolean fields with internal access and touch them directly. The change to add the properties and implement them using flags was made in netcore only, because at the time that code was part of corefx. netfx currently doesn't incur the cost of boxing here, and it will see a reduction in performance when the two are merged to use the common codebase. In general I don't put a lot of effort into netfx perf improvements, for exactly the reason you stated: there are bigger problems. But rejecting an approach that is superior on all targets, to varying degrees, just because it benefits netfx only slightly is punitive and discriminatory. There are people who use, and will continue to use, netfx, and if we can make their code more efficient with minor effort on our part, we should.

You can define a private … FWIW, …

@jkotas Not to digress too much from the main topic, but I'm curious why the …

I assume that you mean a C# compiler (Roslyn) intrinsic. Roslyn has very minimal understanding of base class library behaviors. It does not expand any methods as intrinsics, with very few exceptions for methods on core types, e.g. …

Ah, yes, that's what I meant. Got it, thanks! 🙂

Updated to use an accessor function.
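A small accessor of this kind can keep call sites almost as readable as `HasFlag` while compiling to plain integer operations. The helper below is a hypothetical sketch (`StateFlags`, `StateFlagsAccessors`, `IsSet` and `IsAnySet` are illustrative names, not the code from this PR). One detail worth keeping in mind when replacing `HasFlag`: `(value & flag) == flag` matches `Enum.HasFlag` semantics (every bit of `flag` must be set, and a zero `flag` always yields `true`), whereas `(value & flag) != 0` tests whether any bit is set; the two only differ for multi-bit or zero flags.

```csharp
using System;

// Hypothetical flags enum used only for this illustration.
[Flags]
public enum StateFlags
{
    None = 0,
    A = 1,
    B = 2,
    AB = A | B,
}

public static class StateFlagsAccessors
{
    // Same result as value.HasFlag(flag): true only if every bit in `flag` is set
    // (so always true for StateFlags.None). Plain integer ops, so no boxing.
    public static bool IsSet(StateFlags value, StateFlags flag) => (value & flag) == flag;

    // Different semantics: true if any bit in `flag` is set (false for StateFlags.None).
    public static bool IsAnySet(StateFlags value, StateFlags flag) => (value & flag) != 0;
}
```

For single-bit flags, such as the state bits discussed in this PR, either form gives the same answer; the `== flag` form is the safer drop-in replacement when a call site might pass a combined value.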

Yay!

While profiling something unrelated, I noticed that the highest allocation was the boxing of an enum that was being used with HasFlag. This is netcore, so it should be using the optimized code path, but as noted in #1054 this isn't happening. This PR changes from using HasFlag to manual masking.

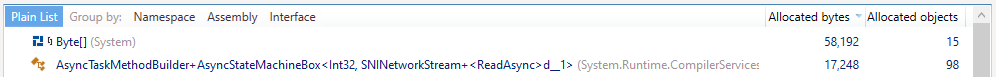

before:

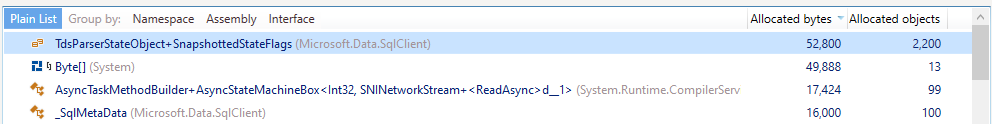

after: