…170974)

## Summary

Closes elastic#169825

This PR adds logic to Fleet's `/api/agents/available_versions` endpoint that will ensure we periodically try to fetch from the live product versions API at https://www.elastic.co/api/product_versions to make sure we have eventual consistency in the list of available agent versions.

Currently, Kibana relies entirely on a static file generated at build time from the above API. If the API isn't up to date with the latest Agent version when that file is generated (e.g. Kibana completed its build before Agent), then that build of Kibana will never "see" the corresponding build of Agent.

The endpoint's response is cached for two hours, both to avoid overfetching from the external API and to avoid repeatedly reading the agent versions file from disk.
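For reviewers, the caching behaviour described above can be sketched roughly as follows. This is a minimal illustration, not the code in `versions.ts`: `createVersionsCache`, `readStaticVersions`, `fetchLiveVersions` and the injected `now` clock are hypothetical names, and merging the static and live lists is an assumption about how the two sources are combined.

```typescript
// Hypothetical sketch: a two-hour cache in front of a static list plus a live API.
type VersionSource = () => Promise<string[]>;

const CACHE_TTL_MS = 2 * 60 * 60 * 1000; // two hours

function createVersionsCache(
  readStaticVersions: VersionSource, // assumed: reads the build-time versions file
  fetchLiveVersions: VersionSource, // assumed: calls the product versions API
  now: () => number = () => Date.now()
) {
  let cached: string[] | undefined;
  let cachedAt = 0;

  return async function getAvailableVersions(): Promise<string[]> {
    // Serve from memory while the entry is younger than the TTL.
    if (cached !== undefined && now() - cachedAt < CACHE_TTL_MS) {
      return cached;
    }

    const staticVersions = await readStaticVersions();
    let liveVersions: string[] = [];
    try {
      liveVersions = await fetchLiveVersions();
    } catch {
      // The live API is best-effort; fall back to the static list alone.
    }

    // Merge both sources and drop duplicates, keeping first-seen order.
    const merged = [...staticVersions, ...liveVersions];
    cached = merged.filter((version, index) => merged.indexOf(version) === index);
    cachedAt = now();
    return cached;
  };
}
```

With this shape, a failed live fetch still populates the cache, so an unreachable API is retried at most once per TTL window rather than on every request.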

## To do

- [x] Update unit tests

- [x] Consider airgapped environments

## On airgapped environments

In airgapped environments, we're going to try to fetch from the `product_versions` API and that request is going to fail. What we've seen happen in some environments is that these requests do not "fail fast" and instead wait until a network timeout is reached.
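One possible way to bound the wait, independent of any airgap detection, is to race the request against a timer. The sketch below is only an illustration: `withTimeout` is a hypothetical helper, not something this PR adds, and note that `Promise.race` stops waiting on the request but does not cancel it.

```typescript
// Hypothetical helper: reject if `work` has not settled within `ms` milliseconds.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;

  const timeout = new Promise<never>((_resolve, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
  });

  // Whichever promise settles first wins; the timer is always cleaned up.
  return Promise.race([work, timeout]).finally(() => {
    if (timer !== undefined) {
      clearTimeout(timer);
    }
  });
}
```

A caller could wrap the live fetch in `withTimeout(...)` and treat the rejection the same way as any other fetch failure.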

I'd love to avoid that timeout case by detecting airgapped environments and not calling this API at all. However, we don't have a great deterministic way to know whether someone is in an airgapped environment. The best guess I think we can make is to check whether `xpack.fleet.registryUrl` is set to something other than `https://epr.elastic.co`. Curious if anyone has thoughts on this.
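To make the proposed heuristic concrete, here is a rough sketch. `looksAirgapped` is a hypothetical name, and treating an unset value as "not airgapped" and ignoring case and trailing slashes are assumptions made for illustration, not settled behaviour.

```typescript
// Hypothetical heuristic: a custom registry URL suggests an airgapped deployment.
const DEFAULT_REGISTRY_URL = "https://epr.elastic.co";

function looksAirgapped(registryUrl: string | undefined): boolean {
  // Assumption: an unset or empty value means the default registry is in use.
  if (registryUrl === undefined || registryUrl.trim() === "") {
    return false;
  }

  // Compare without regard to case or trailing slashes.
  const normalize = (url: string): string =>
    url.trim().replace(/\/+$/, "").toLowerCase();

  return normalize(registryUrl) !== normalize(DEFAULT_REGISTRY_URL);
}
```

This is only a guess at the environment: a custom registry does not strictly imply that elastic.co is unreachable, so a false positive would mean skipping a fetch that could have succeeded.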

## Screenshots

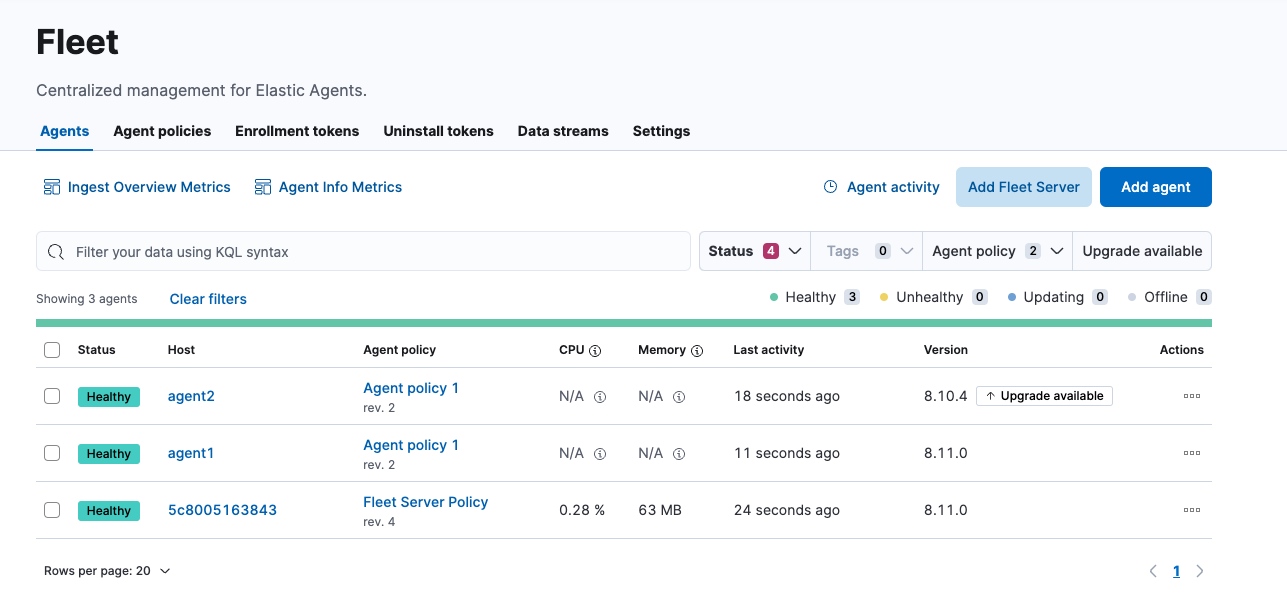

## To test

1. Set up Fleet Server + ES + Kibana

2. Spin up a Fleet Server running Agent v8.11.0

3. Enroll an agent running v8.10.4 (I used multipass)

4. Verify the agent can be upgraded from the UI

---------

Co-authored-by: Kibana Machine <42973632+kibanamachine@users.noreply.github.com>

(cherry picked from commit cd909f0)

# Conflicts:

# x-pack/plugins/fleet/server/services/agents/versions.ts