-

Notifications

You must be signed in to change notification settings - Fork 393

Home

Welcome to the SegMap wiki! This is where you find information about SegMap and its codebase.

To run the demonstrations, please consult the following wiki page.

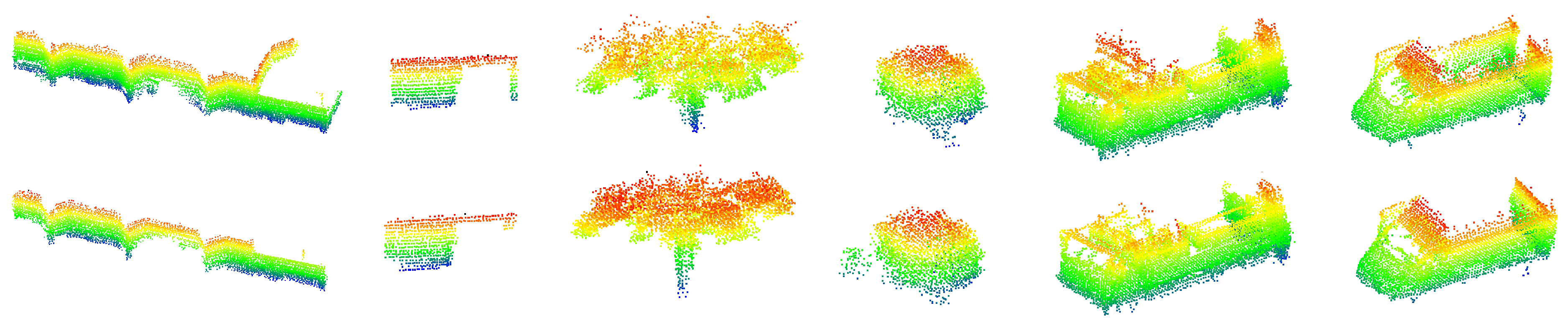

SegMap is a technique for providing autonomous vehicles with the potential to recognize previously visited areas based on the extraction and matching of 3D segments from laser point clouds. Examples of segments can be seen in the image below.

This important capability of autonomous vehicles to localize within their environment is used for constructing accurate 3D maps and for performing different tasks such as driving to goals or exploring areas of interest. These capabilities are crucial for a number of applications including autonomous driving and search and rescue operations.

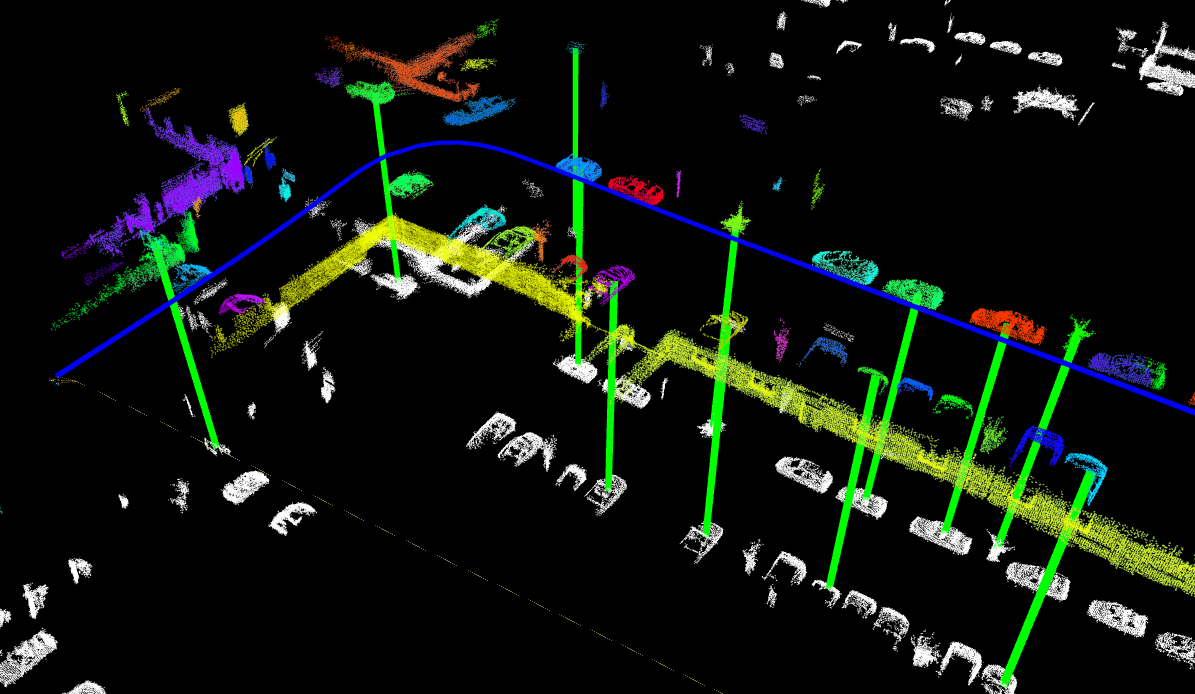

The technique is based on extracting segments (eg. parts of vehicles, trees and buildings) in the vicinity of the vehicle and matching these segments with the ones extracted from a target map. Segment matches can be directly translated into precise localization information, which enables accurate 3D map construction and localization. Examples of matched segments, between previously recorded segments (white) and recently observed segments (coloured), are shown with green lines in the image below.

The approach relies on segments which are not necessarily restricted to semantic objects, as this allows for a more general representation and enables functionality in a wider range of different environments. Some more detailed examples of matched segments are shown in the following image.

To summarize, this SegMap repository offers the following capabilities:

- efficient point-cloud segmentation into informative clusters

- extraction of meaningful segment descriptors

- efficient segment retrieval using nearest neighbour search

- robust segment matching with a random forest classifier

Resulting in:

- global localization (loop-closure) in 3D point clouds

- real-time performance

- A ROS compatible code-base for mapping and localization using lidar point clouds

The SegMap approach is illustrated in the above figure, and further demonstrated in the video below.

The following paragraphs will provide a few more details about the underlying algorithms used. A more complete description and evaluation of the approach can be found in here.

To begin, incoming point clouds are clustered into a set of segments. In practice, this can be done in a number of different ways. In the current implementation, segmentation starts by removing the ground plane, applying a voxel grid to the point cloud, and filtering out noise. Segments are then formed by Euclidean clustering.

Once the point cloud has been segmented, descriptors are extracted for each segment. This feature extraction step is used for compressing the raw data into compact descriptors suitable for recognition and classification. In the current implementation, both eigenvalue-based features and ensemble of shape features are offered.

Given query segments, the next goal is to identify any matches to previously mapped segments. This is achieved by first retrieving relevant segments using a kd-tree search in the feature space, and then classifying each retrieved segment as matching or not. As it is often difficult to select the appropriate distance metric and thresholds, a random forest is trained and used to classify whether segments match or not. Once matching segments are identified by the classifier, these are verified using a geometric consistency check between the corresponding sets of segment centroids. If the scene is geometrically consistent, a 6DOF pose is returned, providing localization within the map.

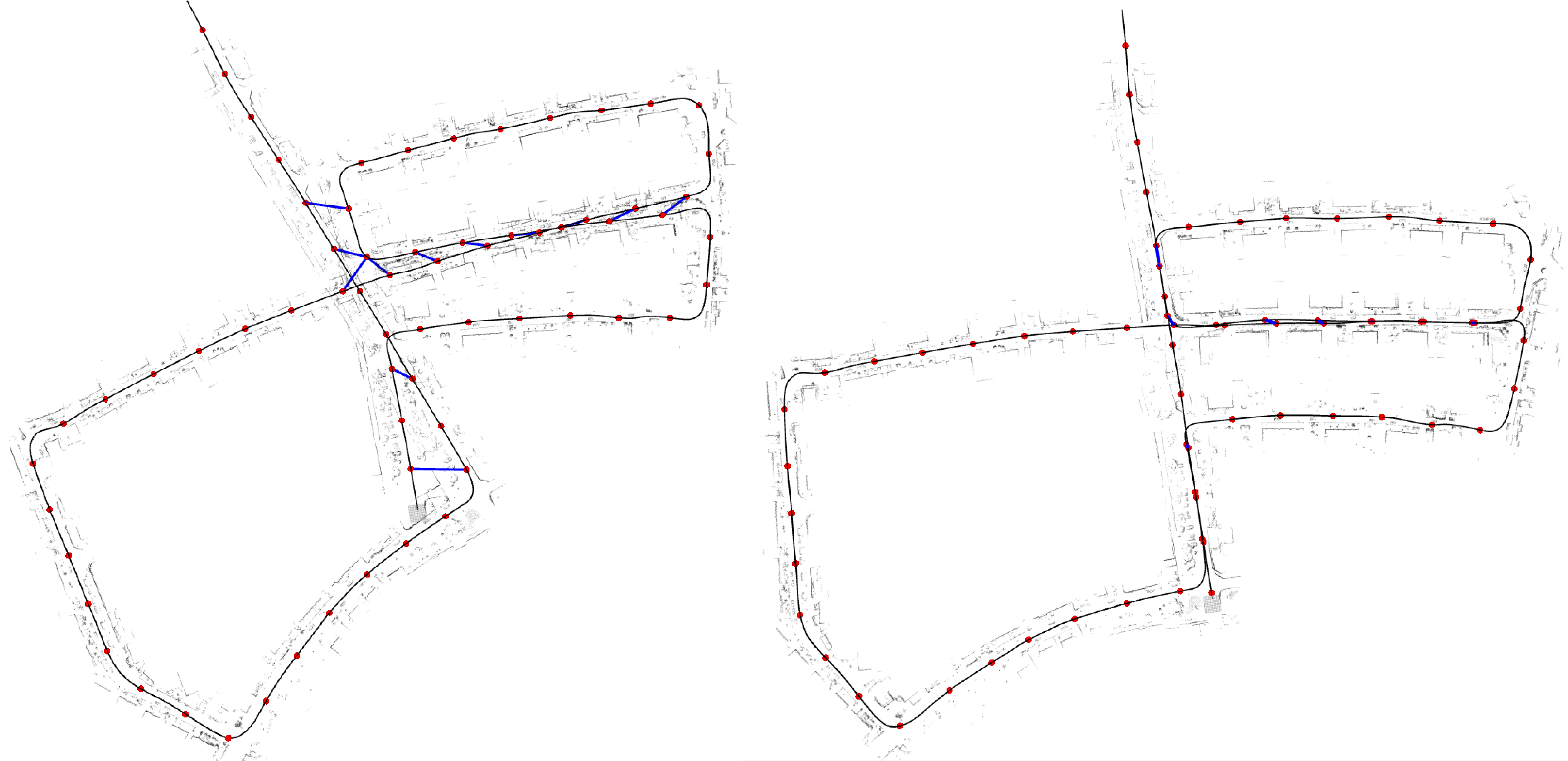

In the case where a prior map is not provided, SegMap can also be used to identify loop-closures and correct for drift in the estimation. This can be done in an online (real-time), or offline stage. In addition, because SegMap performs a global search, loop-closure detection is still possible even in the case of large drift. An example of a map produced from the KITTI 05 sequence is shown below. The estimated trajectory is shown on the left, with detected loop-closures indicated by blue lines; and the results after feeding the loop-closures to pose-graph optimization are shown on the right.