Failed to run benchmark scripts in Android #291

Comments

|

Hi @liute62, thanks for using FAI-PEP! To answer your questions: (1) I haven't encountered this problem with failed errors about this. One more thing: I think, from the wiki, the command you want to use is |

|

@liute62, can you please share the entire log somewhere? To speed up the build, you can try an incremental build by specifying --platforms android/interactive. Another way to speed up the build is to run it on a more powerful host with more cores, since the number of parallel threads is capped at the number of cores in the system. |
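The capping rule mentioned above can be illustrated with a few lines of Python. This is only a sketch of the idea, not FAI-PEP's actual build code: `effective_jobs` is a hypothetical helper name, and the clamp to a minimum of one job is an assumption.

```python
import os


def effective_jobs(requested, cores=None):
    """Return how many parallel build jobs would actually run.

    Hypothetical helper: the requested job count is capped at the number
    of cores on the host, and never drops below one.
    """
    if cores is None:
        # os.cpu_count() may return None on some platforms; fall back to 1.
        cores = os.cpu_count() or 1
    return max(1, min(requested, cores))


if __name__ == "__main__":
    # Asking for more jobs than the host has cores brings no extra parallelism.
    print(effective_jobs(64))
```

Under this rule, asking for more threads on the same machine does not help; only a host with more cores raises the ceiling.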

|

Hi @hl475 and @sf-wind But failed in:

thanks! |

|

For

|

|

For 3, no, we don't measure memory consumption. It is not difficult to add, though. It requires instrumenting the framework code to measure it, since PEP just runs on the host system. We use a separate mechanism internally to get the memory consumption. @hl475, why do we have a default remote reporter specified in config.txt? Was it added by @ZhizhenQin for the remote lab? In that case, it only affects the lab, not the client. |

|

haha.. I added [2]. I feel the right behavior is to move it to FAI-PEP/benchmarking/run_bench.py Line 54 in b674c39

|

|

Sure, will do. |

Summary: facebook#291 (comment) Differential Revision: D15390774 fbshipit-source-id: a38e7ad6a4ba8b13fecd563bc52af5dc2c93838c

Summary: Pull Request resolved: facebook#293 facebook#291 (comment) Differential Revision: D15390774 fbshipit-source-id: 1afa823a71ee44d204f9ac1a2eee879ab87034ab

Summary: Pull Request resolved: facebook#293 facebook#291 (comment) Differential Revision: D15390774 fbshipit-source-id: 3412bf7422bf35e9019b59fe1a218dcb1621742e

Summary: Pull Request resolved: #293 #291 (comment) Reviewed By: sf-wind Differential Revision: D15390774 fbshipit-source-id: 9e2e6c87d526a9949feae4a61faa86b15c42a9d3

|

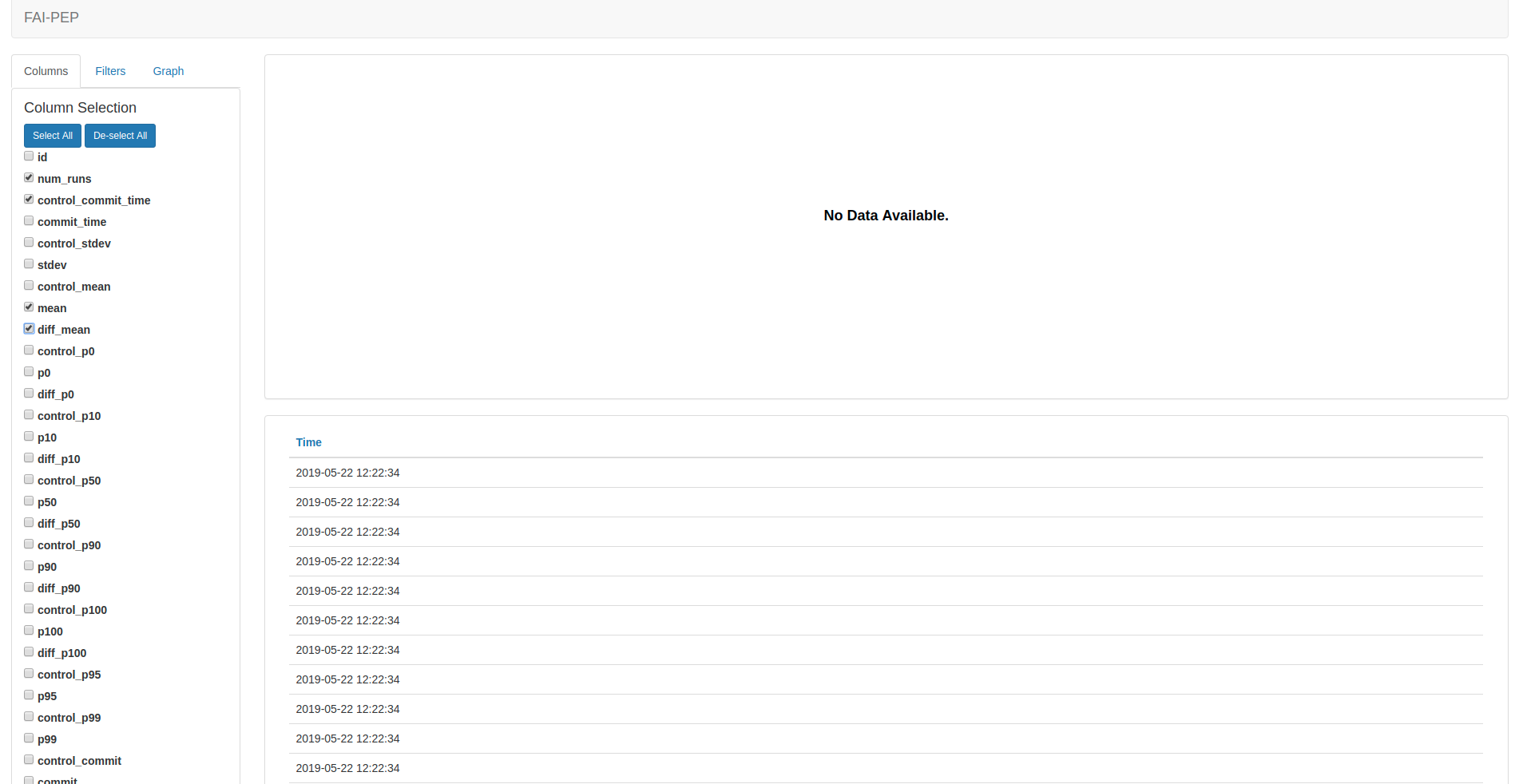

Hi @hl475 and @sf-wind, I just set up the server and found that you guys have already solved the HTTP connection errors, nice job! Btw, after I enter the data visualization platform, I see this screen: My questions are here:

benchmarking/run_bench.py -b specifications/models/caffe2/shufflenet/shufflenet.json I got numerous outputs with the same timestamp in the time table on the website, like: Is this the normal behavior of the output and the data visualization platform? Thanks! |

|

|

|

That is strange. Do you use the remote lab flow by following https://github.com/facebook/FAI-PEP/tree/master/ailab? I thought that if you select some fields to display, the fields should appear in the table section. Can you post some screenshots? |

|

I didn't set up nginx and uwsgi, but I guess it is still enough to run the system locally with Django.

Step 1: (venv) (base) new@tower2:~/Documents/git/FAI-PEP/ailab$ python manage.py runserver
Django version 2.2.1, using settings 'ailab.settings'

Step 2: INFO 15:10:02 lab_driver.py: 129: Running <class 'run_lab.RunLab'> with raw_args ['--app_id', None, '--token', None, '--root_model_dir', '/home/new/.aibench/git/root_model_dir', '--logger_level', 'info', '--benchmark_table', 'benchmark_benchmarkinfo', '--cache_config', '/home/new/.aibench/git/cache_config.txt', '--commit', 'master', '--commit_file', '/home/new/.aibench/git/processed_commit', '--exec_dir', '/home/new/.aibench/git/exec', '--file_storage', 'django', '--framework', 'caffe2', '--local_reporter', '/home/new/.aibench/git/reporter', '--model_cache', '/home/new/.aibench/git/model_cache', '--platform', 'android', '--remote_reporter', 'http://127.0.0.1:8000/benchmark/store-result|oss', '--remote_repository', 'origin', '--repo', 'git', '--repo_dir', '/home/new/Documents/git/pytorch', '--result_db', 'django', '--screen_reporter', '', '--server_addr', 'http://127.0.0.1:8000', '--status_file', '/home/new/.aibench/git/status', '--timeout', '300', '--claimer_id', '1']

Step 3: |

|

did you submit any test? |

After I launched the Django server, I just ran: benchmarking/run_bench.py -b specifications/models/caffe2/shufflenet/shufflenet.json I also noticed that the Django model benchmark result has been saved multiple times. So what is the test here? Do I need extra steps? |

|

Hi @liute62, how do you submit your job remotely? are you using as said from here? In particular, this as said from here |

|

@hl475 @sf-wind Thanks for your clarification. I'm writing down the details below as an example to accompany the tutorial, for others' reference. Note that I didn't set up nginx and uwsgi.

1) Terminal 1: (venv) (base) new@tower2:~/Documents/git/FAI-PEP/ailab$ python manage.py runserver
Django version 2.2.1, using settings 'ailab.settings'

2) Terminal 2: (venv) (base) new@tower2:~/Documents/git/FAI-PEP/benchmarking$ python run_bench.py --lab --claimer_id 1 --server_addr http://127.0.0.1:8000 --remote_reporter "http://127.0.0.1:8000/benchmark/store-result|oss" --platform android
INFO 14:49:09 lab_driver.py: 129: Running <class 'run_lab.RunLab'> with raw_args ['--app_id', None, '--token', None, '--root_model_dir', '/home/new/.aibench/git/root_model_dir', '--logger_level', 'info', '--benchmark_table', 'benchmark_benchmarkinfo', '--cache_config', '/home/new/.aibench/git/cache_config.txt', '--commit', 'master', '--commit_file', '/home/new/.aibench/git/processed_commit', '--exec_dir', '/home/new/.aibench/git/exec', '--file_storage', 'django', '--framework', 'caffe2', '--local_reporter', '/home/new/.aibench/git/reporter', '--model_cache', '/home/new/.aibench/git/model_cache', '--remote_repository', 'origin', '--repo', 'git', '--repo_dir', '/home/new/Documents/git/pytorch', '--result_db', 'django', '--screen_reporter', '', '--status_file', '/home/new/.aibench/git/status', '--timeout', '300', '--claimer_id', '1', '--server_addr', 'http://127.0.0.1:8000', '--remote_reporter', 'http://127.0.0.1:8000/benchmark/store-result|oss', '--platform', 'android']

3) Terminal 3: (venv) (base) new@tower2:~/Documents/git/FAI-PEP/benchmarking$ python run_bench.py -b ../specifications/models/caffe2/shufflenet/shufflenet.json --remote --devices PBDM00-8.1.0-27 --server_addr http://127.0.0.1:8000
INFO 14:51:50 build_program.py: 41: + cp /home/new/Documents/git/pytorch/build_android/bin/caffe2_benchmark /tmp/tmpmvdlhx_n/program

4) Visualization: http://127.0.0.1:8000/benchmark/visualize doesn't show anything, but if you just type in the Result URL, you get:

5) More
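For readers who want to script the three-terminal flow above, here is a minimal Python sketch that only assembles the command lines quoted in this comment. The function names (`server_cmd`, `lab_cmd`, `submit_cmd`) are hypothetical and not part of FAI-PEP; the flags, the server address, and the device name are copied from the post and will differ on other setups. The sketch prints the commands rather than running them.

```python
import shlex

# Address of the local Django server, as used in the post above.
SERVER = "http://127.0.0.1:8000"


def server_cmd():
    """Terminal 1: start the Django development server (run from ailab/)."""
    return ["python", "manage.py", "runserver"]


def lab_cmd(server=SERVER, claimer_id="1", platform="android"):
    """Terminal 2: start the lab that claims and runs jobs (run from benchmarking/)."""
    return [
        "python", "run_bench.py", "--lab",
        "--claimer_id", claimer_id,
        "--server_addr", server,
        "--remote_reporter", f"{server}/benchmark/store-result|oss",
        "--platform", platform,
    ]


def submit_cmd(benchmark, device, server=SERVER):
    """Terminal 3: submit a benchmark to the lab (run from benchmarking/)."""
    return [
        "python", "run_bench.py",
        "-b", benchmark,
        "--remote",
        "--devices", device,
        "--server_addr", server,
    ]


if __name__ == "__main__":
    commands = (
        server_cmd(),
        lab_cmd(),
        submit_cmd(
            "../specifications/models/caffe2/shufflenet/shufflenet.json",
            "PBDM00-8.1.0-27",
        ),
    )
    for cmd in commands:
        # shlex.join quotes the "|" in the reporter URL so the shell
        # does not treat it as a pipe.
        print(shlex.join(cmd))
```

Keeping each command as an argument list avoids the quoting problem with the `|` in the `--remote_reporter` value; the same lists could be passed to `subprocess.Popen` if the processes should be started from Python.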

|

|

Right, you need to click the result URL to see the result. It just has some filters preset. In http://127.0.0.1:8000/benchmark/visualize, you can set the same filters to see the values. For the operator latency, you can adjust the filters to remove the NET entry; then you will get the operator latency plot in a clearer format. |
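The same filtering idea can be applied to exported result rows outside the web UI. The sketch below is purely illustrative: the row layout, the `type` field name, and the sample values are assumptions made up for this example, not the actual schema or results stored by FAI-PEP.

```python
def drop_net_entries(rows, key="type", net_value="NET"):
    """Return only the rows whose `key` field is not the whole-net entry.

    Both the field name and the "NET" marker location are assumptions;
    adjust them to whatever the exported data actually uses.
    """
    return [row for row in rows if row.get(key) != net_value]


if __name__ == "__main__":
    # Made-up sample rows, for illustration only.
    sample = [
        {"type": "NET", "metric": "latency", "value": 100.0},
        {"type": "OPERATOR", "metric": "latency", "value": 3.0},
        {"type": "OPERATOR", "metric": "latency", "value": 7.5},
    ]
    for row in drop_net_entries(sample):
        print(row)
```

Removing the whole-net row matters because its latency is much larger than any single operator's, which would otherwise compress the per-operator values in a plot.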

Hi there,

I'm new here. Following the tutorial, I typed:

benchmarking/run_bench.py -b specifications/models/caffe2/shufflenet/shufflenet.json --platforms android

After a long compilation, all of the compile and link tasks finished in the build_android folder in my pytorch repo. But it threw an error:

cmake unknown rule to install xxxx

It looks like the caffe2_benchmark executable was generated but failed to be copied to the install folder, so I manually copied it to the folder, namely:

/home/new/.aibench/git/exec/caffe2/android/2019/4/5/fefa6d305ea3e820afe64cec015d2f6746d9ca88
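The manual copy described here can be sketched in Python. This is a minimal sketch, not FAI-PEP code: `copy_benchmark_binary` is a hypothetical helper, and keeping the source file name inside the destination folder is an assumption, since the post does not say what name the file should have there.

```python
import shutil
from pathlib import Path


def copy_benchmark_binary(built_binary, exec_dir):
    """Copy a built benchmark executable into an exec folder.

    Hypothetical helper. The file keeps its original name in the
    destination folder, which is an assumption about the expected layout.
    """
    src = Path(built_binary)
    if not src.is_file():
        raise FileNotFoundError(f"built binary not found: {src}")
    dest_dir = Path(exec_dir)
    # Create the dated/hashed folder hierarchy if it does not exist yet.
    dest_dir.mkdir(parents=True, exist_ok=True)
    # copy2 also preserves the permission bits, so the file stays executable.
    return Path(shutil.copy2(src, dest_dir / src.name))
```

With the paths from this thread, the call would take the caffe2_benchmark file under the pytorch build_android/bin folder as `built_binary` and the exec folder quoted above as `exec_dir`.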

Then I modified repo_driver.py to avoid compiling again and ran the function _runBenchmarkSuites.

But it failed:

In file included from ../third_party/zstd/lib/common/pool.h:20:0,
                 from ../third_party/zstd/lib/common/pool.c:14:
../third_party/zstd/lib/common/zstd_internal.h:382:37: error: unknown type name ‘ZSTD_dictMode_e’; did you mean ‘FSE_decode_t’?
    ZSTD_dictMode_e dictMode,
    ^~~~~~~~~~~~~~~
    FSE_decode_t

Questions:

benchmarking/run_bench.py -b specifications/models/caffe2/shufflenet/shufflenet.json --platforms android

thanks!
