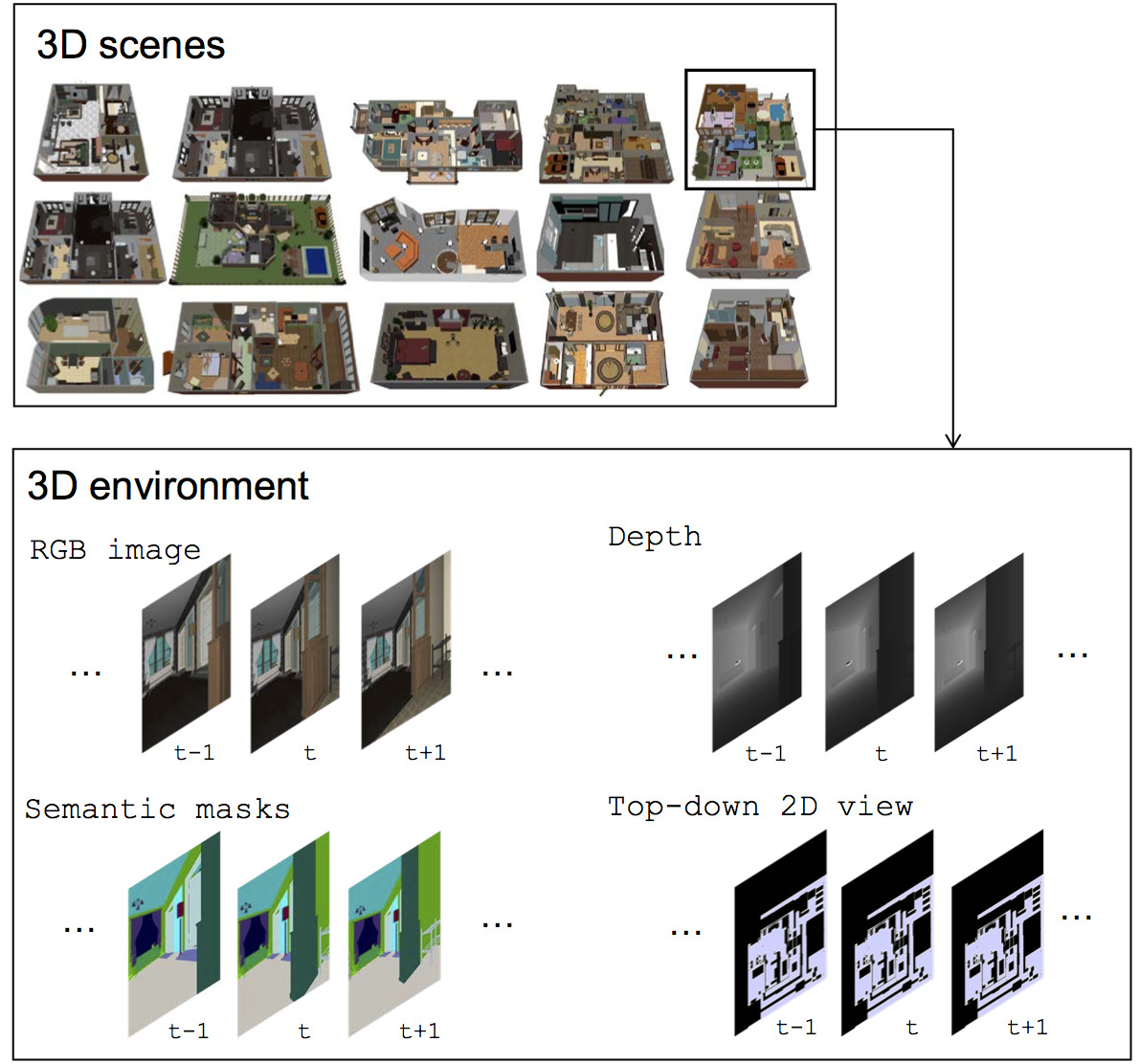

House3D is a virtual 3D environment consisting of over 45k indoor scenes sourced from the SUNCG dataset, with a diverse set of scene types, layouts and objects. The scenes range from studios to two-story houses with swimming pools and fitness rooms. All 3D objects are fully annotated with category labels. Agents in the environment have access to observations of multiple modalities, including RGB images, depth, segmentation masks and top-down 2D map views. The renderer runs at thousands of frames per second, making it suitable for large-scale RL training.

Usage instructions can be found in INSTRUCTION.md.

A. RoomNav (paper)

Yi Wu, Yuxin Wu, Georgia Gkioxari, Yuandong Tian

In this work we introduce a concept learning task, RoomNav, where an agent is asked to navigate to a destination specified by a high-level concept, e.g. dining room.

We demonstrate two neural models, a gated-CNN and a gated-LSTM, which effectively improve the agent's sensitivity to different concepts.

For evaluation, we emphasize generalization ability and show that our agent can generalize across environments thanks to the diverse, large-scale dataset.
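To make the gating idea more concrete, here is a minimal sketch of one common form of concept gating: the target concept is mapped to one sigmoid gate per feature channel, and the visual features are scaled channel-wise by those gates. This is an illustration only, not the architecture from the paper; the function names, shapes, random weights and one-hot concept vectors are all assumptions made for the example.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def concept_gate(concept_embedding, weights, bias):
    """Map a concept embedding (length D) to one gate in [0, 1] per channel.

    weights has shape [C][D] and bias has length C (hypothetical parameters;
    in a trained model they would be learned).
    """
    gates = []
    for row, b in zip(weights, bias):
        pre_activation = sum(w * e for w, e in zip(row, concept_embedding)) + b
        gates.append(sigmoid(pre_activation))
    return gates


def apply_gate(features, gates):
    """Scale each channel of features (shape [C][N]) by its gate."""
    return [[g * v for v in channel] for channel, g in zip(features, gates)]


# Toy setup: 4 feature channels, 3 concepts (one-hot), 5 spatial positions.
rng = random.Random(0)
num_channels, embed_dim, num_pixels = 4, 3, 5
weights = [[rng.uniform(-1, 1) for _ in range(embed_dim)]
           for _ in range(num_channels)]
bias = [0.0] * num_channels
features = [[1.0] * num_pixels for _ in range(num_channels)]

dining_room = [1.0, 0.0, 0.0]  # assumed one-hot concept encoding
kitchen = [0.0, 1.0, 0.0]

# The same visual features are modulated differently for each target concept.
gated_dining = apply_gate(features, concept_gate(dining_room, weights, bias))
gated_kitchen = apply_gate(features, concept_gate(kitchen, weights, bias))
```

Because the gates depend only on the concept, the same observation yields different downstream features for different targets, which is one way a policy can become sensitive to the instruction it is given.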

B. Embodied QA (project page | EQA paper | NMC paper)

Abhishek Das, Samyak Datta, Georgia Gkioxari, Stefan Lee, Devi Parikh, Dhruv Batra

Embodied Question Answering is a new AI task where an agent is spawned at a random location in a 3D environment and asked a natural language question ("What color is the car?"). In order to answer, the agent must first intelligently navigate to explore the environment, gather information through first-person (egocentric) vision, and then answer the question ("orange").

If you use our platform in your research, you can cite us with:

@article{wu2018building,
  title={Building generalizable agents with a realistic and rich 3D environment},
  author={Wu, Yi and Wu, Yuxin and Gkioxari, Georgia and Tian, Yuandong},
  journal={arXiv preprint arXiv:1801.02209},
  year={2018}
}

House3D is released under the Apache 2.0 license.