Bowen Cheng, Alexander G. Schwing, Alexander Kirillov

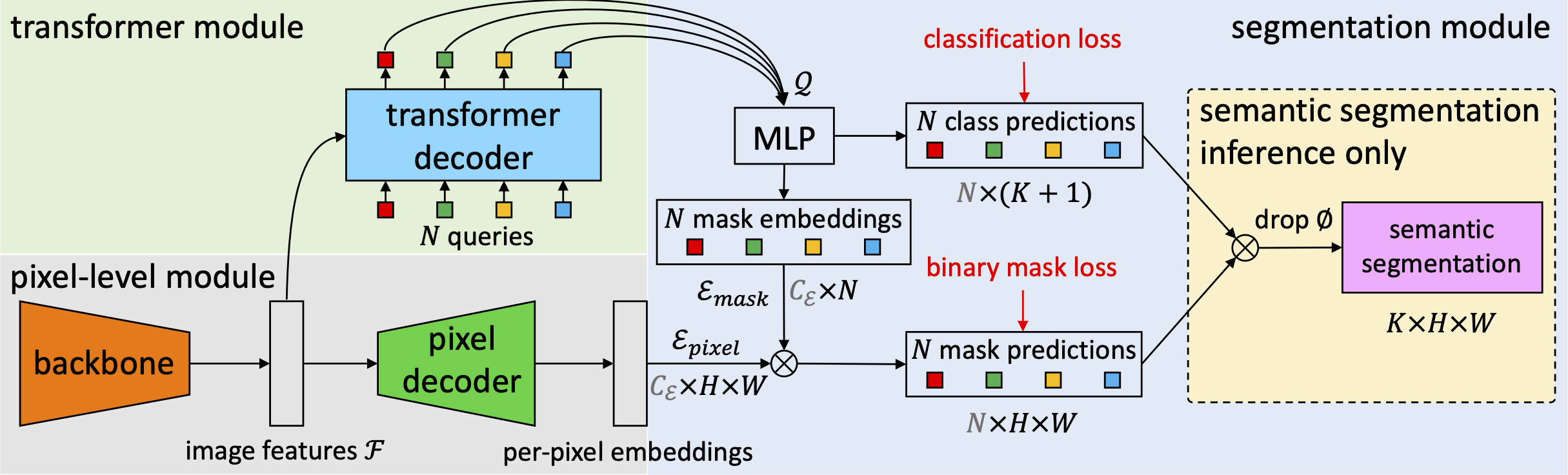

Check out Mask2Former, a universal architecture based on the MaskFormer meta-architecture that achieves state-of-the-art results on panoptic, instance, and semantic segmentation across four popular datasets (ADE20K, Cityscapes, COCO, Mapillary Vistas).

- Better results while being more efficient.
- Unified view of semantic- and instance-level segmentation tasks.
- Supports major semantic segmentation datasets: ADE20K, Cityscapes, COCO-Stuff, Mapillary Vistas.
- Supports ALL Detectron2 models.

See installation instructions.

See Preparing Datasets for MaskFormer.

See Getting Started with MaskFormer.

We provide a large set of baseline results and trained models available for download in the MaskFormer Model Zoo.

The majority of MaskFormer is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

However, portions of the project are available under separate license terms: Swin-Transformer-Semantic-Segmentation is licensed under the MIT license.

If you use MaskFormer in your research or wish to refer to the baseline results published in the Model Zoo, please use the following BibTeX entry.

    @inproceedings{cheng2021maskformer,
      title={Per-Pixel Classification is Not All You Need for Semantic Segmentation},
      author={Bowen Cheng and Alexander G. Schwing and Alexander Kirillov},
      journal={NeurIPS},
      year={2021}
    }