
add docs for istio sticky canary releases

Signed-off-by: Sanskar Jaiswal <jaiswalsanskar078@gmail.com>


Showing 2 changed files with 309 additions and 0 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,252 @@ | ||

| # Istio Canary Deployments | ||

|

|

||

| This guide shows you how to use Istio and Flagger to perform Canary releases combined with sticky sessions/session affinity. | ||

|

|

||

| Flagger can perform weighted routing and A/B testing individually; with Istio it can also combine the two into a single | ||

| strategy. For more information, see the [deployment strategies docs](../usage/deployment-strategies.md). | ||

|

|

||

|

|

||

|  | ||

|

|

||

| ## Prerequisites | ||

|

|

||

| Flagger requires a Kubernetes cluster **v1.16** or newer and Istio **v1.5** or newer. | ||

|

|

||

| Install Istio with telemetry support and Prometheus: | ||

|

|

||

| ```bash | ||

| istioctl manifest install --set profile=default | ||

|

|

||

| # Note: replace release-1.8 in the command below with the Istio version you installed. | ||

| kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.8/samples/addons/prometheus.yaml | ||

| ``` | ||

|

|

||

| Install Flagger in the `istio-system` namespace: | ||

|

|

||

| ```bash | ||

| kubectl apply -k github.com/fluxcd/flagger//kustomize/istio | ||

| ``` | ||

|

|

||

| Create an ingress gateway to expose the demo app outside of the mesh: | ||

|

|

||

| ```yaml | ||

| apiVersion: networking.istio.io/v1alpha3 | ||

| kind: Gateway | ||

| metadata: | ||

|   name: public-gateway | ||

|   namespace: istio-system | ||

| spec: | ||

|   selector: | ||

|     istio: ingressgateway | ||

|   servers: | ||

|     - port: | ||

|         number: 80 | ||

|         name: http | ||

|         protocol: HTTP | ||

|       hosts: | ||

|         - "*" | ||

| ``` | ||

| ## Bootstrap | ||

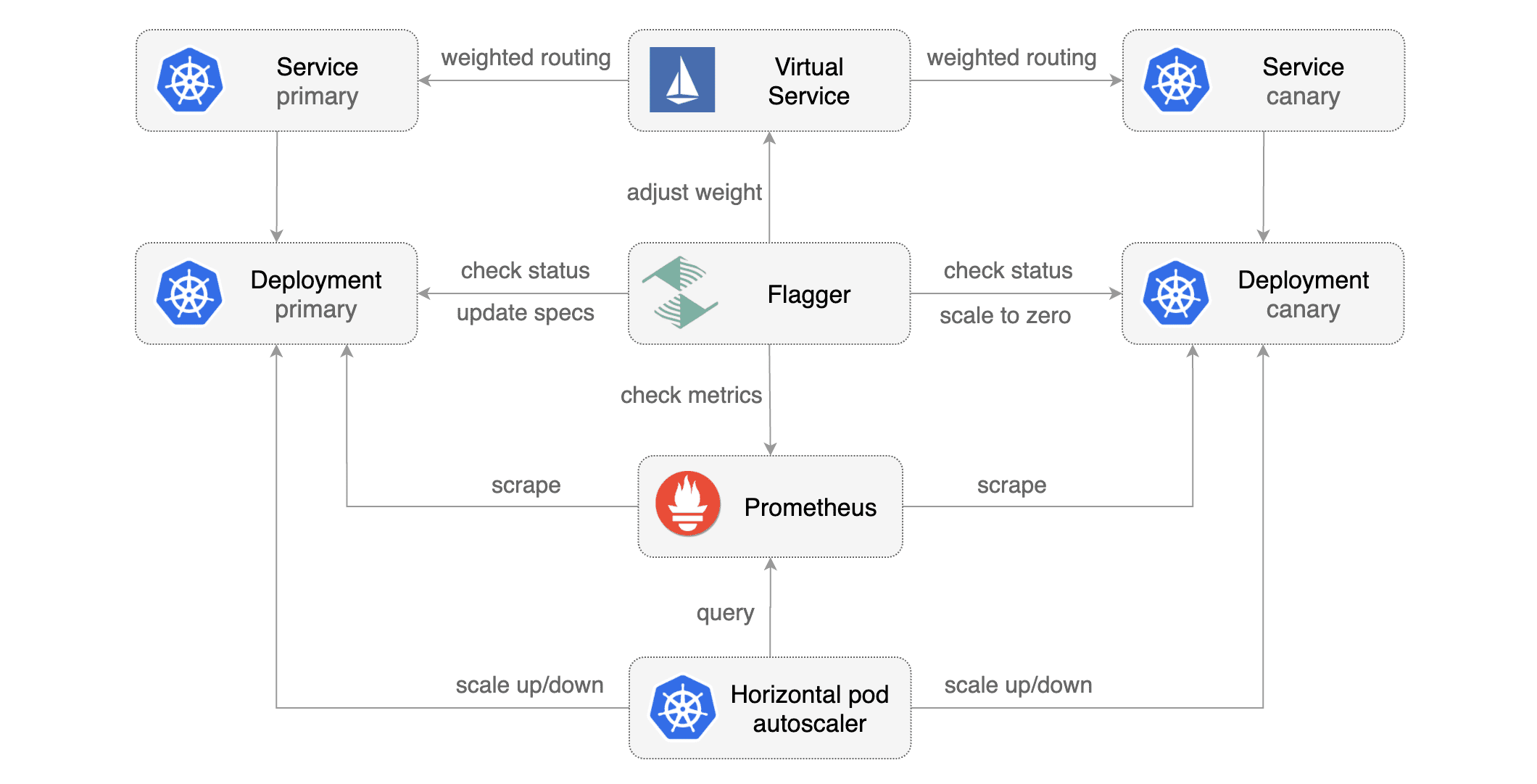

| Flagger takes a Kubernetes deployment and optionally a horizontal pod autoscaler \(HPA\), then creates a series of objects \(Kubernetes deployments, ClusterIP services, Istio destination rules and virtual services\). These objects expose the application inside the mesh and drive the canary analysis and promotion. | ||

| Create a test namespace with Istio sidecar injection enabled: | ||

| ```bash | ||

| kubectl create ns test | ||

| kubectl label namespace test istio-injection=enabled | ||

| ``` | ||

|

|

||

| Create a deployment and a horizontal pod autoscaler: | ||

|

|

||

| ```bash | ||

| kubectl apply -k https://github.com/fluxcd/flagger//kustomize/podinfo?ref=main | ||

| ``` | ||

|

|

||

| Deploy the load testing service to generate traffic during the canary analysis: | ||

|

|

||

| ```bash | ||

| kubectl apply -k https://github.com/fluxcd/flagger//kustomize/tester?ref=main | ||

| ``` | ||

|

|

||

| Create a canary custom resource \(replace example.com with your own domain\): | ||

|

|

||

| ```yaml | ||

| apiVersion: flagger.app/v1beta1 | ||

| kind: Canary | ||

| metadata: | ||

|   name: podinfo | ||

|   namespace: test | ||

| spec: | ||

|   # deployment reference | ||

|   targetRef: | ||

|     apiVersion: apps/v1 | ||

|     kind: Deployment | ||

|     name: podinfo | ||

|   # the maximum time in seconds for the canary deployment | ||

|   # to make progress before it is rolled back (default 600s) | ||

|   progressDeadlineSeconds: 60 | ||

|   # HPA reference (optional) | ||

|   autoscalerRef: | ||

|     apiVersion: autoscaling/v2beta2 | ||

|     kind: HorizontalPodAutoscaler | ||

|     name: podinfo | ||

|   service: | ||

|     # service port number | ||

|     port: 9898 | ||

|     # container port number or name (optional) | ||

|     targetPort: 9898 | ||

|     # Istio gateways (optional) | ||

|     gateways: | ||

|       - public-gateway.istio-system.svc.cluster.local | ||

|     # Istio virtual service host names (optional) | ||

|     hosts: | ||

|       - app.example.com | ||

|     # Istio traffic policy (optional) | ||

|     trafficPolicy: | ||

|       tls: | ||

|         # use ISTIO_MUTUAL when mTLS is enabled | ||

|         mode: DISABLE | ||

|     # Istio retry policy (optional) | ||

|     retries: | ||

|       attempts: 3 | ||

|       perTryTimeout: 1s | ||

|       retryOn: "gateway-error,connect-failure,refused-stream" | ||

|   analysis: | ||

|     # schedule interval (default 60s) | ||

|     interval: 1m | ||

|     # max number of failed metric checks before rollback | ||

|     threshold: 5 | ||

|     # max traffic percentage routed to canary | ||

|     # percentage (0-100) | ||

|     maxWeight: 50 | ||

|     # canary increment step | ||

|     # percentage (0-100) | ||

|     stepWeight: 10 | ||

|     # session affinity config | ||

|     sessionAffinity: | ||

|       # name of the cookie used | ||

|       cookieName: flagger-cookie | ||

|       # max age of the cookie (in seconds) | ||

|       # optional; defaults to 86400 | ||

|       maxAge: 21600 | ||

|     metrics: | ||

|       - name: request-success-rate | ||

|         # minimum req success rate (non 5xx responses) | ||

|         # percentage (0-100) | ||

|         thresholdRange: | ||

|           min: 99 | ||

|         interval: 1m | ||

|       - name: request-duration | ||

|         # maximum req duration P99 | ||

|         # milliseconds | ||

|         thresholdRange: | ||

|           max: 500 | ||

|         interval: 30s | ||

|     # testing (optional) | ||

|     webhooks: | ||

|       - name: acceptance-test | ||

|         type: pre-rollout | ||

|         url: http://flagger-loadtester.test/ | ||

|         timeout: 30s | ||

|         metadata: | ||

|           type: bash | ||

|           cmd: "curl -sd 'test' http://podinfo-canary:9898/token | grep token" | ||

|       - name: load-test | ||

|         url: http://flagger-loadtester.test/ | ||

|         timeout: 5s | ||

|         metadata: | ||

|           cmd: "hey -z 1m -q 10 -c 2 http://podinfo-canary.test:9898/" | ||

| ``` | ||

| Save the above resource as podinfo-canary.yaml and then apply it: | ||

| ```bash | ||

| kubectl apply -f ./podinfo-canary.yaml | ||

| ``` | ||

|

|

||

| When the canary analysis starts, Flagger will call the pre-rollout webhooks before routing traffic to the canary. The canary analysis will run for five minutes while validating the HTTP metrics and rollout hooks every minute. | ||
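The five-minute figure follows from the analysis settings in the Canary resource above. As a rough sketch, and assuming the timing rules are `interval * (maxWeight / stepWeight)` for promotion and `interval * threshold` for rollback, the arithmetic can be reproduced in bash. The helper names `to_seconds`, `promotion_seconds` and `rollback_seconds` are hypothetical and are not part of Flagger or kubectl.

```bash
#!/usr/bin/env bash
# Sketch: estimate canary timing from the analysis settings used in this guide.
# Assumption: promotion takes interval * (maxWeight / stepWeight) and
# rollback takes interval * threshold. Helper names are illustrative only.

# Convert a duration such as "1m" or "30s" to seconds (only s and m suffixes).
to_seconds() {
  local d="$1"
  case "$d" in
    *m) echo $(( ${d%m} * 60 )) ;;
    *s) echo "${d%s}" ;;
    *)  echo "$d" ;;
  esac
}

# Minimum time to validate and promote a canary, in seconds.
promotion_seconds() {
  local interval="$1" max_weight="$2" step_weight="$3"
  echo $(( $(to_seconds "$interval") * (max_weight / step_weight) ))
}

# Time until rollback when every metric check fails, in seconds.
rollback_seconds() {
  local interval="$1" threshold="$2"
  echo $(( $(to_seconds "$interval") * threshold ))
}

# Values from the Canary spec: interval 1m, maxWeight 50, stepWeight 10, threshold 5.
echo "promotion: $(promotion_seconds 1m 50 10)s"
echo "rollback:  $(rollback_seconds 1m 5)s"
```

With the values in this guide, both estimates come to 300 seconds. Integer division is a simplification here, so a `maxWeight` that is not a multiple of `stepWeight` would need separate handling.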

|

|

||

|  | ||

|

|

||

| After a couple of seconds Flagger will create the canary objects: | ||

|

|

||

| ```bash | ||

| # applied | ||

| deployment.apps/podinfo | ||

| horizontalpodautoscaler.autoscaling/podinfo | ||

| canary.flagger.app/podinfo | ||

|

|

||

| # generated | ||

| deployment.apps/podinfo-primary | ||

| horizontalpodautoscaler.autoscaling/podinfo-primary | ||

| service/podinfo | ||

| service/podinfo-canary | ||

| service/podinfo-primary | ||

| destinationrule.networking.istio.io/podinfo-canary | ||

| destinationrule.networking.istio.io/podinfo-primary | ||

| virtualservice.networking.istio.io/podinfo | ||

| ``` | ||

|

|

||

| ## Automated canary promotion | ||

|

|

||

| Trigger a canary deployment by updating the container image: | ||

|

|

||

| ```bash | ||

| kubectl -n test set image deployment/podinfo \ | ||

| podinfod=ghcr.io/stefanprodan/podinfo:6.0.1 | ||

| ``` | ||

|

|

||

| Flagger detects that the deployment revision changed and starts a new rollout: | ||

|

|

||

| ```text | ||

| kubectl -n test describe canary/podinfo | ||

| Status: | ||

| Canary Weight: 0 | ||

| Failed Checks: 0 | ||

| Phase: Succeeded | ||

| Events: | ||

| Type Reason Age From Message | ||

| ---- ------ ---- ---- ------- | ||

| Normal Synced 3m flagger New revision detected podinfo.test | ||

| Normal Synced 3m flagger Scaling up podinfo.test | ||

| Warning Synced 3m flagger Waiting for podinfo.test rollout to finish: 0 of 1 updated replicas are available | ||

| Normal Synced 3m flagger Advance podinfo.test canary weight 5 | ||

| Normal Synced 3m flagger Advance podinfo.test canary weight 10 | ||

| Normal Synced 3m flagger Advance podinfo.test canary weight 15 | ||

| Normal Synced 2m flagger Advance podinfo.test canary weight 20 | ||

| Normal Synced 2m flagger Advance podinfo.test canary weight 25 | ||

| Normal Synced 1m flagger Advance podinfo.test canary weight 30 | ||

| Normal Synced 1m flagger Advance podinfo.test canary weight 35 | ||

| Normal Synced 55s flagger Advance podinfo.test canary weight 40 | ||

| Normal Synced 45s flagger Advance podinfo.test canary weight 45 | ||

| Normal Synced 35s flagger Advance podinfo.test canary weight 50 | ||

| Normal Synced 25s flagger Copying podinfo.test template spec to podinfo-primary.test | ||

| Warning Synced 15s flagger Waiting for podinfo-primary.test rollout to finish: 1 of 2 updated replicas are available | ||

| Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test | ||

| ``` | ||

|

|

||

| You can load `app.example.com` in your browser and refresh it until you see the requests being served by `podinfo:6.0.1`. | ||

| From then on, all your requests are served by `podinfo:6.0.1` and not `podinfo:6.0.0`, because of the session affinity | ||

| configured by Flagger with Istio. | ||
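The same behaviour can be checked from the command line instead of a browser. The sketch below assumes that responses served by the canary carry a `Set-Cookie` header whose name matches the configured `cookieName` (`flagger-cookie` in this guide). The helper `extract_cookie` is hypothetical and only parses response headers; it does not contact the cluster.

```bash
#!/usr/bin/env bash
# Sketch: pull the session affinity cookie out of HTTP response headers.
# Assumption: canary responses include "Set-Cookie: <cookieName>=<value>; ...".

# Read response headers on stdin and print the value of the named cookie.
extract_cookie() {
  local name="$1"
  # Strip carriage returns, then capture everything between "name=" and ";".
  tr -d '\r' | sed -n "s/^[Ss]et-[Cc]ookie: *${name}=\([^;]*\).*/\1/p" | head -n 1
}

# Intended use against a live mesh (not executed here):
#   cookie="$(curl -s -o /dev/null -D - http://app.example.com/ | extract_cookie flagger-cookie)"
#   curl -s --cookie "flagger-cookie=${cookie}" http://app.example.com/
```

If the first request happens to land on the primary, no cookie is returned and the variable stays empty, so the first command may need to be repeated until a value appears.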

|

|

||

| **Note** that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis. | ||

|

|

||

| A canary deployment is triggered by changes in any of the following objects: | ||

|

|

||

| * Deployment PodSpec \(container image, command, ports, env, resources, etc.\) | ||

| * ConfigMaps mounted as volumes or mapped to environment variables | ||

| * Secrets mounted as volumes or mapped to environment variables | ||

|

|

||

| You can monitor all canaries with: | ||

|

|

||

| ```bash | ||

| watch kubectl get canaries --all-namespaces | ||

|

|

||

| NAMESPACE NAME STATUS WEIGHT LASTTRANSITIONTIME | ||

| test podinfo Progressing 15 2019-01-16T14:05:07Z | ||

| prod frontend Succeeded 0 2019-01-15T16:15:07Z | ||

| prod backend Failed 0 2019-01-14T17:05:07Z | ||

| ``` |
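For scripting, the table above can be filtered rather than watched. This is a minimal sketch that assumes the column order shown in the sample output (NAMESPACE, NAME, STATUS, WEIGHT, LASTTRANSITIONTIME). The function name `failed_canaries` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list canaries in the Failed state from `kubectl get canaries` output.
# Assumption: STATUS is the third whitespace-separated column, as shown above.

failed_canaries() {
  # Skip the header row, then print namespace/name for rows whose STATUS is Failed.
  awk 'NR > 1 && $3 == "Failed" { print $1 "/" $2 }'
}

# Intended use against a live cluster (not executed here):
#   kubectl get canaries --all-namespaces | failed_canaries
```

Applied to the sample output above, the filter would print `prod/backend`.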
