
build: rename main → llama-cli, server → llama-server, llava-cli → llama-llava-cli, etc...

#7809


Conversation

gitignore llama-server

fix examples/main ref

Then why are there so many reports like mine? Not necessarily official reports, mind you (although I think I saw a couple official issues). Check reddit, for example. It's not just me. Also, @crashr predicted the problem early on. He was absolutely right and should have been taken more seriously.

I don't see the correlation between speed and open source. There is a full spectrum of development methodologies in both closed and open source. Fast and slow.

This should not be an excuse to disregard user experience. In this case (and others - again, a pattern here), the change was unnecessary and broke the user experience in a way that cost a lot of people a lot of resources. One person on reddit has been struggling for the past month. The breaking change itself wasn't the problem, per se. The problem was that the impact of the change could have been easily mitigated with the slightest effort. It's the same attitude I've been calling out for a while now. Too many devs simply care too little about the users, and I think you're illustrating my point.

Which demonstrably wasn't good enough. The readme is notoriously unreliable.

The readme details what you need to do to use llama.cpp, including "make." If the users are expected to do something different to not get screwed, it would make sense for the readme to reflect that. If you think all your users are supposed to know "make clean," then why do you tell them how to use "make?" This is obviously confusing and inconsistent. "Llama.cpp is dev-oriented so will not as friendly" is an excuse and a bad one at that. I'm not "mad" because you don't have a GUI. Maybe the readme should say in bold letters across the top, "If you don't know what 'make clean' is, f off."

If that was true, you wouldn't tell people the basics of compilation in the readme. Also, the implication that I don't have basic knowledge about compiling is presumptuous and without merit. I compiled my first linux kernel over 20 years ago and have compiled thousands of programs since.

You'll note I did ask about it and no one has responded. Also, I don't see how that precludes me from providing feedback. This is yet another case of someone criticizing me without even bothering to read my posts. You're telling me to do the very thing I've already done. You'll forgive me for not taking you very seriously moving forward, considering the smell of bad faith and elitism.

Speak for yourself. I'm not in the habit of crediting moves that cause the community and me so much trouble for no benefit. Also, I guess I fail to see how renaming a bunch of binaries and breaking everyone's workflows (including organizations like huggingface) is a good thing worthy of praise. But I'm just a user, so what could I possibly know, right?

You're out of touch. All that said, I do want to thank the devs for all the good work they do, as I often do. But for things to improve, criticism is required (tough love). I understand that's surprising to a lot of people these days. Edit - I especially want to thank @HanClinto. Most devs could learn a lot from him. MVP

@oldmanjk You're not entirely wrong. I recognized the problem because I saw the old binary name in a recent screenshot on Reddit and wondered how that could happen. I generally welcome the change to the binary names, but I also don't see any way of preventing someone from falling foul of this. If, for example, the old binaries had been deleted via makefile, some pipeline somewhere would certainly have broken a productive system. Ok, at least one would have noticed it immediately. Ultimately, one should read the release notes before making any changes like compiling a new binary. Yes, very few of us do. But we should.

Yeah, this is a bit concerning to me, and I'm trying to think of what more we can do to mitigate this fallout. I've put some suggestions here, but I'm currently leaning towards:

There is more discussion in that PR, but I like the idea of overwriting the old binaries (at least for things like @oldmanjk

Thanks for the shoutout, though it takes all kinds to move a project of this size forward, and I'm just a very small part. Nobody who works on this project takes breaking changes like this casually, and -- despite how it may appear from another vantage -- I hope you trust me when I say that nothing in this migration change was done recklessly or without consideration (the pages and pages of discussion at the beginning of the PR should illustrate this). I hope you'll give us all credit for that -- it's messy, difficult, and there are a lot of tradeoffs to balance, and sometimes we adapt on the fly (which is what we're doing here). This name change should tide us over for a while, and hopefully we won't need another one until we move to the supercommand (and that one should be more backwards-compatible).

What am I wrong about?

There are many ways. @HanClinto and I are discussing a few in another thread.

Exactly. Although there are better ways.

I usually do when I'm installing releases. That's not what's happening here though. We're not compiling releases.

The communication thing. What else could have been done but write it in big letters at the top?

Well, there are a few things, though some are most clear in hindsight. One suggestion is implemented in #8283 -- please give it a look-see! :)

You're welcome. You deserved it.

Disagree. I'm going to assume you're just being humble, because based on what I've seen so far, you're intelligent enough to understand your true value.

I understand what you're trying to do here, but strong disagree.

I do when you're not lying. I don't think you believe what you're saying. Again, I understand what you're trying to do, but it's misguided.

This migration change was absolutely done recklessly. There was consideration, but it wasn't taken seriously.

Disagree. They illustrate what I'm saying. Also, and more importantly, the results illustrate what I'm saying.

I give credit where credit is due. Like how we used to back in the day (as a society). The "everyone gets a trophy" mentality is more destructive than most people realize.

When you make it that way. It certainly didn't have to be.

I understand software development is difficult, but I disagree that what was done here was difficult. Changing the names of binaries is not difficult. Changing the names of binaries without screwing the userbase is a little more difficult, but still not difficult. The review-complexity label on your new PR being "low" is evidence towards this point. You being able to instantly come up with three perfectly good solutions off the top of your head is evidence towards this point.

The scale needs new batteries.

I lit a fire and you responded like an MVP with the best attitude here. That's what happened. Credit where credit is due. Just take the compliment. Also credit to @crashr for his excellent warning, even though it wasn't taken seriously.

Let's prepare for that

The personal deprecations and insinuations are getting tiresome. I'm glad that you appreciate my approach to customer service on bug reports, but aspersions cast at the other volunteers on this project (even if it's only by way of complimenting me to the isolation of others) are not helping me or anyone else. I'm a volunteer here, as is most everyone else, and we all need to strive to be professional and gracious. A bit of grace and professional courtesy go a long way here -- it's a messy situation, and berating other devs with blame-and-shame is not going to help me do anything other than walk away for a while. Thank you (and others) for the issue reports, but please recognize that continuing in this vein will wear me (and others) thin.

It's getting personal, off-topic, and starting to go in circles. If you want to continue this argument, there are more appropriate venues. Thank you.

This post is so off-base I'm not even going to bother anymore. My work here is done (for now)

It was a repeat of a question aimed at Crashr. It's cluttering up this PR which is (currently) linked as the primary source for information about the name migration. Continuing in circles with personal arguments will make it more difficult for other users to sift through and find relevant information about the change in the future. Because of the prominence of this PR, anything we can do to keep this thread focused and un-cluttered is good. If you'd like to continue, maybe moving things to a less prominent location would be best (perhaps #8283?). Thank you.

* Update llama.cpp.rst

  Llama.cpp just updated their program names, I've updated the article to use the new name.

  quantize -> llama-quantize
  main -> llama-cli
  simple -> llama-simple

  [Check out the PR](ggml-org/llama.cpp#7809)

* Updated llama.cpp.rst ( removed -cml )

* Update llama.cpp.rst

---------

Co-authored-by: Ren Xuancheng <jklj077@users.noreply.github.com>

* Adding a simple program to provide a deprecation warning that can exist to help people notice the binary name change from #7809 and migrate to the new filenames.

* Build legacy replacement binaries only if they already exist. Check for their existence every time so that they are not ignored.
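The two ideas in this commit message (a stand-in program that only prints a deprecation notice, and building it only where an old binary is already present) can be sketched roughly as follows. This is an illustrative Python sketch, not the code from #8283: the `RENAMED` table is a partial list taken from names mentioned in this thread, and `deprecation_message` and `stale_legacy_binaries` are hypothetical helper names.

```python
import os
import sys

# Partial old -> new mapping, limited to names mentioned in this thread.
RENAMED = {
    "main": "llama-cli",
    "server": "llama-server",
    "quantize": "llama-quantize",
    "simple": "llama-simple",
    "llava-cli": "llama-llava-cli",
}


def deprecation_message(old_name: str) -> str:
    """Build the notice a legacy stand-in binary would print (wording is an assumption)."""
    new_name = RENAMED.get(old_name, f"llama-{old_name}")
    return (
        f"WARNING: The binary '{old_name}' is deprecated.\n"
        f"Please use '{new_name}' instead (see #7809)."
    )


def stale_legacy_binaries(build_dir: str) -> list[str]:
    """Return the old names that still exist as files in build_dir.

    Only these would get a replacement stand-in, so a fresh checkout
    is not littered with legacy names.
    """
    return sorted(
        name for name in RENAMED
        if os.path.isfile(os.path.join(build_dir, name))
    )


if __name__ == "__main__":
    # When installed under an old name, print the notice and fail loudly.
    print(deprecation_message(os.path.basename(sys.argv[0])), file=sys.stderr)
    sys.exit(1)
```

Exiting with a non-zero status is a design choice in this sketch: a script that still calls the old name stops immediately instead of silently running an outdated binary.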


Personally, I don't think this change is needed, because I thought the old scripts and docs were already stable. But it's definitely good to make it neat. But if it's going to be renamed, did no one notice this problem?

I agree with you that |

Agreed. I can see some small benefit, but it certainly wasn't worth the damage done. Had it been done in a manner that didn't break anything (and yes, this was very possible), I would have supported it.

Well, stable might be a stretch, but not breaking things is obviously more-stable.

If you do it without breaking things.

Agreed, but IMO, not prepending everything with the same thing is even better. Even better is to not fix what isn't broken, so I think it's best to just leave it alone now.

Agreed. I don't think the usage of "llama" really makes sense at all anymore for this project, but again, better to leave it now.

It never made sense to me, but I assumed it was used for a good reason

Thanks @HanClinto for giving me the reference. My bad for not noticing/reading the full convo.

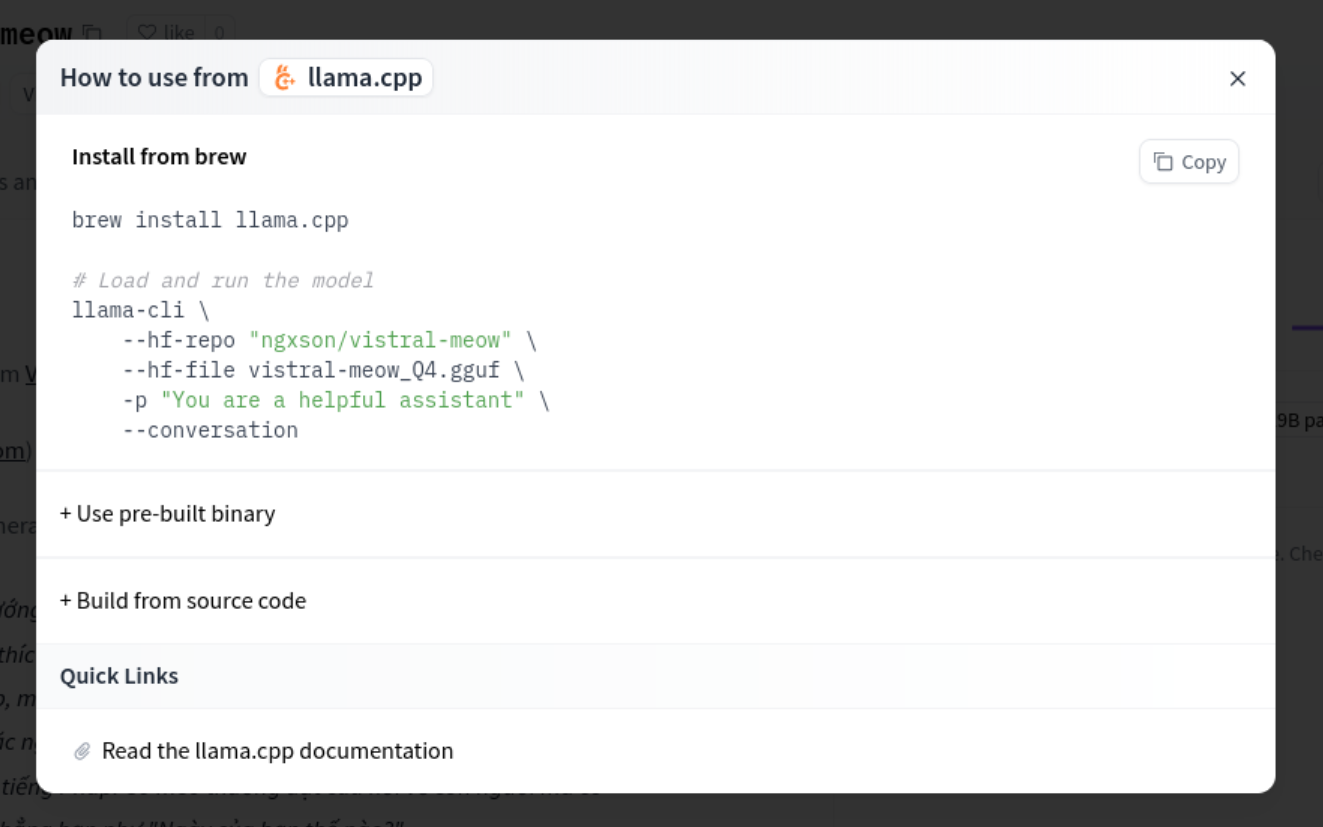

In this PR, I propose some changes:

- Update binary name to `llama-cli` (for more details, see this PR: ggml-org/llama.cpp#7809 and this [homebrew formula](https://github.com/Homebrew/homebrew-core/blob/03cf5d39d8bf27dfabfc90d62c9a3fe19205dc2a/Formula/l/llama.cpp.rb))
- Add method to download llama.cpp via pre-built release
- Split snippet into 3 sections: `title`, `setup` and `command`
- Use `--conversation` mode to start llama.cpp in chat mode (chat template is now supported, ref: ggml-org/llama.cpp#8068)

---

Proposal for the UI:

(Note: Maybe the 3 sections title - setup - command can be more separated visually)

This renames binaries to have a consistent `llama-` prefix.

- `main` → `llama-cli`
- `any-other-example` → `llama-any-other-example`, e.g: `llama-server`, `llama-baby-llama`, `llama-llava-cli`, `llama-simple`
- (`rpc-server` = only exception, name unchanged - see below)

Goal is to make any scripts / docs work across all the ways to install (cmake, brew, rpm, nix...).

For RPM-based distros, the cuda & clblast variants' prefix is `llama-cuda-` / `llama-clblast-`.

The Homebrew formula will be updated separately (`llama` → `llama-cli`).

Note: can install a local build w/:

See discussion below about naming options considered.
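For anyone updating scripts, the renaming rule in this description can be written as a small lookup. This is a minimal Python sketch with a hypothetical helper name (`new_binary_name`); leaving names that already start with `llama-` untouched is an assumption, not something stated in the description.

```python
# Sketch of the renaming rule described above (helper name is hypothetical).
SPECIAL_CASES = {"main": "llama-cli"}  # stated explicitly in the PR description
UNCHANGED = {"rpc-server"}             # the only exception named in the PR description


def new_binary_name(old_name: str) -> str:
    """Map a pre-rename binary name to its name after this PR."""
    if old_name in UNCHANGED:
        return old_name
    if old_name in SPECIAL_CASES:
        return SPECIAL_CASES[old_name]
    # Assumption: a name that already carries the prefix is left as-is.
    if old_name.startswith("llama-"):
        return old_name
    return f"llama-{old_name}"
```

With this rule, `new_binary_name("server")` gives `llama-server` and `new_binary_name("llava-cli")` gives `llama-llava-cli`, matching the PR title.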