
AVX2 ggml_vec_dot_q4_0 performance improvement ~5% #768


Prefetch data used later in the loop


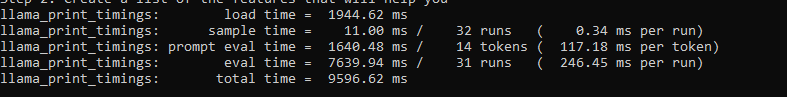

Well, running master and this PR about 5 times in a row each, here's what I got. Ryzen 2600 on Linux.

This PR:

Edit: On a 13B model, the average is about 325 ms versus 315 ms.

Same for me, 7B q4_0.

This PR:

13B q4_0

7B q4_0

    @@ -1975,6 +1975,10 @@ static void ggml_vec_dot_q4_0(const int n, float * restrict s, const void * rest

        // This loop will be unrolled by the compiler
        for (int u=0;u<UNROLL_COUNT;u++) {
            // Prefetch data used later in the loop
            // TODO these numbers are device dependent shouldn't be hard coded derive
            _mm_prefetch ( x[i+u].qs + 32*20, 1); // to-do: document what 32*20 even is


Yes what even is it? ;-) Is 20 intended to be == sizeof(block_q4_0)?

Or could you use CACHE_LINE_SIZE here somehow?

An interesting experiment would be to pad block_q4_0 by e.g. 4 bytes and see if the optimum prefetch offset moves. That would give a clue that the offset should be a certain multiple of sizeof(block_q4_0), or rather independent of it.
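One way to read the constant, as a minimal sketch rather than the PR author's confirmed intent: if `block_q4_0` holds one `float` scale plus `QK/2 = 16` bytes of packed quants (an assumption about the struct layout at the time), then `sizeof(block_q4_0)` is 20 and `32*20` means "32 blocks ahead", which is 640 bytes or 10 cache lines of 64 bytes. The names `PREFETCH_BLOCKS_AHEAD`, `PREFETCH_BYTES_AHEAD`, `PREFETCH_READ` and `sum_scales_with_prefetch` are hypothetical, `CACHE_LINE_SIZE` is assumed to be 64, and the loop body is a stand-in for the real dot product.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define QK 32  /* values per quantized block (assumed) */

/* Assumed layout: one float scale followed by QK/2 bytes of packed 4-bit values. */
typedef struct {
    float   d;
    uint8_t qs[QK / 2];
} block_q4_0;

/* Hypothetical names for the magic number 32*20 from the diff. */
#define CACHE_LINE_SIZE       64  /* assumed; typical for x86 */
#define PREFETCH_BLOCKS_AHEAD 32  /* tuning knob, device dependent */
#define PREFETCH_BYTES_AHEAD  (PREFETCH_BLOCKS_AHEAD * sizeof(block_q4_0))

/* Read prefetch with low temporal locality; a no-op on other compilers. */
#if defined(__GNUC__) || defined(__clang__)
#define PREFETCH_READ(p) __builtin_prefetch((const void *)(p), 0, 1)
#else
#define PREFETCH_READ(p) ((void)(p))
#endif

/* How many cache lines the prefetch distance spans. */
static size_t prefetch_cache_lines_ahead(void) {
    return PREFETCH_BYTES_AHEAD / CACHE_LINE_SIZE;
}

/* Sum the block scales while prefetching a fixed number of blocks ahead.
   Unlike the diff, this prefetches the start of the block (not .qs) and
   skips the prefetch near the end so no out-of-range pointer is formed. */
static float sum_scales_with_prefetch(const block_q4_0 *x, size_t nb) {
    assert(x != NULL || nb == 0);
    float acc = 0.0f;
    for (size_t i = 0; i < nb; i++) {
        if (i + PREFETCH_BLOCKS_AHEAD < nb) {
            PREFETCH_READ(&x[i + PREFETCH_BLOCKS_AHEAD]);
        }
        acc += x[i].d;
    }
    return acc;
}
```

Expressed this way, padding the struct changes `PREFETCH_BYTES_AHEAD` automatically while the block count stays fixed. A hard-coded byte offset would do the opposite, and that difference is what the padding experiment suggested above would measure.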

Fix the hardcoded constants and reopen if there is a confirmed improvement.

Prefetches some data before its use

(based on original work: #295)

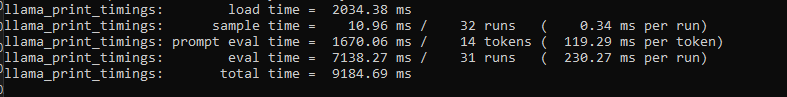

Before (usually 245 - 260 ms):

After (usually 226 - 234 ms):

Tested on Windows 10 with an i7-10700K. This needs further testing on other OSes and CPUs to make sure there are no unintended issues, hence the draft status. The constants also still need appropriate names!