An input component for navigating and controlling your app in natural language, using an LLM via LangChain.dart.

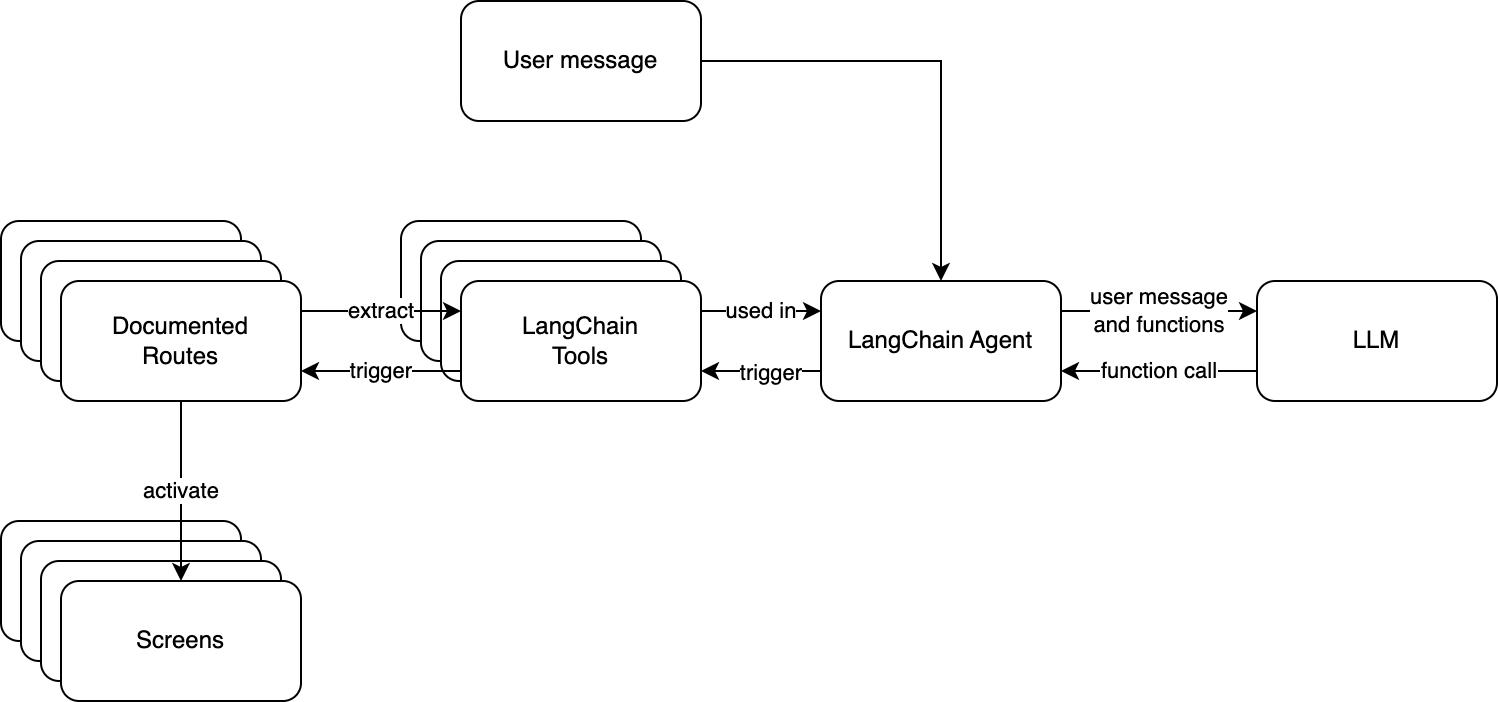

A natural language input field sends the user's request to an LLM along with functions that describe the screens of the app, using 'function calling'. The LLM replies with JSON that names the function to call, and the app uses it to open the matching screen.
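The last step (from the model's JSON reply to a screen) can be sketched in plain Dart, without LangChain.dart. This is a minimal sketch that assumes the reply arrives in the OpenAI-style `function_call` shape: a function `name` plus `arguments` encoded as a JSON string. The function names, the `screenRoutes` table and `routeFromFunctionCall` are hypothetical and not taken from the Langbar source.

```dart
import 'dart:convert';

/// Hypothetical mapping from function names (one per screen) to route paths.
const Map<String, String> screenRoutes = {
  'showAccounts': '/accounts',
  'showTransactions': '/transactions',
};

/// Turns an OpenAI-style assistant message into a route location such as
/// '/transactions?accountId=42'. Returns null when the model did not call
/// a known function.
String? routeFromFunctionCall(String messageJson) {
  final message = jsonDecode(messageJson) as Map<String, dynamic>;
  final call = message['function_call'] as Map<String, dynamic>?;
  if (call == null) return null; // plain text answer, nothing to navigate to

  final path = screenRoutes[call['name']];
  if (path == null) return null; // the model named an unknown screen

  // The arguments arrive as a JSON-encoded string, not as a nested object.
  final args = jsonDecode(call['arguments'] as String? ?? '{}')
      as Map<String, dynamic>;
  final query = args.map((key, value) => MapEntry(key, '$value'));

  // Omit the query part entirely when there are no arguments.
  return Uri(path: path, queryParameters: query.isEmpty ? null : query)
      .toString();
}

void main() {
  // Example assistant message as it might come back from the model.
  const reply = r'{"role":"assistant","content":null,'
      r'"function_call":{"name":"showTransactions",'
      r'"arguments":"{\"accountId\":42}"}}';
  print(routeFromFunctionCall(reply));
}
```

The returned location is something a router could navigate to; a reply without a function call yields `null`, so a caller could show the model's text answer instead.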

Medium Article: Natural Language Bar

Google Play Demo: Langbar

Web Demo: Langbar

Youtube: Langbar

LinkedIn: Hans van Dam

Prerequisites:

- Flutter SDK

- Dart

Installation:

- Clone the repo.

- Install the dependencies by running the following command in the project root folder:

```shell
flutter pub get
```

To run the project, you have to provide your own OpenAI API key and put it between the quotes in `lib/openAIKey.dart`:

```dart
String getOpenAIKey() => "";
```

This is not the way to do it in production code, but for now it is the easiest way to get started. Also: do NOT deploy a web version of the app this way (running it locally is fine), because your API key will be blocked within 10 seconds of the first request.
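If you would rather keep the key out of the source file while developing, one option (an assumption, not what this repo does) is a compile-time define read with Dart's `String.fromEnvironment`. The sketch keeps the original `getOpenAIKey()` signature so it could replace the contents of `lib/openAIKey.dart`; the variable name `OPENAI_API_KEY` is chosen here for illustration. You would then start the app with `flutter run --dart-define=OPENAI_API_KEY=<your key>`.

```dart
/// Read at compile time from --dart-define=OPENAI_API_KEY=...
/// (the variable name is a hypothetical choice for this sketch).
/// Must be const: String.fromEnvironment is only reliable in a const context.
const String _openAIKey = String.fromEnvironment('OPENAI_API_KEY');

/// Drop-in replacement for the hard-coded version in lib/openAIKey.dart.
String getOpenAIKey() {
  if (_openAIKey.isEmpty) {
    // Fail early with a readable message instead of an opaque 401 later on.
    throw StateError(
        'No OpenAI key: run with --dart-define=OPENAI_API_KEY=<your key>');
  }
  return _openAIKey;
}
```

This only keeps the key out of source control: the value is still compiled into the app, so the warning about web builds applies unchanged.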

- Download Android Studio ;)

- Open the project in Android Studio

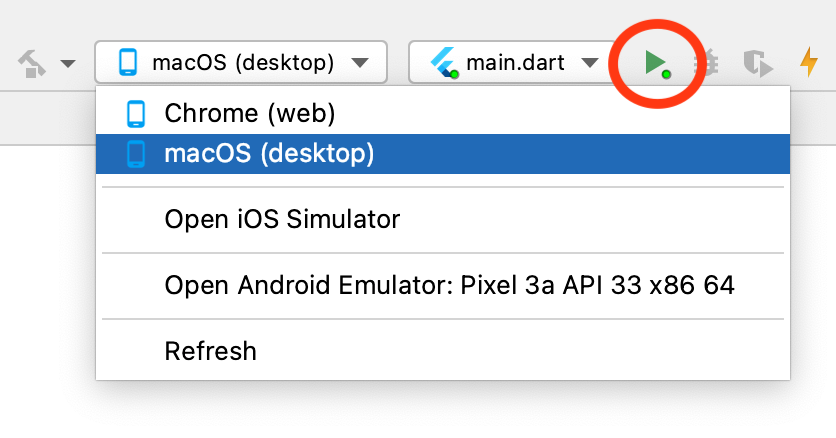

- Run it:

Currently, this project is still in the form of a sample app.

Routes are wrapped in a `LangbarWrapper` (see `routes.dart`), which shows the natural language bar (NLB) at the bottom of every screen; you can also omit it on screens where you don't want it. The same file also contains the functional documentation of all routes.

Routes are actually triggered by LangChain Tools. These Tools are extracted automatically from the DocumentedGoRoutes (see `send_to_llm.dart`) and passed to a LangChain agent, which builds the prompt.
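The idea of deriving tools from route documentation can be sketched in plain Dart. This is not the actual DocumentedGoRoute or LangChain.dart API: `DocumentedRoute`, its fields and `toFunctionDefinition` are hypothetical, and every parameter is assumed to be a string. The output follows the JSON Schema shape (name, description, parameters) that OpenAI-style function calling expects.

```dart
import 'dart:convert';

/// Hypothetical stand-in for a documented route: a path plus the
/// natural-language documentation the LLM needs to decide when to open it.
class DocumentedRoute {
  const DocumentedRoute({
    required this.name,
    required this.path,
    required this.description,
    this.parameters = const {},
  });

  final String name;
  final String path;
  final String description;

  /// Parameter name -> description; all parameters are treated as strings.
  final Map<String, String> parameters;

  /// Converts the route documentation into a function definition.
  Map<String, dynamic> toFunctionDefinition() => {
        'name': name,
        'description': description,
        'parameters': {
          'type': 'object',
          'properties': {
            for (final entry in parameters.entries)
              entry.key: {'type': 'string', 'description': entry.value},
          },
          'required': parameters.keys.toList(),
        },
      };
}

void main() {
  const routes = [
    DocumentedRoute(
      name: 'showTransactions',
      path: '/transactions',
      description: 'Shows the transaction history of one account.',
      parameters: {'accountId': 'The id of the account to show.'},
    ),
  ];
  // One function definition per documented route.
  final tools = routes.map((r) => r.toFunctionDefinition()).toList();
  print(const JsonEncoder.withIndent('  ').convert(tools));
}
```

Keeping the documentation next to the route definition means a new screen becomes callable by the LLM as soon as it is documented, without a separately maintained list of functions.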

The current version of the app uses RAG (retrieval-augmented generation) via the Pinecone API, which is a very simple way to get started with RAG. If you don't configure anything for RAG, you will get an exception. You can easily create a free Pinecone account, get your own API key and put it in `retrieval.dart`. It is rough, but it works. Personally, I route all calls through a Firebase proxy to protect the keys.
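At its core, the retrieval step is a similarity search over embedding vectors. The sketch below is an in-memory stand-in for that lookup, not the Pinecone API or the code in `retrieval.dart`: `Chunk`, `cosineSimilarity` and `topK` are hypothetical names, and the 3-dimensional vectors are toy values (real embeddings come from an embedding model and have far more dimensions). It ranks stored texts by cosine similarity, one of the metrics vector databases such as Pinecone offer; the top results are what RAG adds to the prompt as extra context.

```dart
import 'dart:math';

/// One stored text chunk with its precomputed embedding vector.
class Chunk {
  const Chunk(this.text, this.embedding);
  final String text;
  final List<double> embedding;
}

/// Cosine of the angle between two vectors: 1.0 means same direction.
double cosineSimilarity(List<double> a, List<double> b) {
  if (a.length != b.length) {
    throw ArgumentError('Vectors must have the same dimension');
  }
  var dot = 0.0, normA = 0.0, normB = 0.0;
  for (var i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA == 0 || normB == 0) return 0.0; // avoid division by zero
  return dot / (sqrt(normA) * sqrt(normB));
}

/// Returns the texts of the [k] chunks most similar to [query], best first.
List<String> topK(List<double> query, List<Chunk> chunks, int k) {
  final ranked = [...chunks]
    ..sort((x, y) => cosineSimilarity(query, y.embedding)
        .compareTo(cosineSimilarity(query, x.embedding)));
  return ranked.take(k).map((c) => c.text).toList();
}

void main() {
  // Toy embeddings; in a real setup these come from an embedding model.
  const chunks = [
    Chunk('Transfers are made from the payments screen.', [0.9, 0.1, 0.0]),
    Chunk('The settings screen changes the app language.', [0.0, 0.2, 0.9]),
  ];
  print(topK([1.0, 0.0, 0.1], chunks, 1));
}
```

Scanning and sorting every chunk is fine for a handful of entries; a hosted vector database exists precisely to avoid this linear scan once the collection grows.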