
RFC: Token stream #560


Forget this, I accidentally enabled Added few bang patterns, now the speedup is noticeable ( |


The |


For fancy encoding of token stream see https://gist.github.com/phadej/31bdb72c815766edde1eaf3efe8cf0ff, that would allow making safe I think it's a good exercise for type-hackery, but |
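For readers who want the flavour of a "safe" stream without opening the gist, here is a minimal sketch. It is not the encoding from the gist: it swaps the GADT machinery for a plain nested datatype, and every name in it (`ValueToks`, `ItemToks`, `FieldToks`, `JValue`, `Lit`) is hypothetical. Numbers are `Double` and strings are `String` only to keep the example dependency-free. The idea is that each token carries the rest of the stream, typed by what may legally follow, so an unbalanced stream does not typecheck and the consumer can be total.

```haskell
-- Literal payloads (simplified; aeson would use Scientific and Text).
data Lit = LNull | LBool Bool | LNumber Double | LString String
  deriving (Eq, Show)

-- Tokens of exactly one JSON value, followed by a continuation k.
data ValueToks k
  = TkLit Lit k
  | TkArrayOpen (ItemToks k)
  | TkObjectOpen (FieldToks k)

-- Inside an array: either the array ends, or one more value follows.
data ItemToks k
  = TkArrayEnd k
  | TkItem (ValueToks (ItemToks k))

-- Inside an object: either the object ends, or a key and its value follow.
data FieldToks k
  = TkObjectEnd k
  | TkKey String (ValueToks (FieldToks k))

-- A complete document is one value followed by nothing.
type TokenStream = ValueToks ()

data JValue
  = JLit Lit
  | JArray [JValue]
  | JObject [(String, JValue)]
  deriving (Eq, Show)

-- The signatures are required: these functions use polymorphic recursion.
value :: ValueToks k -> (JValue, k)
value (TkLit l k)       = (JLit l, k)
value (TkArrayOpen is)  = let (xs, k) = items is in (JArray xs, k)
value (TkObjectOpen fs) = let (kvs, k) = fields fs in (JObject kvs, k)

items :: ItemToks k -> ([JValue], k)
items (TkArrayEnd k) = ([], k)
items (TkItem v) =
  let (x, rest) = value v
      (xs, k)   = items rest
  in (x : xs, k)

fields :: FieldToks k -> ([(String, JValue)], k)
fields (TkObjectEnd k) = ([], k)
fields (TkKey key v) =
  let (x, rest) = value v
      (kvs, k)  = fields rest
  in ((key, x) : kvs, k)

-- Total: every well-typed stream yields a value.
toValue :: TokenStream -> JValue
toValue = fst . value

-- Tokens for [1, {"a": true}].
example :: TokenStream
example =
  TkArrayOpen $ TkItem $ TkLit (LNumber 1) $ TkItem $ TkObjectOpen $
    TkKey "a" $ TkLit (LBool True) $ TkObjectEnd $ TkArrayEnd ()
```

Dropping the final `TkArrayEnd` from `example` is a type error rather than a runtime failure, which is the property the comment is after. The cost is that a tokenizer cannot produce this type directly from untrusted input without some error channel.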

Small performance optimisation efforts, which don't make a difference.

```haskell
newtype TokenParser = TokenParser
  { runTokenParser :: [TokenParser] -> BSL.ByteString -> TokenStream }
```


Turning `[TokenParser]` into a strict list (both elements and spine) doesn't seem to have any effect on performance.


I would like to use this order-preserving decoder in aeson or as a separate library for my encoding-agnostic validation language and use `TokenStream` to create an instance of `Tree`.

```haskell
data Label
  = String Text
  | Number Scientific
  | Bool Bool

class Tree a where
  getLabel :: a -> Label
  getChildren :: a -> [a]
```

But maybe this isn't the right place for this comment. I just want to say that I am looking forward to using this.

Superseded by #996

While working on the SAX-like, stream-based JSON parser, I noticed that parsing `Value` with it is faster than with `attoparsec`. It started as a separate library, but as it makes an isolated (and quite internal) part of `aeson` a bit faster, I made this PR. If you think this doesn't belong in `aeson`, I'm OK with making it into its own library (exposing a few internals, `jsstring_` and `scientific`, would be useful though).

Notes:

- `,` or colons `:` as they are redundant; it makes things a bit more straightforward.
- `[Token]` seems to be faster than fusing the structures together. The decoder variant as in https://github.com/well-typed/binary-serialise-cbor/blob/0236307ba953d8d2a8351455587e4c12b813397d/cborg/src/Codec/CBOR/Decoding.hs could be an improvement too (we could have different decoder actions to read a number as a bytestring/text, for example). I should try and benchmark that variant too.

Differences:

- `decode` & `decode'`)

Further work

- `ByteString`.
- `TokenStream` which rules out invalid streams. I think it's possible to have an encoding such that, for a `TokenStream` without `TkError` (which can be enforced with a GADT parameter, but I don't see a need to do so), one could write a total `TokenStream -> Value` function. This would require `GADTs`, `DataKinds` and `TypeFamilies`. This encoding might be useful for hand-written "fancy" `TokenStream` consumers.
- `TokenStream` (the list version). A first iteration could be done using `parsec`. Probably, for a little more performance, one would need a specialized version at the end.
  - tag-encoding of sums (one would need to retain the pointer to the point where object tokens started). Similarly, monadic parsers are problematic
  - record's unordered nature is a problem too, but not impossible

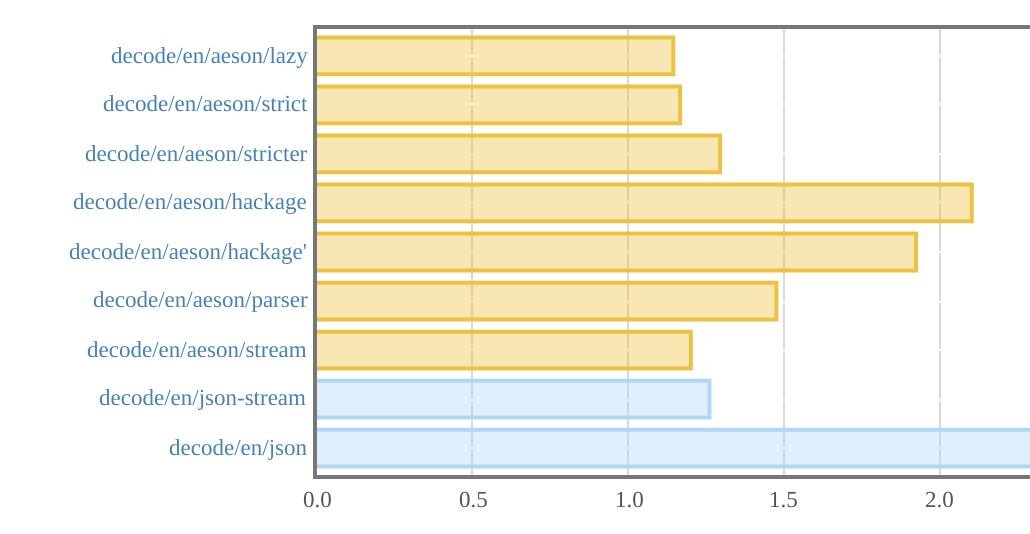

- `Map`!)
- `decode` and `decode'` separating might not be needed!)

Benchmarks

my machine is surprisingly noisy right now:

Legend (numbers in parentheses indicate which benches are virtually the same)

- `decode` using stream (1)
- `decode'` using stream
- `decodeStrict` using attoparsec (3)
- `decode` using attoparsec from aeson `1.2.1.0` (cffi: false) (2)
- `decodeStrict` as previous (3)
- `decodeWith` from `Parser` (2)
- `decodeWith` from `Stream` (1)
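To make the list-based token stream from the notes more concrete, here is a minimal sketch of a `[Token]` consumer. It is an illustration under assumptions, not the code in this PR: the constructor names other than `TkError` are hypothetical, `JValue` stands in for aeson's `Value`, and `Double`/`String` replace `Scientific`/`Text` so the block needs only `base`. As the notes suggest, there are no tokens for commas or colons; a `TkKey` token is enough to separate object fields.

```haskell
data Token
  = TkNull
  | TkBool Bool
  | TkNumber Double
  | TkString String
  | TkArrayOpen
  | TkArrayEnd
  | TkObjectOpen
  | TkKey String
  | TkObjectEnd
  | TkError String        -- the tokenizer reports failure in-band
  deriving (Eq, Show)

data JValue
  = JNull
  | JBool Bool
  | JNumber Double
  | JString String
  | JArray [JValue]
  | JObject [(String, JValue)]
  deriving (Eq, Show)

-- Consume the tokens of one value and return the unconsumed rest.
value :: [Token] -> Either String (JValue, [Token])
value ts = case ts of
  TkNull : r       -> Right (JNull, r)
  TkBool b : r     -> Right (JBool b, r)
  TkNumber n : r   -> Right (JNumber n, r)
  TkString s : r   -> Right (JString s, r)
  TkArrayOpen : r  -> do
    (vs, r') <- items r
    Right (JArray vs, r')
  TkObjectOpen : r -> do
    (kvs, r') <- fields r
    Right (JObject kvs, r')
  TkError e : _    -> Left e
  t : _            -> Left ("unexpected token: " ++ show t)
  []               -> Left "unexpected end of stream"

-- Array elements up to and including the closing token.
items :: [Token] -> Either String ([JValue], [Token])
items (TkArrayEnd : r) = Right ([], r)
items ts = do
  (v, r)   <- value ts
  (vs, r') <- items r
  Right (v : vs, r')

-- Object fields up to and including the closing token.
fields :: [Token] -> Either String ([(String, JValue)], [Token])
fields (TkObjectEnd : r) = Right ([], r)
fields (TkKey k : r) = do
  (v, r')    <- value r
  (kvs, r'') <- fields r'
  Right ((k, v) : kvs, r'')
fields (TkError e : _) = Left e
fields (t : _)         = Left ("expected key or end of object, got: " ++ show t)
fields []              = Left "unexpected end of stream"

-- A whole document: exactly one value and nothing after it.
toValue :: [Token] -> Either String JValue
toValue ts = do
  (v, rest) <- value ts
  case rest of
    []            -> Right v
    TkError e : _ -> Left e
    t : _         -> Left ("trailing token: " ++ show t)

-- Tokens for [1, {"a": true}].
example :: [Token]
example =
  [ TkArrayOpen, TkNumber 1
  , TkObjectOpen, TkKey "a", TkBool True, TkObjectEnd
  , TkArrayEnd ]
```

Because a plain list can hold any sequence of tokens, this consumer has to return `Either` and handle malformed nesting at runtime. That is the motivation for the "rules out invalid streams" encoding listed under further work.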