This repository contains the implementation of Open-Vocabulary Instance Segmentation via Robust Cross-Modal Pseudo-Labeling.

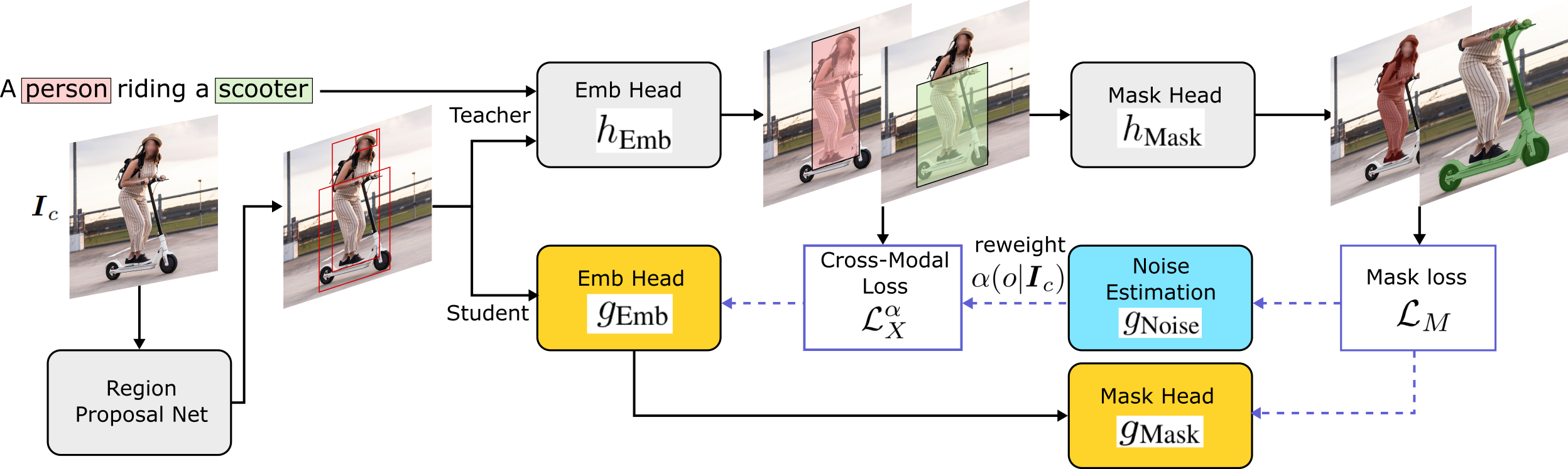

In this work, we address open-vocabulary instance segmentation, which learns to segment novel objects without any mask annotations for them during training, by generating pseudo masks from captioned images.

Our code is based on OVR, which is in turn built on maskrcnn-benchmark. To set up the code, please follow the instructions in INSTALL.md.

To download the datasets, please follow the instructions below.

For more information on how the data directory is structured, please refer to maskrcnn_benchmark/config/paths_catalog.py.
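As a rough picture of what that catalog does, the sketch below maps dataset names to an image folder and an annotation file under ./datasets. It is a simplified, hypothetical stand-in modeled loosely on maskrcnn-benchmark's DatasetCatalog: the entry names and annotation file names are made up for illustration, so check paths_catalog.py for the names the configs actually reference.

```python
import os
from typing import Dict

# Hypothetical, simplified stand-in for DatasetCatalog in
# maskrcnn_benchmark/config/paths_catalog.py. Entry names and annotation
# file names are illustrative only.
DATA_DIR = "./datasets"

DATASETS: Dict[str, Dict[str, str]] = {
    "example_coco_train_base": {
        "img_dir": "coco/train2017",
        "ann_file": "coco/annotations/example_train2017_base.json",
    },
    "example_coco_val_all": {
        "img_dir": "coco/val2017",
        "ann_file": "coco/annotations/example_val2017_all.json",
    },
}


def get(name: str, data_dir: str = DATA_DIR) -> Dict[str, str]:
    """Resolve a registered dataset name to its image folder and annotation file."""
    if name not in DATASETS:
        raise KeyError(f"Dataset not available: {name}")
    attrs = DATASETS[name]
    return {
        "root": os.path.join(data_dir, attrs["img_dir"]),
        "ann_file": os.path.join(data_dir, attrs["ann_file"]),
    }
```

With this layout, get("example_coco_train_base")["root"] resolves to ./datasets/coco/train2017, so moving the data only requires changing DATA_DIR.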

MS-COCO:

- Please download the MS-COCO 2017 dataset into the ./datasets/coco/ folder from its official website: https://cocodataset.org/#download.
- Following prior work, partition the data into base and target classes:

python ./preprocess/coco/construct_coco_json.py
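The partition itself is done by construct_coco_json.py. Conceptually, splitting by classes routes each category and its annotations to either the base or the target subset, as in this minimal sketch on a COCO-format dict. The function name split_by_classes and the choice to keep the full image list in both subsets are assumptions for illustration, not a description of what the script does.

```python
from typing import Dict, Set, Tuple


def split_by_classes(coco: Dict, target_names: Set[str]) -> Tuple[Dict, Dict]:
    """Split a COCO-format dict into (base, target) subsets by category name.

    Simplifying assumption: both subsets keep the full image list; only
    categories and annotations are divided.
    """
    target_ids = {c["id"] for c in coco["categories"] if c["name"] in target_names}

    def subset(is_target: bool) -> Dict:
        cats = [c for c in coco["categories"] if (c["id"] in target_ids) == is_target]
        anns = [a for a in coco["annotations"]
                if (a["category_id"] in target_ids) == is_target]
        # Shallow copy: other top-level keys (e.g. "images") are shared.
        return {**coco, "categories": cats, "annotations": anns}

    return subset(False), subset(True)
```

For example, with categories "person" and "dog" and target_names={"dog"}, the base subset keeps only "person" and its annotations, while the target subset keeps only "dog".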

Open Images & Conceptual Captions:

- Please download the Conceptual Captions and Open Images datasets from:

- Please extract Conceptual Captions into the ./datasets/conceptual/ folder and Open Images into the ./datasets/openimages/ folder.
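Before preprocessing, a quick check like the following can confirm that the dataset folders named above exist. It assumes it is run from the repository root; missing_dirs is a hypothetical helper, not part of the codebase.

```python
import os
from typing import Iterable, List

# Dataset folders named in the instructions above; adjust if your layout differs.
EXPECTED_DIRS = (
    "datasets/coco",
    "datasets/conceptual",
    "datasets/openimages",
)


def missing_dirs(repo_root: str = ".", expected: Iterable[str] = EXPECTED_DIRS) -> List[str]:
    """Return the expected dataset folders that do not exist under repo_root."""
    return [d for d in expected if not os.path.isdir(os.path.join(repo_root, d))]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing dataset folders:", ", ".join(missing))
    else:
        print("All dataset folders found.")
```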

- Convert the Open Images annotations to COCO format (JSON):

cd ./preprocess/openimages/openimages2coco

python convert_annotations.py -p ../../../datasets/openimages/ --version challenge_2019 --task mask --subsets train

python convert_annotations.py -p ../../../datasets/openimages/ --version challenge_2019 --task mask --subsets val
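convert_annotations.py performs this conversion for you. To illustrate one piece of it, the sketch below converts an Open Images box, whose XMin/XMax/YMin/YMax coordinates are normalized to [0, 1], into the absolute-pixel [x, y, width, height] box used by COCO. The helper name is hypothetical, and mask conversion is not covered.

```python
from typing import List


def openimages_box_to_coco(x_min: float, x_max: float, y_min: float, y_max: float,
                           img_width: int, img_height: int) -> List[float]:
    """Convert a normalized Open Images box to a COCO [x, y, w, h] pixel box."""
    x = x_min * img_width                 # left edge in pixels
    y = y_min * img_height                # top edge in pixels
    w = (x_max - x_min) * img_width       # box width in pixels
    h = (y_max - y_min) * img_height      # box height in pixels
    return [x, y, w, h]
```

For example, openimages_box_to_coco(0.25, 0.75, 0.5, 1.0, 200, 100) returns [50.0, 50.0, 100.0, 50.0].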

- Return to the repository root, then partition all classes into base and target classes:

python ./preprocess/openimages/construct_openimages_json.py
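Since target classes must not receive mask supervision during training, it can be worth sanity-checking a generated base-class split. The sketch below flags annotations whose category is not a base class. It assumes the split is a COCO-format JSON already loaded with json.load; check_base_split is a hypothetical helper, and the output file names of the preprocessing scripts are not assumed here.

```python
from typing import Dict, List, Set


def check_base_split(base_json: Dict, target_names: Set[str]) -> List[int]:
    """Return ids of annotations that should not appear in a base-class split.

    An annotation is flagged if its category_id is not a base category, i.e.
    it is missing from the split's category list or names a target class.
    """
    base_ids = {c["id"] for c in base_json["categories"] if c["name"] not in target_names}
    return [a["id"] for a in base_json["annotations"] if a["category_id"] not in base_ids]
```

An empty list means no target-class annotation leaked into the base split.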

Coming Soon!

To reproduce the main experiments in the paper, we provide scripts below to train the teacher and student models on both MS-COCO and Open Images & Conceptual Captions. Please note that the teacher must be trained first, since it produces the pseudo labels/masks used to train the student models.

MS-COCO:

- Caption pretraining:

python -m torch.distributed.launch --nproc_per_node=8 tools/train_net.py --config-file configs/coco_cap_det/mmss.yaml --skip-test OUTPUT_DIR ./model_weights/model_pretrained.pth

- Teacher training:

python -m torch.distributed.launch --nproc_per_node=8 tools/train_net.py --config-file configs/coco_cap_det/zeroshot_mask.yaml OUTPUT_DIR ./checkpoint/mscoco_teacher/ MODEL.WEIGHT ./model_weights/model_pretrained.pth

- Student training:

python -m torch.distributed.launch --nproc_per_node=8 tools/train_net.py --config-file configs/coco_cap_det/student_teacher_mask_rcnn_uncertainty.yaml OUTPUT_DIR ./checkpoint/mscoco_student/ MODEL.WEIGHT ./checkpoint/mscoco_teacher/model_final.pth

- Evaluation:

- To quickly evaluate performance, we provide pretrained teacher and student models (see Pretrained Models). Please download them into the ./pretrained_model/ folder and run the following script:


python -m torch.distributed.launch --nproc_per_node=8 tools/test_net.py --config-file configs/coco_cap_det/student_teacher_mask_rcnn_uncertainty.yaml OUTPUT_DIR ./results/mscoco_student MODEL.WEIGHT ./pretrained_model/coco_student/model_final.pth
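Because each MS-COCO training stage consumes the checkpoint written by the previous one, the training commands above can be chained in order, stopping if a required checkpoint is missing. The sketch below does this with subprocess. It reuses the commands verbatim, assumes 8 GPUs and that it is launched from the repository root, and defaults to a dry run that only returns the parsed command lines; run_pipeline is a hypothetical helper.

```python
import os
import shlex
import subprocess
from typing import List, Optional, Tuple

# Hypothetical launcher: the commands are copied from the MS-COCO steps above.
LAUNCH = "python -m torch.distributed.launch --nproc_per_node=8 tools/train_net.py"

# (stage name, command, checkpoint that must exist before the stage runs)
STAGES: List[Tuple[str, str, Optional[str]]] = [
    ("caption pretraining",
     f"{LAUNCH} --config-file configs/coco_cap_det/mmss.yaml --skip-test "
     "OUTPUT_DIR ./model_weights/model_pretrained.pth",
     None),
    ("teacher training",
     f"{LAUNCH} --config-file configs/coco_cap_det/zeroshot_mask.yaml "
     "OUTPUT_DIR ./checkpoint/mscoco_teacher/ "
     "MODEL.WEIGHT ./model_weights/model_pretrained.pth",
     "./model_weights/model_pretrained.pth"),
    ("student training",
     f"{LAUNCH} --config-file configs/coco_cap_det/student_teacher_mask_rcnn_uncertainty.yaml "
     "OUTPUT_DIR ./checkpoint/mscoco_student/ "
     "MODEL.WEIGHT ./checkpoint/mscoco_teacher/model_final.pth",
     "./checkpoint/mscoco_teacher/model_final.pth"),
]


def run_pipeline(dry_run: bool = True) -> List[List[str]]:
    """Run the stages in order; with dry_run=True, only parse and return them."""
    commands = []
    for name, cmd, required in STAGES:
        argv = shlex.split(cmd)
        if not dry_run:
            # The teacher needs the pretrained weights and the student needs
            # the teacher checkpoint, so fail early if a stage was skipped.
            if required is not None and not os.path.exists(required):
                raise FileNotFoundError(f"{name} needs {required}; run the previous stage first")
            subprocess.run(argv, check=True)  # raises CalledProcessError on failure
        commands.append(argv)
    return commands


if __name__ == "__main__":
    for argv in run_pipeline(dry_run=True):
        print(" ".join(argv))
```

Calling run_pipeline(dry_run=False) actually launches the three stages one after another; keeping the dry run as the default makes it easy to inspect the commands first.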

Open Images & Conceptual Captions:

- Caption pretraining:

Coming Soon!

- Teacher training:

Coming Soon!

- Student training:

Coming Soon!

- Evaluation:

Coming Soon!

Pretrained Models:

| Dataset | Teacher | Student |
|---|---|---|
| MS-COCO | model | model |
| Conceptual Captions + Open Images | model | model |

If this code is helpful for your research, we would appreciate it if you cite our work:

@article{Huynh:CVPR22,

author = {D.~Huynh and J.~Kuen and Z.~Lin and J.~Gu and E.~Elhamifar},

title = {Open-Vocabulary Instance Segmentation via Robust Cross-Modal Pseudo-Labeling},

journal = {{IEEE} Conference on Computer Vision and Pattern Recognition},

year = {2022}}