| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,4 @@ | ||

| # Sphinx build info version 1 | ||

| # This file hashes the configuration used when building these files. When it is not found, a full rebuild will be done. | ||

| config: 3b8ffe9dcb146bebeb7124d107766ef8 | ||

| tags: 645f666f9bcd5a90fca523b33c5a78b7 |

Large diffs are not rendered by default.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,102 @@ | ||

| # Contributing to Hezar | ||

| Welcome to Hezar! We greatly appreciate your interest in contributing to this project and helping us make it even more | ||

| valuable to the Persian community. Whether you're a developer, researcher, or enthusiast, your contributions are | ||

| invaluable in helping us grow and improve Hezar. | ||

|

|

||

| Before you start contributing, please take a moment to review the following guidelines. | ||

|

|

||

| ## Code of Conduct | ||

|

|

||

| This project and its community adhere to | ||

| the [Contributor Code of Conduct](https://github.com/hezarai/hezar/blob/main/CODE_OF_CONDUCT.md). | ||

|

|

||

| ## How to Contribute | ||

|

|

||

| ### Reporting Bugs | ||

|

|

||

| If you come across a bug or unexpected behavior, please help us by reporting it. | ||

| Use the [GitHub Issue Tracker](https://github.com/hezarai/hezar/issues) to create a detailed bug report. | ||

| Include information such as: | ||

|

|

||

| - A clear and descriptive title. | ||

| - Steps to reproduce the bug. | ||

| - Expected behavior. | ||

| - Actual behavior. | ||

| - Your operating system and Python version. | ||
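The environment details asked for above can be collected with the standard library. This is only a minimal sketch: the function name `environment_report` is made up for illustration and is not part of Hezar.

```python
import platform


def environment_report() -> str:
    """Summarize the OS and Python version for pasting into a bug report."""
    return "\n".join([
        f"OS: {platform.system()} {platform.release()} ({platform.machine()})",
        f"Python: {platform.python_version()} ({platform.python_implementation()})",
    ])


print(environment_report())
```

Pasting the printed lines at the end of the issue is usually enough for maintainers to reproduce the setup.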

|

|

||

| ### Adding features | ||

|

|

||

| Have a great idea for a new feature or improvement? We'd love to hear it. You can open an issue and add your suggestion | ||

| with a clear description and further suggestions on how it can be implemented. If you can already implement it | ||

| yourself, just follow the instructions on how to send a PR. | ||

|

|

||

| ### Adding/Improving documents | ||

|

|

||

| Have a suggestion to enhance our documentation or want to contribute entirely new sections? We welcome your input!<br> | ||

| Here's how you can get involved:<br> | ||

| The docs website is deployed at [https://hezarai.github.io/hezar](https://hezarai.github.io/hezar) and the source for the | ||

| docs is located in the [docs](https://github.com/hezarai/hezar/tree/main/docs) folder in the root of the repo. Feel | ||

| free to apply your changes or add new docs to this section. Note that docs are written in Markdown format. In case you have | ||

| added new files to this section, you must include them in the `index.md` file in the same folder. For example, if you've | ||

| added the file `new_doc.md` to the `get_started` folder, you have to modify `get_started/index.md` and put your file | ||

| name there. | ||
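A quick way to catch a forgotten `index.md` entry is to compare the files in a folder against the lines of its index. The helper below is a hypothetical sketch, not part of the Hezar tooling. It assumes each entry is written on its own line as a bare file name with the `.md` extension, as in `get_started/index.md`.

```python
from pathlib import Path


def unlisted_docs(folder: str) -> list[str]:
    """Return Markdown files in `folder` that its `index.md` does not list."""
    root = Path(folder)
    index_lines = (root / "index.md").read_text(encoding="utf-8").splitlines()
    # Assumption: each toctree entry sits on its own line as a bare file name.
    listed = {line.strip() for line in index_lines}
    return sorted(
        path.name
        for path in root.glob("*.md")
        if path.name != "index.md" and path.name not in listed
    )
```

For example, `unlisted_docs("docs/get_started")` would return `["new_doc.md"]` if that file exists but was never added to the index.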

|

|

||

| ### Commit guidelines | ||

|

|

||

| #### Functional best practices | ||

|

|

||

| - Ensure only one "logical change" per commit for efficient review and flaw identification. | ||

| - Smaller code changes facilitate quicker reviews and easier troubleshooting using Git's bisect capability. | ||

| - Avoid mixing whitespace changes with functional code changes. | ||

| - Avoid mixing two unrelated functional changes. | ||

| - Refrain from sending large new features in a single giant commit. | ||

|

|

||

| #### Styling best practices | ||

|

|

||

| - Use imperative mood in the subject (e.g., "Add support for ..." not "Adding support for ..." or "Added support for ..."). | ||

| - Keep the subject line short and concise, preferably less than 50 characters. | ||

| - Capitalize the subject line and do not end it with a period. | ||

| - Wrap body lines at 72 characters. | ||

| - Use the body to explain what and why a change was made. | ||

| - Do not explain the "how" in the commit message; reserve it for documentation or code. | ||

| - For commits referencing an issue or pull request, write the proper commit subject followed by the reference in | ||

| parentheses (e.g., "Add NFKC normalizer (#9999)"). | ||

| - Reference codes & paths in back quotes (e.g., `variable`, `method()`, `Class()`, `file.py`). | ||

| - Preferably use the following [gitmoji](https://gitmoji.dev/) compatible codes at the beginning of your commit message: | ||

|

|

||

| | Emoji Code | Emoji | Description | Example Commit | | ||

| |----------------------|-------|----------------------------------------------|----------------------------------------------------------------| | ||

| | `:bug:` | 🐛 | Fix a bug or issue | `:bug: Fix issue with image loading in DataLoader` | | ||

| | `:sparkles:` | ✨ | Add feature or improvements | `:sparkles: Introduce support for text summarization` | | ||

| | `:recycle:` | ♻️ | Refactor code (backward compatible refactor) | `:recycle: Refactor data preprocessing utilities` | | ||

| | `:memo:` | 📝 | Add or change docs | `:memo: Update documentation for text classification` | | ||

| | `:pencil2:` | ✏️ | Minor change or improvement | `:pencil2: Improve logging in Trainer` | | ||

| | `:fire:` | 🔥 | Remove code or file | `:fire: Remove outdated utility function` | | ||

| | `:boom:` | 💥 | Introduce breaking changes | `:boom: Update API, requires modification in existing scripts` | | ||

| | `:test_tube:` | 🧪 | Test-related changes | `:test_tube: Add unit tests for data loading functions` | | ||

| | `:bookmark:` | 🔖 | Version release | `:bookmark: Release v1.0.0` | | ||

| | `:adhesive_bandage:` | 🩹 | Non-critical fix | `:adhesive_bandage: Fix minor issue in BPE tokenizer` | | ||
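Some of the styling rules above are mechanical enough to check automatically. The following is a rough sketch of such a check, not an official Hezar hook. It assumes the 50-character limit applies to the subject after the emoji code is stripped, and it does not attempt to check imperative mood.

```python
import re

MAX_SUBJECT_LENGTH = 50  # "preferably less than 50 characters"
EMOJI_PREFIX = re.compile(r"^:[a-z0-9_+-]+:\s*")  # e.g. ":bug: "


def check_subject(subject: str) -> list[str]:
    """Return the style problems found in a commit subject line."""
    problems = []
    # Assumption: the emoji code does not count toward the length limit.
    text = EMOJI_PREFIX.sub("", subject.strip())
    if len(text) >= MAX_SUBJECT_LENGTH:
        problems.append(f"subject has {len(text)} characters, aim for under {MAX_SUBJECT_LENGTH}")
    if not text or not text[0].isupper():
        problems.append("subject should start with a capital letter")
    if text.endswith("."):
        problems.append("subject should not end with a period")
    return problems


print(check_subject(":bug: Fix issue with image loading in DataLoader"))  # []
```

An empty list means none of the checked rules were violated. Since the length limit is only a preference, a long subject is better treated as a warning than as a hard failure.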

|

|

||

| ## Sending a PR | ||

|

|

||

| In order to apply any change to the repo, you have to follow these steps: | ||

|

|

||

| 1. Fork the Hezar repository. | ||

| 2. Create a new branch for your feature, bug fix, etc. | ||

| 3. Make your changes. | ||

| 4. Update the documentation to reflect your changes. | ||

| 5. Ensure your code adheres to the [Google Python Style Guide](https://google.github.io/styleguide/pyguide.html). | ||

| 6. Format the code using `ruff` (`ruff check --fix .`) | ||

| 7. Write tests to ensure the functionality if needed. | ||

| 8. Run tests and make sure all of them pass. (Skip this step if your changes do not involve code.) | ||

| 9. Open a pull request from your fork and the PR template will be automatically loaded to help you do the rest. | ||

| 10. Be responsive to feedback and comments during the review process. | ||

| 11. Thanks for contributing to the Hezar project.😉❤️ | ||

|

|

||

| ## License | ||

|

|

||

| By contributing to Hezar, you agree that your contributions will be licensed under | ||

| the [Apache 2.0 License](https://github.com/hezarai/hezar/blob/main/LICENSE). | ||

|

|

||

| We look forward to your contributions and appreciate your efforts in making Hezar a powerful AI tool for the Persian | ||

| community! |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,8 @@ | ||

| # Get Started | ||

| ```{toctree} | ||

| :maxdepth: 1 | ||

|

|

||

| overview.md | ||

| installation.md | ||

| quick_tour.md | ||

| ``` |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,41 @@ | ||

| # Installation | ||

|

|

||

| ## Install from PyPI | ||

| Installing Hezar is as easy as any other Python library! Most of the requirements are cross-platform and installing | ||

| them on any machine is a piece of cake! | ||

|

|

||

| ``` | ||

| pip install hezar | ||

| ``` | ||

| ### Installation variations | ||

| Hezar is packed with a lot of tools that depend on other packages. Most of the | ||

| time you might not want everything to be installed, so Hezar provides multiple installation | ||

| variations that keep the installation light and fast for general use. | ||

|

|

||

| You can install optional dependencies for each mode like so: | ||

| ``` | ||

| pip install hezar[nlp] # For natural language processing | ||

| pip install hezar[vision] # For computer vision and image processing | ||

| pip install hezar[audio] # For audio and speech processing | ||

| pip install hezar[embeddings] # For word embeddings | ||

| ``` | ||

| Or you can also install everything using: | ||

| ``` | ||

| pip install hezar[all] | ||

| ``` | ||

| ## Install from source | ||

| Also, you can install the dev version of the library using the source: | ||

| ``` | ||

| pip install git+https://github.com/hezarai/hezar.git | ||

| ``` | ||

|

|

||

| ## Test installation | ||

| From a Python console or in CLI just import `hezar` and check the version: | ||

| ```python | ||

| import hezar | ||

|

|

||

| print(hezar.__version__) | ||

| ``` | ||

| ``` | ||

| 0.23.1 | ||

| ``` |
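If importing the library is slow or undesirable (for example in a setup script), the installed version can also be read from the package metadata. This is a small sketch using the standard library's `importlib.metadata`. The helper name `installed_version` is made up for illustration, and the distribution name `hezar` is taken from the `pip install hezar` command above.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str = "hezar"):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Prints a version string if hezar is installed, otherwise None.
print(installed_version())
```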

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,20 @@ | ||

| # Overview | ||

|

|

||

| Welcome to Hezar, a library that makes state-of-the-art machine learning for the Persian language as easy as | ||

| possible, built by the Persian community! | ||

|

|

||

| In Hezar, the primary goal is to provide plug-and-play AI/ML utilities so that you don't need to know much about what's | ||

| going on under the hood. Hezar is not just a model library; it's packed with everything you need for an | ||

| ML pipeline, such as datasets, trainers, preprocessors, feature extractors, etc. | ||

|

|

||

| Hezar is a library that: | ||

| - brings together all the best works in AI for Persian | ||

| - makes using AI models as easy as a couple of lines of code | ||

| - seamlessly integrates with Hugging Face Hub for all of its models | ||

| - has a highly developer-friendly interface | ||

| - has a task-based model interface which is more convenient for general users. | ||

| - is packed with additional tools like word embeddings, tokenizers, feature extractors, etc. | ||

| - comes with a lot of supplementary ML tools for deployment, benchmarking, optimization, etc. | ||

| - and more! | ||

|

|

||

| To find out more, just take the [quick tour](quick_tour.md)! |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,214 @@ | ||

| # Quick Tour | ||

| ## Models | ||

| There's a bunch of ready-to-use trained models for different tasks on the Hub! | ||

|

|

||

| **🤗Hugging Face Hub Page**: [https://huggingface.co/hezarai](https://huggingface.co/hezarai) | ||

|

|

||

| Let's walk you through some examples! | ||

|

|

||

| - **Text Classification (sentiment analysis, categorization, etc)** | ||

| ```python | ||

| from hezar.models import Model | ||

|

|

||

| example = ["هزار، کتابخانهای کامل برای به کارگیری آسان هوش مصنوعی"] | ||

| model = Model.load("hezarai/bert-fa-sentiment-dksf") | ||

| outputs = model.predict(example) | ||

| print(outputs) | ||

| ``` | ||

| ``` | ||

| [[{'label': 'positive', 'score': 0.812910258769989}]] | ||

| ``` | ||
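The output shown above is a list with one entry per input text, and each entry is a list of `{'label', 'score'}` dictionaries. Assuming that structure holds for other inputs as well, the best label per input can be picked with plain Python. The helper name `top_labels` is only illustrative.

```python
def top_labels(outputs):
    """Pick the highest-scoring label for each input text."""
    return [
        max(candidates, key=lambda item: item["score"])["label"]
        for candidates in outputs
    ]


# The sample output from the example above
outputs = [[{"label": "positive", "score": 0.812910258769989}]]
print(top_labels(outputs))  # ['positive']
```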

| - **Sequence Labeling (POS, NER, etc.)** | ||

| ```python | ||

| from hezar.models import Model | ||

|

|

||

| pos_model = Model.load("hezarai/bert-fa-pos-lscp-500k") # Part-of-speech | ||

| ner_model = Model.load("hezarai/bert-fa-ner-arman") # Named entity recognition | ||

| inputs = ["شرکت هوش مصنوعی هزار"] | ||

| pos_outputs = pos_model.predict(inputs) | ||

| ner_outputs = ner_model.predict(inputs) | ||

| print(f"POS: {pos_outputs}") | ||

| print(f"NER: {ner_outputs}") | ||

| ``` | ||

| ``` | ||

| POS: [[{'token': 'شرکت', 'label': 'Ne'}, {'token': 'هوش', 'label': 'Ne'}, {'token': 'مصنوعی', 'label': 'AJe'}, {'token': 'هزار', 'label': 'NUM'}]] | ||

| NER: [[{'token': 'شرکت', 'label': 'B-org'}, {'token': 'هوش', 'label': 'I-org'}, {'token': 'مصنوعی', 'label': 'I-org'}, {'token': 'هزار', 'label': 'I-org'}]] | ||

| ``` | ||
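The NER labels above look like the common B-/I- tagging scheme, where `B-` starts an entity and `I-` continues it. Under that assumption, and further assuming that tokens outside any entity are labeled `O` (not shown in the output above), token-level predictions can be merged into whole entities. The helper `group_entities` is a hypothetical sketch, not a Hezar function.

```python
def group_entities(tagged_tokens):
    """Merge B-/I- tagged tokens of one sentence into (entity_type, text) pairs."""
    entities = []
    current_type, current_tokens = None, []

    def flush():
        # Store the entity collected so far, if any.
        if current_tokens:
            entities.append((current_type, " ".join(current_tokens)))

    for item in tagged_tokens:
        prefix, _, entity_type = item["label"].partition("-")
        if prefix == "I" and entity_type == current_type:
            current_tokens.append(item["token"])  # continue the open entity
        elif prefix in ("B", "I"):
            flush()  # a new entity starts here
            current_type, current_tokens = entity_type, [item["token"]]
        else:
            flush()  # "O" or any other label closes the open entity
            current_type, current_tokens = None, []
    flush()
    return entities


# First (and only) sentence of the NER output shown above
ner_sentence = [
    {"token": "شرکت", "label": "B-org"},
    {"token": "هوش", "label": "I-org"},
    {"token": "مصنوعی", "label": "I-org"},
    {"token": "هزار", "label": "I-org"},
]
print(group_entities(ner_sentence))
```

Since `predict` returns one list per input, the function would be applied to each element of `ner_outputs` separately.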

| - **Mask Filling** | ||

| ```python | ||

| from hezar.models import Model | ||

|

|

||

| model = Model.load("hezarai/roberta-fa-mask-filling") | ||

| inputs = ["سلام بچه ها حالتون <mask>"] | ||

| outputs = model.predict(inputs, top_k=1) | ||

| print(outputs) | ||

| ``` | ||

| ``` | ||

| [[{'token': 'چطوره', 'sequence': 'سلام بچه ها حالتون چطوره', 'token_id': 34505, 'score': 0.2230483442544937}]] | ||

| ``` | ||

| - **Speech Recognition** | ||

| ```python | ||

| from hezar.models import Model | ||

|

|

||

| model = Model.load("hezarai/whisper-small-fa") | ||

| transcripts = model.predict("examples/assets/speech_example.mp3") | ||

| print(transcripts) | ||

| ``` | ||

| ``` | ||

| [{'text': 'و این تنها محدود به محیط کار نیست'}] | ||

| ``` | ||

| - **Image to Text (OCR)** | ||

| ```python | ||

| from hezar.models import Model | ||

| # OCR with TrOCR | ||

| model = Model.load("hezarai/trocr-base-fa-v2") | ||

| texts = model.predict(["examples/assets/ocr_example.jpg"]) | ||

| print(f"TrOCR Output: {texts}") | ||

|

|

||

| # OCR with CRNN | ||

| model = Model.load("hezarai/crnn-fa-printed-96-long") | ||

| texts = model.predict("examples/assets/ocr_example.jpg") | ||

| print(f"CRNN Output: {texts}") | ||

| ``` | ||

| ``` | ||

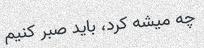

| TrOCR Output: [{'text': 'چه میشه کرد، باید صبر کنیم'}] | ||

| CRNN Output: [{'text': 'چه میشه کرد، باید صبر کنیم'}] | ||

| ``` | ||

|  | ||

|

|

||

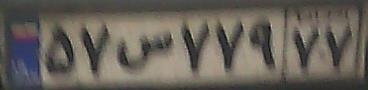

| - **Image to Text (License Plate Recognition)** | ||

| ```python | ||

| from hezar.models import Model | ||

|

|

||

| model = Model.load("hezarai/crnn-fa-64x256-license-plate-recognition") | ||

| plate_text = model.predict("assets/license_plate_ocr_example.jpg") | ||

| print(plate_text) # Persian text of mixed numbers and characters might not show correctly in the console | ||

| ``` | ||

| ``` | ||

| [{'text': '۵۷س۷۷۹۷۷'}] | ||

| ``` | ||
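Because mixed Persian digits and letters can be hard to read in a console, it may help to convert the digits to ASCII before logging or comparing plate texts. The sketch below does that with `str.translate`. It is plain Python and not a Hezar utility, and it does not change how the terminal renders right-to-left text.

```python
# Map Persian (U+06F0..U+06F9) and Arabic-Indic (U+0660..U+0669) digits to ASCII.
DIGIT_TABLE = {}
for i in range(10):
    DIGIT_TABLE[0x06F0 + i] = str(i)
    DIGIT_TABLE[0x0660 + i] = str(i)


def to_ascii_digits(text: str) -> str:
    """Replace Persian and Arabic-Indic digits with their ASCII equivalents."""
    return text.translate(DIGIT_TABLE)


plate = to_ascii_digits("۵۷س۷۷۹۷۷")
print(plate)
print(list(plate))  # characters in logical (stored) order
```

Printing the characters as a list shows them in the order they are stored, which sidesteps any reordering done by the console.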

|  | ||

|

|

||

| - **Image to Text (Image Captioning)** | ||

| ```python | ||

| from hezar.models import Model | ||

|

|

||

| model = Model.load("hezarai/vit-roberta-fa-image-captioning-flickr30k") | ||

| texts = model.predict("examples/assets/image_captioning_example.jpg") | ||

| print(texts) | ||

| ``` | ||

| ``` | ||

| [{'text': 'سگی با توپ تنیس در دهانش می دود.'}] | ||

| ``` | ||

|  | ||

|

|

||

| We constantly keep working on adding and training new models and this section will hopefully be expanding over time ;) | ||

| ## Word Embeddings | ||

| - **FastText** | ||

| ```python | ||

| from hezar.embeddings import Embedding | ||

|

|

||

| fasttext = Embedding.load("hezarai/fasttext-fa-300") | ||

| most_similar = fasttext.most_similar("هزار") | ||

| print(most_similar) | ||

| ``` | ||

| ``` | ||

| [{'score': 0.7579, 'word': 'میلیون'}, | ||

| {'score': 0.6943, 'word': '21هزار'}, | ||

| {'score': 0.6861, 'word': 'میلیارد'}, | ||

| {'score': 0.6825, 'word': '26هزار'}, | ||

| {'score': 0.6803, 'word': '٣هزار'}] | ||

| ``` | ||

| - **Word2Vec (Skip-gram)** | ||

| ```python | ||

| from hezar.embeddings import Embedding | ||

|

|

||

| word2vec = Embedding.load("hezarai/word2vec-skipgram-fa-wikipedia") | ||

| most_similar = word2vec.most_similar("هزار") | ||

| print(most_similar) | ||

| ``` | ||

| ``` | ||

| [{'score': 0.7885, 'word': 'چهارهزار'}, | ||

| {'score': 0.7788, 'word': '۱۰هزار'}, | ||

| {'score': 0.7727, 'word': 'دویست'}, | ||

| {'score': 0.7679, 'word': 'میلیون'}, | ||

| {'score': 0.7602, 'word': 'پانصد'}] | ||

| ``` | ||

| - **Word2Vec (CBOW)** | ||

| ```python | ||

| from hezar.embeddings import Embedding | ||

|

|

||

| word2vec = Embedding.load("hezarai/word2vec-cbow-fa-wikipedia") | ||

| most_similar = word2vec.most_similar("هزار") | ||

| print(most_similar) | ||

| ``` | ||

| ``` | ||

| [{'score': 0.7407, 'word': 'دویست'}, | ||

| {'score': 0.7400, 'word': 'میلیون'}, | ||

| {'score': 0.7326, 'word': 'صد'}, | ||

| {'score': 0.7276, 'word': 'پانصد'}, | ||

| {'score': 0.7011, 'word': 'سیصد'}] | ||

| ``` | ||

| For a full guide on the embeddings module, see the [embeddings tutorial](https://hezarai.github.io/hezar/tutorial/embeddings.html). | ||

| ## Datasets | ||

| You can load any of the datasets on the [Hub](https://huggingface.co/hezarai) like below: | ||

| ```python | ||

| from hezar.data import Dataset | ||

|

|

||

| sentiment_dataset = Dataset.load("hezarai/sentiment-dksf") # A TextClassificationDataset instance | ||

| lscp_dataset = Dataset.load("hezarai/lscp-pos-500k") # A SequenceLabelingDataset instance | ||

| xlsum_dataset = Dataset.load("hezarai/xlsum-fa") # A TextSummarizationDataset instance | ||

| alpr_ocr_dataset = Dataset.load("hezarai/persian-license-plate-v1") # An OCRDataset instance | ||

| ... | ||

| ``` | ||

| The returned dataset objects from `load()` are PyTorch Dataset wrappers for specific tasks and can be used by a data loader out-of-the-box! | ||

|

|

||

| You can also load Hezar's datasets using 🤗Datasets: | ||

| ```python | ||

| from datasets import load_dataset | ||

|

|

||

| dataset = load_dataset("hezarai/sentiment-dksf") | ||

| ``` | ||

| For a full guide on Hezar's datasets, see the [datasets tutorial](https://hezarai.github.io/hezar/tutorial/datasets.html). | ||

| ## Training | ||

| Hezar makes it super easy to train models using out-of-the-box models and datasets provided in the library. | ||

|

|

||

| ```python | ||

| from hezar.models import BertSequenceLabeling, BertSequenceLabelingConfig | ||

| from hezar.data import Dataset | ||

| from hezar.trainer import Trainer, TrainerConfig | ||

| from hezar.preprocessors import Preprocessor | ||

|

|

||

| base_model_path = "hezarai/bert-base-fa" | ||

| dataset_path = "hezarai/lscp-pos-500k" | ||

|

|

||

| train_dataset = Dataset.load(dataset_path, split="train", tokenizer_path=base_model_path) | ||

| eval_dataset = Dataset.load(dataset_path, split="test", tokenizer_path=base_model_path) | ||

|

|

||

| model = BertSequenceLabeling(BertSequenceLabelingConfig(id2label=train_dataset.config.id2label)) | ||

| preprocessor = Preprocessor.load(base_model_path) | ||

|

|

||

| train_config = TrainerConfig( | ||

|     output_dir="bert-fa-pos-lscp-500k", | ||

|     task="sequence_labeling", | ||

|     device="cuda", | ||

|     init_weights_from=base_model_path, | ||

|     batch_size=8, | ||

|     num_epochs=5, | ||

|     metrics=["seqeval"], | ||

| ) | ||

|

|

||

| trainer = Trainer( | ||

|     config=train_config, | ||

|     model=model, | ||

|     train_dataset=train_dataset, | ||

|     eval_dataset=eval_dataset, | ||

|     data_collator=train_dataset.data_collator, | ||

|     preprocessor=preprocessor, | ||

| ) | ||

| trainer.train() | ||

|

|

||

| trainer.push_to_hub("bert-fa-pos-lscp-500k") # push model, config, preprocessor, trainer files and configs | ||

| ``` | ||
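The training example above hard-codes `device="cuda"`, which will not work on a machine without a GPU. One possible way to choose the device at runtime is sketched below. It assumes that `TrainerConfig` also accepts `"cpu"` as a device value, which this page does not state, and the helper name `pick_device` is made up for illustration.

```python
def pick_device() -> str:
    """Return "cuda" when a CUDA GPU is usable, otherwise "cpu"."""
    try:
        import torch  # imported lazily so the helper also works without PyTorch
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


print(pick_device())
```

The result could then be passed as `device=pick_device()` when building the `TrainerConfig`.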

|

|

||

| Want to go deeper? Check out the [guides](../guide/index.md). |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,2 @@ | ||

| # Advanced Training | ||

| Docs coming soon, stay tuned! |