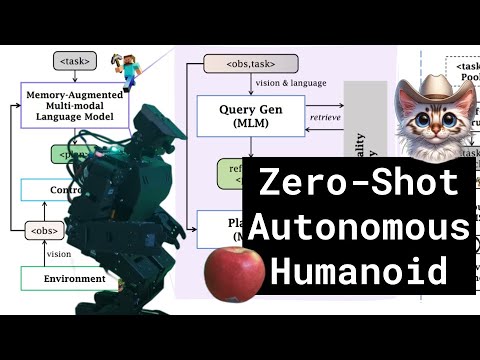

o stands for Zero-Shot Autonomous Robots.

This repo uses model APIs to create a Zero-Shot Autonomous Robot. Individual robot behaviors are wrapped in asynchronous nodes (Python), which are launched via scripts (Bash). It's kind of like a simpler, more minimalist ROS. Four main types of models are used:
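As a rough illustration of the node idea, here is a minimal sketch of two asynchronous nodes running side by side and passing text through a shared queue. The node names (`listen_node`, `speak_node`) and the queue-based hand-off are assumptions for illustration only; the repo launches its nodes from Bash scripts, possibly as separate processes, whereas this sketch runs both in one Python process for simplicity.

```python
import asyncio
from typing import List

# Hypothetical node names; the repo's real nodes (in nodes/) may differ.
async def listen_node(queue: asyncio.Queue, utterances: List[str]) -> None:
    """Pretend STT node: pushes 'transcribed' utterances onto a shared queue."""
    for text in utterances:
        await queue.put(text)
        await asyncio.sleep(0)  # yield control so other nodes can run
    await queue.put(None)  # sentinel: no more input

async def speak_node(queue: asyncio.Queue, log: List[str]) -> None:
    """Pretend TTS node: consumes text and 'speaks' it (here, just records it)."""
    while (text := await queue.get()) is not None:
        log.append(f"robot says: {text}")

async def main() -> List[str]:
    queue: asyncio.Queue = asyncio.Queue()
    log: List[str] = []
    # Run both nodes concurrently, loosely like launching them side by side from a script.
    await asyncio.gather(listen_node(queue, ["hello", "wave"]), speak_node(queue, log))
    return log

if __name__ == "__main__":
    print(asyncio.run(main()))
```

A queue keeps the two nodes decoupled: neither needs to know how the other is implemented, only what kind of message flows between them.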

- LLM (Large Language Model): a `text2text` model used for planning, reasoning, dialogue, and more!
- VLM (Vision Language Model): an `image2text` model used for scene understanding, object detection, and more!
- TTS (Text-to-Speech): a `text2audio` model used for speech synthesis so the robot can talk.
- STT (Speech-to-Text): an `audio2text` model used for speech recognition so the robot can listen.

To get started, follow the setup guide.

The models module contains code for different model APIs. For example, `models/rep.py` is for the Replicate API (open-source models), and `models/gpt.py` is for the OpenAI API. More info on models.
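One way to keep nodes independent of any particular provider is a small registry that maps a backend name to a function. This is a minimal sketch under stated assumptions: `gpt_text2text`, `rep_text2text`, `get_llm`, and the `O_LLM_API` environment variable are hypothetical, and the stub functions only echo their input instead of calling the OpenAI or Replicate APIs.

```python
import os
from typing import Callable, Dict, Optional

# Hypothetical stand-ins for wrappers like those in models/gpt.py and models/rep.py;
# real versions would call the OpenAI or Replicate APIs instead of echoing.
def gpt_text2text(prompt: str) -> str:
    return f"[gpt] {prompt}"

def rep_text2text(prompt: str) -> str:
    return f"[rep] {prompt}"

# Map a backend name to its LLM function so nodes never import a provider directly.
LLM_BACKENDS: Dict[str, Callable[[str], str]] = {
    "gpt": gpt_text2text,
    "rep": rep_text2text,
}

def get_llm(backend: Optional[str] = None) -> Callable[[str], str]:
    """Pick an LLM backend by name, falling back to the hypothetical O_LLM_API env var."""
    name = backend or os.environ.get("O_LLM_API", "gpt")
    try:
        return LLM_BACKENDS[name]
    except KeyError:
        raise ValueError(f"unknown backend {name!r}; choose from {sorted(LLM_BACKENDS)}") from None

llm = get_llm("rep")
print(llm("plan a route to the door"))
```

Swapping providers then becomes a one-line change (or an environment variable) rather than an edit to every node.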

The robots module contains code for different robots. For example, `robots/nex.py` is for the HiWonder AiNex Humanoid. More info on robots.
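A common way to support several robots is a shared interface that each robot module implements. The sketch below assumes such a design; `Robot`, `FakeHumanoid`, `move`, and `actions` are hypothetical names and do not describe the actual API of `robots/nex.py`. A software-only robot like this can be handy for testing nodes without hardware.

```python
from abc import ABC, abstractmethod
from typing import List

class Robot(ABC):
    """Hypothetical interface a robot module might expose to nodes."""

    @abstractmethod
    def move(self, action: str) -> str: ...

    @abstractmethod
    def actions(self) -> List[str]: ...

class FakeHumanoid(Robot):
    """Software-only stand-in for real hardware; records the actions it is given."""

    def __init__(self) -> None:
        self.history: List[str] = []

    def actions(self) -> List[str]:
        return ["forward", "left", "right", "wave"]

    def move(self, action: str) -> str:
        if action not in self.actions():
            raise ValueError(f"unsupported action: {action!r}")
        self.history.append(action)
        return f"executed {action}"

robot = FakeHumanoid()
print(robot.move("wave"))
```

Because nodes only depend on `Robot`, the same planning node could in principle drive either the fake robot or a real one.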

The nodes module contains code for different nodes. For example, `nodes/look.py` contains the loop used for vision with a Vision Language Model. More info on nodes.
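A vision loop of this kind usually repeats three steps: capture a frame, ask the VLM to describe it, and store or publish the result. Here is a minimal sketch of that pattern; `capture_image`, `describe_image`, and `look_loop` are hypothetical stand-ins (no real camera or VLM API is called), and the actual `nodes/look.py` may be structured differently.

```python
import asyncio
from typing import Callable, List

# Hypothetical stand-ins: a real node would grab a camera frame and call a VLM API.
def capture_image(step: int) -> bytes:
    return f"frame-{step}".encode()

def describe_image(image: bytes) -> str:
    return f"I see {image.decode()}"

async def look_loop(
    capture: Callable[[int], bytes],
    vlm: Callable[[bytes], str],
    steps: int = 3,
    period_s: float = 0.01,
) -> List[str]:
    """Repeatedly capture an image, ask the VLM to describe it, and keep the results."""
    descriptions: List[str] = []
    for step in range(steps):
        image = capture(step)
        # Run the (blocking) model call in a worker thread so other nodes keep running.
        text = await asyncio.to_thread(vlm, image)
        descriptions.append(text)
        await asyncio.sleep(period_s)  # pace the loop instead of hammering the API
    return descriptions

print(asyncio.run(look_loop(capture_image, describe_image)))
```

Offloading the model call with `asyncio.to_thread` (Python 3.9+) matters when the VLM client is a blocking HTTP call: without it, one slow request would stall every other node sharing the event loop.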

The params module contains code for different parameters. For example, `params/default.sh` loads environment variables (params) set to their default values. More info on params.
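If a params script exports its variables, any Python node started from the same shell (for example after sourcing the script) inherits them and can read them with `os.environ`. The sketch below assumes that setup; the variable names and defaults in `DEFAULTS` are hypothetical and are not the values defined in `params/default.sh`.

```python
import os
from typing import Any, Dict, Mapping

# Hypothetical parameter names and defaults; the real ones live in params/default.sh.
DEFAULTS = {
    "O_ROBOT": "nex",
    "O_LLM_API": "gpt",
    "O_LOOK_PERIOD_S": "2.0",
}

def load_params(env: Mapping[str, str] = os.environ) -> Dict[str, Any]:
    """Read each parameter from the environment, falling back to its default."""
    params: Dict[str, Any] = {name: env.get(name, default) for name, default in DEFAULTS.items()}
    # Environment variables are always strings, so convert numeric params explicitly.
    params["O_LOOK_PERIOD_S"] = float(params["O_LOOK_PERIOD_S"])
    return params

print(load_params({"O_LLM_API": "rep"}))
```

Passing the environment in as a mapping keeps the function easy to test; in a running node you would simply call `load_params()`.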

If you are interested in contributing, please read the contributing guide.

@misc{zero-shot-robot-2023,
  title={Zero-Shot Autonomous Robots},
  author={Hugo Ponte},
  year={2023},
  url={https://github.com/hu-po/o}
}