[docs] Doc sprint #3099

The docs for this PR live here. All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update.

Nice! 🤩

cc @SunMarc for the inference related docs

| # Utilities for Fully Sharded Data Parallelism | ||

| # Fully Sharded Data Parallel utilities | ||

| ## enable_fsdp_ram_efficient_loading |

Wish we didn't have to do this everywhere but I suppose can't be helped :/

Thanks for this sprint, @stevhliu! The big model inference part is a lot nicer now! I left a few suggestions.

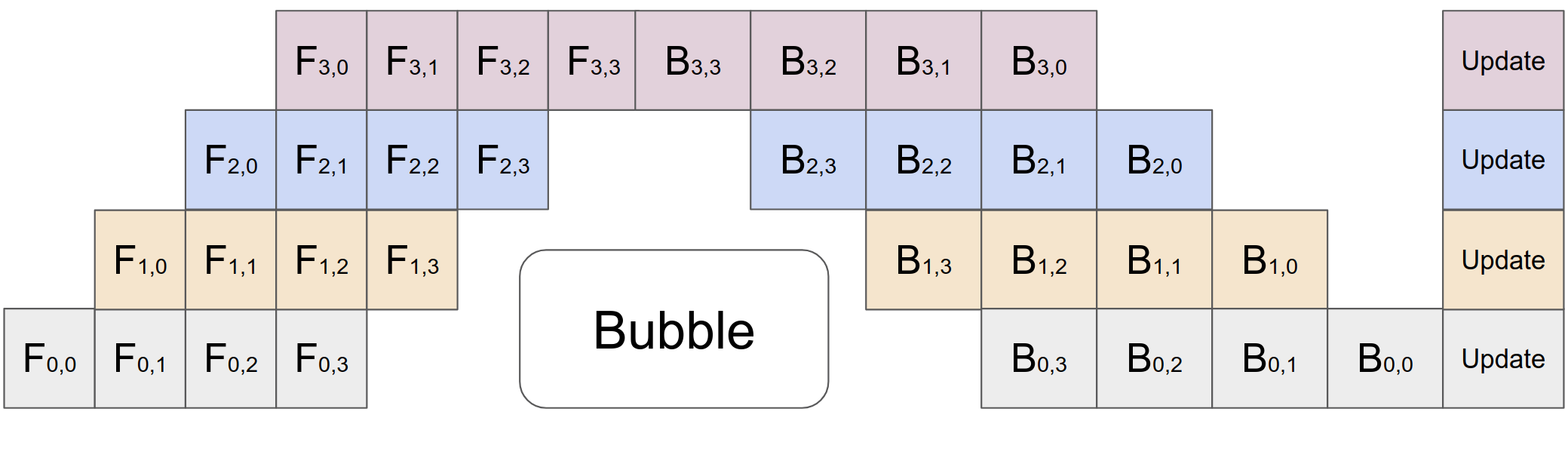

| The general idea with pipeline parallelism is: say you have 4 GPUs and a model big enough that it can be *split* across four GPUs using `device_map="auto"`. With this method you can send in 4 inputs at a time (4 is just an example here; any number works) and each model chunk will work on an input, then receive the next input once the prior chunk has finished, making it *much* more efficient **and faster** than the method described earlier. Here's a visual taken from the PyTorch repository: | ||

|  | ||
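
To make the scheduling idea in the paragraph above concrete, here is a minimal pure-Python sketch. It is not Accelerate or PyTorch code. The function names `pipeline_schedule` and `sequential_steps` are hypothetical, and the sketch assumes every model chunk takes one equal time unit per input and that transfers between GPUs are free, which real hardware will not match exactly.

```python
def pipeline_schedule(num_stages, num_inputs):
    """Return one dict per time step mapping stage index -> input index.

    Assumption: each stage (model chunk on one GPU) needs exactly one
    time unit per input, and communication cost is ignored.
    """
    steps = []
    for t in range(num_stages + num_inputs - 1):
        active = {}
        for stage in range(num_stages):
            item = t - stage  # input that reaches this stage at time t
            if 0 <= item < num_inputs:
                active[stage] = item
        steps.append(active)
    return steps


def sequential_steps(num_stages, num_inputs):
    """Time steps when only one stage is busy at any moment."""
    return num_stages * num_inputs


if __name__ == "__main__":
    schedule = pipeline_schedule(num_stages=4, num_inputs=4)
    for t, active in enumerate(schedule):
        print(f"t={t}: " + ", ".join(f"GPU{s}<-input{i}" for s, i in active.items()))
    print("pipelined steps:", len(schedule))
    print("sequential steps:", sequential_steps(4, 4))
```

Under these assumptions, 4 chunks and 4 inputs finish in 7 time steps when pipelined, versus 16 when only one chunk is busy at a time. In the middle of the schedule all four GPUs are working on different inputs at once.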

| To illustrate how you can use this with Accelerate, we have created an [example zoo](https://github.com/huggingface/accelerate/tree/main/examples/inference) showcasing a number of different models and situations. In this tutorial, we'll show this method for GPT2 across two GPUs. | ||

| Before you proceed, please make sure you have the latest pippy installed by running the following: | ||

| Before you proceed, please make sure you have the latest PyTorch version installed by running the following: |

I also updated the installation instructions here, let me know if it's incorrect!

Nope it's correct :)

In a follow-up after this, I'll fix the PiPPy example chunk so we don't have a merge conflict :)

Addresses the docs improvements internally discussed:

- `<Note>` tags
- `toctree` with the header referenced in the doc