-

Notifications

You must be signed in to change notification settings - Fork 27.4k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Issue: Adding new tokens to bert tokenizer in QA #11200

Comments

|

I am unable to reproduce: the notebook with your added code works smoothly on my side. |

|

Thank you @sgugger. Thank you again for your help |

|

Hi @sgugger, If I perform these operations I get the error

To solve I have to: Kind regards, |

|

Hi @sgugger, |

|

It looks like a bug in colab (from the screenshots I assume that is what you are using for training?) since I didn't get any error on my side by executing this as a notebook. |

|

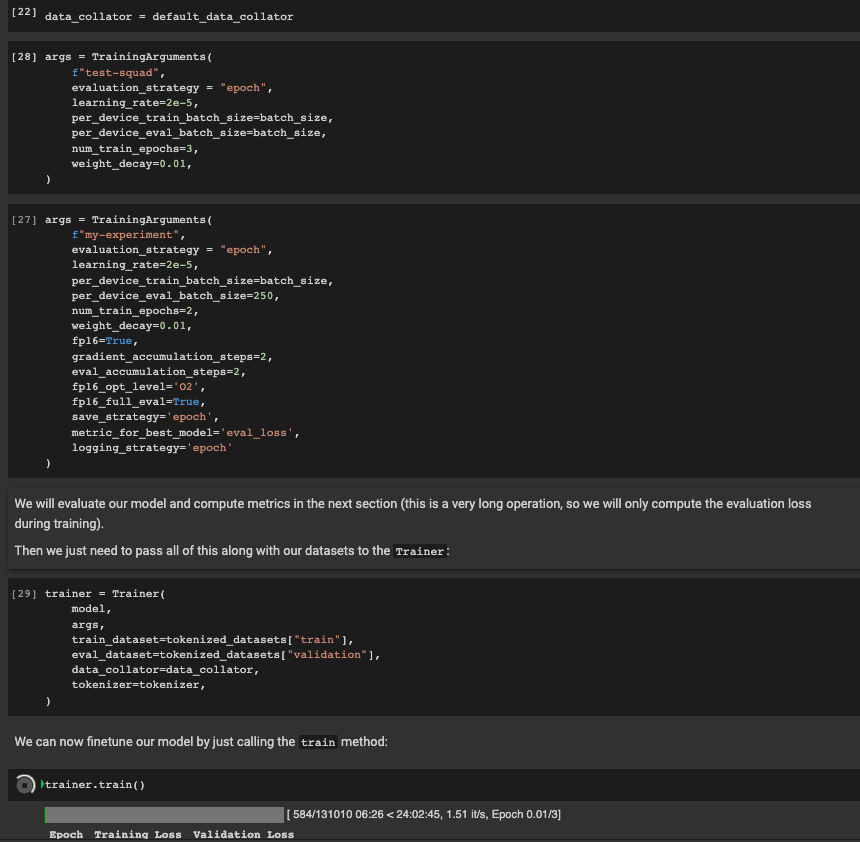

Hi @sgugger args = TrainingArguments(

f"my-experiment",

evaluation_strategy = "epoch",

learning_rate=2e-5,

per_device_train_batch_size=batch_size,

per_device_eval_batch_size=250,

num_train_epochs=2,

weight_decay=0.01,

fp16=True,

gradient_accumulation_steps=2,

eval_accumulation_steps=2,

fp16_opt_level='O2',

fp16_full_eval=True,

save_strategy='epoch',

metric_for_best_model='eval_loss',

logging_strategy='epoch'

)Because I encountered the same issue on my machine. Kind regards, |

|

Ah you're right, I must have made a mistake. This comes from the option @stas00 I'm not sure what the best place is for fixing this but if someone uses |

|

But there is no The logic is very explicit to not place on the device only for non-train when You need to add |

Ok so Regarding the notebook, can I use the same Trainer object for fit and predict? Because these Booleans are never set in the notebook. I mean when I am doing trainer.predict() is obvious for the trainer to set model.eval() and torch.no_grad()? Thank you both, |

Why did you think it shouldn't be compatible? The only reason there is a special case for non-training is to avoid placing the full model on device before it was

Of course. It was designed for you to pass all the init args at once and then you can call all its functions. |

|

@stas00 Ok clear, I have just checked and the trainer works perfectly. Because it's clear in the point of view of performance as you said but the documentation don't bring out this things for this reason I have open then issue. Kind regards, |

|

Oh, I see. Until recently @sgugger, do you agree if we add this? |

|

I would rather avoid adding this, as users have been used to not have to set that argument to True when not using example scripts. Can we just add the proper line in (Sorry I didn't catch you were using |

|

We will probably have to rethink the design then, since it's not a simple "put on device if it wasn't already" - there are multiple cases when it shouldn't happen. For now added a hardcoded workaround: #11322 |

WARNING: This issue is a replica of this other issue open by me, I ask you sorry if I have open it in the wrong place.

Hello Huggingface's team (@sgugger , @joeddav, @LysandreJik)

I have a problem with this code base

notebooks/examples/question_answering.ipynb - link

ENV: Google Colab - transformers Version: 4.5.0; datasets Version: 1.5.0; torch Version: 1.8.1+cu101;I am trying to add some domain tokens in the bert-base-cased tokenizer

Then during the trainer.fit() call it report the attached error.

Can you please tell me where I'm wrong?

The tokenizer output is the usual bert inputs expressed in the form of List[List[int]] eg inputs_ids and attention_mask.

So I can't figure out where the problem is with the device

Input, output and indices must be on the current deviceKind Regards,

Andrea

The text was updated successfully, but these errors were encountered: