- Overview

- Environment Specification

- System Requirements

- IMPORTANT!!

- Installation

- Interface Specification

- System Explanation in a Nutshell

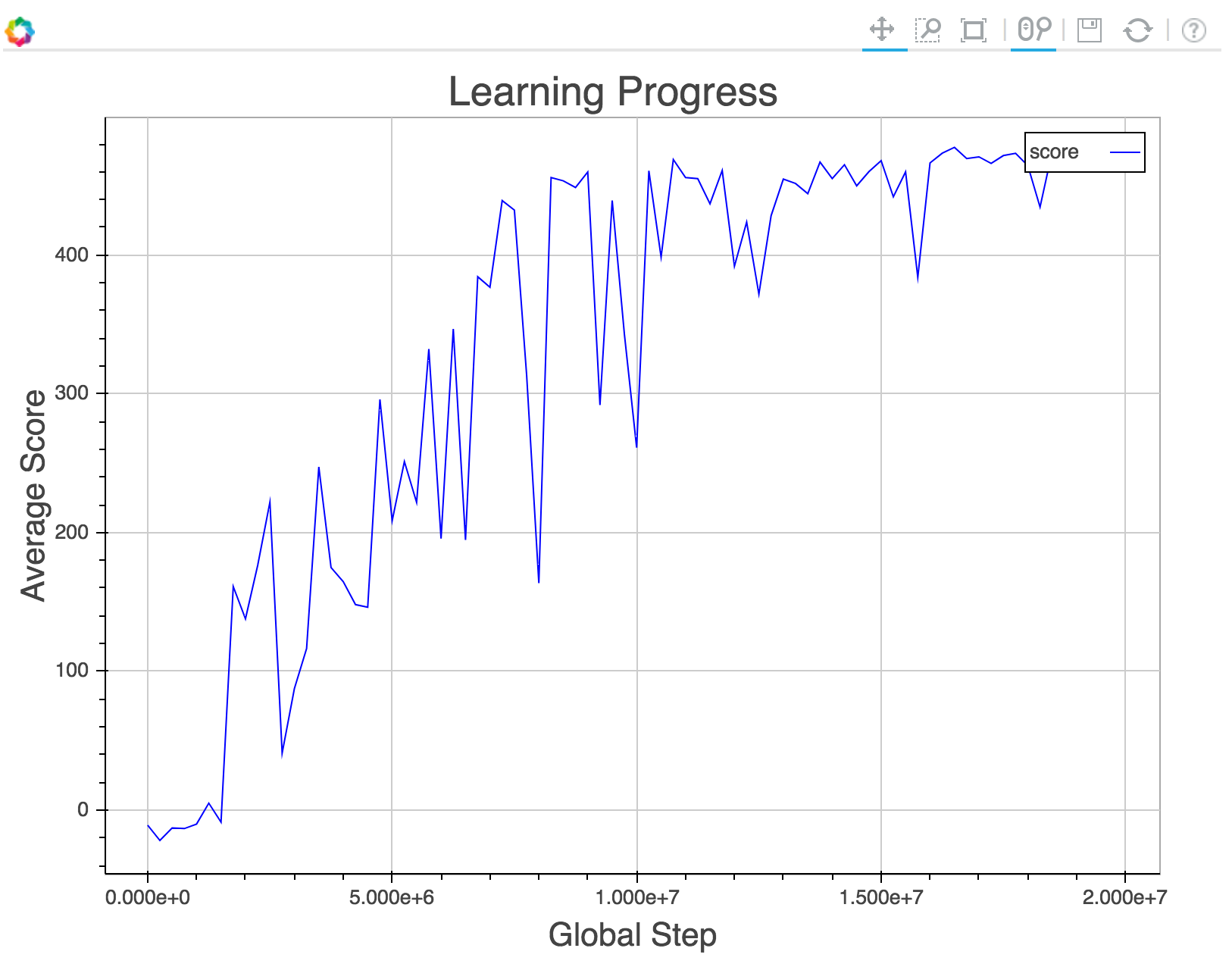

- Training results

- [Optional] Further Customization

- Reference

TORCS (The Open Racing Car Simulator) is a well-known open-source racing car simulator that provides a realistic physical racing environment and a set of highly customizable APIs. However, it is not convenient for training an RL model: it does not provide a visual API, and a game typically has to be started through a GUI menu.

This is a modified version of TORCS that suits the needs of deep reinforcement learning training with visual observation. Through this environment, researchers can easily train deep reinforcement learning models on TORCS via a Lua interface (a Python interface might also be supported soon).

The original TORCS environment is modified in order to

- Be able to start a racing game with visual output without going through a GUI menu

- Obtain the first-person visual observation in games

- Provide fast and user-friendly environment-agent communication interface for training

- Train visual RL models on TORCS on a server (machines without display output)

- Support multi-thread RL training on TORCS environment

- Support semantic segmentation on the visual inputs via some hacks

This environment interface was originally written for my RL research project, and I think open-sourcing it can make current studies on both autonomous cars and reinforcement learning much easier.

This repository contains

- `torcs-1.3.6`: the source code of a modified torcs-1.3.6

- `TORCSctrl.cpp`: a dynamic linking library for communications between RL models (in Lua) and TORCS

- `TORCS`: the Lua interfaces

- `makefile`: for compiling `TORCSctrl.so`

- `install.sh`: for installing torcs

- Ubuntu 14.04+ (16.04 is preferable, see below)

- torch

- If you want to run this environment on a server (without a display device) with Nvidia graphics cards, please make sure your Nvidia drivers are installed with the flag `--no-opengl-files`! (Otherwise, there will be problems when using xvfb, see this.)

- To install the dependency packages correctly on a desktop version of Ubuntu, please go to System Settings -> Software & Updates and tick the source code checkbox before installation, or modify your `/etc/apt/sources.list`.

- If your server is Ubuntu 14.04, please do

  ```bash
  cd torcs-1.3.6
  ./configure --disable-xrandr
  ```

  before installation; otherwise, there will also be some problems when using xvfb.

Just run install.sh in the root folder with sudo:

```bash
sudo ./install.sh
```

After installation, type `torcs` in the command line (`xvfb-run -s "-screen 0 640x480x24" torcs` if on a server). If a TORCS window pops up (or no errors appear on the command line), the installation has succeeded. Press `ctrl + \` to terminate it (`ctrl + c` has been masked).

The overall interface of this environment follows https://github.com/Kaixhin/rlenvs#api

[NOTICE] You need to call `cleanUp()` when the whole training ends.

Make sure the folder structure of the environment interface looks like this:

```
\TORCS
    - Env.lua
    - Torcs.lua
    - TorcsContinuous.lua
    - TORCSctrl.so
    - TorcsDiscrete.lua
    - TorcsDiscreteConstDamagePos.lua
\game_config
    - aaa.xml
    - bbb.xml
    ...
```

```lua
local TorcsDiscrete = require 'TORCS.TorcsDiscrete'

-- configure the environment
local opt = {}
opt.server = false
opt.game_config = 'quickrace_discrete_single_ushite-city.xml'
opt.use_RGB = false
opt.mkey = 817
opt.auto_back = false

local env = TorcsDiscrete(opt)
local totalstep = 817
local nowstep = 0  -- step counter, must be initialized before the loop
print("begin")
local reward, terminal, state = 0, false, env:start()
repeat
    repeat
        -- doSomeActions is a placeholder for your own policy
        reward, state, terminal = env:step(doSomeActions(state))
        nowstep = nowstep + 1
    until terminal

    if terminal then
        -- restart the episode
        reward, terminal, state = 0, false, env:start()
    end
until nowstep >= totalstep
print("finish")
env:cleanUp()
```

To run on a server, set up xvfb before running the environment by running this in a terminal window:

```bash
./xvfb_init.sh 99
```

An xvfb is then set up on display port 99, allowing at most 14 TORCS environments to run simultaneously on this xvfb.

Example script: test.lua

```lua
-- test.lua
local TorcsDiscrete = require 'TORCS.TorcsDiscrete'

-- configure the environment
local opt = {}
opt.server = true
opt.game_config = 'quickrace_discrete_single_ushite-city.xml'
opt.use_RGB = false
opt.mkey = 817
opt.auto_back = false

local env = TorcsDiscrete(opt)
local totalstep = 817
local nowstep = 0  -- step counter, must be initialized before the loop
print("begin")
local reward, terminal, state = 0, false, env:start()
repeat
    repeat
        -- doSomeActions is a placeholder for your own policy
        reward, state, terminal = env:step(doSomeActions(state))
        nowstep = nowstep + 1
    until terminal

    if terminal then
        -- restart the episode
        reward, terminal, state = 0, false, env:start()
    end
until nowstep >= totalstep
print("finish")
env:cleanUp()
```

Run the script while the xvfb is running:

```bash
export DISPLAY=:99
th test.lua
```

You can pass a table to the environment to configure the racing car environment:

- `server`: bool value, set `true` to run on a server

- `game_config`: string, the name of the game configuration file, which should be stored in the `game_config` folder

- `use_RGB`: bool value, set `true` to use RGB visual observation, otherwise grayscale visual observation is used

- `mkey`: integer, the key to set up memory sharing. Different running environments should have different `mkey` values

- `auto_back`: bool value, set `false` to disable the car's ability to reverse its gear (for going backward)

See torcs_test.lua.

We provide environments with two different action spaces. Note that each action space requires its own type of game configuration (see the Further Customization section below for explanations of game configuration).

In the original TORCS, the driver controls four different actions:

- throttle: a real value ranging from `[0, 1]`. `0` for zero acceleration, `1` for full acceleration

- brake: a real value ranging from `[0, 1]`. `0` for zero brake, `1` for full brake

- steer: a real value ranging from `[-1, 1]`. `-1` for full left-turn, `1` for full right-turn

- gear: an integer ranging from `[-1, 6]`. `-1` for going backward.

The following is my customization; you can customize the action space yourself (see the Further Customization section).

This environment has a discrete action space:

1 : brake = 0, throttle = 1, steer = 1

2 : brake = 0, throttle = 1, steer = 0

3 : brake = 0, throttle = 1, steer = -1

4 : brake = 0, throttle = 0, steer = 1

5 : brake = 0, throttle = 0, steer = 0

6 : brake = 0, throttle = 0, steer = -1

7 : brake = 1, throttle = 0, steer = 1

8 : brake = 1, throttle = 0, steer = 0

9 : brake = 1, throttle = 0, steer = -1
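The table above follows a regular pattern: each group of three actions selects the pedals, and the position inside the group selects the steering direction. The sketch below decodes an action index under that reading. `Controls` and `decodeDiscreteAction` are hypothetical names for illustration and are not taken from ficos_discrete.cpp; the function assumes `1 <= action <= 9` and does no range checking.

```cpp
// Hypothetical helper (not from ficos_discrete.cpp): decodes the 1-based
// discrete action index from the table above into target controls.
struct Controls {
    double brake;
    double throttle;
    double steer;
};

// Assumes 1 <= action <= 9; no range checking is done.
constexpr Controls decodeDiscreteAction(int action) {
    return Controls{
        (action - 1) / 3 == 2 ? 1.0 : 0.0,           // brake: actions 7..9
        (action - 1) / 3 == 0 ? 1.0 : 0.0,           // throttle: actions 1..3
        1.0 - static_cast<double>((action - 1) % 3)  // steer: 1, 0, -1
    };
}
```

For example, `decodeDiscreteAction(3)` gives brake 0, throttle 1, steer -1, which matches the third row of the table.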

The corresponding driver controlled by this environment is torcs-1.3.6/src/drivers/ficos_discrete/ficos_discrete.cpp. Note that the action will be performed in a gradually changing way, e.g., if the current throttle command of the driver is 0 while the action is 2, then the actual action will be some value between 0 and 1. Such customization mimics the way a human controls a car using a keyboard. See torcs-1.3.6/src/drivers/ficos_discrete/ficos_discrete.cpp:drive for more details.
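One way to picture the gradual change is a rate limiter that moves the current command toward the requested target by a bounded amount on every control tick. This is only a sketch under that assumption: the name `approach` and the step size are hypothetical, and the actual logic in ficos_discrete.cpp:drive may differ.

```cpp
// Hypothetical rate limiter: moves `current` toward `target` by at most
// `maxStep` per control tick. The step size is an illustrative assumption,
// not the value used by the real driver.
constexpr double approach(double current, double target, double maxStep) {
    return (target - current > maxStep) ? current + maxStep
         : (current - target > maxStep) ? current - maxStep
                                        : target;
}
```

With a step of 0.25, a throttle command of 0 and a target of 1 becomes 0.25 after one tick, so it takes four ticks to reach full throttle.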

There are two dimensions of continuous actions in this environment:

- brake/throttle: a real value ranging from `[-1, 1]`. `-1` for full brake, `1` for full throttle, i.e.

  ```lua
  if action[1] > 0 then
      throttle = action[1]
      brake = 0
  else
      brake = -action[1]
      throttle = 0
  end
  ```

- steer: a real value ranging from `[-1, 1]`. `-1` for full left-turn, `1` for full right-turn

In both customized environments above, note that

- There are hard-coded ABS and ASR strategies on corresponding drivers

- gear changes automatically

You can customize the racing track as described in the Customize the Race section. Here we provide some game configurations for different tracks; see the `*.xml` files in the `game_config` folder, which are named in the format `quickrace_<discrete|continuous>_<single|multi>_<trackname>.xml`.

You can modify the original reward function of the environment by overriding the `reward()` function, as in `TorcsDiscreteConstDamagePos`.

In this section, we briefly explain how the racing car environment works.

In the TORCS environment, the running game maintains a state machine (see torcs-1.3.6/src/libs/raceengineclient/racestate.cpp). On each update of the state (at 500 Hz), the underlying physical states are updated (e.g. each car's speed is updated according to its previous speed and acceleration), and at 50 Hz each car driver is queried for actions. Drivers have access to a variety of information (e.g. speed, distance, etc.) to decide what action to take (i.e. the values of throttle, brake, steer, gear, etc.). You can refer to this on how to customize drivers.
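The relation between the two rates can be pictured with a small counting loop: a driver query happens on every tenth physics update. This is a sketch only. `TickCounts`, `simulate`, and the constants are hypothetical names, and querying the driver before the physics step is an assumption rather than something taken from racestate.cpp.

```cpp
// Hypothetical sketch: counts physics updates and driver queries for a span
// of simulated time, with physics at 500 Hz and driver queries at 50 Hz.
struct TickCounts {
    int physicsUpdates;
    int driverQueries;
};

constexpr int kPhysicsHz = 500;
constexpr int kDriverHz = 50;
constexpr int kPhysicsPerQuery = kPhysicsHz / kDriverHz;  // 10 updates per query

constexpr TickCounts simulate(int seconds) {
    TickCounts counts{0, 0};
    const int totalTicks = seconds * kPhysicsHz;
    for (int tick = 0; tick < totalTicks; ++tick) {
        // Assumed ordering: ask the driver first, then step the physics.
        if (tick % kPhysicsPerQuery == 0) {
            ++counts.driverQueries;  // driver decides throttle/brake/steer/gear
        }
        ++counts.physicsUpdates;     // integrate speeds, positions, ...
    }
    return counts;
}
```

Under these assumptions, an RL agent that acts once per driver query makes 50 decisions per simulated second.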

To make it possible to run an RL model in Torch or Python, we use shared memory to set up IPC between the environment and the model (thanks to DeepDriving from Princeton).

Once the environment is set up, it will request a portion of shared memory using the given mkey. If the RL agent running in another process also requests a portion of shared memory of the same size with the same mkey, then it can see the information written by the customized driver in TORCS.
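The key-based attachment described above can be sketched with the System V shared memory calls `shmget`, `shmat`, `shmdt` and `shmctl`. The sketch assumes that this is the kind of mechanism in use; `DemoBlock`, `attachShared` and `roundTrip` are hypothetical names, and `DemoBlock` is a much smaller stand-in for the real shared structure. For brevity both attachments live in one process, whereas in the real setup TORCS and the agent are separate processes.

```cpp
#include <cassert>
#include <sys/ipc.h>
#include <sys/shm.h>

// Hypothetical, much smaller stand-in for the real shared structure.
struct DemoBlock {
    int written;
    double speed;
};

// Attaches to the segment identified by `mkey`, creating it if needed.
// Returns nullptr on failure.
inline DemoBlock* attachShared(key_t mkey) {
    const int shmid = shmget(mkey, sizeof(DemoBlock), 0666 | IPC_CREAT);
    if (shmid == -1) return nullptr;
    void* addr = shmat(shmid, nullptr, 0);
    if (addr == reinterpret_cast<void*>(-1)) return nullptr;
    return static_cast<DemoBlock*>(addr);
}

// Writes through one attachment and reads through a second attachment that
// uses the same key, then detaches both and removes the segment.
inline double roundTrip(key_t mkey, double speed) {
    DemoBlock* writer = attachShared(mkey);  // plays the role of the TORCS driver
    DemoBlock* reader = attachShared(mkey);  // plays the role of the RL agent
    assert(writer != nullptr && reader != nullptr);
    writer->speed = speed;
    writer->written = 1;
    const double seen = reader->written ? reader->speed : -1.0;
    shmdt(writer);
    shmdt(reader);
    shmctl(shmget(mkey, sizeof(DemoBlock), 0666), IPC_RMID, nullptr);
    return seen;
}
```

Because both attachments map the same segment, the value written by the "driver" side is immediately visible on the "agent" side. Both sides must agree on the key and on the size of the structure.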

The customized driver regularly writes information about the current racing status into that memory and waits for the agent's action (which the agent writes into a different part of the same memory). This information can include

- current first-person view from the driver

- the speed of the car

- the angle between the car and the tangent of the track

- current amount of damage

- the distance between the center of the car and the center of the track

- total distance raced

- the radius of the track segment

- the distance between the car and the nearest car in front of it ...

and it can be customized if more information is needed.

Here we show a training result of running a typical A3C model (Asynchronous Methods for Deep Reinforcement Learning) on our customized environment.

Details about the test:

- game_config: `quickrace_discrete_slowmulti_ushite-city.xml`

- environment: `TorcsDiscreteConstDamagePos`

- auto_back: `false`

- threads: `12`

- total steps: `2e7`

- observation: grayscale visual input with resizing (`84x84`)

- learning rate: `7e-5`

In this section, I explain how I modified the environment. If you do not intend to customize the environment further, you can skip this section.

All modifications on the original TORCS source code are indicated by a yurong marker.

Related files:

Run `torcs _rgs <path to game configuration file>`; the race is then set up directly and begins running.

Related files:

- torcs-1.3.6/src/main.cpp -- set up memory sharing

- torcs-1.3.6/src/libs/raceengineclient/raceengine.cpp -- forward pointers, write the first person view image into the shared memory

- torcs-1.3.6/src/drivers/ficos_discrete/ficos_discrete.cpp -- receive commands from the shared memory, write race information

- torcs-1.3.6/src/drivers/ficos_continuous/ficos_continuous.cpp -- receive commands from the shared memory, write race information

- TORCS/TORCSctrl.cpp -- manage the communication between lua and C++

Memory sharing structure

```cpp
struct shared_use_st
{
    int written;
    uint8_t data[image_width*image_height*3];
    uint8_t data_remove_side[image_width*image_height*3];
    uint8_t data_remove_middle[image_width*image_height*3];
    uint8_t data_remove_car[image_width*image_height*3];
    int pid;
    int isEnd;
    double dist;

    double steerCmd;
    double accelCmd;
    double brakeCmd;
    // for reward building
    double speed;
    double angle_in_rad;
    int damage;
    double pos;
    int segtype;
    double radius;
    int frontCarNum;
    double frontDist;
};
```

To specify the memory sharing key:

- In TORCS: `torcs _mkey <key>`

- In the Lua interface: `opt.mkey = <key>`

Related file: torcs-1.3.6/src/libs/raceengineclient/raceengine.cpp.

See line 863:

```cpp
glReadPixels(0, 0, image_width, image_height, GL_RGB, GL_UNSIGNED_BYTE, (GLvoid*)pdata);
```

You can generate a corresponding `xxx.xml` game configuration file in the following way:

- Enter `torcs` in the CLI to enter the game GUI menu

- Configure the race in `Race -> QuickRace -> Configure Race`. You can choose tracks and robots. Be sure to select the robot ficos_discrete / ficos_continuous.

- In `Race Distance` choose `10 km`

- Click `accepts`

- In `~/.torcs/config/raceman` there is a file `quickrace.xml`, which is the corresponding game configuration file.

To obtain the corresponding semantic segmentation of visual observation, we use some hacks stated below. Note that the modification should be done on a track-by-track basis.

Related files:

- torcs-1.3.6/src/interfaces/collect_segmentation.h -- compile flag COLLECTSEG

- torcs-1.3.6/src/libs/raceengineclient/raceengine.cpp -- write first person view image into the shared memory

- torcs-1.3.6/src/modules/graphic/ssggraph/grscene.cpp -- load different 3D models and control the rendering

In the shared memory, `data_remove_side`, `data_remove_middle` and `data_remove_car` are intended to store visual observations with road shoulders, middle lane marks and cars removed, respectively, for track torcs-1.3.6/data/tracks/road/Ushite-city. In this way, the difference between the original observation and each of the removed ones is the desired segmentation.

To obtain visual observations with some parts removed, we use a pre-generated 3D model (`.ac` files) in which the texture mapping of the corresponding part is changed. For example, to obtain visual observations with the middle lane marks removed, we need to generate a new 3D model in which the texture mapping of the road surface is changed to another picture.

Before the game starts, the modified 3D model is loaded. While the game is running, we first render the original observation using the original 3D model, then switch to the modified 3D model to obtain the observation with some parts removed. [Related file: torcs-1.3.6/src/modules/graphic/ssggraph/grscene.cpp]

What (generally) you need to do to obtain the segmentation:

- Uncomment the `define` line in torcs-1.3.6/src/interfaces/collect_segmentation.h.

- Generate the modified 3D model of the track. Duplicate the `.ac` file in the corresponding track folder (torcs-1.3.6/data/tracks/*), and change the texture mapping of the objects. Remember to add all your texture files to the `Makefile`.

- Modify the code around `line 254` of torcs-1.3.6/src/modules/graphic/ssggraph/grscene.cpp to load your modified 3D model.

- Modify the code around `line 269` in the `grDrawScene` function of torcs-1.3.6/src/modules/graphic/ssggraph/grscene.cpp to make the environment render different models according to `drawIndicator`.

- Modify the code around `line 857` in torcs-1.3.6/src/libs/raceengineclient/raceengine.cpp to set `drawIndicator` to get different render results, and store them in the proper places.

- Reinstall TORCS with `sudo ./install.sh`.

- Start the environment with the corresponding game configuration `.xml` file. In the Lua interface, call `env:getDisplay(choose)` to obtain observations with different parts removed. In the current implementation for track torcs-1.3.6/data/tracks/road/Ushite-city:

  - `0`: original RGB observation
  - `1`: RGB observation with road shoulders removed
  - `2`: RGB observation with middle lane-marks removed
  - `3`: RGB observation with cars removed

- Take the difference between the original observation and each observation with parts removed. Pixels where the difference is large are where the corresponding parts are located.
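The last step can be sketched as a per-pixel comparison of two RGB buffers. This is a minimal illustration, not code from the repository: `diffMask` is a hypothetical helper, both buffers are assumed to be tightly packed RGB (3 bytes per pixel) of the same size, and the threshold is an assumption that would need tuning per track.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical helper: marks pixels whose summed per-channel difference
// between the original frame and the "part removed" frame exceeds
// `threshold`. Returns one byte per pixel: 1 = part present, 0 = unchanged.
inline std::vector<std::uint8_t> diffMask(const std::vector<std::uint8_t>& original,
                                          const std::vector<std::uint8_t>& removed,
                                          int threshold) {
    assert(original.size() == removed.size());  // frames must match in size
    const std::size_t pixels = original.size() / 3;
    std::vector<std::uint8_t> mask(pixels, 0);
    for (std::size_t p = 0; p < pixels; ++p) {
        int diff = 0;
        for (std::size_t c = 0; c < 3; ++c) {
            diff += std::abs(static_cast<int>(original[3 * p + c]) -
                             static_cast<int>(removed[3 * p + c]));
        }
        mask[p] = diff > threshold ? 1 : 0;
    }
    return mask;
}
```

Calling it once per removed variant (road shoulders, lane marks, cars) would give one binary mask per class, under the assumptions stated above.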

- DeepDriving from Princeton: the memory sharing scheme of this training environment is the same as in that project.

- Custom RL environment

- Implementations of Several Deep Reinforcement Learning Algorithm

- Asynchronous Methods for Deep Reinforcement Learning