This repository has been archived by the owner on Apr 19, 2023. It is now read-only.

!Outdated, see latest comments!

This PR adds a different, more flexible way to use the tensorboard logger.

After this PR, the usage is as follows.

The logger is a singleton that can be accessed in the header of any file:

The logger needs to be set up before calling `trainer.fit`:

With that, we can use the tensorboard logger inside every module / piece of code.
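The singleton idea described above could look roughly like the following minimal sketch. It is not the code from this PR: `SingletonLogger`, `setup`, and `RecordingWriter` are hypothetical names introduced for illustration, and the in-memory writer only stands in for a real TensorBoard writer that exposes `add_scalar(tag, scalar_value, global_step)`.

```python
class RecordingWriter:
    """Hypothetical stand-in for a TensorBoard writer; keeps scalars in memory."""

    def __init__(self):
        self.scalars = []

    def add_scalar(self, tag, scalar_value, global_step=None):
        self.scalars.append((tag, float(scalar_value), global_step))


class SingletonLogger:
    """Hypothetical process-wide logger (an assumption, not inferno's class)."""

    _instance = None

    def __new__(cls):
        # Create the single shared instance on first use, then always reuse it.
        if cls._instance is None:
            cls._instance = super().__new__(cls)
            cls._instance._writer = None
            cls._instance.step = 0
        return cls._instance

    def setup(self, writer):
        # Must be called once before training starts.
        self._writer = writer

    def add_scalar(self, tag, value):
        if self._writer is None:
            raise RuntimeError("call setup() before logging")
        self._writer.add_scalar(tag, value, global_step=self.step)


def some_module_code():
    # Any module can fetch the same instance without it being passed around.
    SingletonLogger().add_scalar("debug/value", 0.5)


writer = RecordingWriter()
SingletonLogger().setup(writer)  # set up once, before training
some_module_code()
```

Because every call to `SingletonLogger()` returns the same object, code deep inside a model or loss can log without receiving a logger argument. The trade-off is hidden global state, which is why the sketch raises an error if logging happens before setup.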

This allows for some very cool uses of the tensorboard logger.

For example:

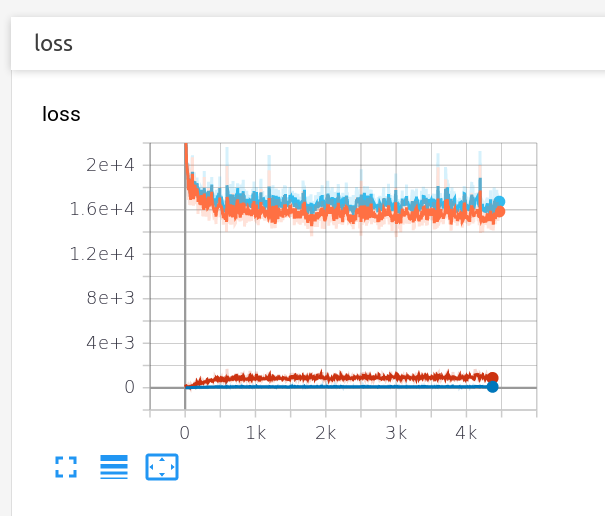

For a loss composed of multiple loss functions, one can plot the individual components (e.g. kde loss vs. reconstruction loss in a VAE).
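As a rough illustration of logging the individual components of a composite loss, here is a minimal sketch in plain Python. It is an assumption-laden example rather than the code from this PR: `vae_loss`, `RecordingWriter`, and the tag names are hypothetical, the second component is assumed to be a KL-divergence term for a diagonal Gaussian, and the writer only mimics the `add_scalar(tag, scalar_value, global_step)` call of a real TensorBoard writer.

```python
import math


class RecordingWriter:
    """Hypothetical stand-in for a TensorBoard writer; keeps scalars in memory."""

    def __init__(self):
        self.scalars = []

    def add_scalar(self, tag, scalar_value, global_step=None):
        self.scalars.append((tag, float(scalar_value), global_step))


def vae_loss(x, x_hat, mu, logvar, writer, step):
    """Return the total loss and log each component under its own tag."""
    # Reconstruction term: mean squared error between input and output.
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
    # KL term (assumed): divergence of N(mu, exp(logvar)) from N(0, 1).
    kl = -0.5 * sum(1 + lv - m ** 2 - math.exp(lv) for m, lv in zip(mu, logvar))
    total = recon + kl

    # One tag per component, so each gets its own curve in TensorBoard.
    writer.add_scalar("loss/reconstruction", recon, step)
    writer.add_scalar("loss/kl", kl, step)
    writer.add_scalar("loss/total", total, step)
    return total


writer = RecordingWriter()
total = vae_loss(
    x=[1.0, 2.0], x_hat=[1.0, 4.0], mu=[0.0, 0.0], logvar=[0.0, 0.0],
    writer=writer, step=0,
)
```

Sharing a common tag prefix such as `loss/` groups the curves in one TensorBoard section, which makes it easy to see which component dominates the total.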

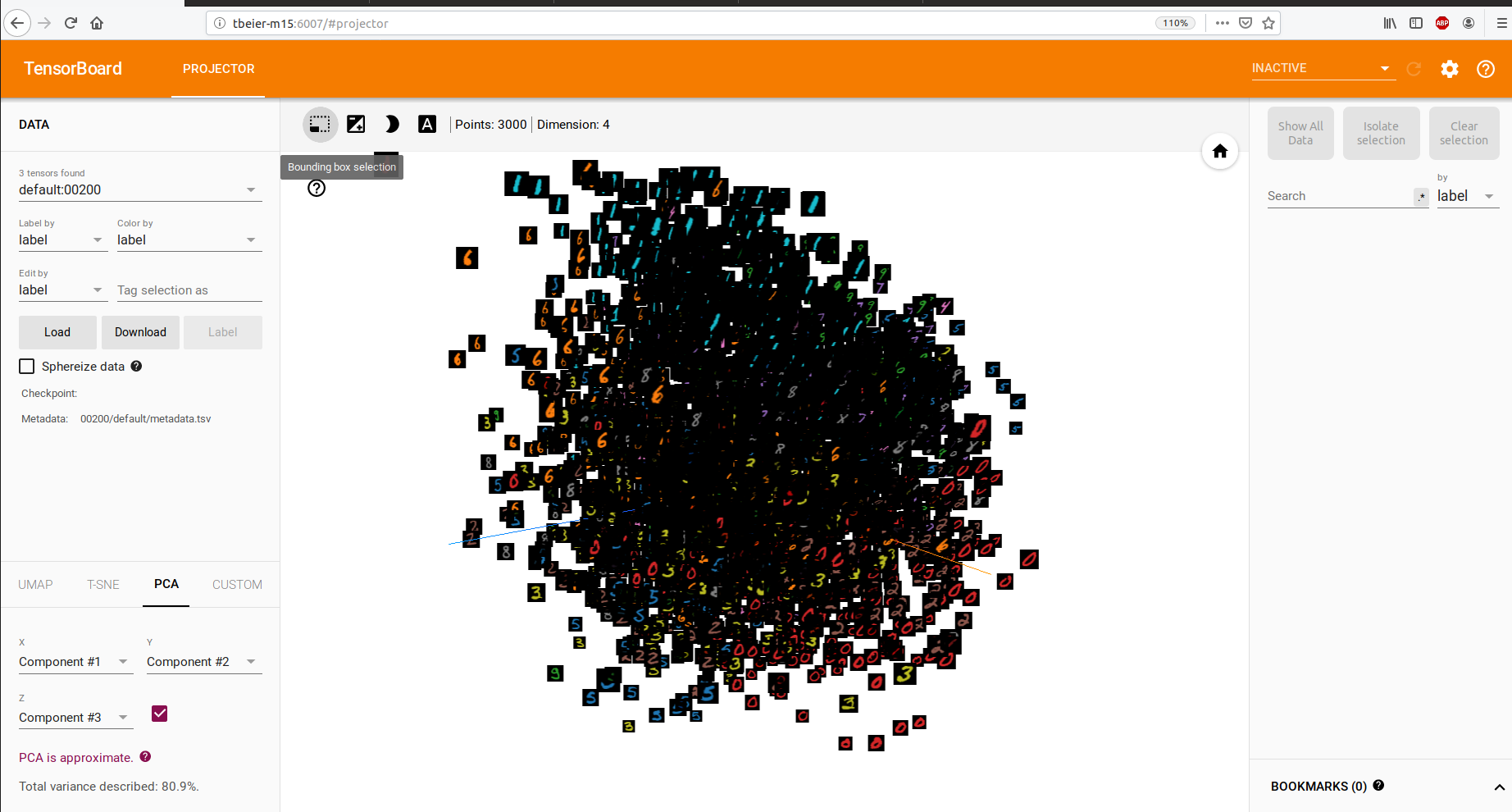

Plot the embeddings with the corresponding labels.

Here is a complete usage example:

The interesting part is mostly in the loss function.

I am rather new to tensorboard, but I find this approach far more flexible than the one currently implemented in inferno.

Those of you who use the tensorboard features more frequently (@Steffen-Wolf @nasimrahaman @constantinpape), what do you think of this approach?