-

Notifications

You must be signed in to change notification settings - Fork 1.3k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

JENKINS-40825 close resource leak, fix broken pipe error #182

JENKINS-40825 close resource leak, fix broken pipe error #182

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The only thing I am not sure about, is if we should remove the call to close() from stop and form the the BodyExecutionCallback.

| started.await(); | ||

| } catch (InterruptedException e) { | ||

| closeWatch(watch); | ||

| throw new IOException("interrupted while waiting for websocket connection"); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Maybe an InterruptedIOException would be better here.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sounds good.

| .start(); | ||

| return false; | ||

| } | ||

|

|

||

| @Override | ||

| public void stop(Throwable cause) throws Exception { | ||

| LOGGER.log(Level.FINE, "Stopping container step."); | ||

| closeQuietly(client, decorator); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is likely to be called on interruption.

What happens to the proc in this case? Is it handled by Jenkins, or we should do the cleanup ourselves?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This also applies to the callback.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

'stop' is not called on interruption, I checked (by manually aborting the build - what other interruptions exist?).

Unfortunately I don't know which callback you are referring to.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sorry, it was obvious which callback you meant.

I didn't check that one. If it is actually called on interruption, we can collect all the procs and do an additional clean up there - although I don't think it will be necessary.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The callback is actually called on interruption.

I will check if keeping a reference of all the procs makes sense.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

|

So we just tried out the code for this PR. We had a single job, running concurrent builds, on exclusive agents with random labels. This ensured each running job was on its on label and agent. The podTemplate code simply switched to the JNLP container and did a sleep 300. We were running 17 agents. The first 7 jobs got this during its The other jobs then immediately stopped printing output and appeared frozen at points before the The jobs that had the (actually 6 got this and a 7th succeeded without the log line) All the other jobs that were frozen failed with: |

|

Thanks for the test. The exception you are getting now would likely have been the "pipe not connected" error before this. This means that Jenkins takes more than 10 seconds to connect to kubernetes and start executing the command. Unfortunately, Jenkins will just interrupt us in this case... Are you able to paste a minimal example that you are using? That might help, as I was not able to trigger this again just running the same build 10 times... Probably we are hitting some other limit here, I can't imagine that it takes so long to start executing the command. Maybe the thread pool is exausted, maybe we should try re-using connections instead of opening a new one for each 'ps' that Jenkins wants us to execute... will investigate tomorrow. |

|

Added the implementation of Will now investigate @michaelajr's issues. Maybe it helps to add the suggested fix for |

|

@michaelajr I can reproduce your problems, however only if I run A LOT of jobs at exactly the same time - e.g. 4 of 30 jobs fail.. |

|

@marvinthepa We saw a greater failure ratio yesterday. We definitely need to run/start multiple jobs at or around the same time. We'll test again this morning with the new code. |

|

@marvinthepa Hey, I work with @michaelajr. I just tested the new commits you've just added. I have a pipeline job that just sleeps for 300 seconds: https://gist.github.com/austinmoore-/b26b61d65072400b64ec205ba31628cb First, I ran 15 instances of this job. 3/15 of the jobs failed with the After than I ran it again but 20 times. 7/20 jobs failed with the same

I'm not sure, but the failures might only occur when running the same job. When I ran through our deployment jobs (8 simultaneous jobs), I did not encounter this error. Were you running the same job, or different jobs? |

|

To add to @austinmoore- 's comment, under normal circumstances there are no jobs that run concurrent builds. It was just our test job where we executed concurrent builds, each with it's own EXCLUSIVE agent and label. The fact that we were able to run 8 of our REAL jobs at the same time without this issue is a huge step forward. So this is good news. Would be nice to understand why we are seeing such a high failure rate when we run concurrent builds of the same job. |

|

EDIT: The following is based on a local build from ed766ca. To add some more experiences to this. It still appears the issue is related to running a job that uses more than one container in the Pod Template. We've found that when using a Pod Template that has greater than 1 container defined you're okay to run many parallel jobs (even the same job) so long as you don't use that extra container. As soon as you run commands in the additional containers we get the following exception and the job fails: What we've been using for testing is the following Pipeline:

Then we click the build button ten or so times. In some cases the loop will work but the |

|

I think I found another (probably big) part of the problem. OkHttpClient per default only runs 5 concurrent requests against the same hostname, which is probably to little when you are constantly opening websocket connections to that one host. Seems like I will have to open another pull request to make this configurable in the kubernetes-client, but I will verify my assumption first. |

|

Given that each pod is meant to serve a single job, I can't see why we would possibly need more than 2 concurrent concurrent connection to a single pod (one for the sh part and one for the probe). If we don't have any leaking, we should be just fine. |

|

@iocanel: It is not about connections to the pod - it is about connections to |

|

You are right! I think I need more coffee !!! |

|

Here you go: ☕️ . |

|

Really excited to see the progress being made here, the investigation being done by @marvinthepa, and the help from @iocanel. Keep up the good work 👍 I'm a colleague of @soudy, who initially reported JENKINS-40825, so if there's anything that we can do to help out, let use now. Also: ☕️ |

|

@iocanel: I added two new commits:

Could I kindly ask you to review those changes? I am especially unsure about the configuration in the second one - don't know if it makes sense to make it configurable from the GUI. I think it should definitely be added to the Then we could also set it via Java system property or environment variable (instead of or in addition to the GUI). Please let me know what you think. Always happy to incorporate improvements. |

|

@marvinthepa @iocanel Hey, I just tried out the new changes. I ran 20 jobs, and they all succeeded with no issue! This seems to fix all of our issues. This really great! We'll continue to test this. |

|

We've also tested the latest changes with the pipeline I described before. 100 Jobs with no issues! We're getting ready to push this out to our main Jenkins instance. I'll let you know how it goes. Thanks for the quick work on this @marvinthepa. |

| @@ -47,6 +47,10 @@ | |||

| <f:textbox default="10"/> | |||

| </f:entry> | |||

|

|

|||

| <f:entry title="${%Max number of current connections}" field="maxRequestsPerHostStr"> | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It would be nice to have a more detailed description of what this does on the configuration page. Or maybe changing the title to Max current connections to Kubernetes API would be sufficient.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Agreed.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I changed the wording a little bit (tried to keep it as short as possible, sacrificing grammar and elegance) and also pushed the corresponding help file which I forgot to git add 🤦♀️ 🤦♂️ , which contains a longer description. Okay like that?

|

I have good news: We've upgraded our main Jenkins master with a plugin built from this PR and all is looking well. Prior to this we would begin to experience the broken pipe issue with a single handful of Jobs. Since the upgrade we've been running approximately 15 Jobs at minimum and about 22 max -- all have either succeeded or failed for legitimate reasons. We're no longer experiencing the broken pipe issue. Thanks again for the quick fix! |

|

We too have our production Jenkins running this fix. Working great. |

|

That's excellent news. I am cutting a kubernetes-client 2.6.0 release right now, that allows you to config |

|

2.6.1 was released so we should align this pr accordingly. |

294ea6d

to

d4ec16e

Compare

|

Can you rebase, so that we can merge? |

|

FYI we've tested this with the 2.6.1 changes as well and all our issues seem fixed! Great job!! |

First of all, `Launcher.launch` is called not once, but again and again. I.e. overwriting shared members of the enclosing class that a different thread is still using (and only cleaning the ones that were written last). That is a bad idea, and caused the thread and connection leak. So, move them (`watch` and `proc`) to local variables, and close them elsewhere: * `proc.kill()` should be taken care of by the shell step (that needs to be verified) * `watch` was already cleaned up in `ContainerExecProc` anyway. Second of all, `waitQuietly` on the `started` latch was what caused the "pipe not connected" errors: In case of InterruptedException, it caused the launcher to just continue as if `started` had already been counted down (i.e. the websocket connection established), when in reality the thread just interrupted itself because of a timeout (probably from [`DurableTaskStep.check()`][1]). [1]: https://github.com/jenkinsci/workflow-durable-task-step-plugin/blob/workflow-durable-task-step-2.13/src/main/java/org/jenkinsci/plugins/workflow/steps/durable_task/DurableTaskStep.java#L303

Strongly inspired by [Docker Pipeline Plugin][1], but sending just a single command to the container to be faster. The command only uses linux standard tools and works on debian and alpine, so it should be sufficiently portable. Gets rid of one TODO :-). [1]: https://github.com/jenkinsci/docker-workflow-plugin/blob/45f0c04/src/main/java/org/jenkinsci/plugins/docker/workflow/WithContainerStep.java#L249,

This solves the actual problem causing JENKINS-40825 - OkHttp limiting itself to 5 requests per hosts, which is not sufficient when running 20 builds at once. Each build uses a websocket connection for the command it runs, and additionally spawns more connections to check if the command is still running.

if log level is not set to FINEST..

Although this *should* not happen, just free up the watches when the ContainerStep finishes for good measure.

we should be fine if we get deserialized - how are we supposed to clean up those procs in that case?

9fc0dea

to

a11d177

Compare

|

@iocanel: done, please review again. |

|

Unfortunately, with the latest Jenkins version, and the master branch version of this plugin, I am still seeing this issue occur: This stack trace was preceded by: This happened, even though the pod was clearly up and running (using |

|

@JeanMertz:

|

|

Hmm. Good find @marvinthepa. In fact, I used the new Jenkins integration in this repo to download the hpi from here: But perhaps something didn't go right there. I'll check and get back to you. |

|

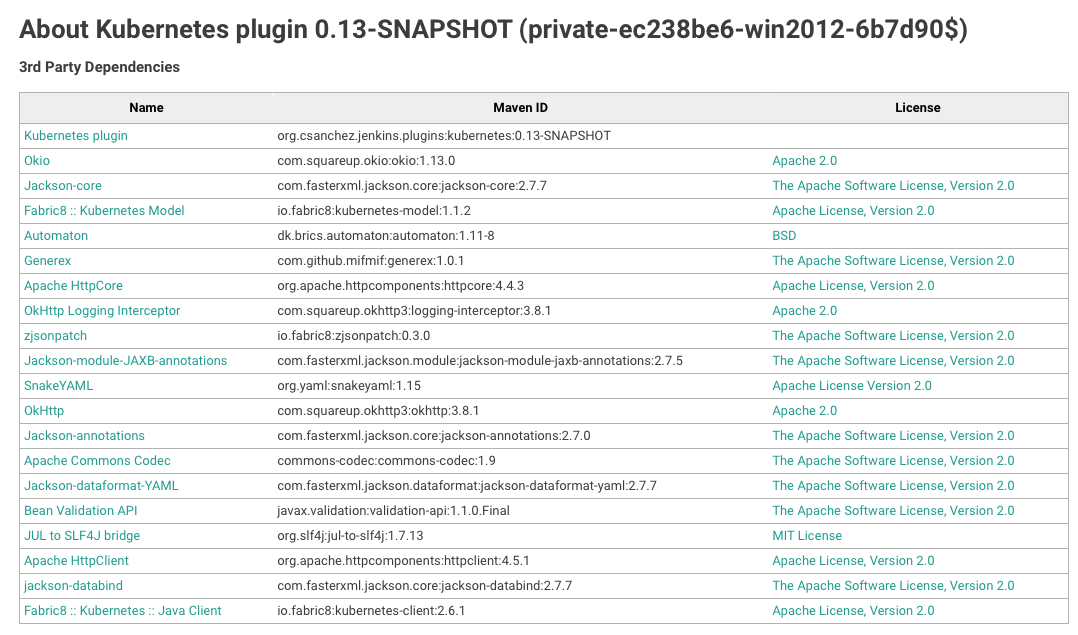

I just checked on our CI, but it does look like the HPI is correctly built from the latest master branch: (not quite sure what the |

|

@JeanMertz stupid question, but did you actually restart your Jenkins? |

|

UPDATE actually, scratch that. below results where from yesterday. I just ran 3 batches of 10 concurrent jobs, and none of them failed. Not sure what happened before, but I can't seem to reproduce it anymore. I'm not sure what caused this, but it seems to be working now 👍 However, starting 20 jobs, I do still get errors, not sure if they are related though: |

|

Hi, When do you expect to release a new version of the plugin? |

|

I have a reproducible cascade of "Pipe not connected" errors when many build jobs (~20) are triggered, or simply when Jenkins is "unthawed" from some time being down. With the 0.13-SNAPSHOT version I verify that the "Pipe not connected" problem is gone. |

|

I have to report that although the pipe not connected problem is gone, containers fail with "script returned exit code 2". It might be due to reasons unrelated to this though. |

|

@gm42 if it is If you can reproduce this with a minimal example, it might be worthwhile to investigate this further, as it might point to another problem. |

|

@marvinthepa oh yes, it is actually Unfortunately all the logs are gone as I have recently rebuilt the Jenkins deployment, I will not be able to reproduce this - sorry, we are moving away from the plugin for now. |

|

This is how the notifications of build failures look like (5 different projects, obviously some cascade effect): All failing very closely. |

|

Hi, I've managed to reproduce the script returned exit code -2 issue with a very simple example. `def a = [ parallel a def getPod(podNum, body) { I'm using as internal jnlp container, which is part of my organization so you will need to change it to another one. |

|

@i059304 I took the freedom to simplify your example a little bit: def a = (1..17).toList().collectEntries {

def label = "test$it"

[

"test$it": {

podTemplate(name: "pod_test_$it", label: label, containers: [

containerTemplate(name: 'devx',

image: 'ubuntu:16.04',

ttyEnabled: true,

command: 'cat',

)

]) {

node(label) {

container('devx') {

sh "echo hello world"

}

}

}

}

]

}

stage('a') {

parallel a

}

stage('b') {

parallel a

}

stage('c') {

parallel a

}However, this runs successfully for me. |

|

This does seem to fix the "pipe not connected" error. It was getting really bad so thanks! |

|

Any ideas when an official 0.13 release that will include this bug fix will be made available? |

|

@jamiecoleman92 Looks like it was released a few hours ago https://github.com/jenkinsci/kubernetes-plugin/releases/tag/kubernetes-1.0 |

|

@mikesplain Thanks for letting me know. I am currently testing the new plugin in our pipeline as I type! |

resource leak

First of all,

Launcher.launchis called not once, but again and again.I.e. overwriting shared members of the enclosing class that a different

thread is still using (and only cleaning the ones that were written last).

That is a bad idea, and caused the thread and connection leak.

So, move them (

watchandproc) to local variables, and close themelsewhere:

watchwas already cleaned up inContainerExecProcproc.kill()was not correctly executed anyway, and this is still broken. See discussion below.pipe not connected

Second of all,

waitQuietlyon thestartedlatch was what causedthe "pipe not connected" errors:

In case of InterruptedException, it caused the launcher to just continue

as if

startedhad already been counted down (i.e. the websocket connectionestablished), when in reality the thread just interrupted itself because of a timeout

(probably from

DurableTaskStep.check()).still TODO:

proc.killwhen step is interruptedIf the

shstep insidecontaineris interrupted,DefaultStepContextwill create a newLauncherandDurableTaskStepwill callkillon it - not callkillon existingprocsthat have finished (or not). If that one does not kill the process (whichDurableTaskStepchecks),ContainerStepExecution.stopwill also not be called.Keeping a single reference of

procaround and callingkillon it whenContainerStepExecution.stopis called (that is what the current master does) would not work anyway.This is because both

ContainerExecDecorator.decorateandLauncher.launchare called multiple times (at least to start the actual script, and then to run a number ofpsexecutions every 10 seconds to check on the process) - so we are very likely to call kill on the wrong instance.Even if we keep track of all the

procswe created - how will we know which is the right one?The "right" solution is one like in the Docker Pipeline Plugin:

I.e. open a new connection to the container, find the right process to kill (using the environment variable

JENKINS_SERVER_COOKIE=durable*, and kill it.I can implement that solution, but as it has little to do with JENKINS-40825, do you want it on a new pull request?

Sorry for writing such a "novel", I hope I can make myself clear.