
Merge pull request #150 from khanlab/markdown

Docs from RST to Markdown


Showing 19 changed files with 729 additions and 498 deletions.


@@ -0,0 +1,46 @@

[Documentation Status](https://hippunfold.readthedocs.io/en/latest/?badge=latest)
[CircleCI](https://circleci.com/gh/khanlab/hippunfold)

# Hippunfold

This tool aims to automatically model the topological folding structure
of the human hippocampus, and computationally unfold the hippocampus to
segment subfields and generate hippocampal and dentate gyrus surfaces.

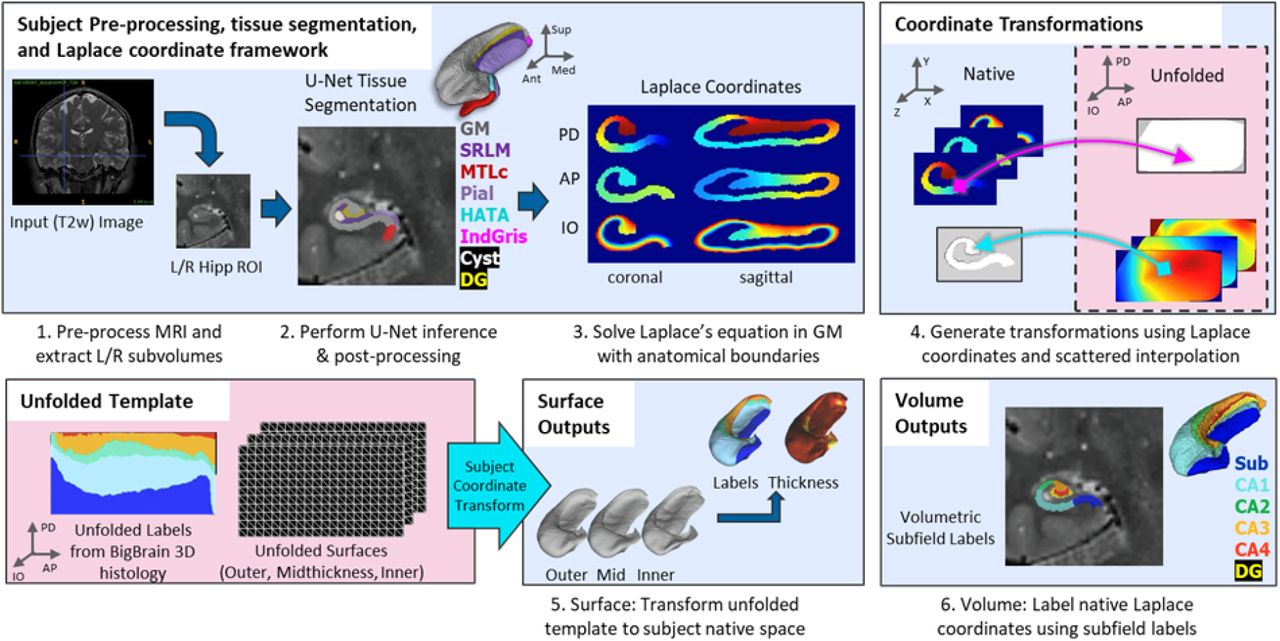

The overall workflow can be summarized in the following steps:

1. Pre-processing of MRI images, including non-uniformity correction,
   resampling to 0.3 mm isotropic subvolumes, and registration and
   cropping to coronal-oblique subvolumes around each hippocampus
2. Automatic segmentation of hippocampal tissues and surrounding
   structures with a deep convolutional neural network based on U-Net
   (nnU-Net), with models available for T1w, T2w, hippocampal b500 DWI,
   and neonatal T1w images, followed by post-processing with fluid
   label-label registration to a high-resolution average template
3. Imposing coordinates across the anterior-posterior and proximal-distal
   dimensions of hippocampal grey matter by solving Laplace's equation,
   and using an equivolume solution for laminar coordinates
4. Generating transformations between native and unfolded spaces using
   scattered interpolation on the hippocampus and dentate gyrus
   coordinates separately
5. Applying these transformations to generate surface meshes of the
   hippocampus and dentate gyrus, extracting morphological surface-based
   features (thickness, curvature, and gyrification index) sampled at the
   midthickness surface, and mapping subfield labels from the
   histological BigBrain atlas of the hippocampus
6. Generating high-resolution volumetric segmentations of the subfields
   using the same transformations and volumetric representations of the
   coordinates.

**Full Documentation:** [here](https://hippunfold.readthedocs.io)

**Relevant papers:**

- DeKraker J, Haast RAM, Yousif MD, Karat B, Köhler S, Khan AR. HippUnfold: Automated hippocampal unfolding, morphometry, and subfield segmentation. bioRxiv 2021.12.03.471134; doi: 10.1101/2021.12.03.471134 [link](https://www.biorxiv.org/content/10.1101/2021.12.03.471134v1)
- DeKraker J, Ferko KM, Lau JC, Köhler S, Khan AR. Unfolding the hippocampus: An intrinsic coordinate system for subfield segmentations and quantitative mapping. Neuroimage. 2018 Feb 15;167:408-418. doi: 10.1016/j.neuroimage.2017.11.054. Epub 2017 Nov 23. PMID: 29175494. [link](https://pubmed.ncbi.nlm.nih.gov/29175494/)
- DeKraker J, Lau JC, Ferko KM, Khan AR, Köhler S. Hippocampal subfields revealed through unfolding and unsupervised clustering of laminar and morphological features in 3D BigBrain. Neuroimage. 2020 Feb 1;206:116328. doi: 10.1016/j.neuroimage.2019.116328. Epub 2019 Nov 1. PMID: 31682982. [link](https://pubmed.ncbi.nlm.nih.gov/31682982/)
- DeKraker J, Köhler S, Khan AR. Surface-based hippocampal subfield segmentation. Trends Neurosci. 2021 Nov;44(11):856-863. doi: 10.1016/j.tins.2021.06.005. Epub 2021 Jul 22. PMID: 34304910. [link](https://pubmed.ncbi.nlm.nih.gov/34304910/)

This file was deleted.


@@ -0,0 +1,68 @@

# Contributing to Hippunfold

Hippunfold dependencies are managed with Poetry, which you'll need
installed on your machine. You can find instructions on the [Poetry
website](https://python-poetry.org/docs/master/#installation).

## Set up your development environment:

Clone the repository and install the dependencies and dev dependencies
with Poetry:

    git clone https://github.com/khanlab/hippunfold
    cd hippunfold
    poetry install
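
If you script this set-up, it can help to fail early when Poetry is missing. The sketch below wraps the three commands above in a hypothetical `setup_dev_env` function (the name is ours, not part of Hippunfold); it assumes `git` is on your `PATH`.

```shell
# Hypothetical helper (not part of Hippunfold): clone the repository and
# install dependencies with Poetry, stopping early if Poetry is not installed.
setup_dev_env() {
  if ! command -v poetry >/dev/null 2>&1; then
    echo "Poetry not found; see https://python-poetry.org/docs/master/#installation" >&2
    return 1
  fi
  # Same steps as above; note that this cd changes the caller's directory
  git clone https://github.com/khanlab/hippunfold &&
    cd hippunfold &&
    poetry install
}
```

Source the snippet in your shell, then run `setup_dev_env` from the directory where the clone should be created.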

Poetry will automatically create a virtualenv. To customize ... (TODO:
finish this part)

Then, you can run hippunfold with:

    poetry run hippunfold

Or you can activate a virtualenv shell and then run hippunfold directly:

    poetry shell
    hippunfold

You can exit the Poetry shell with `exit`.

## Running code format quality checking and fixing:

Hippunfold uses [poethepoet](https://github.com/nat-n/poethepoet) as a
task runner. You can see which commands are available by running:

    poetry run poe

We use `black` and `snakefmt` to keep the formatting and style of Python
code and Snakefiles consistent. There are two tasks you can use to check
and fix your code:

    poetry run poe quality_check
    poetry run poe quality_fix

Note that if you are in a Poetry shell, you do not need to prepend
`poetry run` to the command.
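
To run the check automatically, you could call the check task from a Git `pre-commit` hook. The sketch below is a hypothetical wrapper (not part of the repository) that runs `quality_check` and, if it fails, points to `quality_fix`; it assumes it is run from the repository root with Poetry installed.

```shell
# Hypothetical wrapper (not part of Hippunfold): run the formatting check
# and suggest the fix task if it fails.
check_formatting() {
  if poetry run poe quality_check; then
    echo "Formatting OK"
  else
    echo "Formatting issues found; run: poetry run poe quality_fix" >&2
    return 1
  fi
}
```

For example, an executable `.git/hooks/pre-commit` script could define this function and end with `check_formatting`, so that a failing check aborts the commit.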

## Dry-run testing your workflow:

Using Snakemake's dry-run option (`--dry-run`/`-n`) is an easy way to
verify that any changes to the workflow are working correctly. The
`test_data` folder contains a number of *fake* BIDS datasets (i.e.
datasets with zero-sized files) that are useful for verifying different
aspects of the workflow. These dry-run tests are part of the automated
GitHub Actions that run for every commit.

You can use the hippunfold CLI to perform a dry run of the workflow,
e.g. here also printing out every command:

    hippunfold test_data/bids_singleT2w test_out participant --modality T2w -np
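
To dry-run more than one of the fake datasets in a row, a small loop can help. In this sketch the function name and the `DATASET:MODALITY` argument format are our own; only `bids_singleT2w` with `--modality T2w` comes from the example above, so match any other pairs to the datasets actually present in `test_data`.

```shell
# Hypothetical helper (not part of Hippunfold): dry-run several test
# datasets in turn, stopping at the first failure.
# Each argument has the form DATASET:MODALITY, e.g. bids_singleT2w:T2w
dry_run_test_data() {
  local pair dataset modality
  for pair in "$@"; do
    dataset=${pair%%:*}   # text before the first ":"
    modality=${pair#*:}   # text after the first ":"
    echo "== $dataset ($modality)"
    hippunfold "test_data/$dataset" test_out participant \
      --modality "$modality" -np || return 1
  done
}
```

Run it from the repository root, e.g. `dry_run_test_data bids_singleT2w:T2w`.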

As a shortcut, you can also use `snakemake` instead of the hippunfold
CLI, since the `snakebids.yml` config file is set up by default to use
this same test dataset, as long as you run snakemake from the
`hippunfold` folder that contains the `workflow` folder:

    cd hippunfold
    snakemake -np


@@ -0,0 +1,167 @@

# Installation

BIDS App for Hippocampal AutoTop (automated hippocampal unfolding and
subfield segmentation)

## Requirements

- Docker (Mac/Windows/Linux) or Singularity (Linux)
- `pip install` is also available (Linux), but will still require
  Singularity to handle some dependencies
- For the T1w workflow: a BIDS dataset with T1w images.
  Higher-resolution data are preferred (≤ 0.8 mm), but the pipeline
  will still work with 1 mm T1w images.
- GPU not required

### Notes:

- T1w and/or T2w input images are supported, and we recommend using
  sub-millimetric isotropic data for best performance.
- Other 3D imaging modalities (e.g. ex-vivo MRI, 3D histology, etc.)
  can be used, but may require manual tissue segmentation.

## Running with Docker

Pull the container:

    docker pull khanlab/hippunfold:latest

Do a dry run, printing the command at each step:

    docker run -it --rm \
      -v PATH_TO_BIDS_DIR:/bids:ro \
      -v PATH_TO_OUTPUT_DIR:/output \
      khanlab/hippunfold:latest \
      /bids /output participant -np

Run it with the maximum number of cores:

    docker run -it --rm \
      -v PATH_TO_BIDS_DIR:/bids:ro \
      -v PATH_TO_OUTPUT_DIR:/output \
      khanlab/hippunfold:latest \
      /bids /output participant -p --cores all

For those not familiar with Docker, the first three lines of this
example are generic Docker arguments that run the container with the
safest options and give it permission to access your input and output
directories (specified here in capital letters). The fourth line
specifies the HippUnfold Docker container, and the fifth line contains
the required arguments for HippUnfold. You may want to familiarize
yourself with [Docker options](https://docs.docker.com/engine/reference/run/),
and an overview of HippUnfold arguments is provided in the [Command line
interface](https://hippunfold.readthedocs.io/en/latest/usage/app_cli.html)
documentation section.
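
Because the `-v` options map host directories into the container, it is safest to pass absolute host paths: a source such as `bids` without a leading `/` is treated as a named volume rather than a host directory. The following is a minimal sketch of a hypothetical wrapper, `hippunfold_docker` (our name, not part of HippUnfold), that resolves both paths, creates the output directory first so that Docker does not create it for you (as root), and forwards any remaining arguments to HippUnfold.

```shell
# Hypothetical wrapper (not part of HippUnfold): run the container with
# absolute host paths. Usage: hippunfold_docker BIDS_DIR OUTPUT_DIR [args...]
hippunfold_docker() {
  local bids_dir out_dir
  # Resolve the BIDS directory to an absolute path (fails if it is missing)
  bids_dir=$(CDPATH= cd -- "$1" && pwd) || return 1
  # Create the output directory as the current user, then resolve it
  mkdir -p "$2" || return 1
  out_dir=$(CDPATH= cd -- "$2" && pwd) || return 1
  shift 2
  docker run -it --rm \
    -v "$bids_dir":/bids:ro \
    -v "$out_dir":/output \
    khanlab/hippunfold:latest \
    /bids /output participant "$@"
}
```

With this helper, `hippunfold_docker PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR -np` corresponds to the dry run above.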

## Running with Singularity

If you are using Singularity, there are two different ways to run
`hippunfold`:

1. Pull the fully-contained BIDS app container from
   `docker://khanlab/hippunfold:latest` and run it
2. Clone the GitHub repo, `pip install`, and run `hippunfold`, which
   will pull the required Singularity containers as needed.

If you want to modify the workflow at all or run a development branch,
then option 2 is required.

Furthermore, if you want to run the workflow on a cloud system that
Snakemake supports, or with a cluster execution profile, you should use
option 2 (see the Snakemake documentation on [cluster
profiles](https://github.com/snakemake-profiles/doc) and [cloud
execution](https://snakemake.readthedocs.io/en/stable/executing/cloud.html)).

### Option 1:

Pull the container:

    singularity pull khanlab_hippunfold_latest.sif docker://khanlab/hippunfold:latest

Do a dry run, printing the command at each step:

    singularity run -e khanlab_hippunfold_latest.sif \
      PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR participant -np

Run it with the maximum number of cores:

    singularity run -e khanlab_hippunfold_latest.sif \
      PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR participant -p --cores all
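
In a script that runs repeatedly, you may want to pull the image only when the `.sif` file is not already present. This is a minimal sketch of a hypothetical wrapper; the `HIPPUNFOLD_SIF` variable is our own name for the image path, defaulting to the file name used above.

```shell
# Hypothetical wrapper (not part of HippUnfold): pull the image once, then
# reuse the local .sif file.
# Usage: hippunfold_singularity BIDS_DIR OUTPUT_DIR participant [args...]
HIPPUNFOLD_SIF=${HIPPUNFOLD_SIF:-khanlab_hippunfold_latest.sif}

hippunfold_singularity() {
  # Only pull when the image file is not already present
  if [ ! -f "$HIPPUNFOLD_SIF" ]; then
    singularity pull "$HIPPUNFOLD_SIF" docker://khanlab/hippunfold:latest || return 1
  fi
  # -e (--cleanenv), as in the commands above
  singularity run -e "$HIPPUNFOLD_SIF" "$@"
}
```

For example, `hippunfold_singularity PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR participant -np` pulls the image on the first call only, then performs the dry run.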

### Option 2:

This assumes you have created and activated a virtualenv or conda
environment first.

1. Install hippunfold using pip:

       pip install hippunfold

2. Run the following to download the U-Net models:

       hippunfold_download_models

3. hippunfold is now installed and can perform a dry run with:

       hippunfold PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR participant -np

4. To run on all cores, and have Snakemake pull any required
   containers, use:

       hippunfold PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR participant --cores all --use-singularity
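
Because Option 2 assumes an activated virtualenv or conda environment, a script can check for one before installing. This is a hedged sketch: it relies on the `VIRTUAL_ENV` variable exported by virtualenv/venv activation and the `CONDA_PREFIX` variable exported by `conda activate`, and both function names are hypothetical.

```shell
# Hypothetical helpers (not part of HippUnfold).
# venv/virtualenv activation exports VIRTUAL_ENV; conda activation exports CONDA_PREFIX.
in_python_env() {
  [ -n "${VIRTUAL_ENV:-}" ] || [ -n "${CONDA_PREFIX:-}" ]
}

# Steps 1 and 2 above, refusing to pip install into the system Python
install_hippunfold() {
  if ! in_python_env; then
    echo "Activate a virtualenv or conda environment first." >&2
    return 1
  fi
  pip install hippunfold && hippunfold_download_models
}
```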

## Additional instructions for Compute Canada

This section provides an example of how to set up a pip-installed copy
of HippUnfold on Compute Canada's `graham` cluster.

### Setting up a dev environment on graham:

Here are some instructions to get your Python environment set up on
graham to run HippUnfold:

1. Create a virtualenv and activate it:

       mkdir $SCRATCH/hippdev
       cd $SCRATCH/hippdev
       module load python/3
       virtualenv venv
       source venv/bin/activate

2. Follow the steps above to `pip install` from the GitHub repository.

### Running hippunfold jobs on graham:

Note that this requires
[neuroglia-helpers](https://github.com/khanlab/neuroglia-helpers) for
the `regularSubmit` and `regularInteractive` wrappers, and the
[cc-slurm](https://github.com/khanlab/cc-slurm) Snakemake profile for
cluster execution on graham with Slurm.

In an interactive job (for testing):

    regularInteractive -n 8
    hippunfold PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR participant \
      --participant_label 001 -j 8

Here, the last line specifies only one subject from a BIDS directory
that presumably contains many subjects.
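
If you want to test several subjects one at a time in the same interactive job, you could loop over the `sub-*` folders of the BIDS directory. This is a sketch with hypothetical function names; it assumes the standard BIDS layout of one `sub-<label>` folder per participant and simply reuses the `--participant_label` option shown above.

```shell
# Hypothetical helpers (not part of HippUnfold).
# Print the participant labels (without the "sub-" prefix) found in a BIDS dir.
list_participant_labels() {
  local d
  for d in "$1"/sub-*/; do
    [ -d "$d" ] || continue   # the glob matched nothing
    d=${d%/}                  # drop the trailing slash
    echo "${d##*/sub-}"       # keep only the text after "sub-"
  done
}

# Run hippunfold once per participant, passing any extra arguments through.
# Usage: run_each_participant BIDS_DIR OUTPUT_DIR [args...]
run_each_participant() {
  local bids_dir=$1 out_dir=$2 label
  shift 2
  for label in $(list_participant_labels "$bids_dir"); do
    hippunfold "$bids_dir" "$out_dir" participant \
      --participant_label "$label" "$@" || return 1
  done
}
```

Inside the `regularInteractive` session, this would be called as e.g. `run_each_participant PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR -j 8`.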

Submitting a batch job (for more cores and more subjects) still runs a
single job, but Snakemake will parallelize over the 32 cores:

    regularSubmit -j Fat \
      hippunfold PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR participant -j 32

Scaling up to around a hundred subjects (requires the cc-slurm Snakemake
profile to be installed) submits one 16-core job per subject:

    hippunfold PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR participant \
      --profile cc-slurm

Scaling up to even more subjects uses group components to bundle
multiple subjects into each job, submitting one 32-core job per N
subjects (e.g. 10):

    hippunfold PATH_TO_BIDS_DIR PATH_TO_OUTPUT_DIR participant \
      --profile cc-slurm --group-components subj=10
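
To estimate how many cluster jobs the last command submits, you can treat it as a ceiling division of the number of subjects by the group size. This sketch assumes each subject forms one connected component of the `subj` group, so that `--group-components subj=10` bundles ten subjects per job; the function name is hypothetical.

```shell
# Hypothetical helper (not part of HippUnfold): number of cluster jobs when
# N subjects are bundled SIZE at a time, i.e. ceil(N / SIZE).
num_group_jobs() {
  local n_subjects=$1 group_size=$2
  # Integer ceiling division: (N + SIZE - 1) / SIZE
  echo $(( (n_subjects + group_size - 1) / group_size ))
}
```

For example, `num_group_jobs 95 10` prints `10`: nine full jobs of ten subjects plus one job with the remaining five.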
