Tensorflow parser problem (Slim and tf.layers)

Jiahao Yao edited this page May 28, 2018 · 3 revisions

Model: Inception-resnet-v2 and inception-resnet-v1

Source: Tensorflow

Destination: IR

Author: Jiahao

When we converted the above two models from TensorFlow to IR, the conversion crashed on the flatten and batchnorm layers.

Our TensorFlow parser handles ops built with tf.layers. However, the ops in the above two models come from tensorflow.slim.

The difficulty with the slim module is that it puts many conditional operators into the graph.
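One way to see how many conditional operators a graph contains is to count the control-flow nodes in its graph definition. The sketch below assumes the conditionals show up as `Switch` and `Merge` ops, which is how TensorFlow 1.x lowers `tf.cond`. It works on any sequence of objects with `name`, `op`, and `input` fields, such as `graph_def.node` from a TensorFlow `GraphDef`. The `Node` tuple and the toy graph are made up for illustration and are not taken from the real models.

```python
from collections import Counter, namedtuple

# Stand-in for TensorFlow's NodeDef; real nodes expose the same three fields.
Node = namedtuple("Node", ["name", "op", "input"])

# Assumption: conditionals appear as Switch/Merge pairs (TF 1.x control flow).
CONTROL_FLOW_OPS = ("Switch", "Merge")


def count_control_flow_ops(nodes, op_types=CONTROL_FLOW_OPS):
    """Count the nodes whose op type is one of the given control-flow ops."""
    return Counter(n.op for n in nodes if n.op in op_types)


# Toy graph imitating a conditional wrapped around batch norm (names invented).
toy_nodes = [
    Node("is_training", "Placeholder", []),
    Node("bn/cond/Switch", "Switch", ["is_training", "is_training"]),
    Node("bn/cond/FusedBatchNorm", "FusedBatchNorm", ["bn/cond/Switch:1"]),
    Node("bn/cond/FusedBatchNorm_1", "FusedBatchNorm", ["bn/cond/Switch"]),
    Node("bn/cond/Merge", "Merge",
         ["bn/cond/FusedBatchNorm_1", "bn/cond/FusedBatchNorm"]),
]

counts = count_control_flow_ops(toy_nodes)
print(dict(counts))
```

With a real model, `count_control_flow_ops(graph_def.node)` should give a rough measure of how much control flow the parser has to see through.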

The difference is visible when the two graph definitions are viewed in TensorBoard.

tf.layers

tf.slim

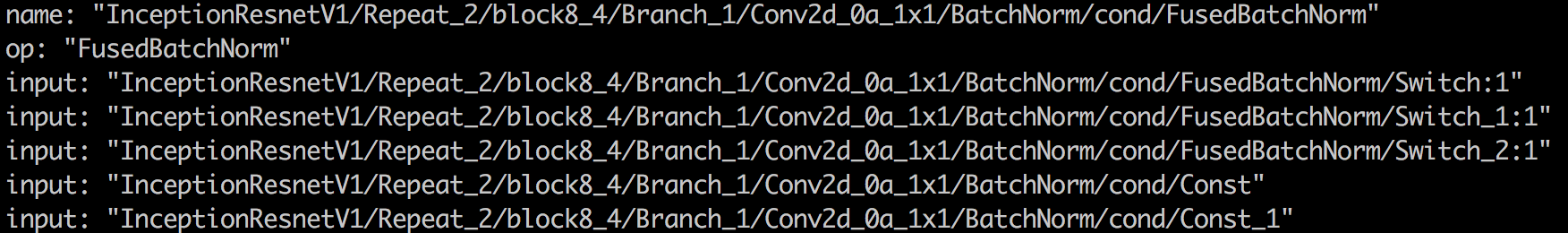

We can also print the inputs of the FusedBatchNorm operator in each case.

tf.layers

tf.slim
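A minimal sketch of how the inputs of each FusedBatchNorm node could be listed from a graph definition is shown here. It again assumes nodes with `name`, `op`, and `input` fields (as in `graph_def.node`), and it also matches the `FusedBatchNormV2` and `FusedBatchNormV3` op types in case a newer TensorFlow emits them. The helper names and the two toy nodes are hypothetical; the slim-style node only illustrates inputs arriving through `Switch` ops.

```python
from collections import namedtuple

# Stand-in for TensorFlow's NodeDef; real nodes expose the same three fields.
Node = namedtuple("Node", ["name", "op", "input"])

FUSED_BN_OPS = ("FusedBatchNorm", "FusedBatchNormV2", "FusedBatchNormV3")


def producer_name(tensor_name):
    """Drop the '^' control-dependency marker and the ':<index>' suffix."""
    return tensor_name.lstrip("^").split(":")[0]


def fused_batch_norm_inputs(nodes):
    """Map each fused batch norm node to (input name, producer op type) pairs."""
    by_name = {n.name: n for n in nodes}
    result = {}
    for n in nodes:
        if n.op not in FUSED_BN_OPS:
            continue
        pairs = []
        for inp in n.input:
            producer = by_name.get(producer_name(inp))
            # "?" marks an input whose producer is not in the node list.
            pairs.append((inp, producer.op if producer else "?"))
        result[n.name] = pairs
    return result


# Toy graph (names invented): one plain node, one fed through Switch ops.
toy_nodes = [
    Node("conv/Conv2D", "Conv2D", []),
    Node("bn/gamma/read", "Identity", []),
    Node("layers_bn/FusedBatchNorm", "FusedBatchNorm",
         ["conv/Conv2D", "bn/gamma/read"]),
    Node("bn/cond/Switch", "Switch", []),
    Node("bn/cond/Switch_1", "Switch", []),
    Node("bn/cond/FusedBatchNorm", "FusedBatchNorm",
         ["bn/cond/Switch:1", "bn/cond/Switch_1:1"]),
]

inputs = fused_batch_norm_inputs(toy_nodes)
for bn_name, pairs in inputs.items():
    print(bn_name, pairs)
```

When the producer ops are `Switch` rather than a convolution or a variable read, the parser has to trace through the conditional to find the real inputs.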

The flatten layer in slim is likewise built from many small ops.
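To check which small ops make up a flatten, one option is to start at its final `Reshape` node and walk back along the shape input. The sketch below assumes the flatten ends in a `Reshape` whose second input is the computed target shape, and it stops at `Shape` nodes so that the walk does not wander into the rest of the network. Which ops actually appear depends on the TensorFlow version; the toy graph and its node names are invented for illustration.

```python
from collections import namedtuple

# Stand-in for TensorFlow's NodeDef; real nodes expose the same three fields.
Node = namedtuple("Node", ["name", "op", "input"])


def producer_name(tensor_name):
    """Drop the '^' control-dependency marker and the ':<index>' suffix."""
    return tensor_name.lstrip("^").split(":")[0]


def shape_subgraph_ops(nodes, reshape_name):
    """Return the sorted op types that compute the shape input of a Reshape."""
    by_name = {n.name: n for n in nodes}
    reshape = by_name[reshape_name]
    # Reshape takes (tensor, shape); follow only the shape input.
    stack = [producer_name(reshape.input[1])]
    visited, ops = set(), set()
    while stack:
        name = stack.pop()
        if name in visited or name not in by_name:
            continue
        visited.add(name)
        node = by_name[name]
        ops.add(node.op)
        # Do not walk past Shape: its input is the data tensor itself.
        if node.op != "Shape":
            stack.extend(producer_name(i) for i in node.input)
    return sorted(ops)


# Toy flatten (names invented): Shape -> StridedSlice -> Pack -> Reshape.
toy_nodes = [
    Node("pool", "AvgPool", []),
    Node("flatten/Shape", "Shape", ["pool"]),
    Node("flatten/begin", "Const", []),
    Node("flatten/end", "Const", []),
    Node("flatten/strides", "Const", []),
    Node("flatten/strided_slice", "StridedSlice",
         ["flatten/Shape", "flatten/begin", "flatten/end", "flatten/strides"]),
    Node("flatten/minus_one", "Const", []),
    Node("flatten/shape", "Pack",
         ["flatten/strided_slice", "flatten/minus_one"]),
    Node("flatten/Reshape", "Reshape", ["pool", "flatten/shape"]),
]

flatten_ops = shape_subgraph_ops(toy_nodes, "flatten/Reshape")
print(flatten_ops)
```

A parser that wants to emit a single flatten layer in IR would need to recognise such a subgraph as one unit rather than convert each op separately.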

To conclude, the many small and conditional ops that slim generates make the conversion more difficult.